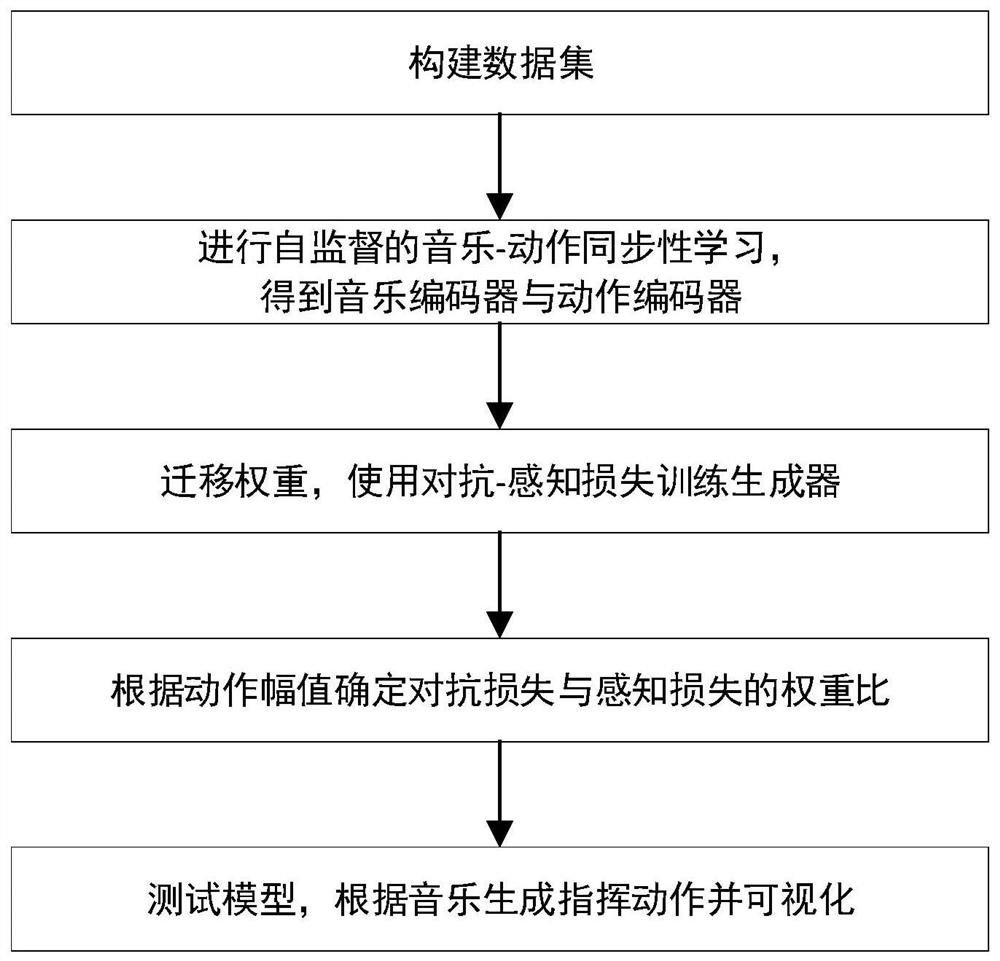

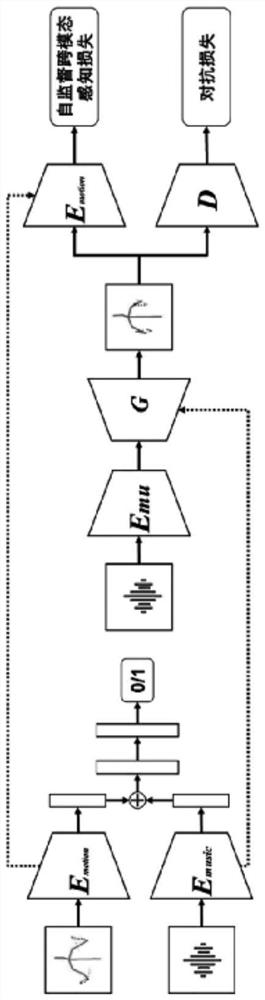

Band command action generation method based on self-supervised cross-modal perception loss

A cross-modal action-generation technology, applied in neural network learning methods, biological neural network models, speech analysis, etc. It addresses problems such as unnatural image details and accelerates convergence.


Embodiment Construction

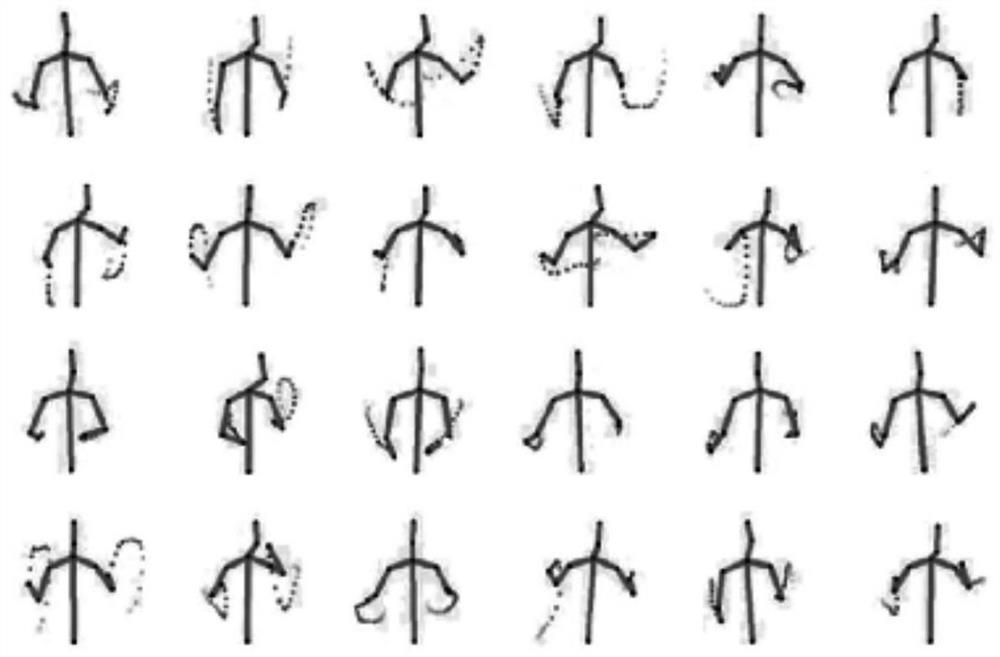

[0046] Embodiments of the invention are described in detail below, and examples of them are illustrated in the accompanying drawings. The embodiments described below with reference to the figures are exemplary, intended only to explain the present invention, and should not be construed as limiting it.

[0047] In recent years, many researchers have recognized the great value of the multimodal data widely available on the Internet and have proposed many cross-modal self-supervised learning methods. Unlike single-modal self-supervised learning, cross-modal self-supervised learning lets the feature representations of the two modalities guide each other's learning, which allows richer information to be mined from the data. Perceptual loss was proposed by Johnson et al. in 2016 as a loss function for generation tasks. Unlike the traditional Euclidean distance or loss measured in the sample space, perceptual loss measures the distance between the generated sample and the real sample ...
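To make the contrast between sample-space and feature-space distances concrete, the sketch below compares a plain mean squared (Euclidean) loss on raw values with a perceptual-style feature reconstruction loss, (1/N)·‖φ(ŷ) − φ(y)‖², where φ is a frozen feature extractor and N is the number of features. In Johnson et al., φ is a pretrained VGG-16 network; here, as a loudly stated assumption, `make_feature_extractor` is a hypothetical stand-in (a frozen random linear layer followed by ReLU) so that the example runs without any model weights. This is not the cross-modal perception loss claimed in this patent, only an illustration of the general perceptual-loss idea.

```python
import math
import random


def make_feature_extractor(in_dim, feat_dim, seed=0):
    """Hypothetical frozen feature extractor phi (stand-in for a pretrained loss network).

    Builds a fixed random linear layer followed by ReLU; its weights are never updated.
    """
    rng = random.Random(seed)
    weights = [
        [rng.gauss(0.0, 1.0 / math.sqrt(in_dim)) for _ in range(in_dim)]
        for _ in range(feat_dim)
    ]

    def phi(x):
        # Linear projection of the input, then ReLU on each feature.
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

    return phi


def pixel_loss(generated, real):
    """Mean squared Euclidean distance measured directly in the sample space."""
    return sum((g - r) ** 2 for g, r in zip(generated, real)) / len(real)


def perceptual_loss(generated, real, phi):
    """Feature reconstruction loss: mean squared distance between phi(generated) and phi(real)."""
    fg, fr = phi(generated), phi(real)
    return sum((a - b) ** 2 for a, b in zip(fg, fr)) / len(fr)


if __name__ == "__main__":
    phi = make_feature_extractor(in_dim=8, feat_dim=16)
    real = [0.1 * i for i in range(8)]
    generated = [v + 0.05 for v in real]  # a slightly shifted "generated" sample
    print("pixel loss:", pixel_loss(generated, real))
    print("perceptual loss:", perceptual_loss(generated, real, phi))
```

Both losses are zero when the generated sample equals the real one; they differ in where the distance is measured. Because the perceptual loss compares features rather than raw values, two samples can be close in the sample space yet far apart in feature space (or vice versa), depending on what φ has learned to encode.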

