Method for correcting pronunciation based on machine vision

This technology, based on machine vision and standard pronunciation, is applied in fields such as instruments, speech analysis, and speech recognition. It addresses problems such as the inability to accurately recognize and correct easily confused pronunciation.



Examples

Embodiment 1

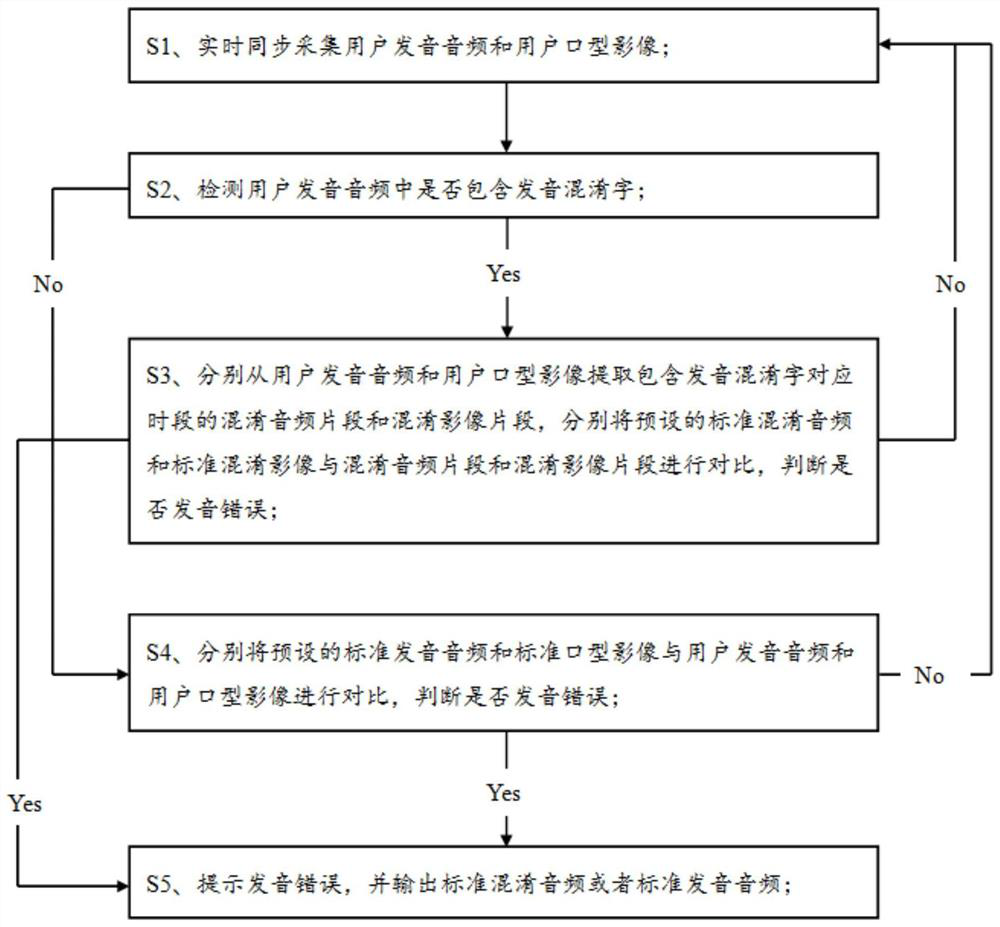

[0032] This embodiment is basically as shown in Figure 1 and includes:

[0033] S1. Collect the user's pronunciation audio and the user's mouth-shape images synchronously in real time;

[0034] S2. Detect whether the user's pronunciation audio contains a pronunciation-confusion word: if yes, proceed to S3; if not, proceed to S4;

[0035] S3. Extract the confused audio segment and the confused video segment containing the pronunciation-confusion word from the user's pronunciation audio and the user's mouth-shape images, respectively, and compare them with the preset standard confused audio and standard confused video, respectively, to judge whether the pronunciation is incorrect: if yes, proceed to S5; if not, return to S1;

[0036] S4. Compare the user's pronunciation audio and the user's mouth-shape images with the preset standard pronunciation audio and standard mouth-shape images, respectively, and judge whether the pronunciation is incorrect: if...
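The branching in S2 and S3 can be illustrated with a minimal Python sketch. It only models the transitions that are stated in the text above; the outcome of S4 is truncated in this excerpt and is therefore deliberately not modeled. The names `Step`, `after_s2`, and `after_s3` are hypothetical and do not come from the patent.

```python
from enum import Enum


class Step(Enum):
    """Step labels used in Embodiment 1 (descriptions paraphrase the text)."""
    S1 = "collect audio and mouth-shape images"
    S3 = "compare confused segments with the standard confused audio/video"
    S4 = "compare full audio/images with the standard pronunciation"
    S5 = "step reached when S3 judges the pronunciation incorrect"


def after_s2(contains_confusion_word: bool) -> Step:
    """S2: go to S3 if a pronunciation-confusion word was detected, else S4."""
    return Step.S3 if contains_confusion_word else Step.S4


def after_s3(pronunciation_incorrect: bool) -> Step:
    """S3: go to S5 if the pronunciation is judged incorrect, else back to S1."""
    return Step.S5 if pronunciation_incorrect else Step.S1
```

How the confusion word is detected and how the comparison produces its yes/no result are not specified in this excerpt, so both are represented here only as boolean inputs.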

Embodiment 2

[0048] The only difference from Embodiment 1 is that, in S3, the user's pronunciation audio and the user's mouth-shape images are denoised before the segments are extracted from them. Denoising, for example with Gaussian filtering, can improve the quality of the user's pronunciation audio and mouth-shape images and helps ensure the accuracy and precision of the extraction process.
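As an illustration of the Gaussian filtering mentioned above, the following pure-Python sketch smooths a 1-D audio signal and a 2-D grayscale image with a normalized Gaussian kernel. The function names, the `sigma` and `radius` parameters, and the edge-clamping border rule are assumptions made for this example; the patent does not specify filter parameters or an implementation.

```python
import math


def gaussian_kernel(sigma: float, radius: int) -> list[float]:
    """Return a normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_1d(samples: list[float], kernel: list[float]) -> list[float]:
    """Filter samples with the kernel, repeating edge values at the borders."""
    radius = len(kernel) // 2
    last = len(samples) - 1
    out = []
    for i in range(len(samples)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)  # clamp index into range
            acc += w * samples[j]
        out.append(acc)
    return out


def smooth_2d(image: list[list[float]], kernel: list[float]) -> list[list[float]]:
    """Separable Gaussian blur: filter every row, then every column."""
    rows = [smooth_1d(row, kernel) for row in image]
    cols = [smooth_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

In this sketch, `smooth_1d` would be applied to the audio samples and `smooth_2d` to each mouth-shape frame before the extraction in S3. A production system would more likely rely on an optimized signal or image processing library.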

Embodiment 3

[0050] The only difference from Embodiment 2 is that S3 also detects whether the user's pronunciation audio includes a pronunciation-confusion dialect expression: if so, the confused audio segment and the confused image segment covering the period in which that dialect expression occurs are extracted from the user's pronunciation audio and the user's mouth-shape images, respectively, and are compared with the preset standard confused audio and standard confused video corresponding to that dialect expression, respectively, to determine whether the pronunciation is incorrect: if yes, go to S5; if not, return to S1. This embodiment considers the following situation: Chinese culture is extensive and profound, and many regions have their own local dialects, the Sichuan dialect in particular. Many dialect expressions have corresponding Mandarin pronunciations, but the meanings of the two are completely different. For example, in ...
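Extracting the audio segment and the image segment for the same period, as S3 requires, could be sketched as follows. The sketch assumes the audio is a sequence of samples at a known sample rate and the mouth-shape images are a sequence of frames at a known frame rate, both starting at the same instant. The function name `extract_segment` and all of its parameters are hypothetical.

```python
import math


def extract_segment(audio, sample_rate, frames, fps, start_s, end_s):
    """Return the audio samples and image frames covering [start_s, end_s).

    audio and frames are assumed to be synchronized sequences that begin at
    time zero; start_s and end_s are given in seconds.
    """
    if not 0 <= start_s <= end_s:
        raise ValueError("expected 0 <= start_s <= end_s")
    a0 = int(start_s * sample_rate)
    a1 = int(end_s * sample_rate)
    f0 = int(start_s * fps)        # first frame overlapping the period
    f1 = math.ceil(end_s * fps)    # one past the last overlapping frame
    return audio[a0:a1], frames[f0:f1]
```

For example, with 8000 Hz audio and 25 fps video, the period from 0.5 s to 1.0 s maps to samples 4000 to 7999 and frames 12 to 24. How the period of the confused expression is located in the first place is not described in this excerpt.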

