Voice adaptive completion system based on multi-modal knowledge graph

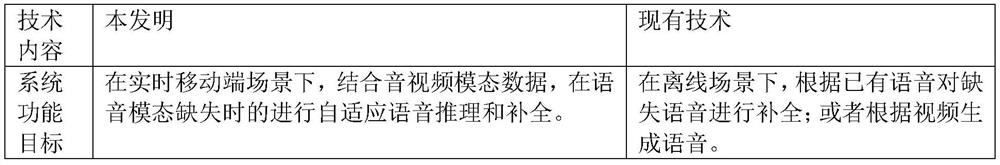

The invention applies knowledge graph and multimodal techniques to speech adaptive completion. It addresses problems such as cognitive limitation, low interpretability, and out-of-order or lost packets, and achieves high accuracy, high interpretability, and semantically appropriate completion.


Embodiment Construction

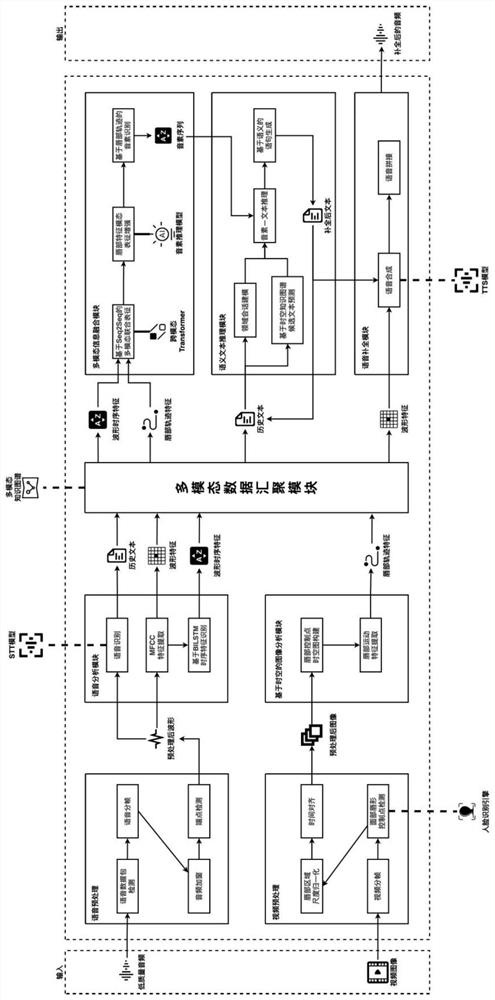

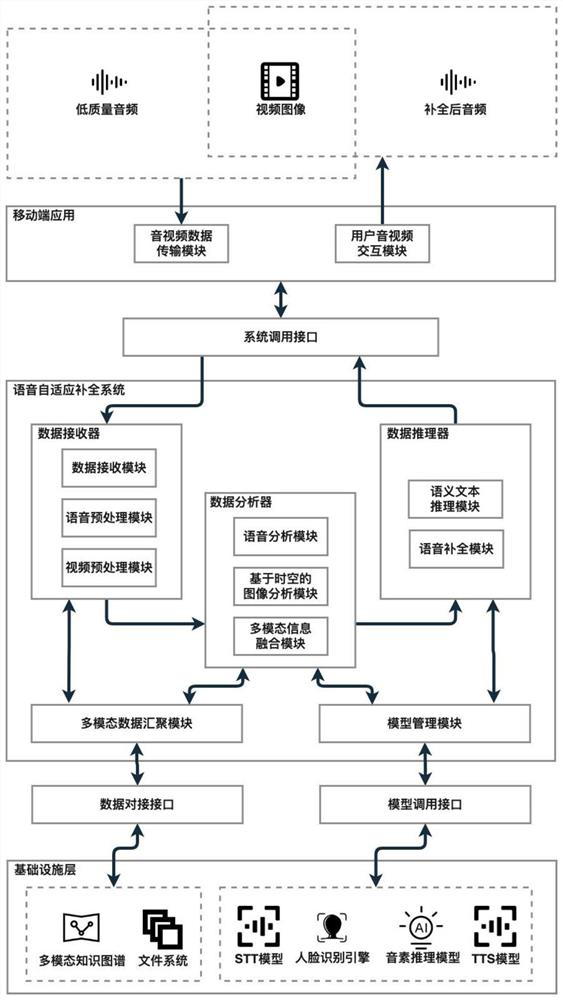

[0040] As shown in Figure 1, this embodiment relates to a speech adaptive completion system based on a multimodal knowledge graph, comprising: a speech preprocessing module, a speech analysis module, a video preprocessing module, a spatiotemporal image analysis module, a multimodal data aggregation module, a multimodal information fusion module, a semantic text reasoning module, and a speech completion module. The speech preprocessing module collects and preprocesses speech packets at the receiving end: taking low-quality real-time audio with packet loss as input, it performs preliminary processing of the speech modal data through voice data packet detection, voice framing, audio windowing, and endpoint detection, then outputs the preprocessed waveform to the speech analysis module. The video preprocessing module collects and preprocesses video packets at the receiving end: taking continuous video images as input, it performs preliminary processing on the video modal...
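The speech preprocessing steps named above (packet detection, framing, windowing, endpoint detection) can be illustrated with a minimal sketch. The text does not specify frame length, hop size, window type, or the endpoint detection criterion, so the Hamming window, the mean-energy threshold, and all function names below are assumptions chosen for illustration, not the patented method.

```python
import math
from typing import List, Optional, Tuple


def find_missing_packets(seq_numbers: List[int]) -> List[int]:
    """Packet detection (assumed scheme): list sequence numbers that are
    absent between the lowest and highest number received."""
    if not seq_numbers:
        return []
    received = set(seq_numbers)
    return [n for n in range(min(received), max(received) + 1)
            if n not in received]


def frame_signal(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Framing: split samples into overlapping frames of frame_len,
    advancing by hop; a trailing partial frame is dropped."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def hamming_window(frame: List[float]) -> List[float]:
    """Windowing: apply a Hamming window (an assumed choice of window)."""
    n = len(frame)
    if n < 2:
        return list(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
            for i, x in enumerate(frame)]


def detect_endpoints(frames: List[List[float]],
                     threshold: float) -> Optional[Tuple[int, int]]:
    """Endpoint detection (assumed energy criterion): return the indices of
    the first and last frames whose mean energy exceeds threshold,
    or None if no frame does."""
    active = [i for i, f in enumerate(frames)
              if f and sum(x * x for x in f) / len(f) > threshold]
    return (active[0], active[-1]) if active else None


def preprocess(samples: List[float], frame_len: int = 20, hop: int = 10,
               threshold: float = 1e-3) -> Optional[Tuple[int, int]]:
    """Chain framing, windowing, and endpoint detection.
    Default parameter values are illustrative only."""
    frames = [hamming_window(f) for f in frame_signal(samples, frame_len, hop)]
    return detect_endpoints(frames, threshold)
```

In a receiver following this outline, `find_missing_packets` would flag gaps in the packet stream before decoding, and `preprocess` would locate the speech-bearing region of the decoded samples that is passed on to the speech analysis module. A production system would more likely use a trained voice activity detector than a fixed energy threshold.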

