Neural network accelerator model conversion method and device

This technology, which concerns neural network models, is applied to model conversion methods and devices for neural network accelerators. It addresses problems such as cumbersome hardware, significant limitations, and hindered deployment efficiency of neural network models, and achieves the effect of reducing inference time and number

Embodiment Construction

[0029] Specific embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings. It should be understood that the specific embodiments described here are only used to illustrate and explain the present invention, and are not intended to limit the present invention.

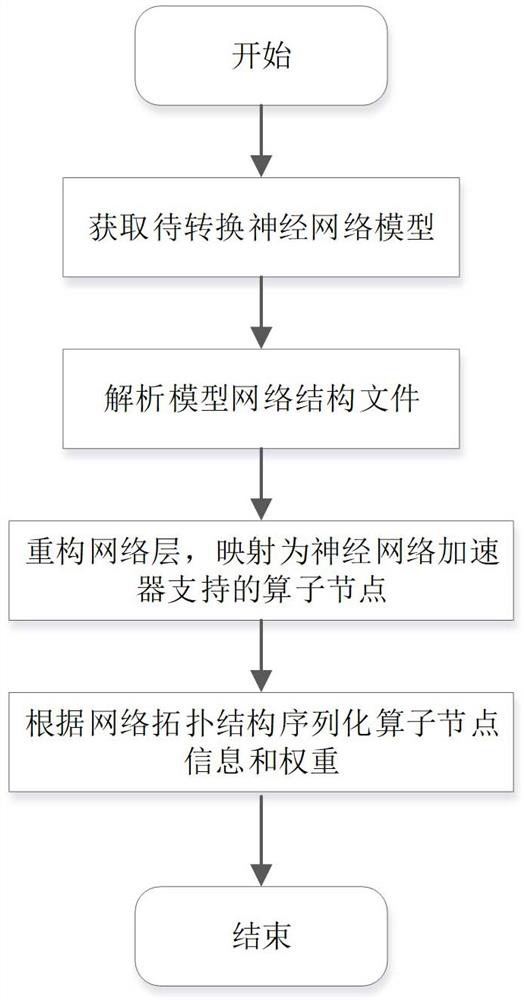

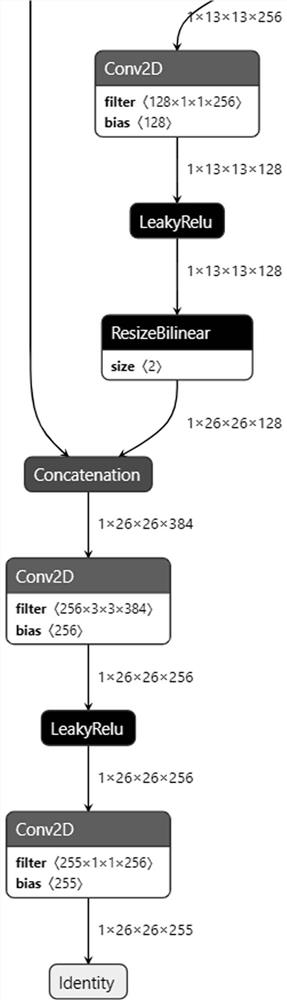

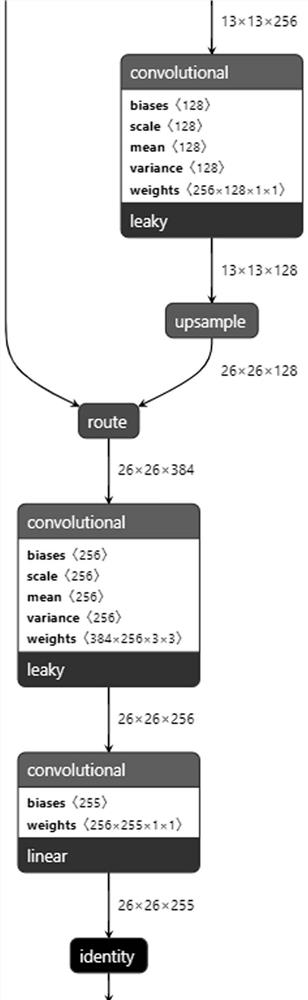

[0030] The present invention adds an agent between the machine learning framework and the neural network accelerator. On the machine learning framework side, software reads the network layer information from the files saved by several mainstream machine learning frameworks. On the neural network accelerator side, the network layer information that has been read is saved in a data format, and with supported operator types, that the neural network accelerator can conveniently read. In this way, the neural network model parameter files in different formats are converted into file formats suitable for neural network accelerators (including dat...
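The agent idea in paragraph [0030] can be illustrated with a minimal Python sketch. It is not the patented implementation: the framework names (`framework_a`, `framework_b`), the operator alias table, the `LayerInfo` record, and the JSON output format are all hypothetical, and the `raw_layers` lists stand in for whatever a real parser would extract from a framework's saved model file.

```python
import json
from dataclasses import dataclass, asdict
from typing import Dict, List

# Hypothetical set of operator types the accelerator supports.
SUPPORTED_OPS = {"conv2d", "relu", "fully_connected"}

# Hypothetical mapping from each framework's operator names
# to the accelerator's operator names.
OP_ALIASES: Dict[str, Dict[str, str]] = {
    "framework_a": {"Conv2D": "conv2d", "Relu": "relu",
                    "Dense": "fully_connected"},
    "framework_b": {"Convolution": "conv2d", "ReLU": "relu",
                    "InnerProduct": "fully_connected"},
}


@dataclass
class LayerInfo:
    """Framework-independent description of one network layer."""
    name: str
    op: str
    params: dict


def read_layers(framework: str, raw_layers: List[dict]) -> List[LayerInfo]:
    """Translate framework-specific layer records into LayerInfo records.

    Raises ValueError when a layer uses an operator the accelerator
    does not support.
    """
    aliases = OP_ALIASES[framework]
    layers = []
    for raw in raw_layers:
        op = aliases.get(raw["type"])
        if op is None or op not in SUPPORTED_OPS:
            raise ValueError(f"unsupported operator: {raw['type']}")
        layers.append(LayerInfo(raw["name"], op, raw.get("params", {})))
    return layers


def save_for_accelerator(layers: List[LayerInfo]) -> str:
    """Serialize layers into one unified format (JSON in this sketch)."""
    return json.dumps({"layers": [asdict(layer) for layer in layers]},
                      sort_keys=True)


# Two equivalent models described in two different framework vocabularies.
model_a = [{"name": "c1", "type": "Conv2D", "params": {"kernel": 3}},
           {"name": "r1", "type": "Relu"}]
model_b = [{"name": "c1", "type": "Convolution", "params": {"kernel": 3}},
           {"name": "r1", "type": "ReLU"}]

out_a = save_for_accelerator(read_layers("framework_a", model_a))
out_b = save_for_accelerator(read_layers("framework_b", model_b))
```

In this sketch both inputs reduce to the same accelerator-side description, which is the point of placing a single agent between the frameworks and the accelerator: the accelerator toolchain only has to understand one format and one operator vocabulary.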
