Target detection method and system for enhancing the degree of distinction between foreground and background

A target detection technology in the fields of target detection and deep learning. It addresses missed detections (false negatives) and the insufficient distinction between target pixels and non-target pixels, that is, between foreground information and non-target background information. Its effects are increasing the proportion of target features, enhancing foreground-background discrimination, and enhancing overall feature expression ability.

Examples

Embodiment 1

[0056] As shown in Figure 1, this embodiment provides an object detection method that enhances the discrimination between the foreground and the background. The method involves a terminal, a server, and the system, and is implemented through interaction between the terminal and the server. The server may be an independent physical server, a server cluster or distributed system composed of multiple physical servers, or a cloud server providing basic cloud computing services such as cloud services, cloud databases, cloud computing, cloud functions, cloud storage, network services, cloud communication, middleware services, domain name services, security services, CDN, and big data and artificial intelligence platforms. The terminal may be, but is not limited to, a smartphone, tablet computer, laptop computer, desktop computer, smart speaker, or smart watch. The terminal and the server may be connected directly or indirectly through wired or wireless communic...

Embodiment 2

[0126] This embodiment provides an object detection system that enhances the discrimination between the foreground and the background.

[0127] A target detection system for enhancing foreground and background discrimination, comprising:

[0128] A mark map generation module, which is configured to: generate a mark map with the same size as the original image according to the label information of the target in the original image;
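A minimal sketch of the mark map generation step, assuming the label information is a list of axis-aligned bounding boxes `(x_min, y_min, x_max, y_max)` with exclusive upper bounds, and that the mark map is binary (1 for target pixels, 0 for background). The source does not state the label format or the values stored in the mark map; the function name `make_mark_map` is hypothetical.

```python
from typing import List, Tuple

# Assumed label format (not given in the source): (x_min, y_min, x_max, y_max)
# in pixel coordinates, with x_max and y_max exclusive.
Box = Tuple[int, int, int, int]


def make_mark_map(height: int, width: int, boxes: List[Box]) -> List[List[int]]:
    """Return a height x width binary mark map, the same size as the original
    image: 1 inside any labeled target box, 0 elsewhere (assumed encoding)."""
    mark = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        # Clip each box to the image so labels past the border do not raise IndexError.
        x0, y0 = max(0, x0), max(0, y0)
        x1, y1 = min(width, x1), min(height, y1)
        for y in range(y0, y1):
            for x in range(x0, x1):
                mark[y][x] = 1
    return mark
```

For example, `make_mark_map(4, 6, [(1, 1, 3, 3), (4, 0, 6, 2)])` returns a 4x6 grid whose only nonzero entries cover the two box regions. Overlapping boxes simply both write 1, so the map marks the union of all targets.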

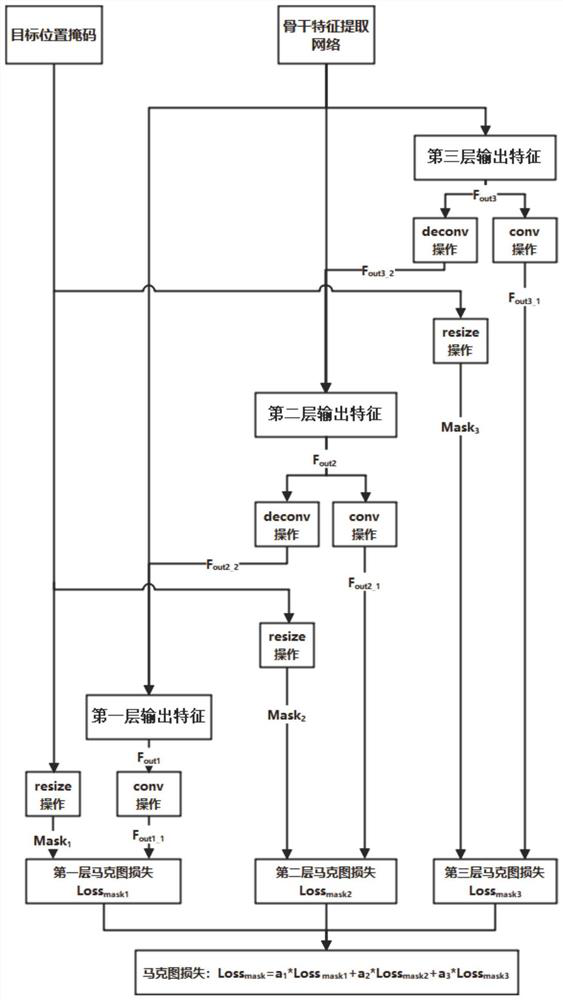

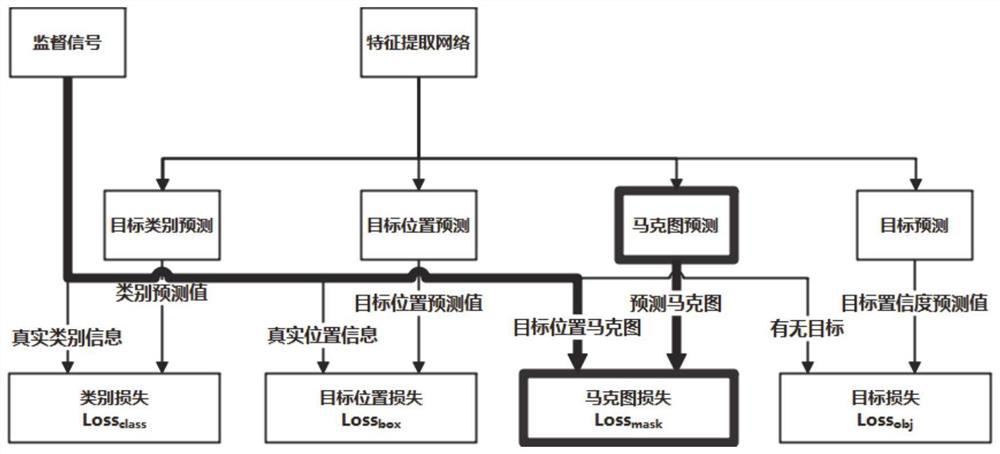

[0129] A feature output module, which is configured to: input the original image into feature networks of different scales to obtain the feature output corresponding to each of these feature networks;

[0130] An image adjustment module, which is configured to: take the mark map as input and resize it to obtain mark maps at different scales;
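One way to obtain mark maps at different scales is sketched below, assuming the feature networks downsample the input by strides of 8, 16, and 32 (a common feature-pyramid configuration, not stated in the source). Max pooling is used instead of plain nearest-neighbor sampling so that a small target still marks at least one cell after downsampling; this choice, the default strides, and the names `downsample_max` and `multiscale_mark_maps` are assumptions.

```python
from typing import Dict, List, Sequence

MarkMap = List[List[int]]


def downsample_max(mark: MarkMap, stride: int) -> MarkMap:
    """Shrink a binary mark map by `stride` using max pooling: an output cell is
    foreground (1) if any pixel in its stride x stride window is foreground."""
    h, w = len(mark), len(mark[0])
    out_h = -(-h // stride)  # ceiling division keeps partial border windows
    out_w = -(-w // stride)
    out = [[0] * out_w for _ in range(out_h)]
    for y in range(h):
        for x in range(w):
            if mark[y][x]:
                out[y // stride][x // stride] = 1
    return out


def multiscale_mark_maps(mark: MarkMap,
                         strides: Sequence[int] = (8, 16, 32)) -> Dict[int, MarkMap]:
    """Return one resized mark map per assumed feature-network stride."""
    return {s: downsample_max(mark, s) for s in strides}
```

Ceiling division keeps a partial border window, so a 5x5 map downsampled with stride 2 yields a 3x3 map instead of dropping the last row and column.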

[0131] A mark map loss calculation module, which is configured to: calculate the mark map loss at different scales according to the feature output corresponding to the feature network of differ...
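The truncated text does not say how the mark map loss is computed. As an illustration only, the sketch below assumes that each scale's feature output has already been reduced (for example by a hypothetical one-channel prediction head followed by a sigmoid) to a foreground-probability map the same size as that scale's mark map, and uses mean binary cross-entropy as the per-scale loss, summed over scales. Binary cross-entropy is a swapped-in choice, and `bce_map_loss` and `multiscale_mark_loss` are hypothetical names.

```python
import math
from typing import Dict, List

Grid = List[List[float]]


def bce_map_loss(prob: Grid, mark: List[List[int]], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between an assumed foreground-probability map
    and a same-sized binary mark map."""
    if len(prob) != len(mark) or any(len(p) != len(m) for p, m in zip(prob, mark)):
        raise ValueError("prediction and mark map must have the same shape")
    total, n = 0.0, 0
    for p_row, m_row in zip(prob, mark):
        for p, m in zip(p_row, m_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(m * math.log(p) + (1 - m) * math.log(1.0 - p))
            n += 1
    return total / n


def multiscale_mark_loss(probs: Dict[int, Grid],
                         marks: Dict[int, List[List[int]]]) -> float:
    """Sum the per-scale mark map losses; keys are assumed feature strides."""
    return sum(bce_map_loss(probs[s], marks[s]) for s in marks)
```

Summing rather than averaging over scales is also an assumption; a weighted sum would let coarser or finer scales contribute differently to the total.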

Embodiment 3

[0136] This embodiment provides a computer-readable storage medium on which a computer program is stored. When the program is executed by a processor, the steps in the object detection method for enhancing foreground-background discrimination as described in the first embodiment above are implemented.
