Data transmission method and device for computing cluster and storage medium

The data transmission method and computing cluster technology are applied in computing, transmission systems, memory systems, and similar fields. They address problems such as poor performance, the inability to handle communication between heterogeneous hardware, and inflexible cross-node data access, with the effect of improving data transmission efficiency.

Detailed Description of Embodiments

[0056] Specific embodiments of the present disclosure will be described in detail below in conjunction with the accompanying drawings. It should be understood that the specific embodiments described here are only used to illustrate and explain the present disclosure, and are not intended to limit the present disclosure.

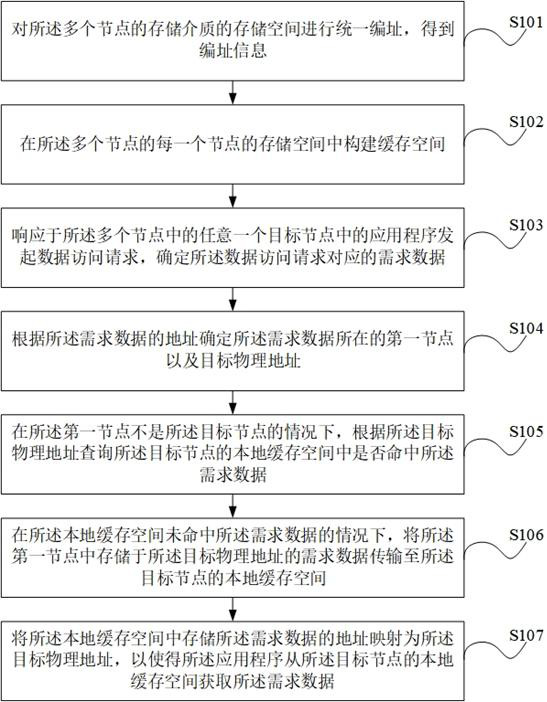

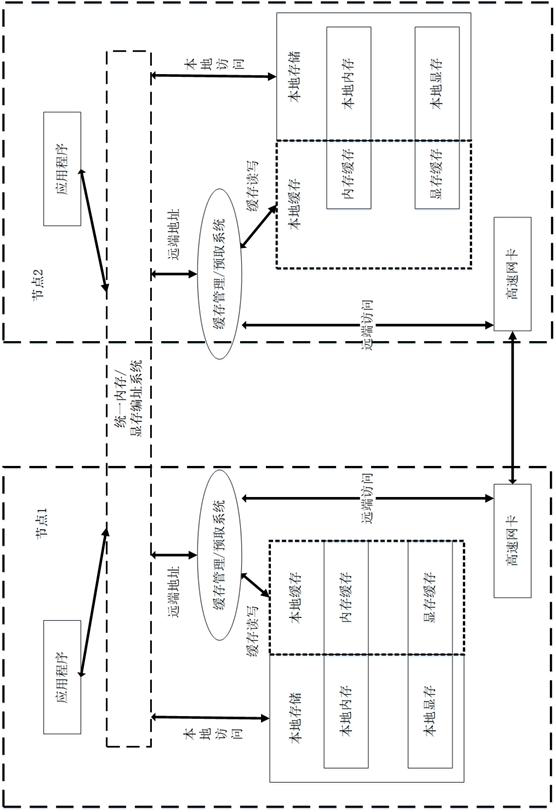

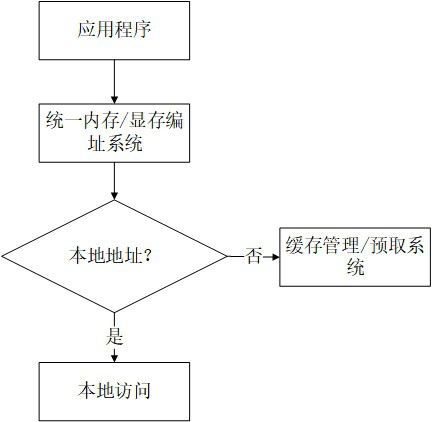

[0057] Figure 1 shows a data transmission method for a computing cluster according to an exemplary embodiment. The method is applied to a computing cluster that includes multiple nodes, and the computing cluster can serve as a server. The method includes:

[0058] S101. Perform unified addressing on the storage spaces of the storage media of the multiple nodes to obtain addressing information, where each address in the addressing information is mapped to a physical address in the storage space of a storage medium of any one of the multiple nodes.
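
The unified addressing in S101 can be illustrated with a minimal sketch. It is not the patented implementation: it assumes each node contributes contiguous storage regions, and it lays those regions end to end in a single cluster-wide address space. The names `Region`, `UnifiedAddressMap`, and `resolve`, along with the field names and the `"memory"` medium label, are hypothetical and chosen only for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """One contiguous block of storage on one node (all fields are hypothetical)."""
    node_id: int    # which node owns the storage medium
    medium: str     # illustrative label for the storage medium
    phys_base: int  # first physical address of the block on that medium
    size: int       # block length in bytes


class UnifiedAddressMap:
    """Assigns each region a contiguous range in one cluster-wide address space."""

    def __init__(self, regions):
        self._regions = list(regions)
        self._starts = []  # unified start address of each region, ascending
        next_addr = 0
        for region in self._regions:
            self._starts.append(next_addr)
            next_addr += region.size
        self.total_size = next_addr

    def resolve(self, unified_addr):
        """Return (node_id, medium, physical_address) for a unified address."""
        if not 0 <= unified_addr < self.total_size:
            raise ValueError(f"address {unified_addr:#x} is outside the unified space")
        # Find the last region whose unified start is <= unified_addr.
        index = bisect_right(self._starts, unified_addr) - 1
        region = self._regions[index]
        offset = unified_addr - self._starts[index]
        return region.node_id, region.medium, region.phys_base + offset


# Example: two nodes, each contributing one region (values are made up).
address_map = UnifiedAddressMap([
    Region(node_id=0, medium="memory", phys_base=0x1000, size=0x4000),
    Region(node_id=1, medium="memory", phys_base=0x8000, size=0x2000),
])
node_id, medium, phys_addr = address_map.resolve(0x4100)
```

In this sketch the addressing information is the sorted list of region start addresses, so a lookup is a binary search followed by an offset calculation. A real cluster could choose a different layout, such as page-granular or interleaved mapping; the source does not specify one.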

[0059] In some possible implementations, in step S101, the storage space includes internal memory and/or video me...
