Human body behavior recognition method based on deep learning

A deep-learning-based recognition method, applied in the field of deep learning, that can address problems such as insufficient training data, high computing and storage overhead, and difficulty in updating models dynamically. It achieves good recognition results, optimized speed, and improved accuracy.

Embodiment

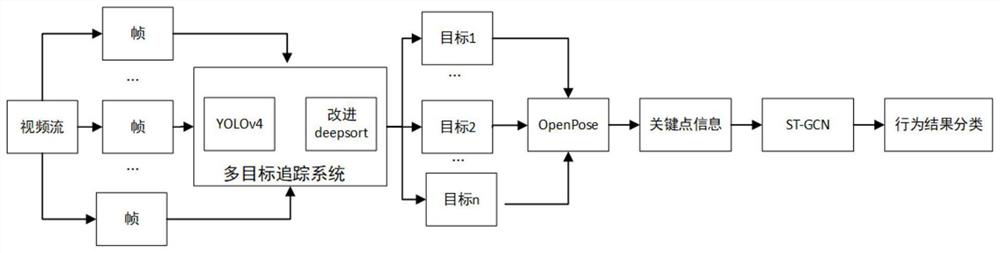

[0071] Embodiment: the deep-learning-based human behavior recognition method comprises the following steps:

[0072] S1, data collection: collect data to form a data set. The data collected in step S1 covers five actions: running, walking, sitting, standing, and falling. The data set consists of video clips drawn partly from NTU-RGB+D, partly from on-site collection, and partly from the web, for a total of 200 video clips, as shown in Figure 2. The data set of video clips (video streams) is divided into a training set, which is used to train the model, and a test set, which is used for testing.
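The split described in S1 can be sketched as follows. This is a minimal illustration, not the patent's procedure: the source does not state the split ratio, the number of clips per action, or any file naming, so the 80/20 ratio, the even 40-clips-per-action layout, the `clips/...` paths, and the `split_clips` helper are all assumptions made for the example.

```python
import random
from collections import defaultdict

# The five actions named in step S1.
ACTIONS = ["running", "walking", "sitting", "standing", "falling"]


def split_clips(clips, test_ratio=0.2, seed=0):
    """Split (clip_path, action) pairs into training and test lists.

    The split is done per action so that each action appears in both sets.
    test_ratio=0.2 is an assumed value; the source does not give a ratio.
    """
    by_action = defaultdict(list)
    for path, action in clips:
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        by_action[action].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for action in ACTIONS:
        paths = sorted(by_action[action])
        rng.shuffle(paths)
        n_test = round(len(paths) * test_ratio)
        test += [(p, action) for p in paths[:n_test]]
        train += [(p, action) for p in paths[n_test:]]
    return train, test


# Hypothetical file names: 40 clips per action, 200 clips in total.
clips = [(f"clips/{a}_{i:03d}.mp4", a) for a in ACTIONS for i in range(40)]
train_set, test_set = split_clips(clips)
```

Splitting per action rather than over the whole pool keeps a rare action such as falling from ending up only in the training set or only in the test set.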

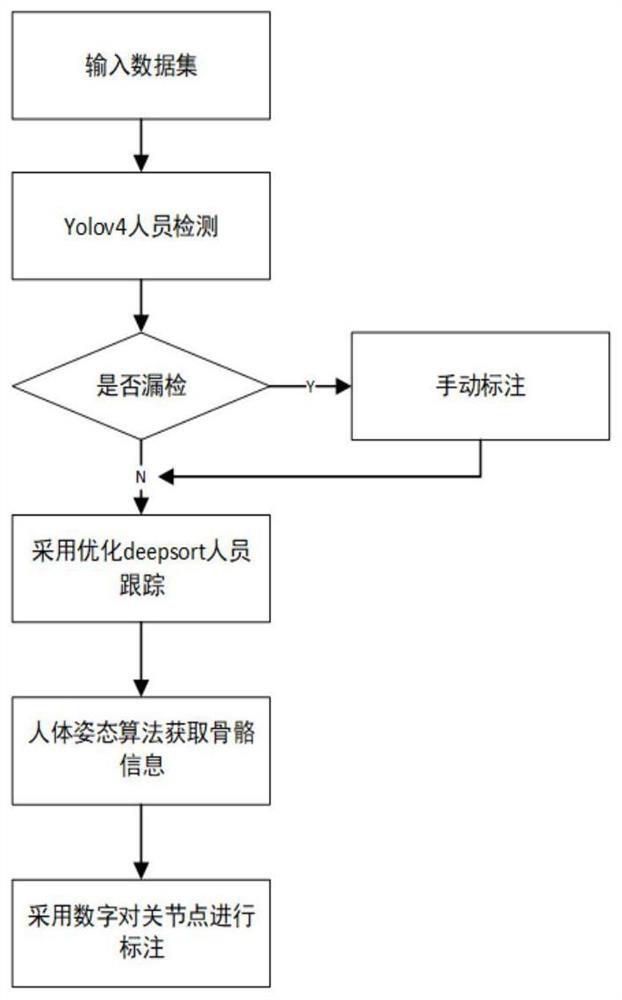

[0073] S2, data set processing: input the data set and perform person detection and tracking on its data; extract the skeleton information of each clip through human body pose estimation to obtain the pose estimation result; and use numbers for the joints ...
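One way to organize the output of S2 is to group the per-frame pose estimates by tracked person, as in the sketch below. It only illustrates a possible data layout: the detector, tracker, and pose estimator are not specified in the text above, so the input is assumed to already be a mapping from track ID to a list of (x, y) keypoints for each frame. The `Joint`, `SkeletonSequence`, and `collect_skeletons` names are hypothetical, and numbering joints by their list position is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Joint:
    index: int  # numeric joint label (assumed: position in the keypoint list)
    x: float
    y: float


@dataclass
class SkeletonSequence:
    track_id: int
    frames: list = field(default_factory=list)  # one list of Joint per frame


def collect_skeletons(per_frame_poses):
    """Group per-frame pose results into one skeleton sequence per person.

    per_frame_poses: list over frames; each frame is a dict mapping a
    track ID to that person's keypoints as a list of (x, y) tuples.
    """
    sequences = {}
    for poses in per_frame_poses:
        for track_id, keypoints in poses.items():
            seq = sequences.setdefault(track_id, SkeletonSequence(track_id))
            seq.frames.append(
                [Joint(i, x, y) for i, (x, y) in enumerate(keypoints)]
            )
    return sequences


# Toy input: person 0 is visible in both frames, person 1 only in the second.
frames = [
    {0: [(0.10, 0.20), (0.30, 0.40)]},
    {0: [(0.10, 0.25), (0.30, 0.45)], 1: [(0.50, 0.50), (0.60, 0.60)]},
]
skeletons = collect_skeletons(frames)
```

Keeping one sequence per track ID means each person in a multi-person clip can later be classified independently.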
