Model training method, text classification method, system, equipment and medium

A technology for model training and text classification, applied in the field of deep learning. It addresses the inaccurate classification of noisy data and achieves the effects of improving accuracy, increasing diversity, and alleviating data imbalance.



Examples

Embodiment 1

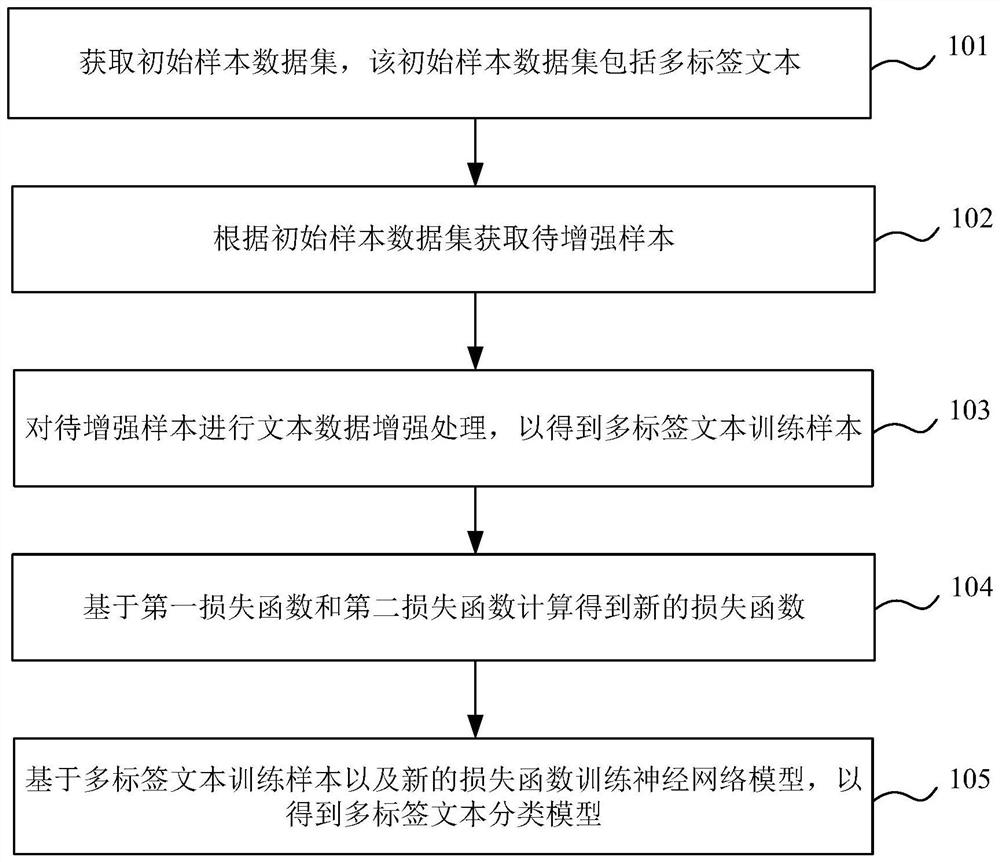

[0071] Such as figure 1 As shown, this embodiment provides a model training method, including:

[0072] Step 101, obtaining an initial sample data set, the initial sample data set includes multi-label text;

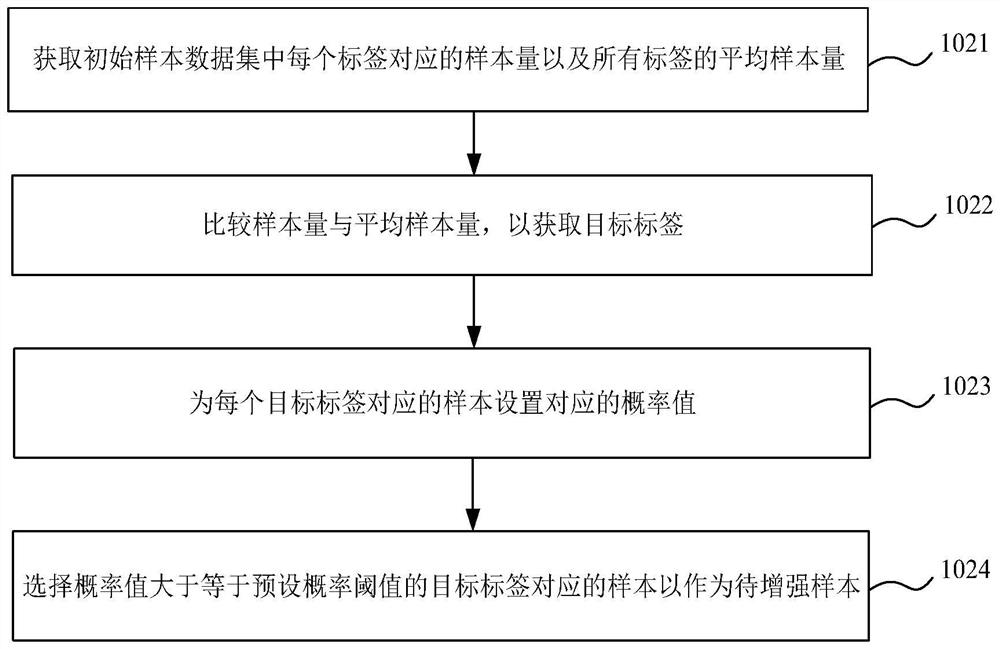

[0073] Step 102, obtaining samples to be enhanced according to the initial sample data set;

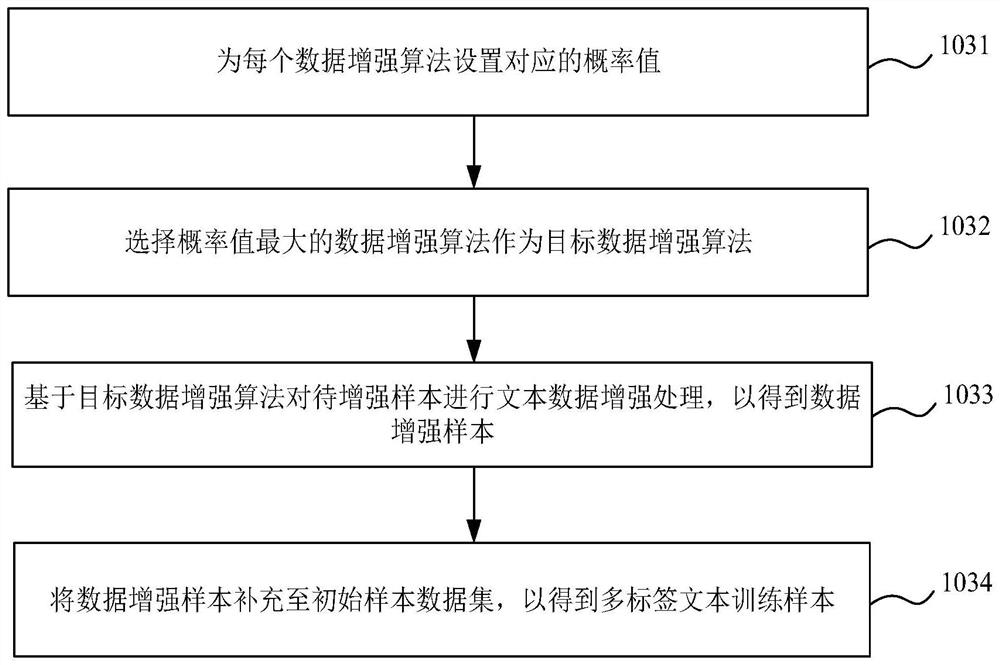

[0074] Step 103, performing text data enhancement processing on the samples to be enhanced to obtain multi-label text training samples;

[0075] Step 104, calculating a new loss function based on the first loss function and the second loss function;

[0076] Step 105, training the neural network model based on multi-label text training samples and a new loss function to obtain a multi-label text classification model;
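Steps 101 to 103 can be illustrated with a minimal Python sketch. The excerpt does not state how the samples to be enhanced are chosen or which enhancement (augmentation) technique is applied, so both are assumptions here: samples are selected when they carry a label that occurs fewer than `max_count` times, and enhancement is a random swap of two tokens. The function names, the `max_count` threshold, the example data, and the choice to keep the original samples in the training set are all hypothetical.

```python
import random
from collections import Counter


def select_samples_to_enhance(dataset, max_count):
    """Step 102 (assumed criterion): keep samples that carry a rare label."""
    label_counts = Counter(label for _, labels in dataset for label in labels)
    return [
        (text, labels)
        for text, labels in dataset
        if any(label_counts[label] < max_count for label in labels)
    ]


def augment_text(text, rng):
    """Step 103 (assumed technique): swap two randomly chosen tokens."""
    tokens = text.split()
    if len(tokens) < 2:
        return text
    i, j = rng.sample(range(len(tokens)), 2)
    tokens[i], tokens[j] = tokens[j], tokens[i]
    return " ".join(tokens)


def build_training_samples(dataset, max_count, seed=0):
    """Steps 101-103: original samples plus one augmented copy of each rare one."""
    rng = random.Random(seed)
    to_enhance = select_samples_to_enhance(dataset, max_count)
    augmented = [(augment_text(text, rng), labels) for text, labels in to_enhance]
    return dataset + augmented


# Step 101: a toy initial sample data set of (text, labels) pairs.
initial = [
    ("refund not received after return", ["billing", "returns"]),
    ("app crashes on login", ["bug"]),
    ("charged twice this month", ["billing"]),
    ("please cancel my subscription", ["billing"]),
]
training = build_training_samples(initial, max_count=2)
```

In this toy data set only `returns` and `bug` occur fewer than two times, so the first two samples are augmented and the labels are copied unchanged onto the augmented text.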

[0077] In this embodiment, the first loss function is a cross-entropy (CE) loss function, and the second loss function is a Kullback-Leibler (KL) divergence loss function.
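One way to combine a CE term and a KL term into a single loss is sketched below. The excerpt does not say how the two losses are combined or which distributions the KL term compares, so this sketch makes three assumptions: the CE term is a per-label binary cross-entropy (a common form for multi-label targets), the KL term is a symmetric divergence between two predictions for the same sample (for example, for its original and enhanced text), and the new loss is a weighted sum controlled by a hypothetical `kl_weight` parameter.

```python
import math


def cross_entropy(probs, targets, eps=1e-12):
    """Binary cross-entropy averaged over labels (assumed multi-label form of CE)."""
    total = sum(
        t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        for p, t in zip(probs, targets)
    )
    return -total / len(probs)


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q), summed over independent per-label Bernoulli distributions."""
    total = 0.0
    for pi, qi in zip(p, q):
        total += pi * math.log((pi + eps) / (qi + eps))
        total += (1 - pi) * math.log((1 - pi + eps) / (1 - qi + eps))
    return total


def combined_loss(probs_a, probs_b, targets, kl_weight=1.0):
    """Assumed combination: mean CE of both predictions + weighted symmetric KL."""
    ce = 0.5 * (cross_entropy(probs_a, targets) + cross_entropy(probs_b, targets))
    kl = 0.5 * (kl_divergence(probs_a, probs_b) + kl_divergence(probs_b, probs_a))
    return ce + kl_weight * kl


# Hypothetical per-label probabilities for one sample with three labels.
targets = [1, 0, 1]
probs_original = [0.9, 0.2, 0.7]
probs_augmented = [0.8, 0.3, 0.6]
loss = combined_loss(probs_original, probs_augmented, targets, kl_weight=0.5)
```

Under these assumptions the KL term is zero when the two predictions agree, so the combined loss reduces to plain CE; any disagreement between the two predictions adds a penalty scaled by `kl_weight`.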

[0078] In this embodiment, the CE Loss function is used to measure the loss between the predicted classification result and the real class...

Embodiment 2

[0101] As shown in Figure 4, this embodiment provides a model training system, including a first acquisition module 1, a second acquisition module 2, a processing module 3, a calculation module 4 and a training module 5;

[0102] The first acquisition module 1 is used to acquire an initial sample data set, where the initial sample data set includes multi-label text;

[0103] The second acquisition module 2 is used to obtain samples to be enhanced according to the initial sample data set;

[0104] The processing module 3 is used to perform text data enhancement processing on the samples to be enhanced to obtain multi-label text training samples;

[0105] The calculation module 4 is used to calculate a new loss function based on the first loss function and the second loss function;

[0106] The training module 5 is used to train the neural network model based on the multi-label text training samples and the new loss function to obtain a multi-label text classification model;
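The five-module structure can be pictured as a small composition of callables. The sketch below only illustrates the data flow between the modules; the class name, field names, and the stub implementations passed in at the bottom are hypothetical and stand in for whatever each module actually does.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ModelTrainingSystem:
    first_acquisition: Callable[[], list]        # module 1: initial sample data set
    second_acquisition: Callable[[list], list]   # module 2: samples to be enhanced
    processing: Callable[[list], list]           # module 3: text data enhancement
    calculation: Callable[[], Callable]          # module 4: builds the new loss function
    training: Callable[[list, Callable], Any]    # module 5: trains the model

    def run(self):
        """Invoke the modules in the order described by the embodiment."""
        initial = self.first_acquisition()
        to_enhance = self.second_acquisition(initial)
        training_samples = self.processing(to_enhance)
        loss_fn = self.calculation()
        return self.training(training_samples, loss_fn)


# Stub modules, for illustration only.
system = ModelTrainingSystem(
    first_acquisition=lambda: [("text a", ["x"]), ("text b", ["y"])],
    second_acquisition=lambda data: data[:1],
    processing=lambda samples: [(text.upper(), labels) for text, labels in samples],
    calculation=lambda: (lambda pred, target: abs(pred - target)),
    training=lambda samples, loss_fn: {
        "n_samples": len(samples),
        "loss": loss_fn(0.8, 1.0),
    },
)
result = system.run()
```

Keeping each module behind a plain callable means any one of them, such as the enhancement step, can be swapped without touching the others.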

[01...

Embodiment 3

[0131] Figure 5 is a schematic structural diagram of an electronic device provided by Embodiment 3 of the present invention. The electronic device includes a memory, a processor, and a computer program stored in the memory and executable on the processor; the model training method of Embodiment 1 is implemented when the processor executes the program. The electronic device 30 shown in Figure 5 is only an example, and should not limit the functions and scope of use of the embodiments of the present invention.

[0132] As shown in Figure 5, the electronic device 30 may take the form of a general-purpose computing device, for example a server device. Components of the electronic device 30 may include, but are not limited to: at least one processor 31, at least one memory 32, and a bus 33 connecting different system components (including the memory 32 and the processor 31).

[0133] The bus 33 includes a data bus, an address bus, and a control bus.

[01...
