Sound processing method and device in virtual scene, equipment and storage medium

This sound processing technology is applied in the field of virtual scenes. It addresses the large amount of calculation and high resource consumption that reduce the efficiency of sound processing, and it achieves lower resource consumption, a simpler calculation process and improved efficiency.

Embodiment Construction

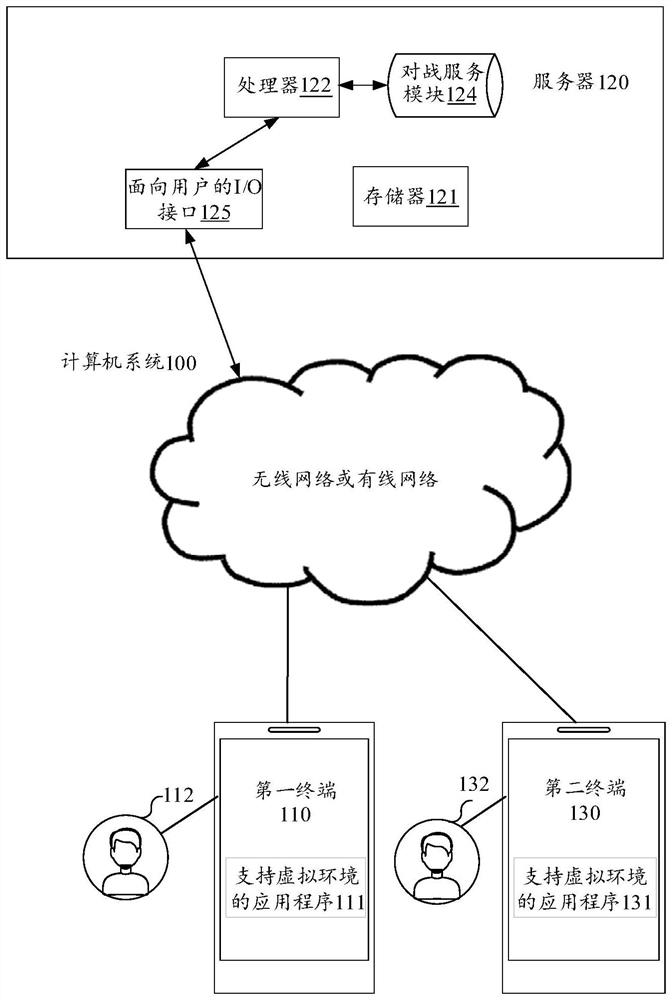

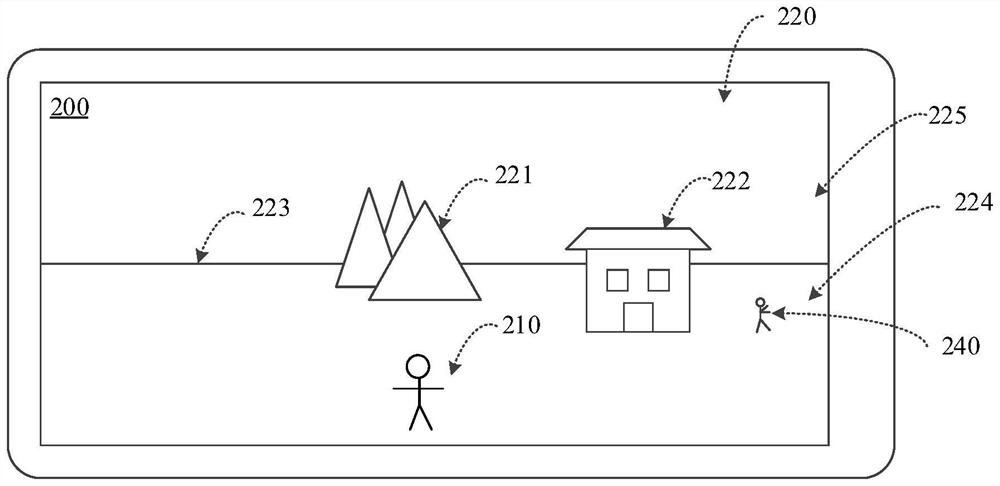

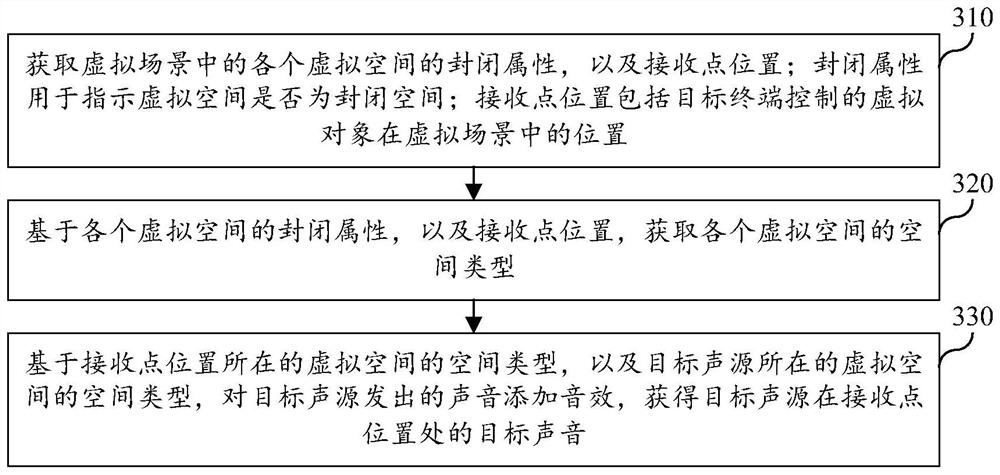

[0082] Reference will now be made in detail to the exemplary embodiments, examples of which are illustrated in the accompanying drawings. When the following description refers to the accompanying drawings, the same numerals in different drawings refer to the same or similar elements unless otherwise indicated. The implementations described in the following exemplary embodiments do not represent all implementations consistent with this application. Rather, they are merely examples of apparatuses and methods consistent with aspects of the present application as recited in the appended claims.

[0083] It should be understood that "several" as used herein means one or more, and "multiple" means two or more. "And / or" describes an association between associated objects and indicates that three relationships are possible; for example, "A and / or B" may indicate that A exists alone, that A and B exist simultaneously, or that B exists alone. The character " / " gener...
