Light-vision fusion integrated system

A lidar technology applied in the field of intelligent transportation that addresses the problems of prolonged data output, difficulty of large-scale adoption, and the inability to fully realize deep sensor fusion.


Embodiment 1

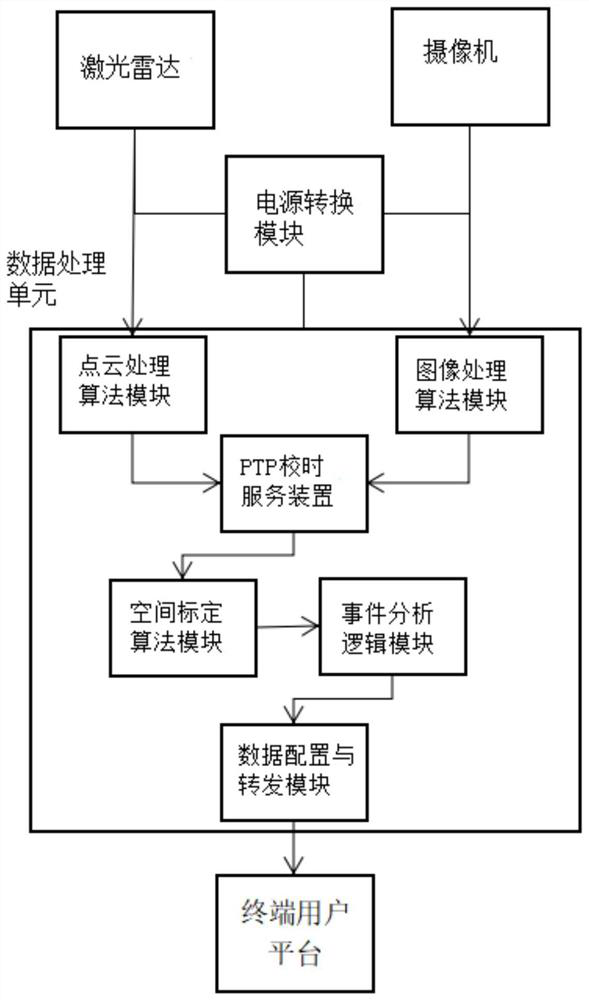

[0025] A light and vision fusion integrated system, as shown in Figure 1, includes a lidar, a camera, and a data processing unit, all arranged in the same device. The lidar and the camera are each connected to the data processing unit. The data processing unit includes a point cloud processing algorithm module, an image processing algorithm module, a PTP time calibration service device, a space calibration algorithm module, an event analysis logic module, and a data configuration and forwarding module. The lidar and camera data first undergo preliminary analysis in the point cloud processing algorithm module and the image processing algorithm module, respectively. The PTP time calibration service device then time-calibrates the lidar, the camera, and the core processor so that the frame rates of the three are synchronized. Spatial calibration is then performed through the...
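To illustrate why a shared time base matters for the fusion step, here is a minimal Python sketch of pairing lidar and camera frames by timestamp once both sensors are stamped from one PTP-disciplined clock. This is not the patent's algorithm. The `Frame` type, the `pair_frames` function, and the 20 ms tolerance are assumptions made for this example only.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Frame:
    sensor: str       # "lidar" or "camera" (hypothetical labels)
    timestamp: float  # seconds on the shared clock (assumed PTP-synchronized)


def pair_frames(lidar, camera, tolerance=0.02):
    """Pair each lidar frame with the camera frame nearest to it in time.

    Both lists must be sorted by timestamp. A lidar frame whose nearest
    camera frame is more than `tolerance` seconds away is left unpaired.
    """
    cam_times = [f.timestamp for f in camera]
    pairs = []
    for lf in lidar:
        # Index of the first camera frame at or after the lidar timestamp.
        i = bisect_left(cam_times, lf.timestamp)
        # The nearest frame is either that one or the one just before it.
        candidates = camera[max(i - 1, 0): i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda cf: abs(cf.timestamp - lf.timestamp))
        if abs(best.timestamp - lf.timestamp) <= tolerance:
            pairs.append((lf, best))
    return pairs
```

Nearest-timestamp pairing is only meaningful when both timestamps come from the same clock, which is the role the PTP time calibration plays in the description above. Without a common clock, an unknown offset between the sensors would make the tolerance check unreliable.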
