Human action recognition method and system based on multi-scale features

A multi-scale feature-based action recognition technology, applied in the field of image processing, addresses the problem that features are not modeled independently along the temporal and spatial dimensions of a video sequence. It reduces computing resource consumption and computing load while improving performance.


Embodiment approach

[0043] Where no conflict arises, the embodiments of the invention and the features of the embodiments may be combined with each other.

[0044] Action recognition algorithms are now deployed in a range of application scenarios, such as power plants, factories, and streets, where the background environment is relatively complex. For spatiotemporal feature extraction, the algorithm must locate humans in these varied environments and identify the features that contribute most to action classification. Because human actions are diverse, a well-performing action recognition algorithm must also recognize fine-grained actions. Different actions take the performer different amounts of time to execute, so the algorithm must consider both short-term and long-term features along the time dimension. Due to the requirements of current monitoring-level equipment, action recognition a...

Embodiment 1

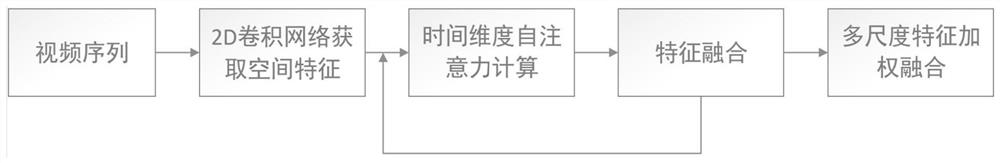

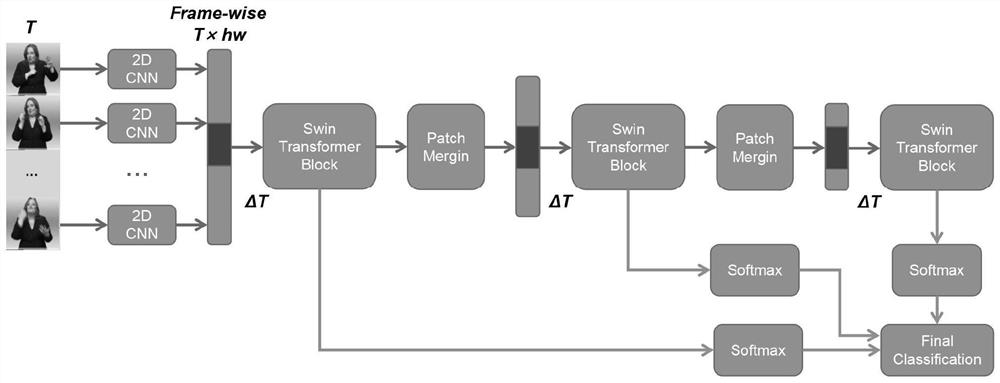

[0046] As shown in Figures 1 and 2, this embodiment discloses a method for human action recognition based on multi-scale features, including the following steps:

[0047] For a video sequence containing T frames, the model first extracts the features of each frame with a 2D convolutional neural network, yielding a feature representation of dimension T×HW;
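The per-frame extraction step can be sketched in plain NumPy. This is a minimal illustration, not the patent's network: a single hypothetical 3×3 convolution followed by ReLU stands in for the 2D convolutional neural network, frames are assumed to be single-channel, and the helper names `conv2d_valid` and `extract_frame_features` are invented for this example. The point it shows is that the same 2D operator is applied to each of the T frames independently, and each H×W output map is flattened into one row of a T×HW matrix.

```python
import numpy as np


def conv2d_valid(frame: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation of one single-channel frame with one kernel."""
    kh, kw = kernel.shape
    h_out = frame.shape[0] - kh + 1
    w_out = frame.shape[1] - kw + 1
    out = np.empty((h_out, w_out), dtype=np.float64)
    for i in range(h_out):
        for j in range(w_out):
            out[i, j] = np.sum(frame[i:i + kh, j:j + kw] * kernel)
    return out


def extract_frame_features(video: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply the same toy 2D 'CNN' (conv + ReLU) to every frame.

    video: (T, H_in, W_in) single-channel frames.
    Returns a (T, H*W) matrix, one flattened feature map per frame.
    """
    rows = []
    for frame in video:
        fmap = np.maximum(conv2d_valid(frame, kernel), 0.0)  # ReLU
        rows.append(fmap.reshape(-1))                         # H×W -> HW
    return np.stack(rows, axis=0)


rng = np.random.default_rng(0)
video = rng.random((8, 16, 16))          # T = 8 frames of 16×16 pixels
kernel = rng.standard_normal((3, 3))     # hypothetical filter weights
features = extract_frame_features(video, kernel)
print(features.shape)  # (8, 196): T = 8, HW = 14 * 14
```

In practice the extractor would be a deeper, multi-channel CNN; only the T×HW layout is meant to carry over from this toy.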

[0048] A local window of size ΔT is taken along the time dimension, self-attention is computed within each window, and the maximum response $R_1$ based on the primary local features is obtained.
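The windowed self-attention step can be illustrated on a T×HW feature matrix. This sketch makes several assumptions the text does not state: queries, keys, and values are the features themselves (no learned projections), T is a multiple of ΔT so the windows tile the sequence exactly, and the "maximum response" $R_1$ is read as an element-wise max over the frames of each window. The function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def window_self_attention(x: np.ndarray, window: int) -> np.ndarray:
    """Scaled dot-product self-attention inside non-overlapping temporal windows.

    x: (T, D) frame features (D = HW). Assumes T % window == 0.
    Q = K = V = x (assumption: no learned projections).
    Each frame attends only to the frames in its own window of size `window`.
    """
    T, D = x.shape
    if T % window != 0:
        raise ValueError("T must be a multiple of the window size")
    xw = x.reshape(T // window, window, D)             # (num_windows, ΔT, D)
    scores = xw @ xw.transpose(0, 2, 1) / np.sqrt(D)   # (num_windows, ΔT, ΔT)
    out = softmax(scores, axis=-1) @ xw                # (num_windows, ΔT, D)
    return out.reshape(T, D)


def max_response(attended: np.ndarray, window: int) -> np.ndarray:
    """Hypothetical reading of R1: element-wise max over the frames of each window."""
    T, D = attended.shape
    return attended.reshape(T // window, window, D).max(axis=1)


rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 12))    # T = 8 frames, HW = 12
local = window_self_attention(feats, window=4)
r1 = max_response(local, window=4)
print(local.shape, r1.shape)            # (8, 12) (2, 12)
```

Because each window is processed independently, the number of attention scores is (T/ΔT)·ΔT² = T·ΔT rather than T², which is one way such a design limits computing load.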

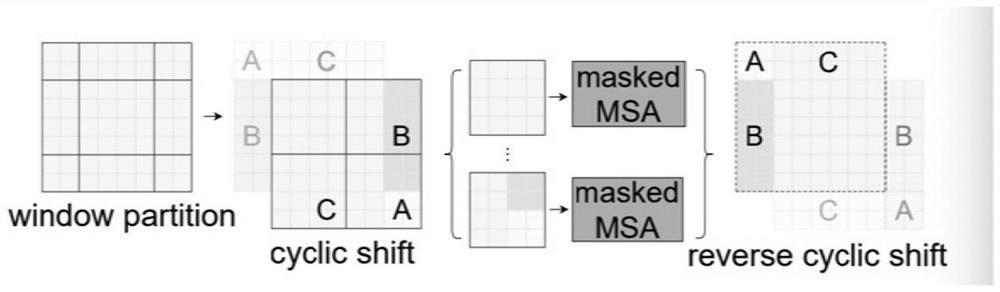

[0049] A shift operation is applied to the primary features, and self-attention is computed again on the shifted windows to expand the model's receptive field.
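The shift step can be sketched in the style of the shifted windows used by Swin Transformer, reduced here to the single time axis. Assumptions: the shift is half a window (ΔT/2), it is cyclic via `np.roll`, and, unlike Swin Transformer, no attention mask is applied, so the wrapped-around window mixes the last and first frames (a simplification). The attention helper is redefined so the block runs on its own, and all function names are hypothetical. The final lines check the receptive-field claim: after one regular and one shifted window pass, frame 3 is influenced by frame 4 even though they sit in different regular windows.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def window_self_attention(x: np.ndarray, window: int) -> np.ndarray:
    """Self-attention within non-overlapping temporal windows (Q = K = V = x)."""
    T, D = x.shape
    xw = x.reshape(T // window, window, D)
    scores = xw @ xw.transpose(0, 2, 1) / np.sqrt(D)
    return (softmax(scores, axis=-1) @ xw).reshape(T, D)


def shifted_window_attention(x: np.ndarray, window: int) -> np.ndarray:
    """Cyclically shift frames by window // 2, attend within windows, shift back.

    No attention mask is used, so the last window wraps around and mixes
    frames from the end and the start of the clip (simplification).
    """
    shift = window // 2
    shifted = np.roll(x, -shift, axis=0)      # frame t moves to row t - shift
    attended = window_self_attention(shifted, window)
    return np.roll(attended, shift, axis=0)   # restore original frame order


rng = np.random.default_rng(1)
feats = rng.standard_normal((8, 6))           # T = 8, HW = 6, ΔT = 4
primary = window_self_attention(feats, 4)     # regular windows: frames 0-3, 4-7
expanded = shifted_window_attention(primary, 4)

bumped = feats.copy()
bumped[4] += 1.0                              # change only frame 4
primary_b = window_self_attention(bumped, 4)
expanded_b = shifted_window_attention(primary_b, 4)
print(np.allclose(primary[3], primary_b[3]))    # True: frame 3 cannot see frame 4 yet
print(np.allclose(expanded[3], expanded_b[3]))  # False: after the shift it can
```

Alternating regular and shifted windows lets information cross window boundaries without ever computing attention over the whole clip at once.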

[0050] Here, HW denotes the size of the feature map extracted by the neural network. Each frame's feature map is reshaped into a 1×HW feature vector, and the T frames are then stacked to form a T×HW feature map. The shift operation is an operation of the SwinTra...
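The reshape-and-stack step amounts to flattening each H×W map in row-major order and concatenating the rows. The sketch below, using a hypothetical helper name and toy integer maps, checks that stacking per-frame 1×HW vectors gives the same T×HW matrix as a single reshape of a (T, H, W) array, so row t always holds frame t.

```python
import numpy as np


def stack_frame_vectors(fmaps: list) -> np.ndarray:
    """Reshape each H×W feature map to 1×HW, then stack the T rows into T×HW."""
    rows = [f.reshape(1, -1) for f in fmaps]   # each row has shape (1, HW)
    return np.concatenate(rows, axis=0)        # shape (T, HW)


# Four toy 2×3 feature maps (T = 4, H = 2, W = 3), offset per frame.
fmaps = [np.arange(6).reshape(2, 3) + 10 * t for t in range(4)]
F = stack_frame_vectors(fmaps)
print(F.shape)     # (4, 6)
print(F[1])        # [10 11 12 13 14 15]
print(np.array_equal(F, np.stack(fmaps).reshape(4, -1)))  # True
```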

Embodiment 2

[0072] This embodiment provides a computing device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor; when executing the program, the processor implements the steps of the above method.

