High-performance non-blocking parallel memory management device for cooperatively executed parallel software

A memory and memory-pool technology, applied to memory systems, stored-program control, and concurrent instruction execution, that addresses problems such as complexity and error-proneness.

Embodiment Construction

[0040] In describing the preferred embodiment of the invention, reference will be made to the accompanying drawings 1-11, wherein like numerals indicate like features of the invention. Features of the invention are not necessarily shown to scale in the drawings.

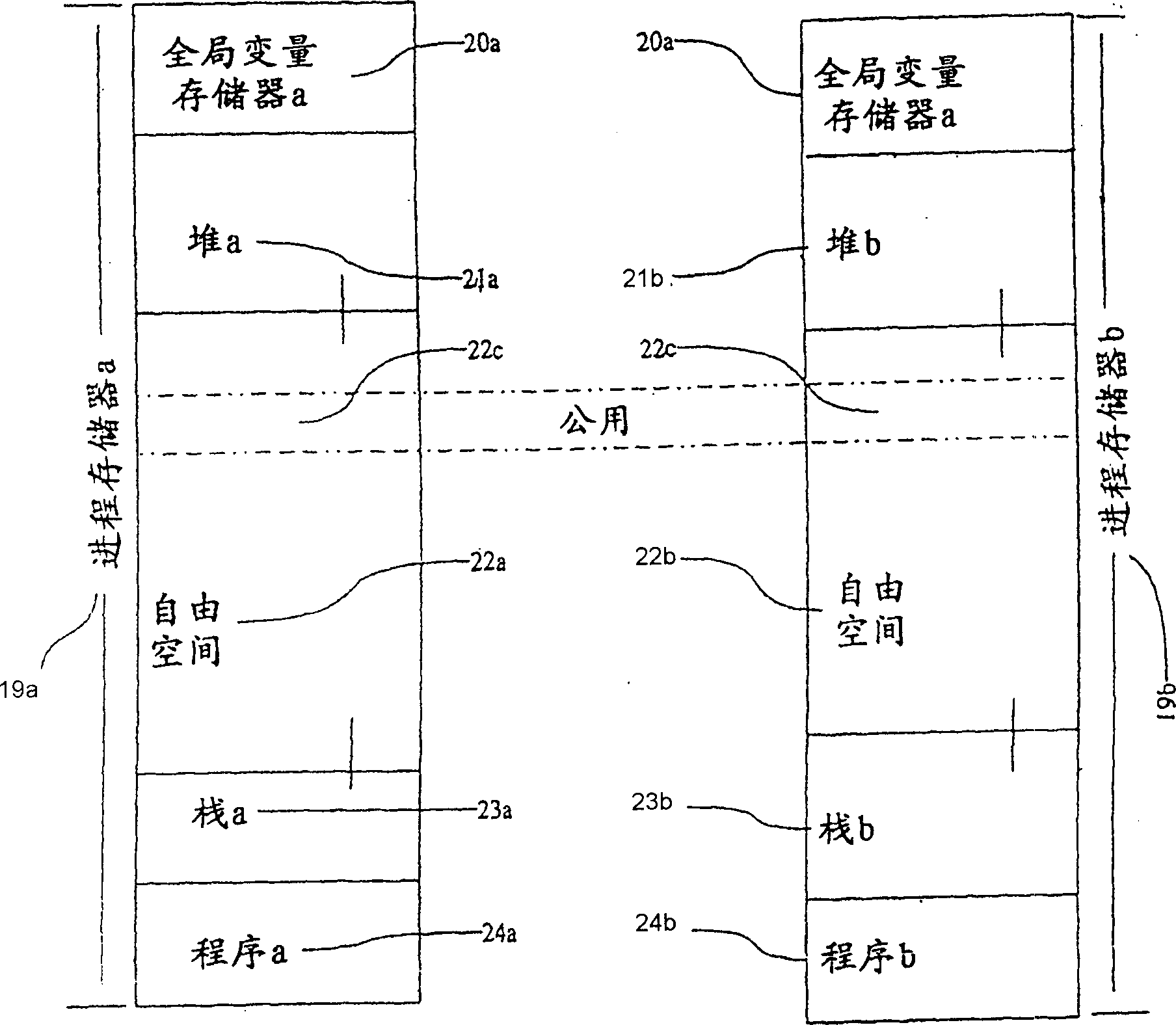

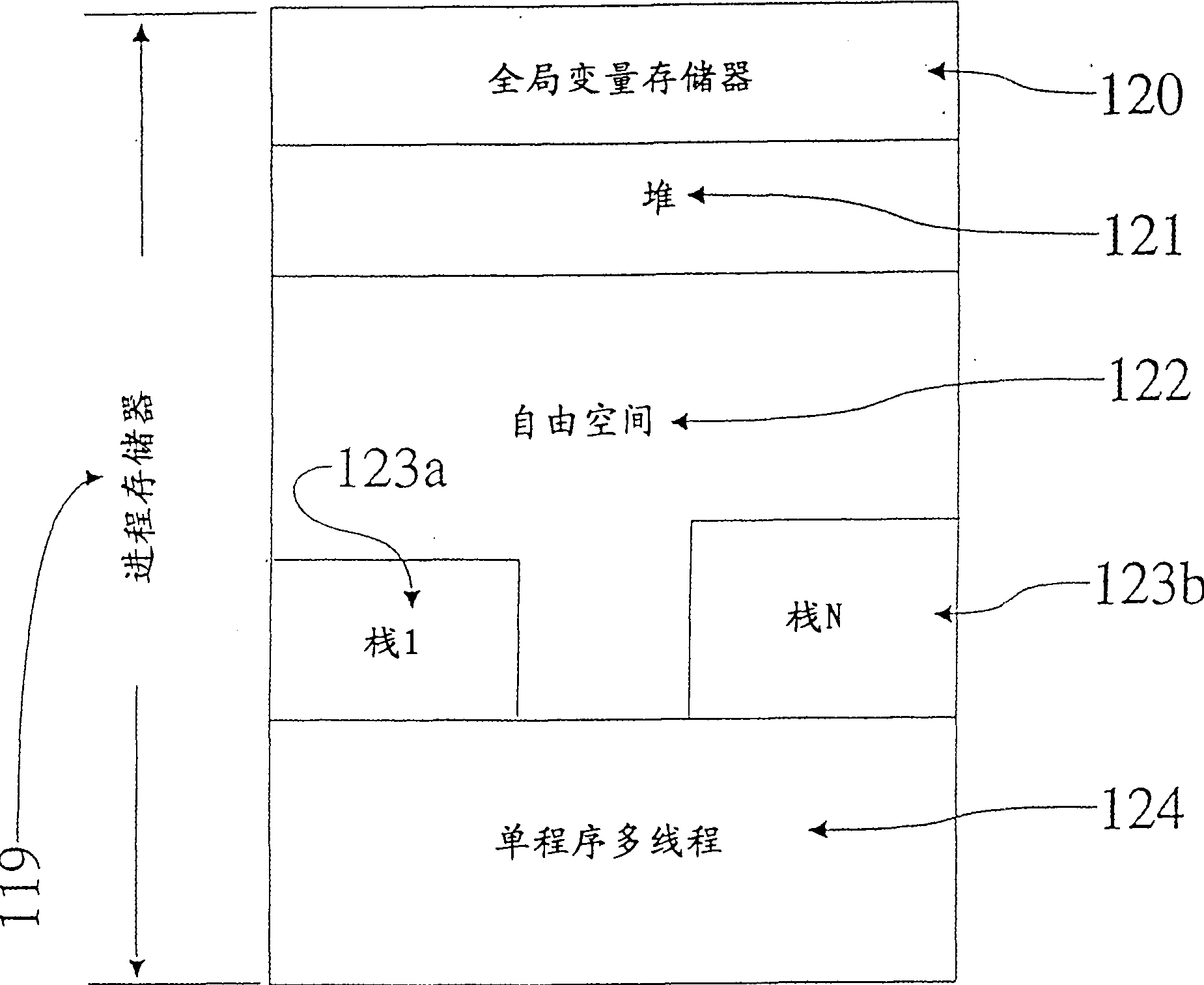

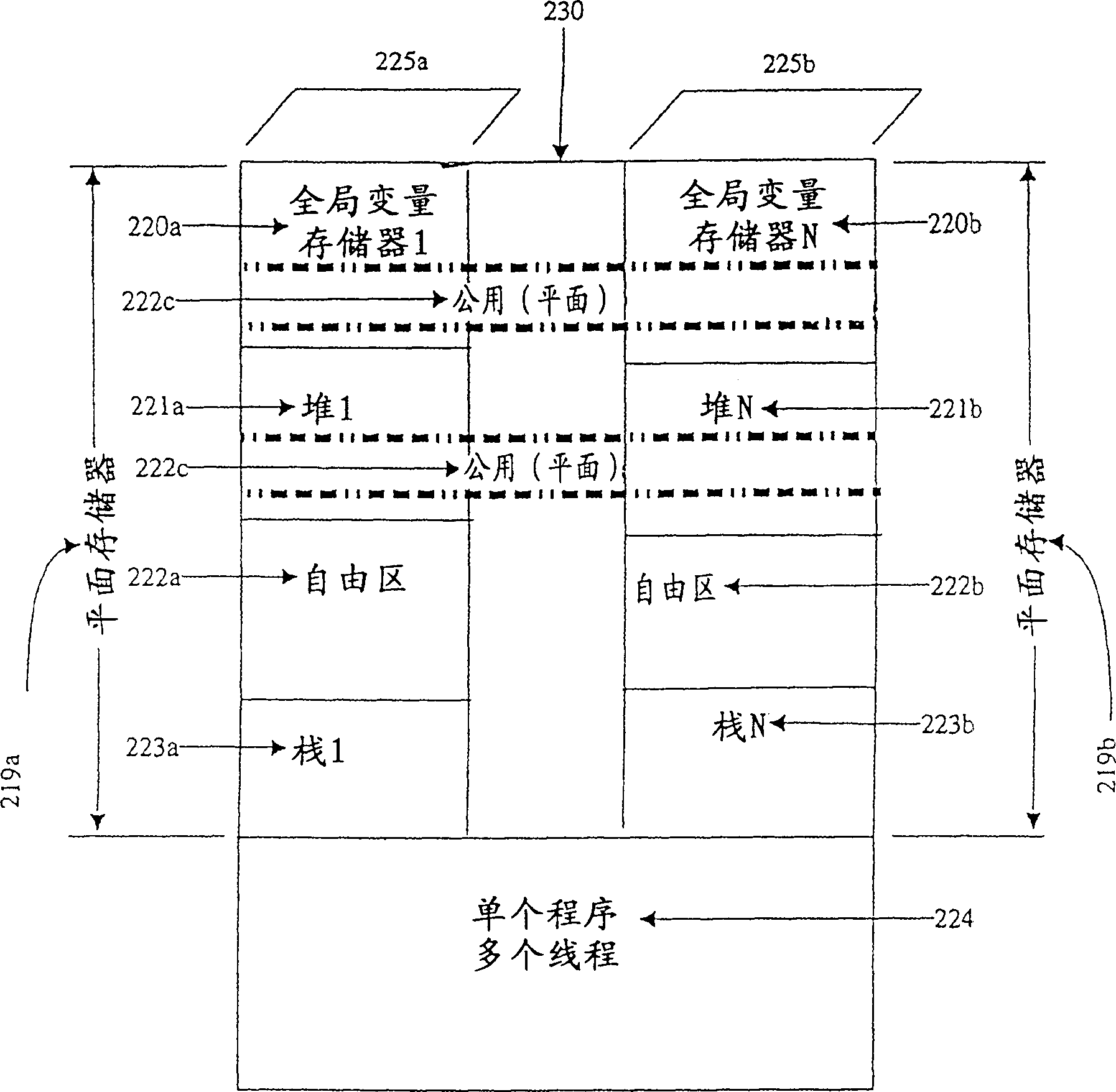

[0041] Parallel software processing system

[0042] To overcome serialization limitations in accessing system services during parallel processing, the present invention tailors a programming approach, expressed in high-level language syntax, that implicitly removes these considerations from the programmer's purview, resulting in significant improvements in parallel applications. Specifically, the invention provides, in various aspects, a coordination system that naturally separates the data space and each parallel thread, a method of associating threads and data spaces, and a high-level language to describe and manage this separation. The system of the present invention containing the structure described further below may be ...
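The idea of giving each parallel thread its own data space, so that memory requests do not serialize on a shared service, can be illustrated with a minimal sketch. This is not the patent's implementation: it assumes Python's standard `threading.local` as the mechanism that associates a thread with its data space, and the names `PrivatePool`, `current_pool`, `worker`, and `run` are hypothetical, introduced only for illustration.

```python
import threading


class PrivatePool:
    """Hypothetical free-list pool owned by exactly one thread (no lock needed)."""

    def __init__(self, block_size):
        self.block_size = block_size
        self.free_blocks = []   # blocks returned by the owning thread
        self.allocated = 0      # number of fresh blocks ever created

    def allocate(self):
        # Reuse a released block when possible; otherwise create a new one.
        if self.free_blocks:
            return self.free_blocks.pop()
        self.allocated += 1
        return bytearray(self.block_size)

    def release(self, block):
        self.free_blocks.append(block)


# Assumption: threading.local stands in for the thread/data-space association.
_spaces = threading.local()


def current_pool(block_size=64):
    """Return the calling thread's private pool, creating it on first use."""
    pool = getattr(_spaces, "pool", None)
    if pool is None:
        pool = PrivatePool(block_size)
        _spaces.pool = pool
    return pool


def worker(results, index, rounds):
    pool = current_pool()
    for _ in range(rounds):
        block = pool.allocate()   # touches only this thread's pool
        block[0] = index
        pool.release(block)
    results[index] = pool         # keep the pool alive for inspection


def run(n_threads=4, rounds=1000):
    """Start n_threads workers and return the pool each one used."""
    results = [None] * n_threads
    threads = [
        threading.Thread(target=worker, args=(results, i, rounds))
        for i in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Calling `run(4, 100)` returns four distinct pool objects, one per thread. Because no pool is ever touched by a thread other than its owner, allocation and release in this sketch need no lock, which is the kind of serialization the paragraph above describes avoiding.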
