Computer microarchitecture comprising an explicit cache (Ecache)

A cache and microarchitecture technology in the field of computer systems. It addresses the problems of a cache that is invisible to the compiler, poor program locality, and memory-access misses, and achieves easy identification and implementation as well as fast access.

Detailed Description of Embodiments

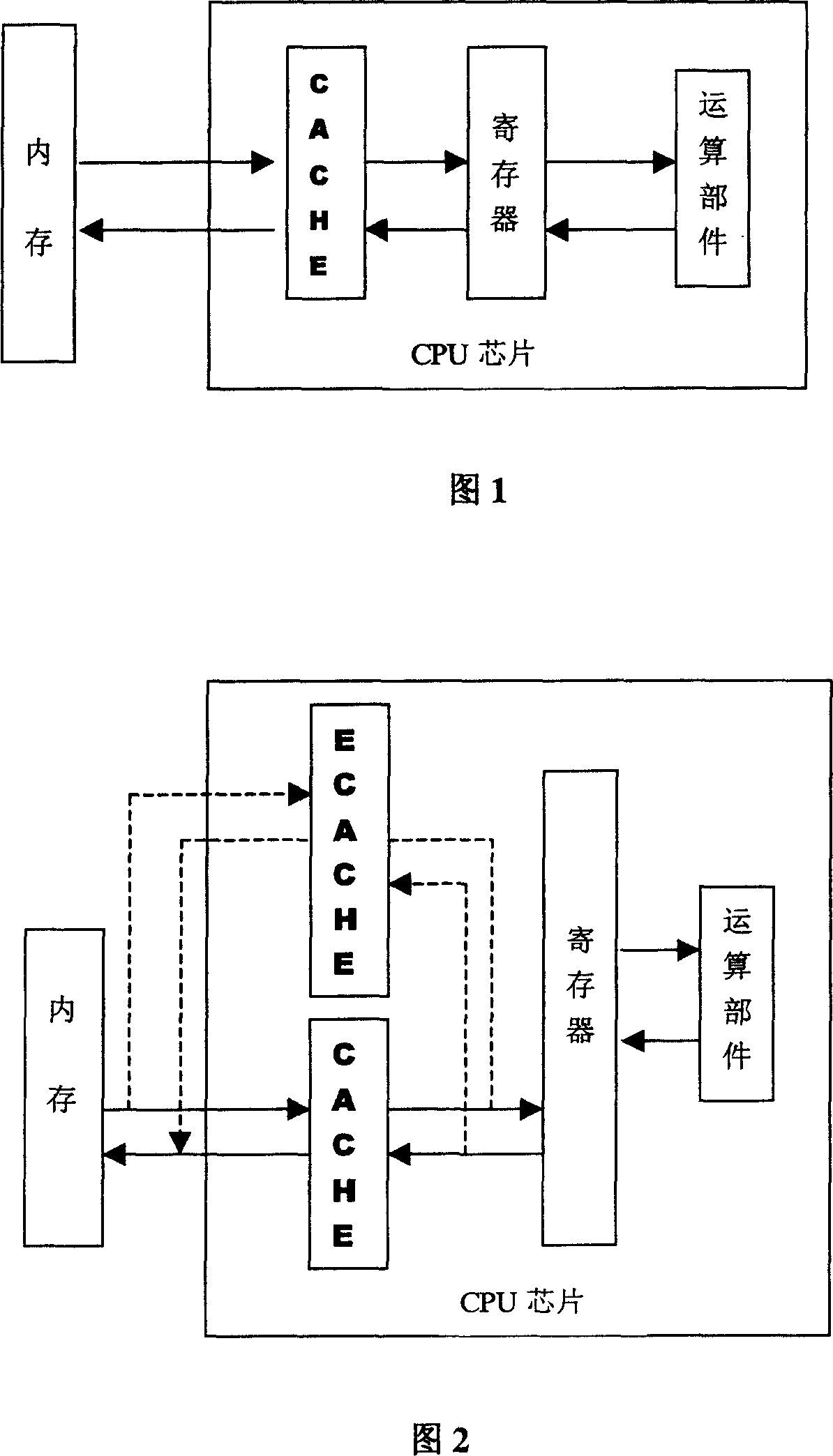

[0031] The present invention is described below with reference to the accompanying drawings. As shown in Fig. 2, the present invention adds an Ecache to the CPU chip; the Ecache is essentially an on-chip high-speed memory. The Ecache is connected in the same way as the cache in an existing computer system: one side is connected to the registers and the other side to the main memory of the computer system. Data transfer between the Ecache and memory, and between the Ecache and the registers, reuses the existing cache transfer mechanism.
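The connection scheme in [0031] can be modeled in a few lines. This is a behavioral sketch under stated assumptions, not the patent's hardware: the Ecache is represented as a plain word array placed between the register file and main memory, and the hypothetical helper `transfer` stands in for the existing cache transfer mechanism that the Ecache reuses in both directions. The class name `Machine`, the array sizes, and `LINE_SIZE` are illustrative choices.

```python
# Behavioral model (illustrative assumption, not the patent's hardware):
# the Ecache sits between the register file and main memory, in the same
# position as a conventional cache, and reuses the cache transfer path.

LINE_SIZE = 4  # words moved per block transfer (hypothetical value)


def transfer(src, src_addr, dst, dst_addr, n=LINE_SIZE):
    """Copy n words from src to dst; stands in for the existing cache
    fill/write-back mechanism that the Ecache shares (hypothetical)."""
    if src_addr + n > len(src) or dst_addr + n > len(dst):
        raise IndexError("block transfer out of range")
    dst[dst_addr:dst_addr + n] = src[src_addr:src_addr + n]


class Machine:
    def __init__(self, num_regs=8, ecache_words=16, memory_words=64):
        self.regs = [0] * num_regs           # register file
        self.ecache = [0] * ecache_words     # on-chip Ecache
        self.memory = [0] * memory_words     # off-chip main memory

    # Memory side of the Ecache: both directions use the shared transfer path.
    def fill_ecache(self, mem_addr, ecache_addr):
        transfer(self.memory, mem_addr, self.ecache, ecache_addr)

    def write_back(self, ecache_addr, mem_addr):
        transfer(self.ecache, ecache_addr, self.memory, mem_addr)

    # Register side of the Ecache: single-word moves.
    def load_reg(self, reg, ecache_addr):
        self.regs[reg] = self.ecache[ecache_addr]

    def store_reg(self, reg, ecache_addr):
        self.ecache[ecache_addr] = self.regs[reg]
```

For example, `fill_ecache(8, 0)` copies memory words 8 to 11 into Ecache words 0 to 3, after which `load_reg(2, 3)` reads the last of them into register 2. Routing both memory-side directions through one `transfer` function mirrors the point that the Ecache adds no new transfer mechanism of its own.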

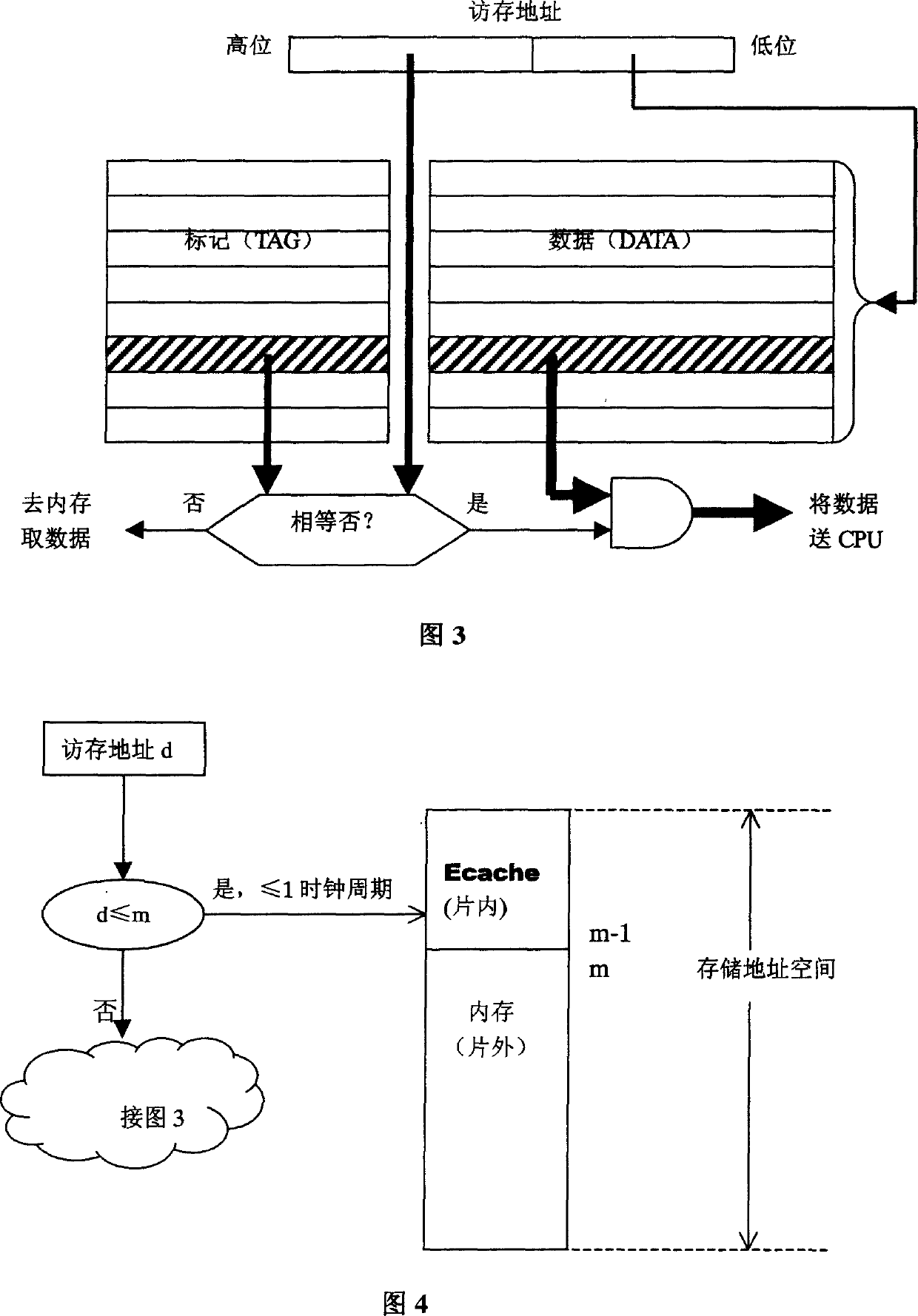

[0032] Fig. 4 is a schematic diagram of the unified addressing of the Ecache and memory and of how the address space is divided between them. As Fig. 4 shows, the Ecache inside the CPU chip and the memory outside the CPU chip share a single, unified address space, numbered from the low addresses upward. When an access is made, the address is first compared with m. If it is less than m, the access goes directly to the Ecache, and the compiler ensures that the data to...
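The address check in [0032] amounts to a single comparison. The sketch below assumes, beyond what the text states, that the Ecache holds `m` words at addresses 0 to m-1 and that any address at or above `m` maps to off-chip memory at offset `addr - m`. The class `UnifiedAddressSpace`, its methods, and the default sizes are hypothetical.

```python
# Sketch of the unified address check described for Fig. 4.
# Assumptions (not stated in the patent): the Ecache holds m words at
# addresses 0..m-1, and an address >= m maps to off-chip memory at
# offset addr - m.


class UnifiedAddressSpace:
    def __init__(self, m=16, memory_words=256):
        self.m = m                        # Ecache size; the bound "m" in the text
        self.ecache = [0] * m             # on-chip Ecache
        self.memory = [0] * memory_words  # off-chip memory

    def _route(self, addr):
        """Return (bank, offset) for a unified address."""
        if addr < 0:
            raise ValueError("negative address")
        if addr < self.m:                 # address < m: direct Ecache access
            return self.ecache, addr
        offset = addr - self.m            # otherwise: off-chip memory
        if offset >= len(self.memory):
            raise IndexError("address beyond end of memory")
        return self.memory, offset

    def load(self, addr):
        bank, offset = self._route(addr)
        return bank[offset]

    def store(self, addr, value):
        bank, offset = self._route(addr)
        bank[offset] = value
```

Because the Ecache occupies a contiguous low range in this model, routing an access needs only one comparison with `m` and no tag lookup, which fits the fast access the design aims for.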