Reducing context memory requirements in a multi-tasking system

Technology for multi-tasking systems and context memory, applied in computing, instruments, and electric digital data processing. It addresses a growing number of channels, rising manufacturing cost, and the need to hold all of the memory on the chip, by reducing the context memory requirement of each task.

Embodiment Construction

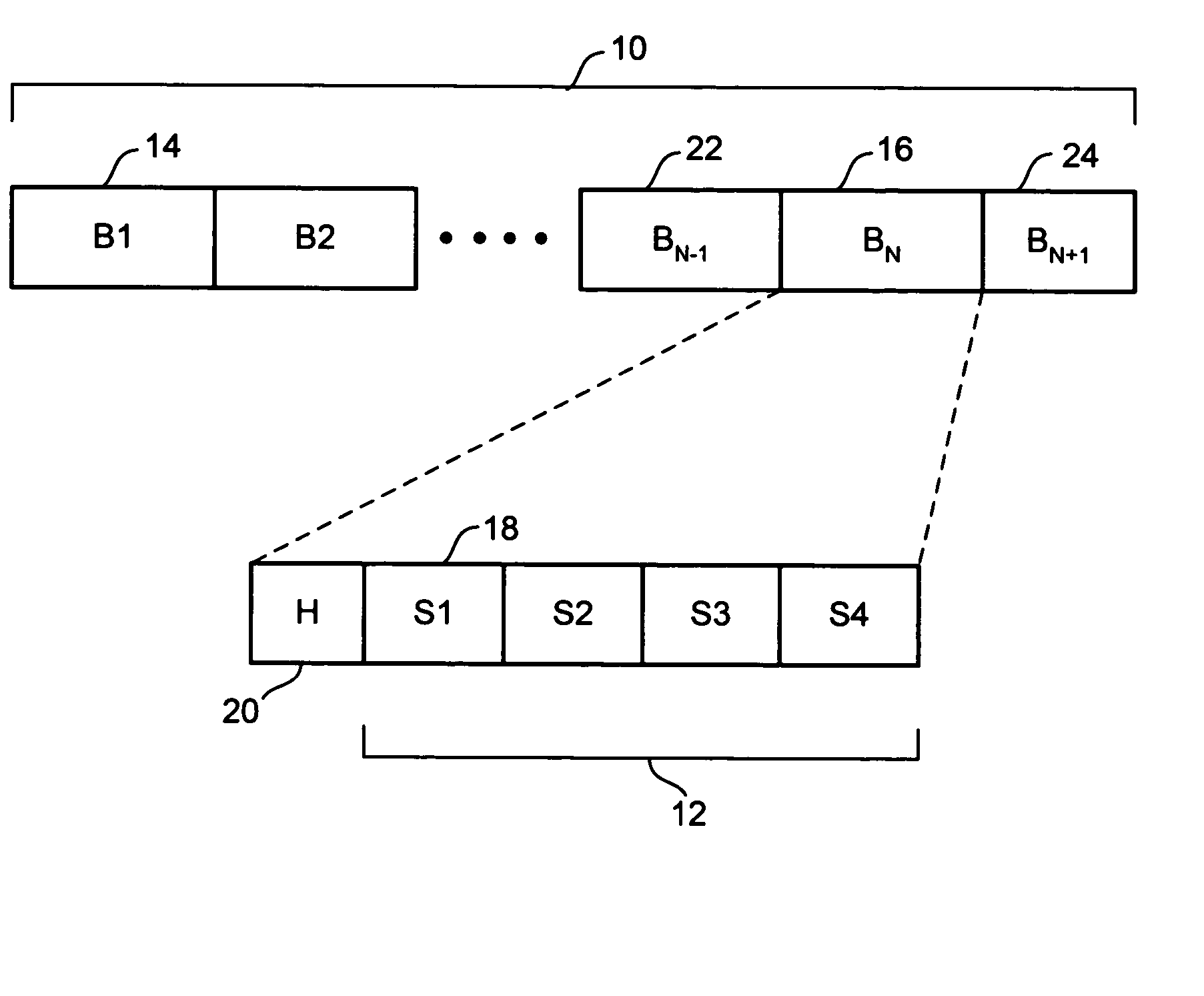

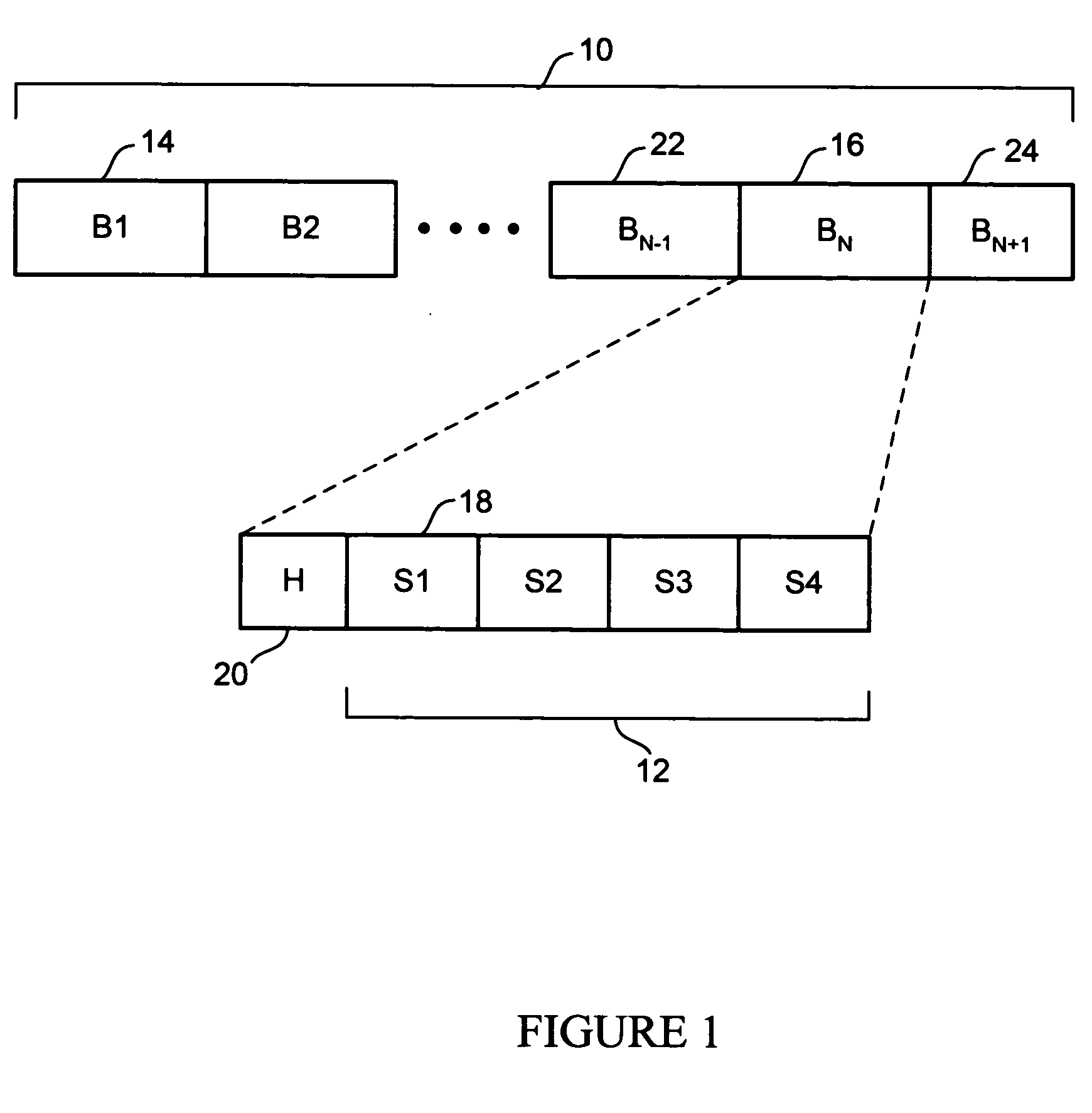

[0013] The preferred and alternative exemplary embodiments of the present invention include a channel-context compression algorithm that runs on a hardware engine in a processor with 16-bit data words. The algorithm also operates effectively on processors that use 32-bit or other data-word sizes. The exemplary encoder performs an adaptive packing operation. Referring to FIG. 1, the input to the encoder is divided into blocks 10 of four 16-bit words 12, illustrated as samples S1 through S4. A block 10 may instead contain any reasonable number of words as samples, such as six, eight, or ten. The words 12 are treated as two's-complement integers. Each block 14 is examined to find the word with the largest number of significant bits. This number of significant bits is called the packing width, and every word in the block can be represented with this number of bits. For example, if word S1 18, with a value of −100, has the largest magnitude in block BN 16, then block BN 16 is assigned a ...
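The adaptive packing step can be sketched in software as follows. This is a minimal illustration under stated assumptions, not the patented hardware engine. The source paragraph is truncated before it describes how the packing width is stored or how packed words are laid out. The 4-bit header holding `width - 1`, the high-to-low field order, and the names `packing_width`, `pack_block`, and `unpack_block` are therefore all hypothetical. "Significant bits" is taken here to include the sign bit, so under this sketch's definition −100 needs 8 bits (range −128 to 127).

```python
from typing import List, Tuple

WORD_BITS = 16    # data-word size of the exemplary processor
BLOCK_SIZE = 4    # samples S1..S4 per block
HEADER_BITS = 4   # hypothetical header: stores (packing width - 1), i.e. 1..16


def packing_width(value: int) -> int:
    """Smallest w such that value fits in a w-bit two's-complement field."""
    if value < 0:
        value = ~value  # -x - 1 has the same magnitude bits as negative x
    return value.bit_length() + 1  # +1 for the sign bit


def pack_block(block: List[int]) -> Tuple[int, int]:
    """Pack one block; return (bits, bit_count) with the header in the top bits."""
    lo, hi = -(1 << (WORD_BITS - 1)), (1 << (WORD_BITS - 1)) - 1
    for s in block:
        if not lo <= s <= hi:
            raise ValueError(f"{s} is not a {WORD_BITS}-bit two's-complement word")
    width = max(packing_width(s) for s in block)  # the block's packing width
    mask = (1 << width) - 1
    bits = width - 1  # hypothetical header layout
    for s in block:
        bits = (bits << width) | (s & mask)  # keep only the low `width` bits
    return bits, HEADER_BITS + width * len(block)


def unpack_block(bits: int, bit_count: int, count: int = BLOCK_SIZE) -> List[int]:
    """Invert pack_block: read the header, then sign-extend each field."""
    width = (bits >> (bit_count - HEADER_BITS)) + 1
    mask = (1 << width) - 1
    out = []
    for i in range(count):
        field = (bits >> (width * (count - 1 - i))) & mask
        if field >> (width - 1):  # sign bit set: restore the negative value
            field -= 1 << width
        out.append(field)
    return out


if __name__ == "__main__":
    block = [-100, 3, 17, -2]
    bits, n = pack_block(block)
    print(packing_width(-100))    # 8
    print(n)                      # 36 bits instead of 4 * 16 = 64
    print(unpack_block(bits, n))  # [-100, 3, 17, -2]
```

Because every word in a block shares one width, the per-block overhead is a single small header. The savings come from blocks whose samples stay small in magnitude.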
