Heterogeneous multi-core system-oriented process scheduling method

Relates to process scheduling and heterogeneous multi-core technology, applied in the field of multiprogramming devices and resource allocation. It addresses the problems of slow response speed and increased total execution time, and achieves load balancing.

Embodiment Construction

[0028] 1) Implementation of process allocation strategy

[0029] In the heterogeneous multi-core system, the processing cores are not all identical: the system consists of one master general-purpose processing core and several identical auxiliary processing cores. All cores are nevertheless equivalent in terms of data operations, that is, they access main memory and I/O devices in the same way. The main processing core is handled separately, while the multiple auxiliary processing cores are treated as a single processing-core pool.
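A minimal sketch of the core topology described above, assuming a Python model in which the main core is held on its own and the auxiliary cores form an interchangeable pool. The names `CoreKind`, `Core`, `HeterogeneousSystem`, `build`, and `idle_aux_core` are hypothetical illustrations, not taken from the patent.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class CoreKind(Enum):
    MAIN = "main"        # the single general-purpose master core
    AUXILIARY = "aux"    # one of several identical auxiliary cores


@dataclass
class Core:
    core_id: int
    kind: CoreKind
    busy: bool = False


@dataclass
class HeterogeneousSystem:
    """Hypothetical model: the main core is handled on its own,
    while the auxiliary cores are grouped into one pool."""
    main: Core
    aux_pool: List[Core] = field(default_factory=list)

    @classmethod
    def build(cls, n_aux: int) -> "HeterogeneousSystem":
        main = Core(core_id=0, kind=CoreKind.MAIN)
        pool = [Core(core_id=i, kind=CoreKind.AUXILIARY) for i in range(1, n_aux + 1)]
        return cls(main=main, aux_pool=pool)

    def idle_aux_core(self) -> Optional[Core]:
        # Auxiliary cores are identical, so the first idle one is as good as any.
        for core in self.aux_pool:
            if not core.busy:
                return core
        return None
```

Because the auxiliary cores are interchangeable, a scheduler built on this model only has to ask the pool for some idle core rather than track a particular one.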

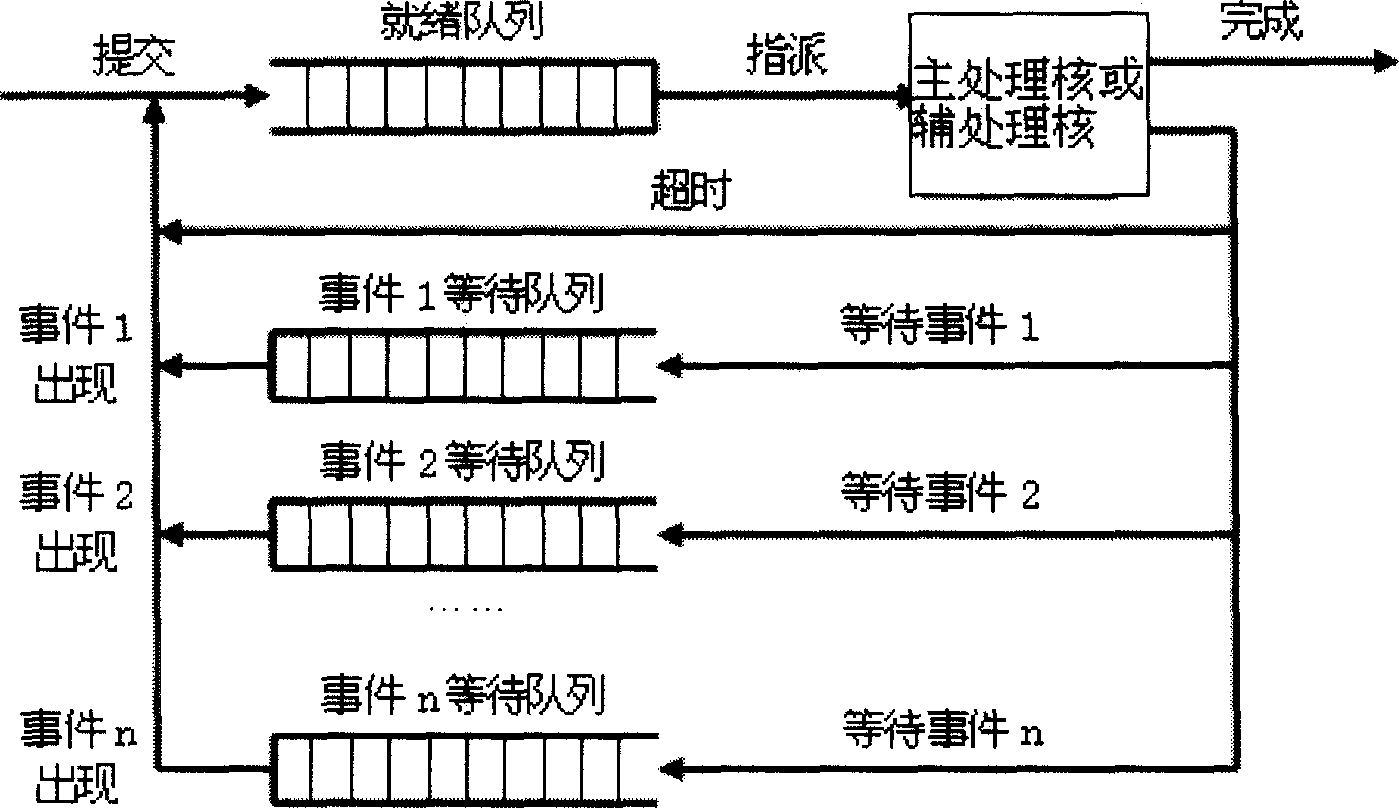

[0030] A dynamic allocation strategy is adopted: the operating system maintains a common ready queue for all processing cores, and each ready process carries a flag indicating whether it is to run on the main processing core or on an auxiliary processing core. When a processing core becomes idle, a ready process is selected from the corresponding ready queue and run on that core. The figure outlines the ready-queue model.
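One way to read the allocation strategy above in code: a single shared ready queue, a per-process flag naming the kind of core the process should run on, and a lookup performed when a core of a given kind goes idle. This is a minimal sketch; `Target`, `Process`, `ReadyQueue`, and `pick_for` are hypothetical names, and choosing the earliest matching process (FIFO) is an assumption, since the excerpt does not say how the process is selected.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum
from typing import Deque, Optional


class Target(Enum):
    MAIN = "main"
    AUXILIARY = "aux"


@dataclass
class Process:
    pid: int
    target: Target  # flag: which kind of core this ready process should run on


class ReadyQueue:
    """Common ready queue shared by all cores (hypothetical sketch)."""

    def __init__(self) -> None:
        self._queue: Deque[Process] = deque()

    def __len__(self) -> int:
        return len(self._queue)

    def enqueue(self, proc: Process) -> None:
        self._queue.append(proc)

    def pick_for(self, core_kind: Target) -> Optional[Process]:
        # Called when a core of `core_kind` goes idle: remove and return the
        # earliest ready process whose flag matches (FIFO is an assumption).
        for i, proc in enumerate(self._queue):
            if proc.target is core_kind:
                del self._queue[i]  # safe: we return before iterating further
                return proc
        return None


if __name__ == "__main__":
    rq = ReadyQueue()
    rq.enqueue(Process(1, Target.AUXILIARY))
    rq.enqueue(Process(2, Target.MAIN))
    rq.enqueue(Process(3, Target.AUXILIARY))
    print(rq.pick_for(Target.MAIN).pid)       # prints 2
    print(rq.pick_for(Target.AUXILIARY).pid)  # prints 1
```

Keeping one physical queue and filtering by flag means a process flagged for the auxiliary pool can be picked up by whichever auxiliary core frees up first, which is consistent with the load-balancing goal stated in the summary.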

[00...
