Interactive system based on CCD camera projector technology

An interactive system and projector technology, applied in the field of computer systems, that addresses the high cost of existing solutions, reducing cost and widening the scope of application.

Embodiment Construction

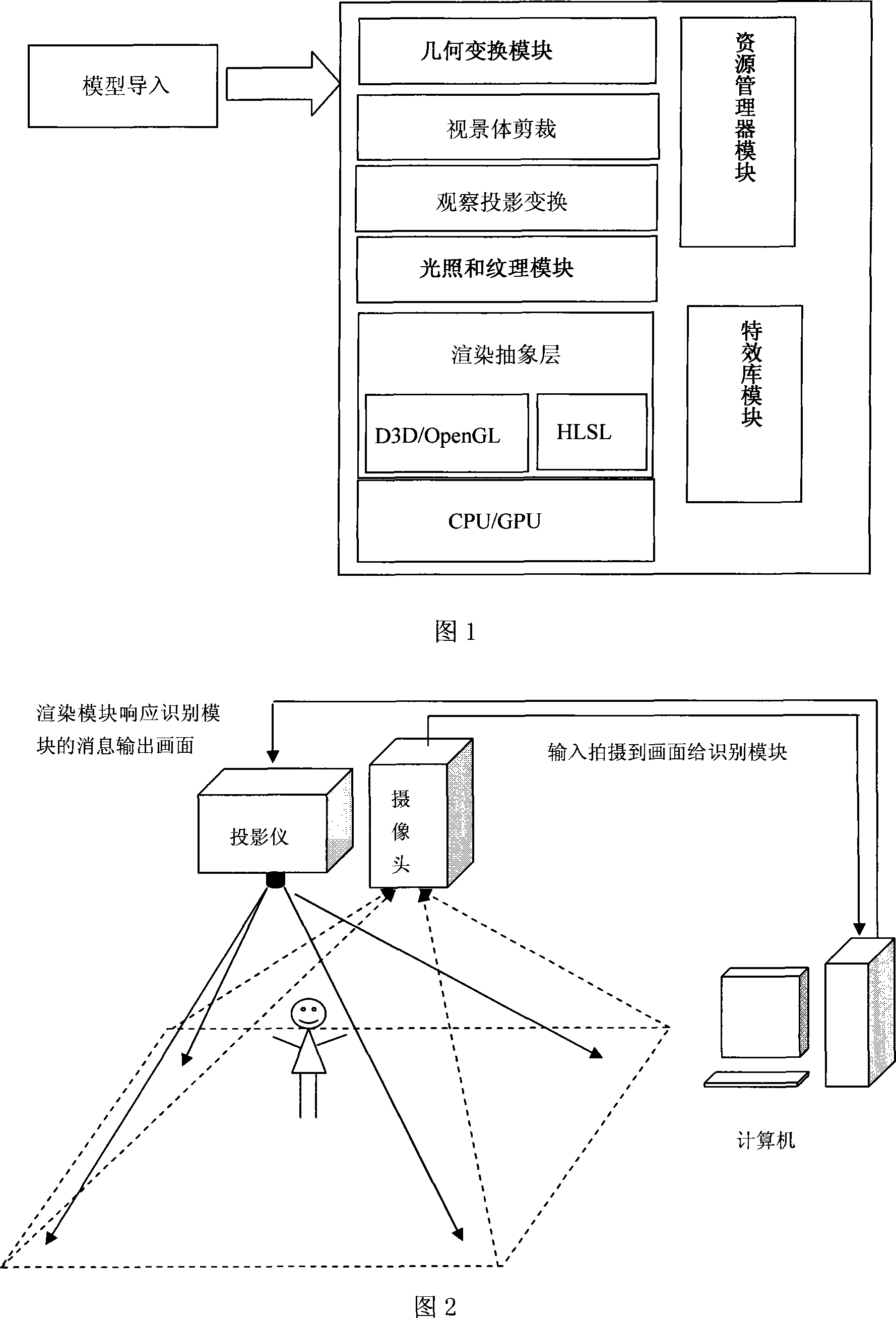

[0029] The embodiments of the present invention are described in detail below with reference to the accompanying drawings. This embodiment is implemented on the basis of the technical solution of the present invention, and a detailed implementation and specific operating procedure are given, but the scope of protection of the present invention is not limited to the embodiment described below.

[0030] As shown in FIG. 2, the system of this embodiment includes a personal computer, a projector, and an ordinary camera. The interactive identification module and the rendering module run on the computer: the interactive identification module controls the camera shown in the figure to capture an image and analyzes it, and the rendering module then controls the projector to output the picture. The camera is plugged into a USB port of the computer, and the projector is connected to the DVI interface on the graphics card of the same machine. The shooting range of the c...
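The capture, analyze, and render flow described in this paragraph can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patented method: the source does not say how the image is analyzed, so simple frame differencing against a stored background is used as a stand-in. The names `find_interaction`, `render`, and `run_once` are hypothetical, frames are plain lists of grayscale values rather than real camera data, and camera and projector coordinates are assumed to coincide (no calibration step).

```python
from typing import Callable, List, Optional, Tuple

Frame = List[List[int]]  # grayscale image as rows of 0-255 intensities


def find_interaction(background: Frame, frame: Frame,
                     threshold: int = 40) -> Optional[Tuple[int, int]]:
    """Return the (row, col) centroid of pixels that differ from the background.

    Frame differencing is an assumed stand-in; the source does not name
    the analysis method used by the interactive identification module.
    """
    row_sum = col_sum = count = 0
    for r, (bg_row, fr_row) in enumerate(zip(background, frame)):
        for c, (bg, px) in enumerate(zip(bg_row, fr_row)):
            if abs(px - bg) > threshold:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None  # nothing changed, so no interaction detected
    return row_sum // count, col_sum // count


def render(size: Tuple[int, int], hit: Optional[Tuple[int, int]]) -> Frame:
    """Build the picture to project: black, with one white pixel at the hit."""
    height, width = size
    picture = [[0] * width for _ in range(height)]
    if hit is not None:
        picture[hit[0]][hit[1]] = 255
    return picture


def run_once(capture: Callable[[], Frame], background: Frame) -> Frame:
    """One pass of the loop: capture a frame, analyze it, render the output."""
    frame = capture()
    hit = find_interaction(background, frame)
    return render((len(frame), len(frame[0])), hit)
```

In a real setup, `capture` would wrap the USB camera and the returned picture would be sent to the display output that drives the projector; both are left abstract here because the source gives no details.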

