Memory allocation, reclamation and release methods, and memory management apparatus

A memory allocation method in the field of memory management. It addresses the problem that reducing the granularity of memory blocks increases the number of buddy splits and buddy reclamations (merges), which raises time overhead. Its effects are avoiding memory oscillation, reducing split-and-merge overhead, and reducing internal fragmentation.
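The granularity trade-off can be made concrete with a little arithmetic. The sketch below is a hypothetical illustration, not taken from the patent: it assumes a classic power-of-two buddy allocator whose largest free block (`TOP_BLOCK`) is 4 MiB and, as a worst case, that the only free memory is one such block. The function names are illustrative.

```python
TOP_BLOCK = 4 * 1024 * 1024  # assumed largest free block: 4 MiB (hypothetical)


def buddy_block(request: int, min_block: int) -> int:
    """Classic buddy rounding: smallest power-of-two multiple of min_block >= request."""
    size = min_block
    while size < request:
        size *= 2
    return size


def tradeoff(request: int, min_block: int, top_block: int = TOP_BLOCK):
    """Return (block size, internal fragmentation, halvings needed from one top block)."""
    size = buddy_block(request, min_block)
    splits = (top_block // size).bit_length() - 1  # log2(top_block / size)
    return size, size - request, splits


for min_block in (4096, 64):
    print(min_block, tradeoff(100, min_block))
# 4096 (4096, 3996, 10)
# 64 (128, 28, 15)
```

For a 100-byte request, shrinking the minimum block from 4096 to 64 bytes cuts the wasted space from 3996 to 28 bytes but raises the worst-case number of splits from 10 to 15, and each split is a candidate for a later merge.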


Detailed Description of the Embodiments

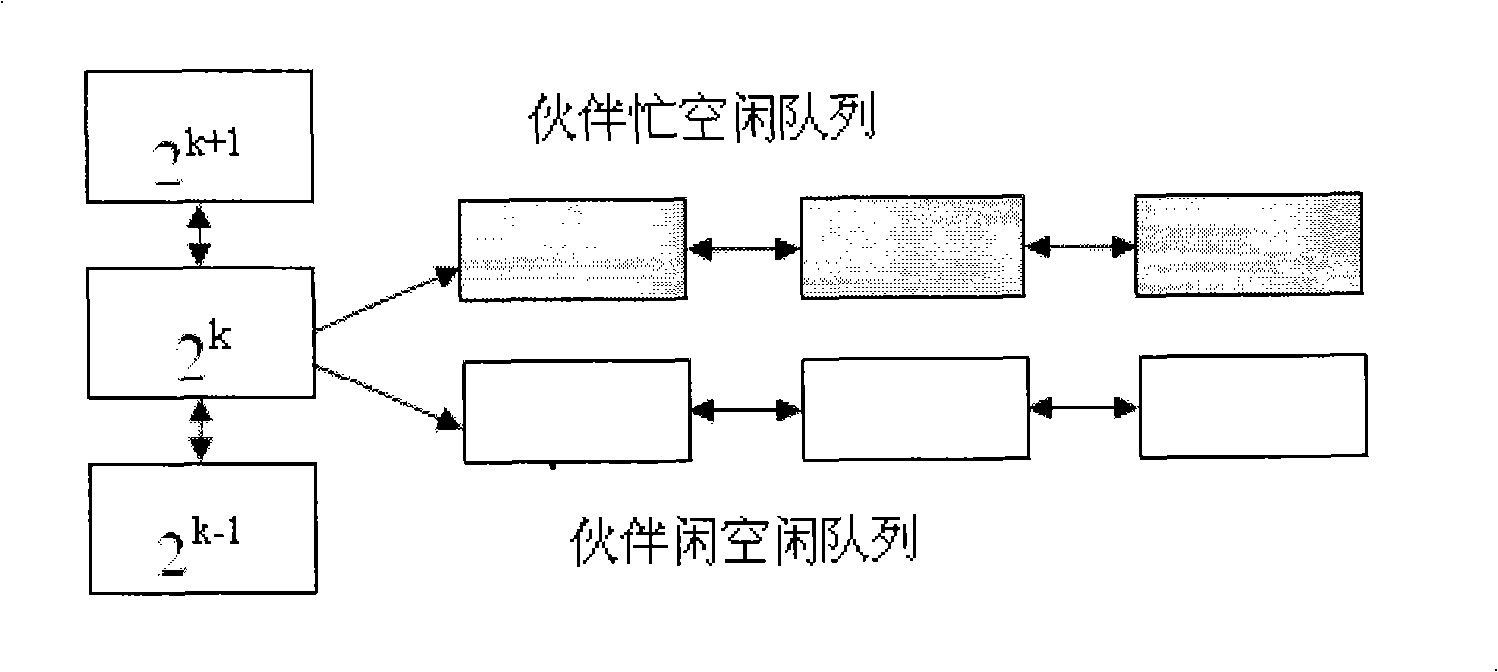

[0025] The embodiment of the present invention applies an on-demand allocation strategy to memory requests, providing flexible memory block sizes and thereby reducing internal fragmentation. It also proposes delayed merging of released memory blocks, which avoids the memory oscillation caused by frequent splitting and merging; this reduces the system's split-and-merge overhead and improves system performance.
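Delayed merging can be illustrated with a minimal sketch, assuming a power-of-two buddy arena and assuming that merges are deferred until an allocation cannot otherwise be satisfied. That trigger is a lazy-coalescing choice made for illustration only; this excerpt does not state the patent's actual merge condition. The class `LazyBuddy` and its methods are hypothetical, and the sketch uses classic power-of-two rounding rather than on-demand sizing.

```python
class LazyBuddy:
    """Power-of-two buddy allocator that defers merging of released blocks.

    Hypothetical illustration: merges run only when an allocation cannot be
    served from the existing free queues.
    """

    def __init__(self, total: int, min_block: int):
        # Both sizes must be powers of two so that `off ^ size` finds a buddy.
        assert total & (total - 1) == 0 and min_block & (min_block - 1) == 0
        self.total, self.min_block = total, min_block
        self.free = {total: {0}}  # block size -> offsets of free blocks
        self.allocated = {}  # offset -> block size
        self.splits = self.merges = 0

    def _size_for(self, request: int) -> int:
        size = self.min_block
        while size < request:
            size *= 2
        return size

    def _take(self, size: int):
        """Pop a free block of `size`, splitting a larger one if necessary."""
        s = size
        while s <= self.total and not self.free.get(s):
            s *= 2
        if s > self.total:
            return None
        off = self.free[s].pop()
        while s > size:  # halve, keeping the upper half free
            s //= 2
            self.free.setdefault(s, set()).add(off + s)
            self.splits += 1
        return off

    def alloc(self, request: int) -> int:
        size = self._size_for(request)
        off = self._take(size)
        if off is None:  # only now pay for the deferred merges
            self.coalesce()
            off = self._take(size)
            if off is None:
                raise MemoryError(f"cannot allocate {request} bytes")
        self.allocated[off] = size
        return off

    def release(self, off: int) -> None:
        size = self.allocated.pop(off)
        self.free.setdefault(size, set()).add(off)  # queued as-is, no merge

    def coalesce(self) -> None:
        """Merge every free buddy pair, from the smallest size upward."""
        size = self.min_block
        while size < self.total:
            blocks = self.free.get(size, set())
            for off in sorted(blocks):
                buddy = off ^ size
                if off in blocks and buddy in blocks:
                    blocks.discard(off)
                    blocks.discard(buddy)
                    self.free.setdefault(2 * size, set()).add(off & ~size)
                    self.merges += 1
            size *= 2


heap = LazyBuddy(total=1024, min_block=64)
for _ in range(100):  # alloc/free cycle that makes an eager buddy oscillate
    heap.release(heap.alloc(50))
print(heap.splits, heap.merges)  # 4 0
```

With eager merging, every cycle of that loop would cost four splits and four merges (400 of each); with deferral, the blocks split in the first cycle are simply reused. The price is that free memory stays fragmented until `coalesce` runs, which is why the sketch triggers it on allocation failure.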

[0026] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings.

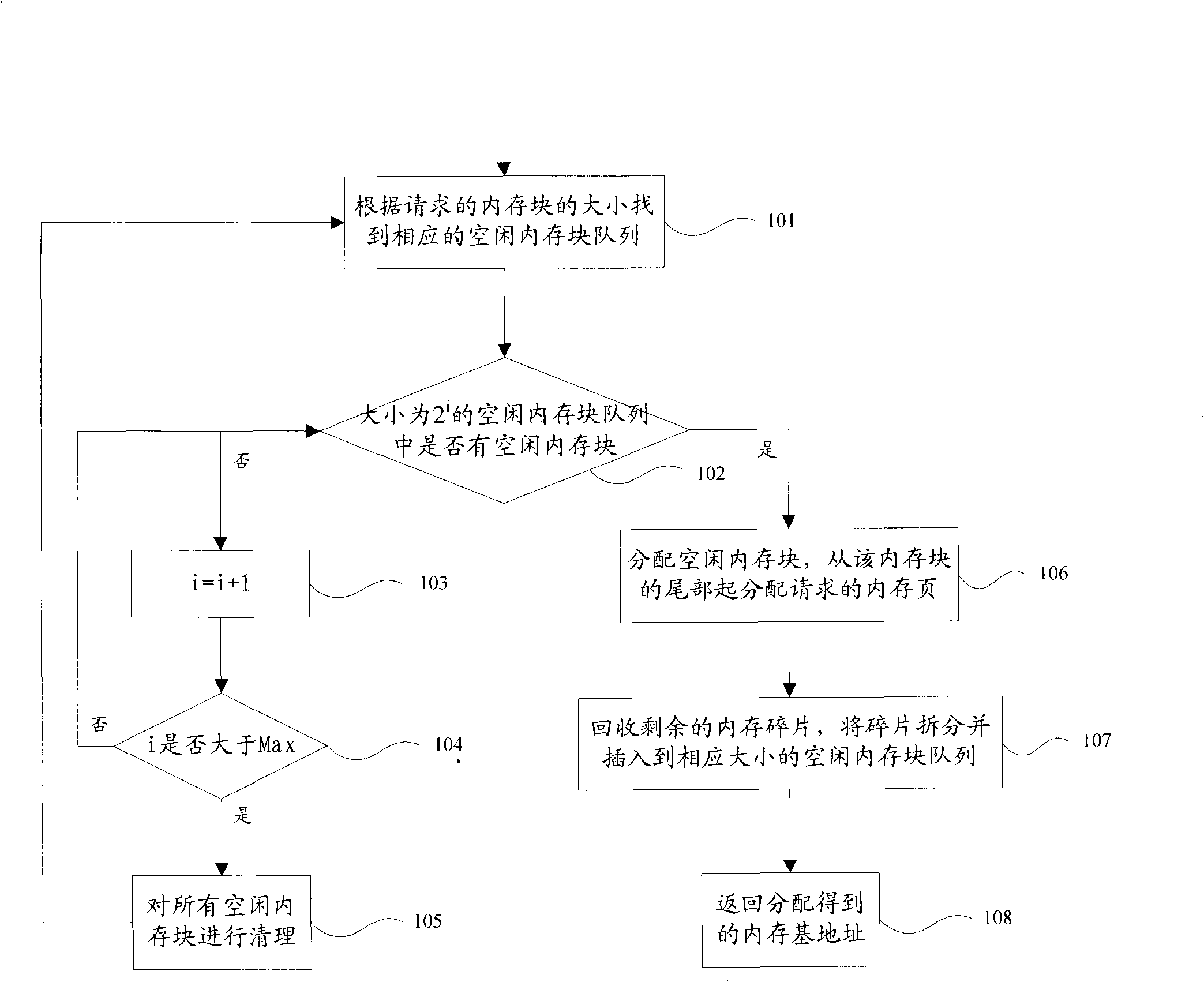

[0027] The core idea of memory allocation is to allocate memory on demand: the internal fragment left over after an allocation is split off and inserted into the free queue of memory blocks of the corresponding size. The procedure of this method is shown in Figure 1:

[0028] Step 101, a...
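The on-demand idea in [0027] can be sketched as follows, under stated assumptions: memory is counted in fixed units (1 KiB here), the allocation is placed at the low end of an aligned power-of-two free block, and the unused tail is cut into aligned power-of-two pieces, each appended to the free queue for its size. The unit size, placement rule, and function names are hypothetical; the step-by-step procedure beginning at Step 101 is truncated in this excerpt, and the sketch covers only the allocation side.

```python
from collections import defaultdict


def split_remainder(base: int, block: int, used: int) -> list[tuple[int, int]]:
    """Cut the unused tail [base+used, base+block) of a free power-of-two block
    into aligned power-of-two pieces, returned as (offset, size) pairs in units.
    `base` must be a multiple of `block` so that every piece stays aligned."""
    pieces = []
    rel = used  # position of the tail relative to the block start
    while rel < block:
        size = rel & -rel  # largest power of two that `rel` is aligned to
        pieces.append((base + rel, size))
        rel += size
    return pieces


def allocate_on_demand(request: int, unit: int, base: int, block: int, free_queues):
    """Serve `request` bytes with exactly ceil(request / unit) units taken from the
    free block at `base` (sizes in units) and queue each leftover piece by size."""
    used = -(-request // unit)  # round up to whole units, not to a power of two
    if not 0 < used <= block:
        raise ValueError("request does not fit in the chosen block")
    for off, size in split_remainder(base, block, used):
        free_queues[size].append(off)
    return base, used


queues = defaultdict(list)  # block size in units -> free offsets
print(allocate_on_demand(5000, 1024, 0, 8, queues))  # (0, 5)
print(dict(queues))  # {1: [5], 2: [6]}
```

Here a 5000-byte request occupies 5 units (5120 bytes), wasting 120 bytes instead of the 3192 bytes lost if the whole 8-unit block were handed out; the 1-unit and 2-unit leftovers remain available in their own free queues.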
