Method and system for extracting and correlating video objects of interest

A technology concerning video and objects of interest, applied in video data retrieval, metadata-based video data retrieval, image data processing, and related fields. It addresses the problems that value-added information is not personalized to the user, cannot meet user preferences, and has a low degree of correlation with the video content. The stated effects are that the results are easy to understand and explore, are highly correlated with the video content, and suit a wide range of application scenarios.


Embodiment Construction

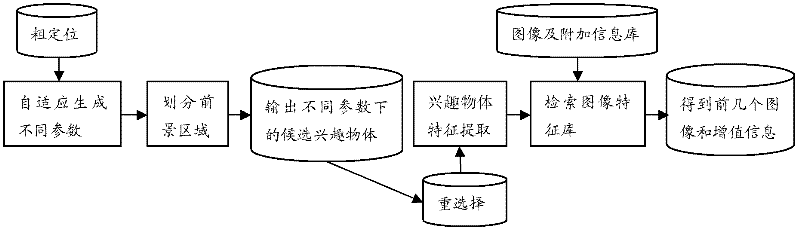

[0035] Figure 1 shows a rendering of the method for extracting and associating video objects of interest adopted in an embodiment of the present invention. Various aspects of the present invention are described in detail below through specific embodiments and with reference to the accompanying drawings.

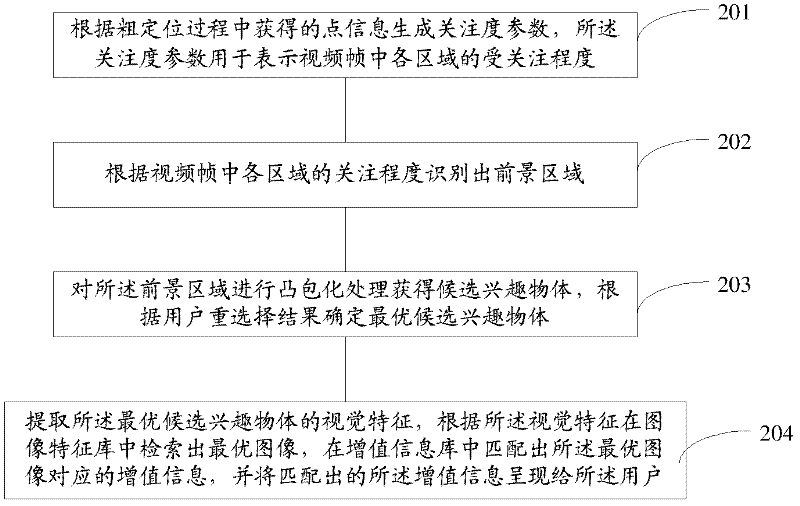

[0036] As shown in Figure 2, a method for extracting and associating video objects of interest provided by an embodiment of the present invention includes:

[0037] Step 201: Generate an attention-degree parameter from the point information obtained during rough positioning; the attention-degree parameter indicates the degree of attention paid to each area of the video frame;

[0038] Step 202: Identify the foreground area according to the degree of attention of each area in the video frame;

[0039] Step 203: Perform convex hull processing on the foreground area to obtain candidate objects of interest, and determine the optimal ...
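The three steps above can be illustrated with a minimal sketch. It is hypothetical and not the patented implementation: the text does not say how the attention-degree parameter is computed, so the sketch assumes the rough-positioning point information is a list of (x, y) pixel coordinates and models attention as a peak-normalised sum of Gaussians centred on those points. Thresholding for the foreground, grouping foreground pixels into 4-connected regions (one candidate per region), and Andrew's monotone-chain convex hull are likewise assumptions. The function names `attention_map`, `foreground_mask`, `connected_regions`, `convex_hull` and `candidate_objects`, and the parameters `sigma` and `threshold`, are all hypothetical.

```python
import math
from collections import deque


def attention_map(width, height, points, sigma=5.0):
    """Step 201 (assumed model): attention degree per pixel as a sum of
    isotropic Gaussians centred on the rough-positioning points,
    normalised so the maximum value is 1."""
    grid = [[0.0] * width for _ in range(height)]
    for px, py in points:
        for y in range(height):
            for x in range(width):
                d2 = (x - px) ** 2 + (y - py) ** 2
                grid[y][x] += math.exp(-d2 / (2 * sigma ** 2))
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


def foreground_mask(att, threshold=0.5):
    """Step 202 (assumed rule): a pixel is foreground when its attention >= threshold."""
    return [[v >= threshold for v in row] for row in att]


def connected_regions(mask):
    """Group foreground pixels into 4-connected regions via breadth-first search."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                seen[y][x] = True
                queue, region = deque([(x, y)]), []
                while queue:
                    cx, cy = queue.popleft()
                    region.append((cx, cy))
                    for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                        if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
                regions.append(region)
    return regions


def convex_hull(pts):
    """Step 203 (assumed algorithm): Andrew's monotone chain. Returns the hull
    vertices in boundary order; collinear edge points are dropped."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other chain.
    return lower[:-1] + upper[:-1]


def candidate_objects(width, height, points, sigma=5.0, threshold=0.5):
    """Chain the three steps: one convex-hull candidate per foreground region."""
    att = attention_map(width, height, points, sigma)
    mask = foreground_mask(att, threshold)
    return [convex_hull(region) for region in connected_regions(mask)]
```

For instance, `candidate_objects(40, 30, [(10, 10), (30, 20)], sigma=3.0)` yields two separate candidates, each an octagonal hull around one of the points. Selecting the optimal candidate, which the truncated Step 203 goes on to describe, is not modelled here.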
