Multi-robot combined target searching method of animal-simulated space cognition

A target search and spatial cognition technology, applied to robot positioning and path planning, that addresses problems such as inaccurate sensors, a lack of reference objects for robots, and difficult positioning, thereby expanding the scope of application and improving work efficiency and accuracy.

Embodiment Construction

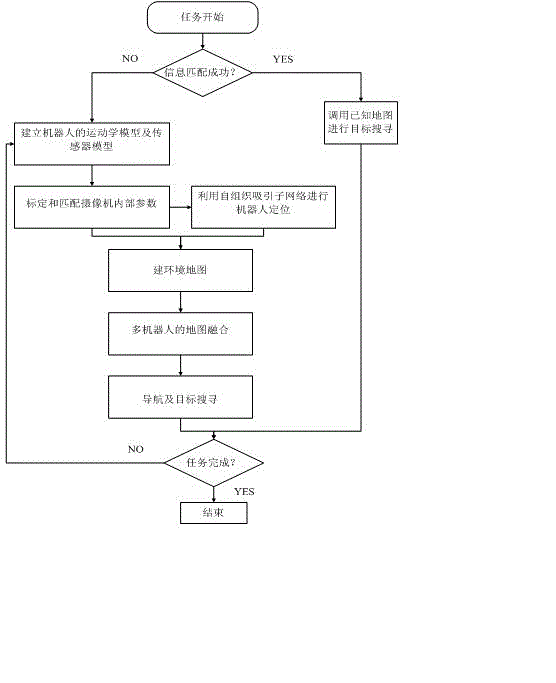

[0021] In order to make the technical means, creative features, goals and effects achieved by the present invention easy to understand, the present invention will be further described below in conjunction with specific embodiments.

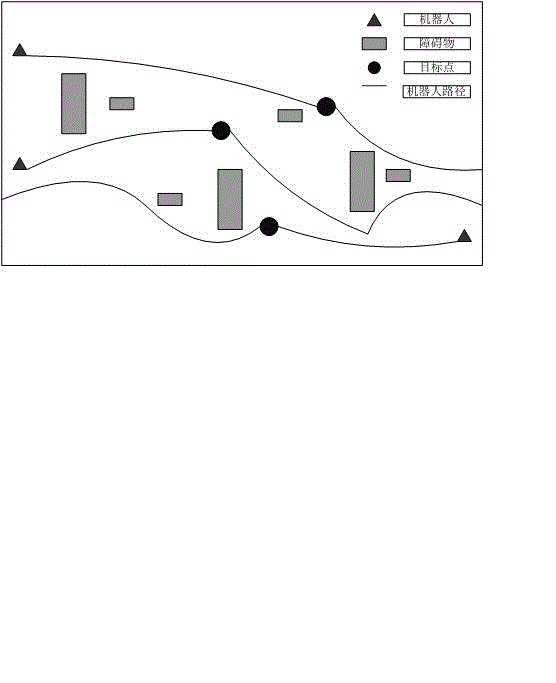

[0022] As shown in Figure 1, the hardware block diagram of this embodiment includes multiple robots, odometers, cameras, wireless communication systems, and storage devices. The odometer, cameras, wireless communication system, and storage device are all mounted on the robot. In the multi-robot system, each robot is treated as an intelligent agent, and each robot carries an odometer, two cameras, wireless communication equipment, and a storage device. The robot tracks its own position with the odometer, uses the two cameras to collect real-time images of the environment, and uses the wireless communication system to send its stored map information to companion robots and to receive map information from the companion robo...
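
The per-robot arrangement described in [0022] can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Robot` class, the grid-cell map keyed by rounded coordinates, and the merge rule (a robot keeps its own entry when both maps cover the same cell) are all assumptions made for illustration, and camera processing is reduced to a hypothetical `observe` call that stores a label.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Robot:
    """Hypothetical agent: odometry pose plus a locally stored map."""
    name: str
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0  # radians
    local_map: dict = field(default_factory=dict)  # (cell_x, cell_y) -> label

    def update_odometry(self, distance: float, turn: float) -> None:
        # Dead reckoning: apply the turn, then advance along the new heading.
        self.heading = (self.heading + turn) % (2 * math.pi)
        self.x += distance * math.cos(self.heading)
        self.y += distance * math.sin(self.heading)

    def observe(self, label: str) -> None:
        # Stand-in for camera processing: label the cell the robot occupies.
        cell = (round(self.x), round(self.y))
        self.local_map[cell] = label

    def send_map(self) -> dict:
        # Stand-in for the wireless link: hand over a copy of the stored map.
        return dict(self.local_map)

    def receive_map(self, other_map: dict) -> None:
        # Assumed merge rule: keep own entries, add cells only the peer has seen.
        for cell, label in other_map.items():
            self.local_map.setdefault(cell, label)


robot_a = Robot("a")
robot_b = Robot("b")

robot_a.update_odometry(2.0, 0.0)
robot_a.observe("free")

robot_b.update_odometry(3.0, math.pi / 2)
robot_b.observe("landmark")

# Exchange maps in both directions.
robot_a.receive_map(robot_b.send_map())
robot_b.receive_map(robot_a.send_map())
```

After the exchange, both robots hold the union of the two maps, which is the point of sharing: each robot can use cells it has never visited itself.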
