Compression and decompression method based on a hardware accelerator card on a distributed file system

A distributed file system and hardware acceleration technology, applied in special data processing applications, instruments, electrical digital data processing, etc. It addresses problems such as heavy CPU resource usage and the resulting decline in system processing capacity, and achieves the effect of improving effective bandwidth.

Examples

Embodiment 1

[0043] This embodiment implements a prototype of hardware-accelerator-card-based compression on top of Apache HDFS (Hadoop Distributed File System). HDFS is an open-source implementation of Google's GFS and is the basis of various projects in the Hadoop ecosystem.

[0044] HDFS upper-layer applications use clients to write or read files. A file in HDFS is divided into multiple file blocks of the same size; only the last file block may be smaller than the others. Different file blocks belonging to the same file may be stored on different data nodes, and each file block has three replicas across the data nodes.
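The block layout described in [0044] can be illustrated with a minimal sketch. The function names `split_into_blocks` and `place_replicas` are hypothetical, and the round-robin placement is only an assumption made for illustration; it is not the placement policy HDFS actually uses.

```python
from typing import List, Tuple


def split_into_blocks(file_size: int, block_size: int) -> List[Tuple[int, int]]:
    """Return (offset, length) for each file block; only the last may be shorter."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def place_replicas(block_index: int, data_nodes: List[str], replication: int = 3) -> List[str]:
    """Pick `replication` distinct nodes for a block.

    Round-robin choice is an illustrative assumption, not HDFS's real policy.
    """
    if replication > len(data_nodes):
        raise ValueError("not enough data nodes for the requested replication")
    return [data_nodes[(block_index + i) % len(data_nodes)] for i in range(replication)]


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]
    for index, (offset, length) in enumerate(split_into_blocks(300, 128)):
        print(index, offset, length, place_replicas(index, nodes))
```

With a 300-byte file and a 128-byte block size, the sketch yields two full blocks and a final 44-byte block, each assigned to three distinct nodes.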

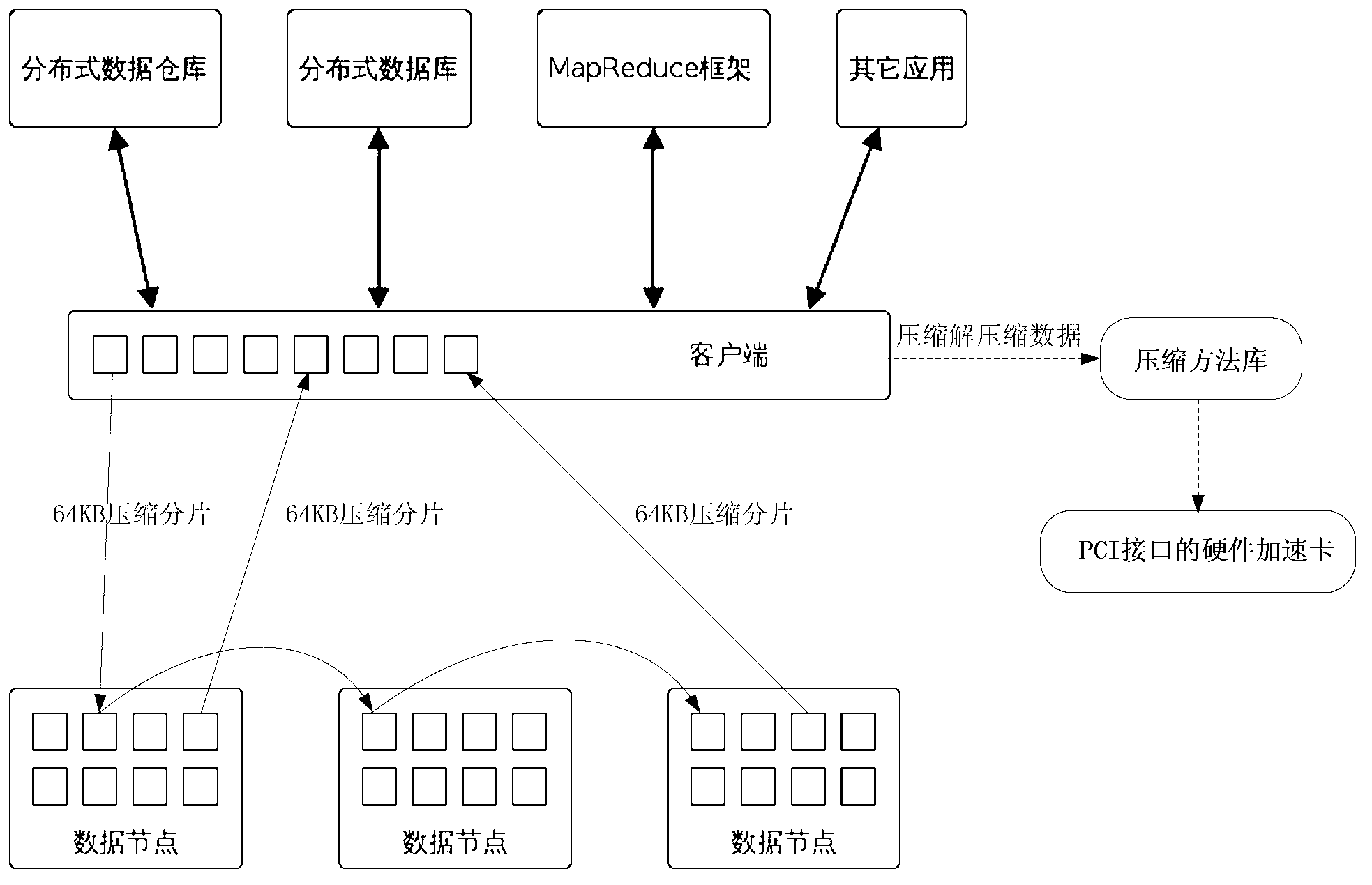

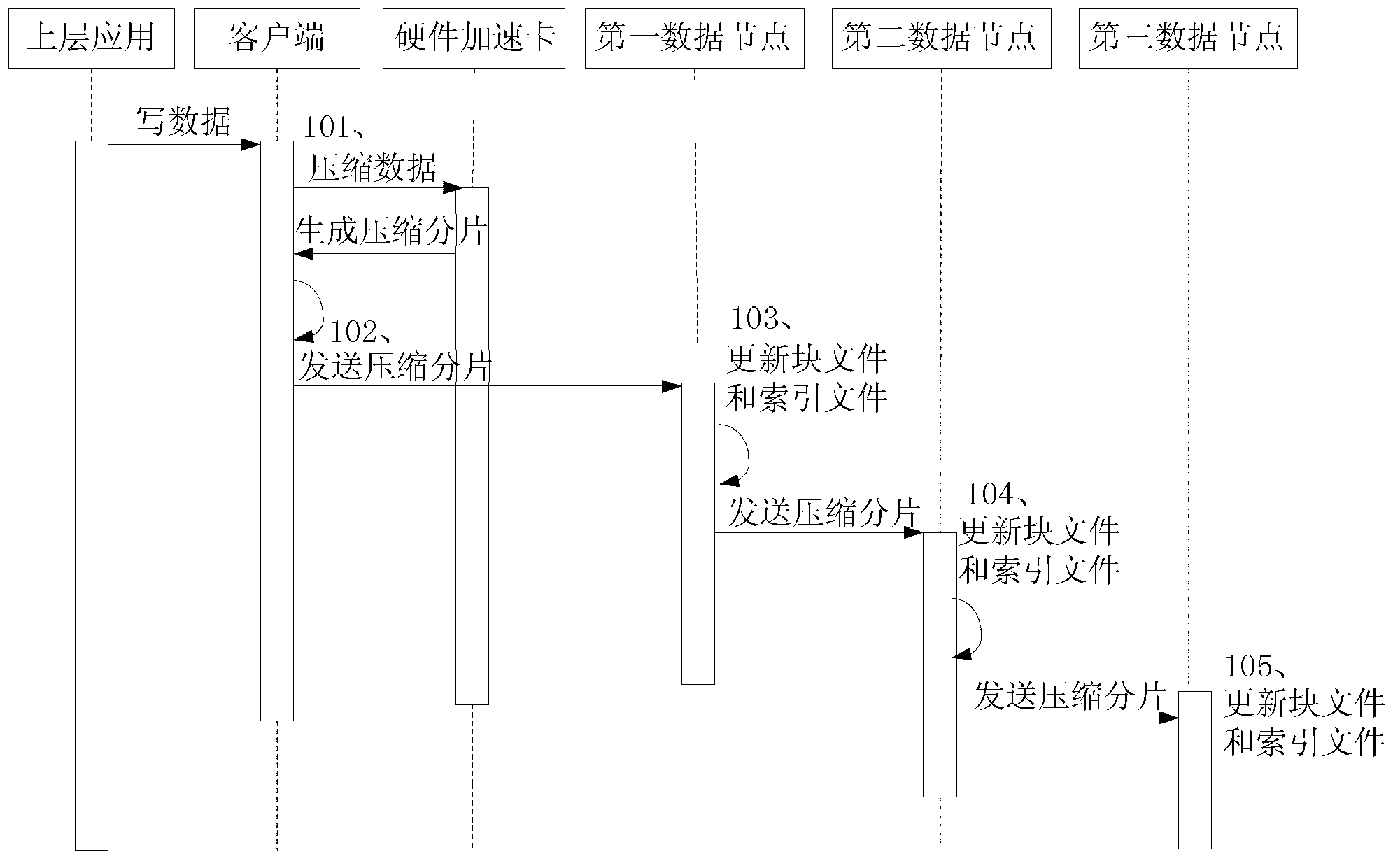

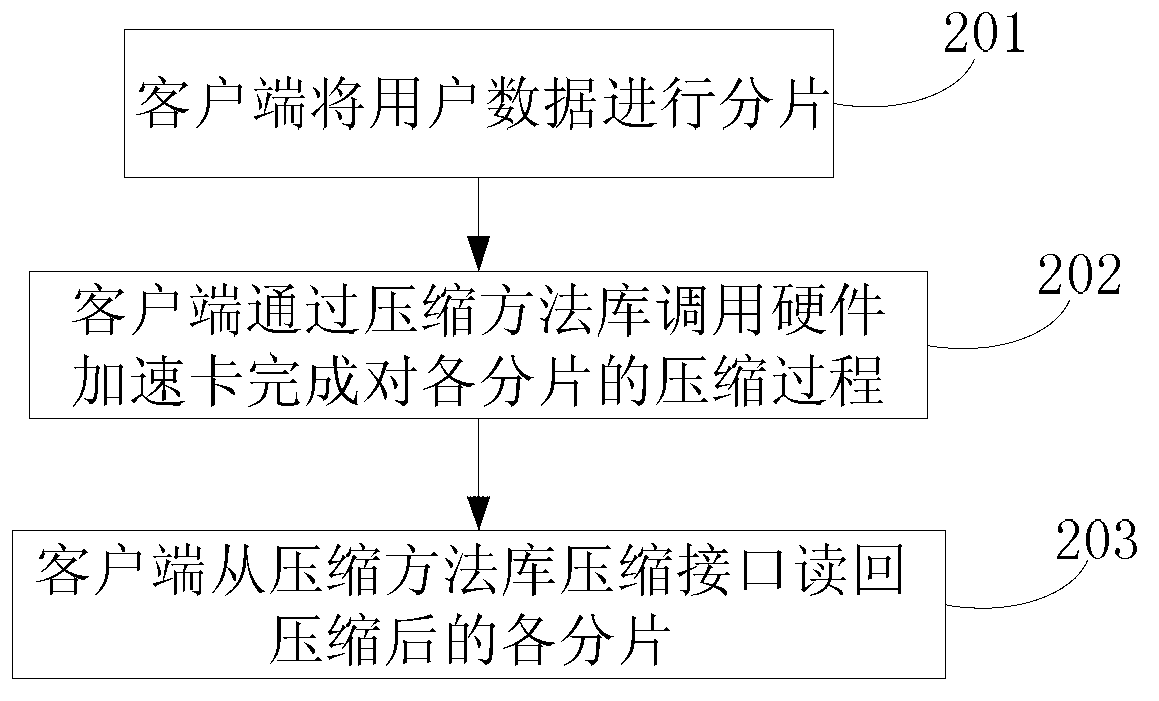

[0045] See Figure 1, a system structure diagram of a compression and decompression method based on a hardware accelerator card on a distributed file system. In this embodiment, file blocks are compressed or decompressed by invoking a hardware accelerator card, and the compression or decompres...
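The idea of compressing and decompressing file blocks through a pluggable codec might be sketched as follows. Everything here is an assumption for illustration: the `BlockCodec` interface, the `write_block` and `read_block` helpers, and above all `ZlibStandInCodec`, which uses software zlib in place of the accelerator card, whose driver interface is not shown in the text.

```python
import zlib
from typing import Protocol


class BlockCodec(Protocol):
    """Hypothetical interface that an accelerator-backed codec could expose."""

    def compress(self, data: bytes) -> bytes: ...

    def decompress(self, data: bytes) -> bytes: ...


class ZlibStandInCodec:
    """Software stand-in (assumption): a real deployment would call the card's driver."""

    def compress(self, data: bytes) -> bytes:
        return zlib.compress(data)

    def decompress(self, data: bytes) -> bytes:
        return zlib.decompress(data)


def write_block(codec: BlockCodec, block: bytes) -> bytes:
    """Compress one file block before it is stored."""
    return codec.compress(block)


def read_block(codec: BlockCodec, stored: bytes) -> bytes:
    """Decompress one stored file block before it is returned to the reader."""
    return codec.decompress(stored)


if __name__ == "__main__":
    codec = ZlibStandInCodec()
    block = b"hadoop " * 1000
    stored = write_block(codec, block)
    print(len(block), len(stored), read_block(codec, stored) == block)
```

Keeping the codec behind an interface means the write and read paths do not need to know whether compression runs on the CPU or on the card.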

Embodiment 2

[0073] See Figure 6, a schematic diagram of the system structure of another compression and decompression method based on a hardware accelerator card in a distributed system. This embodiment is a further extension of Embodiment 1. In addition to being used on HDFS clients and data nodes, the hardware accelerator card can also be used by upper-layer applications. HDFS upper-layer applications, such as distributed databases, distributed data warehouses, MapReduce frameworks, and other applications that require data storage, can call the hardware accelerator card independently to compress or decompress data in the form of data streams. The processed data can then be stored in the distributed file system or a local file system, transmitted over the network, or used for other purposes. The compression method library drives the hardware accelerator card through the driver program to compress or decompress, and can create data input streams and output streams. The stream ...
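Stream-oriented compression of the kind described in [0073] could look roughly like the sketch below. It is not the patent's compression method library: `compress_stream` and `decompress_stream` are hypothetical names, and Python's software `zlib` streaming objects stand in for the accelerator card and its driver.

```python
import io
import zlib
from typing import BinaryIO


def compress_stream(src: BinaryIO, dst: BinaryIO, chunk_size: int = 64 * 1024) -> None:
    """Read src in chunks and write compressed data to dst.

    zlib is a software stand-in (assumption) for the accelerator card.
    """
    compressor = zlib.compressobj()
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(compressor.compress(chunk))
    dst.write(compressor.flush())  # emit any data still buffered


def decompress_stream(src: BinaryIO, dst: BinaryIO, chunk_size: int = 64 * 1024) -> None:
    """Read compressed data from src in chunks and write the original bytes to dst."""
    decompressor = zlib.decompressobj()
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(decompressor.decompress(chunk))
    dst.write(decompressor.flush())


if __name__ == "__main__":
    original = b"record-0001;" * 50000
    compressed = io.BytesIO()
    compress_stream(io.BytesIO(original), compressed)
    restored = io.BytesIO()
    decompress_stream(io.BytesIO(compressed.getvalue()), restored)
    print(len(original), len(compressed.getvalue()), restored.getvalue() == original)
```

Because both functions take generic binary streams, the destination could equally be a local file, a network socket wrapper, or a distributed file system output stream.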
