Virtual-real occlusion handling method based on virtual model preprocessing in an augmented reality system

A virtual-real occlusion technique based on virtual model preprocessing, applied in image data processing, 3D image processing, instruments, and related fields. It addresses the problems of heavy computation and unsatisfactory results, meeting real-time requirements while achieving a good virtual-real occlusion effect.


Embodiment Construction

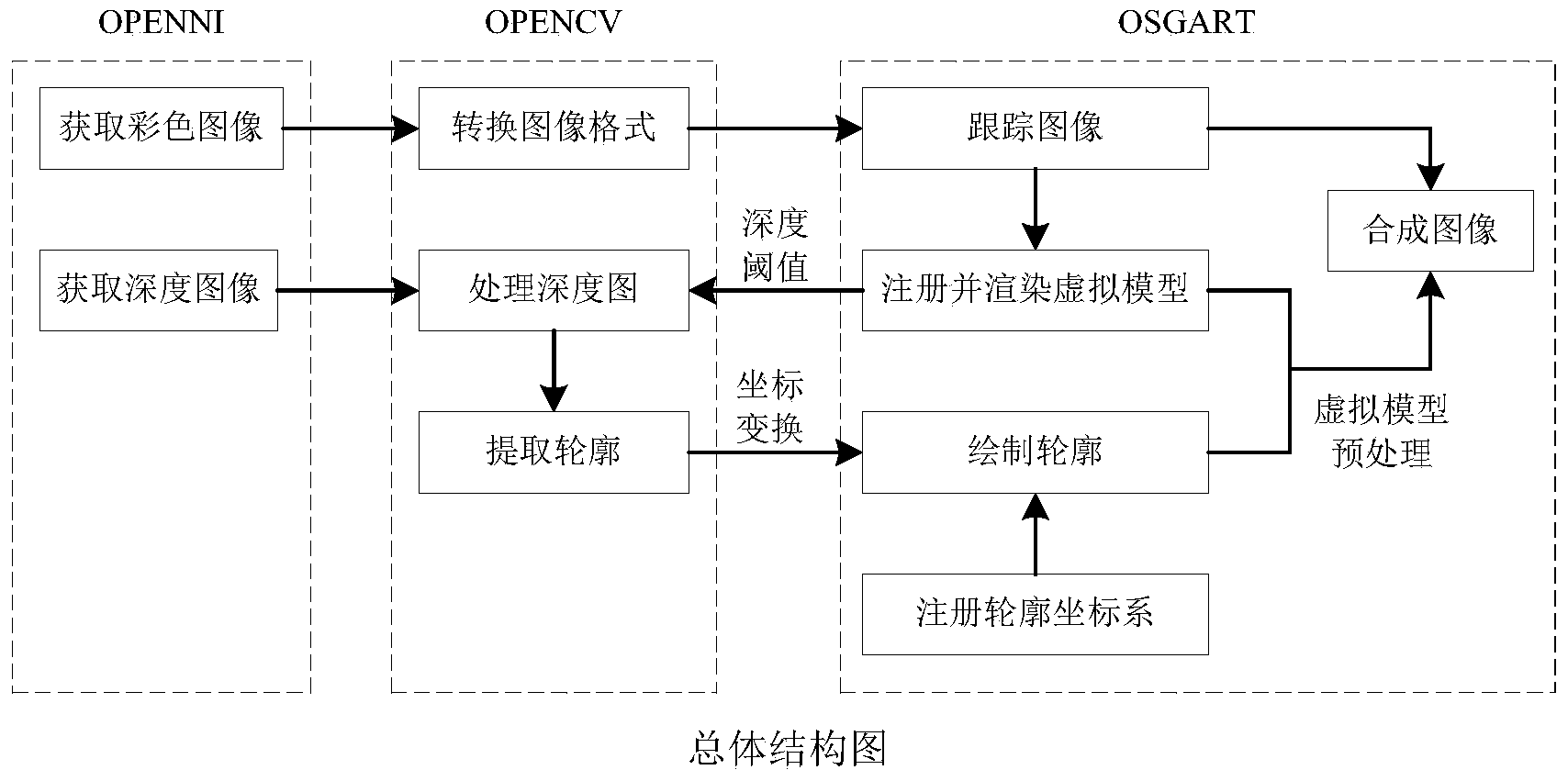

[0020] The present invention is a virtual-real occlusion processing method based on virtual model preprocessing in an augmented reality system. It handles virtual-real occlusion in augmented reality by registering the extracted occluder contour into the scene in which the virtual model is rendered and drawing it faithfully.

[0021] As shown in the accompanying drawings, the overall steps of the method are: using a Kinect depth camera to capture a color image of the scene and a grayscale image representing the depth information; converting the color image into a bitmap that the augmented reality virtual-real occlusion system can recognize and track, and registering the virtual model in three dimensions; then, using the 3D registration position of the virtual model together with the depth of the virtual model itself, thresholding the grayscale image representing the depth information and extracting the outer contour of the real ob...
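The thresholding and contour step described above can be illustrated with a minimal sketch. It is not the patent's implementation: the function names `occluder_mask` and `boundary_pixels` are hypothetical, and the depth encoding is an assumption (smaller gray value means closer to the camera, and 0 means no depth reading), since the text does not state it. The sketch also collects every boundary pixel of the thresholded region with a 4-neighbour test rather than tracing a single ordered outer contour.

```python
from typing import List, Set, Tuple

Image = List[List[int]]
Mask = List[List[bool]]


def occluder_mask(depth: Image, model_depth: int) -> Mask:
    """Threshold the depth image against the virtual model's depth.

    Assumption (not stated in the source): smaller gray value = closer to the
    camera, and 0 = no depth reading. A pixel is marked True when a real
    object lies in front of the virtual model at that pixel.
    """
    return [[0 < d < model_depth for d in row] for row in depth]


def boundary_pixels(mask: Mask) -> Set[Tuple[int, int]]:
    """Return (row, col) of mask pixels that touch a non-mask pixel or the image edge."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    boundary: Set[Tuple[int, int]] = set()
    for r in range(height):
        for c in range(width):
            if not mask[r][c]:
                continue
            # 4-neighbour test: a pixel is on the boundary if any neighbour
            # is outside the image or outside the mask.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < height and 0 <= nc < width)
                if outside or not mask[nr][nc]:
                    boundary.add((r, c))
                    break
    return boundary


# Toy 5x5 depth image: background at gray value 200, a 3x3 real object at 50.
depth = [[200] * 5 for _ in range(5)]
for r in range(1, 4):
    for c in range(1, 4):
        depth[r][c] = 50

# Hypothetical depth of the registered virtual model at these pixels.
mask = occluder_mask(depth, model_depth=120)
contour = boundary_pixels(mask)
```

In a real pipeline the threshold would come from the 3D registration position and the model's own depth, and a library routine such as OpenCV's `cv2.findContours` would typically replace the hand-written boundary test.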
