Multi-channel speech enhancement method based on an auditory perception model

A technology in the fields of auditory perception and speech enhancement, applied in speech analysis, hearing aids, instruments, etc. It addresses problems such as phase distortion and the inability to be implemented in real time, and achieves the effects of overcoming phase distortion, ensuring good signal reconstruction, and keeping computational complexity low.

Detailed Description of the Embodiments

[0043] The technical solutions of the present invention will be described in further detail below with reference to the accompanying drawings and embodiments.

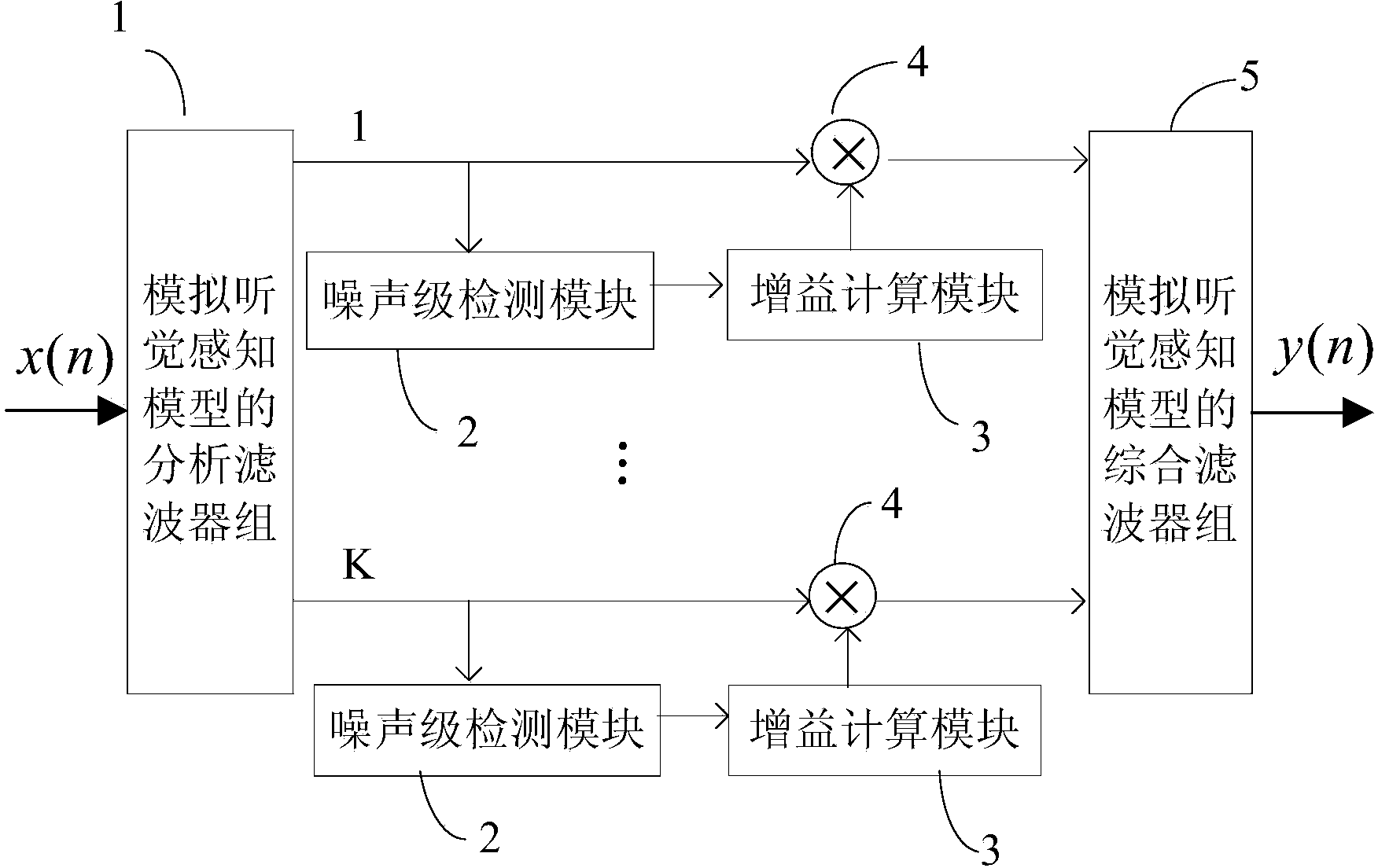

[0044] The multi-channel speech enhancement method based on an auditory perception model according to the embodiment of the present invention can be applied to digital hearing aids. In this embodiment, a weighted overlap-add structure is combined with an all-pass transformation in the signal analysis stage. This gives high efficiency and allows real-time implementation, and it simulates the frequency resolution of the human ear with a small number of channels. An inverse all-pass transformation is added in the signal synthesis stage to solve the problem of phase distortion.
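The idea that an all-pass transformation lets a few channels mimic the ear's frequency resolution can be illustrated with first-order all-pass frequency warping, in which every unit delay z^-1 is replaced by A(z) = (z^-1 - λ)/(1 - λz^-1). This is a minimal sketch under stated assumptions: the excerpt does not give the exact form of the transformation, the warping coefficient, the sampling rate, or the channel count, so `LAM = 0.5`, `FS = 16000.0` and the 8 channels below are hypothetical, as are the function names. The weighted overlap-add ("weighted splicing and adding") filter bank itself is not shown; the code only computes where bands of equal width on the warped axis land on the linear frequency axis.

```python
import math

# Hypothetical parameters (not given in the patent excerpt).
LAM = 0.5      # all-pass warping coefficient, 0 < LAM < 1 stretches low frequencies
FS = 16000.0   # sampling rate in Hz


def warp(omega: float, lam: float) -> float:
    """Warped frequency (rad/sample) seen at linear frequency omega when every
    unit delay z^-1 is replaced by A(z) = (z^-1 - lam) / (1 - lam z^-1)."""
    return omega + 2.0 * math.atan(lam * math.sin(omega) / (1.0 - lam * math.cos(omega)))


def unwarp(theta: float, lam: float) -> float:
    """Inverse mapping: a first-order all-pass warp is undone by the warp with -lam."""
    return warp(theta, -lam)


def channel_centres_hz(n_channels: int, lam: float, fs: float) -> list[float]:
    """Linear-frequency centres (Hz) of n_channels bands of equal width on the
    warped axis, i.e. what a uniform filter bank run on the warped signal yields."""
    centres = []
    for k in range(n_channels):
        theta = (k + 0.5) * math.pi / n_channels  # band centre on the warped axis
        centres.append(unwarp(theta, lam) * fs / (2.0 * math.pi))
    return centres


if __name__ == "__main__":
    for k, f in enumerate(channel_centres_hz(8, LAM, FS)):
        print(f"channel {k}: {f:7.1f} Hz")
```

With these assumed values the warped half-band point θ = π/2 maps to arctan(3/4) rad, about 1.64 kHz, so four of the eight channels cover 0 to 1.64 kHz and the other four share 1.64 to 8 kHz: narrow bands at low frequencies and wide bands at high frequencies, which is the qualitative behaviour of auditory resolution.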

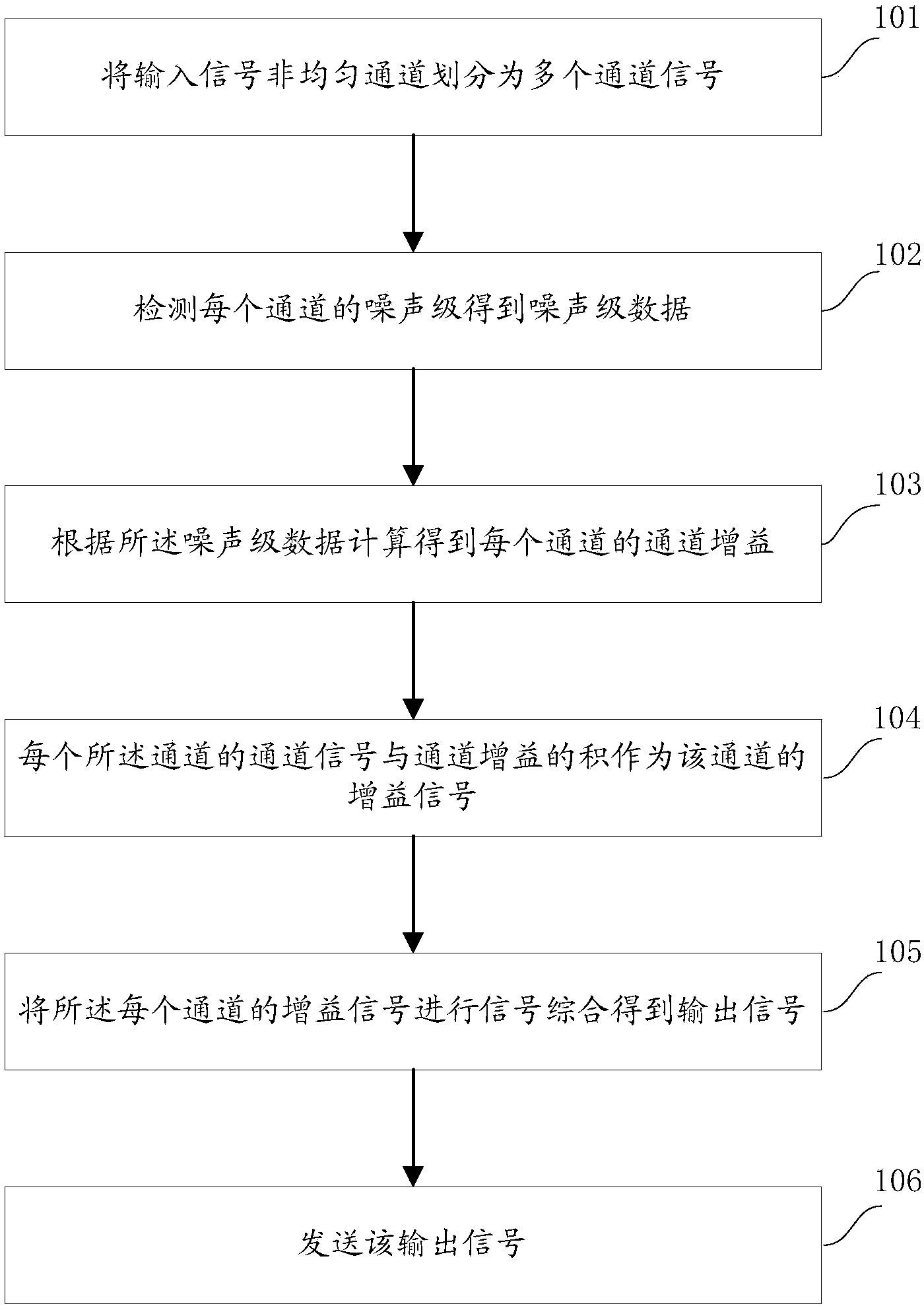
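Paragraph [0044] states that an inverse all-pass transformation in the synthesis stage removes phase distortion, but the excerpt does not say how that inverse is realized. The sketch below only illustrates why the phase of a first-order all-pass section A(z) = (z^-1 - λ)/(1 - λz^-1) can be cancelled at all: since A(z)·A(1/z) = 1, running the same section over the time-reversed output restores the input exactly. The coefficient `LAM = 0.5` and the helper names are hypothetical. This forward-backward scheme is offline and is not claimed to be the patent's real-time method; a real-time system would need a block-based or approximate inverse with some added delay.

```python
# Hypothetical warping coefficient; the patent excerpt does not give one.
LAM = 0.5


def allpass(x: list[float], lam: float) -> list[float]:
    """Causal first-order all-pass A(z) = (z^-1 - lam) / (1 - lam z^-1):
    y[n] = -lam * x[n] + x[n-1] + lam * y[n-1]."""
    y = []
    x_prev = 0.0
    y_prev = 0.0
    for xn in x:
        yn = -lam * xn + x_prev + lam * y_prev
        y.append(yn)
        x_prev, y_prev = xn, yn
    return y


def undo_allpass_phase(y: list[float], lam: float, n_out: int) -> list[float]:
    """Apply the anti-causal section A(1/z) by filtering the time-reversed signal.
    Because A(z) * A(1/z) = 1, this cancels the phase of A(z), up to the
    truncated tail of y. Returns the first n_out samples."""
    return list(reversed(allpass(list(reversed(y)), lam)))[:n_out]


if __name__ == "__main__":
    x = [1.0, 0.5, -0.25, 0.0, 0.75]
    pad = 64  # trailing zeros so the all-pass tail (about LAM**pad) is negligible
    y = allpass(x + [0.0] * pad, LAM)  # phase-distorted signal
    x_hat = undo_allpass_phase(y, LAM, len(x))
    print("max error:", max(abs(a - b) for a, b in zip(x, x_hat)))
```

The all-pass section leaves the magnitude spectrum untouched (its impulse response has unit energy), so the only damage it does is to the phase, and that is exactly what the reversed pass removes.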

[0045] Figure 1 is a flowchart of a multi-channel speech enhancement method based on an auditory perception model according to an embodiment of the present invention. As shown in the figure, the method specifically includes the following...
