Method and device using vector instruction to process file index in parallel mode

A file indexing and parallel processing technology, applied in electrical digital data processing, special data processing applications, instruments, etc. It addresses the problem that the binary search method is not suited to parallel processing and therefore cannot use parallel computing power to improve efficiency. The stated effects are improved peak computational power, exploitation of data parallelism, and a reduced performance penalty.

Embodiment Construction

[0033] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

[0034] The following symbols are used to describe the process:

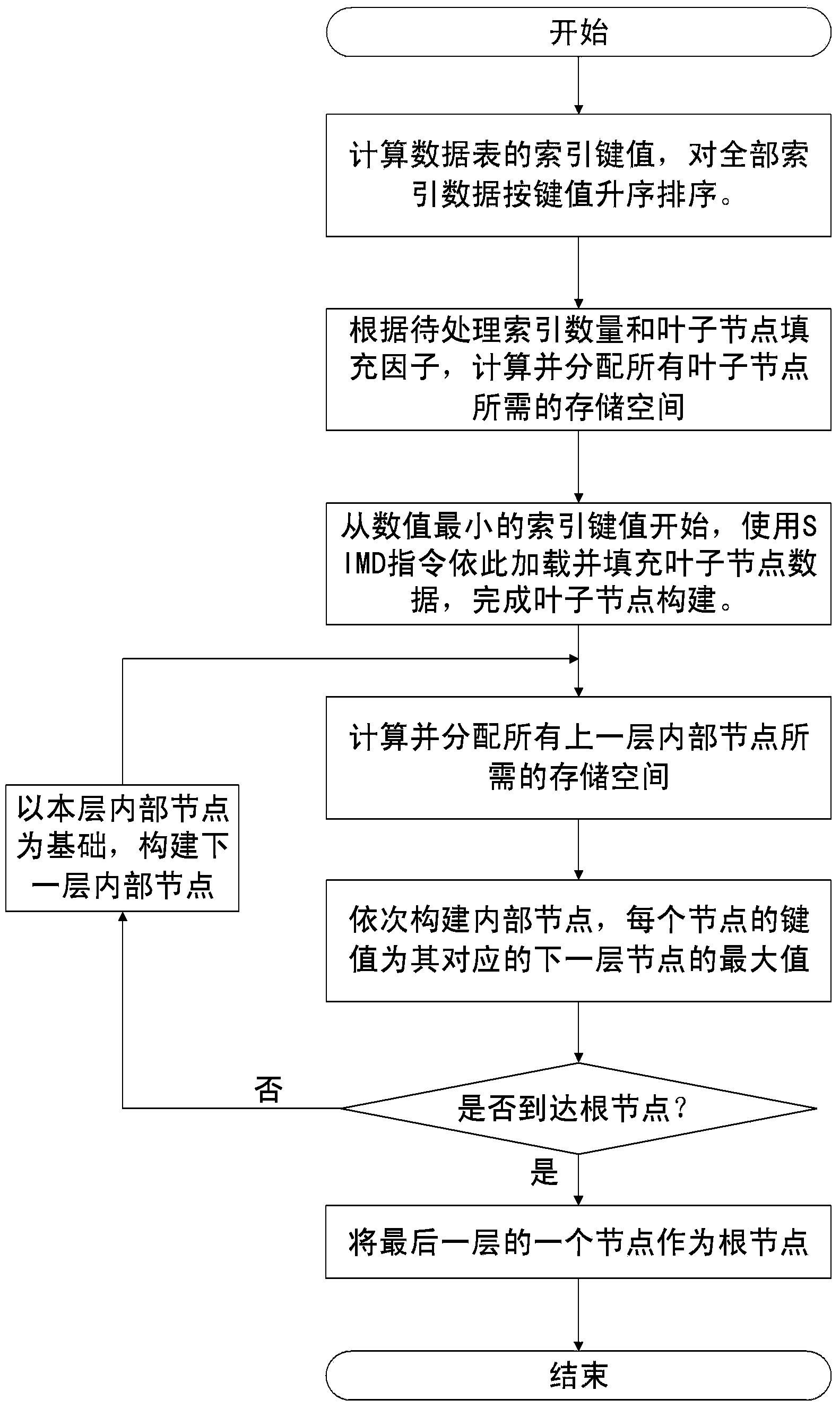

[0035] N: number of index items to be processed

B: data width of an index item

R: data width of a vector register

n: B+ tree leaf node capacity

α: filling factor of leaf nodes (the ratio of index data to leaf node capacity when the leaf nodes are first constructed)

[0036] g: degree of B+ tree

[0037] h: the height of the B+ tree
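To make the symbols concrete, the following is a minimal sketch of how they might relate to one another. It is an illustration only and is not taken from the patent text: the function name `index_layout`, the sample values, and the reading of g as the maximum fan-out of an internal node are all assumptions.

```python
def index_layout(N, B, R, n, alpha, g):
    """Rough layout figures derived from the symbols above (illustrative only)."""
    # Number of index items that fit in one vector register.
    lanes = R // B
    # Items placed in each leaf at construction time (assumed: floor of alpha * n).
    items_per_leaf = max(1, int(alpha * n))
    # Leaf nodes needed to hold N items (integer ceiling division).
    leaves = -(-N // items_per_leaf)
    # Height h, assuming every internal node has at most g children.
    height = 1
    nodes = leaves
    while nodes > 1:
        nodes = -(-nodes // g)
        height += 1
    return lanes, leaves, height


# Hypothetical values: 32-bit index items, 128-bit vector registers.
lanes, leaves, height = index_layout(
    N=1_000_000, B=32, R=128, n=64, alpha=0.75, g=64
)
print(lanes, leaves, height)
```

Under these assumed values, one vector register holds R / B = 4 index items, which is the amount of data parallelism available per vector instruction.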

[0038] In the following specific implementation manners, B+ tree is taken as an example for illustration, and the method described in the present invention can also be used for other tree indexes commonly used in file indexes.
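As a hedged illustration of why a vector-friendly search differs from binary search inside a sorted node, the sketch below compares a query against several keys at once and counts the matches. This is one common lane-wise technique, simulated in plain Python; it is not claimed to be the method of this patent, and the name `lower_bound_lanes` and the `lanes` parameter are hypothetical.

```python
def lower_bound_lanes(keys, query, lanes):
    """Return the index of the first key >= query in a sorted list.

    Each loop iteration stands in for one vector instruction: `lanes` keys
    are compared with the query together, and the resulting mask is counted.
    """
    pos = 0
    for start in range(0, len(keys), lanes):
        chunk = keys[start:start + lanes]
        # Simulated vector compare: one boolean per lane.
        mask = [k < query for k in chunk]
        # Simulated population count of the mask.
        hits = sum(mask)
        pos += hits
        # Keys are sorted, so a partial hit means the boundary was found.
        if hits < len(chunk):
            break
    return pos


node_keys = [2, 4, 6, 8, 10, 12, 14, 16]
print(lower_bound_lanes(node_keys, 9, lanes=4))
```

Unlike binary search, each step here has no data-dependent branch inside the chunk, so all lanes do useful work in every comparison.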

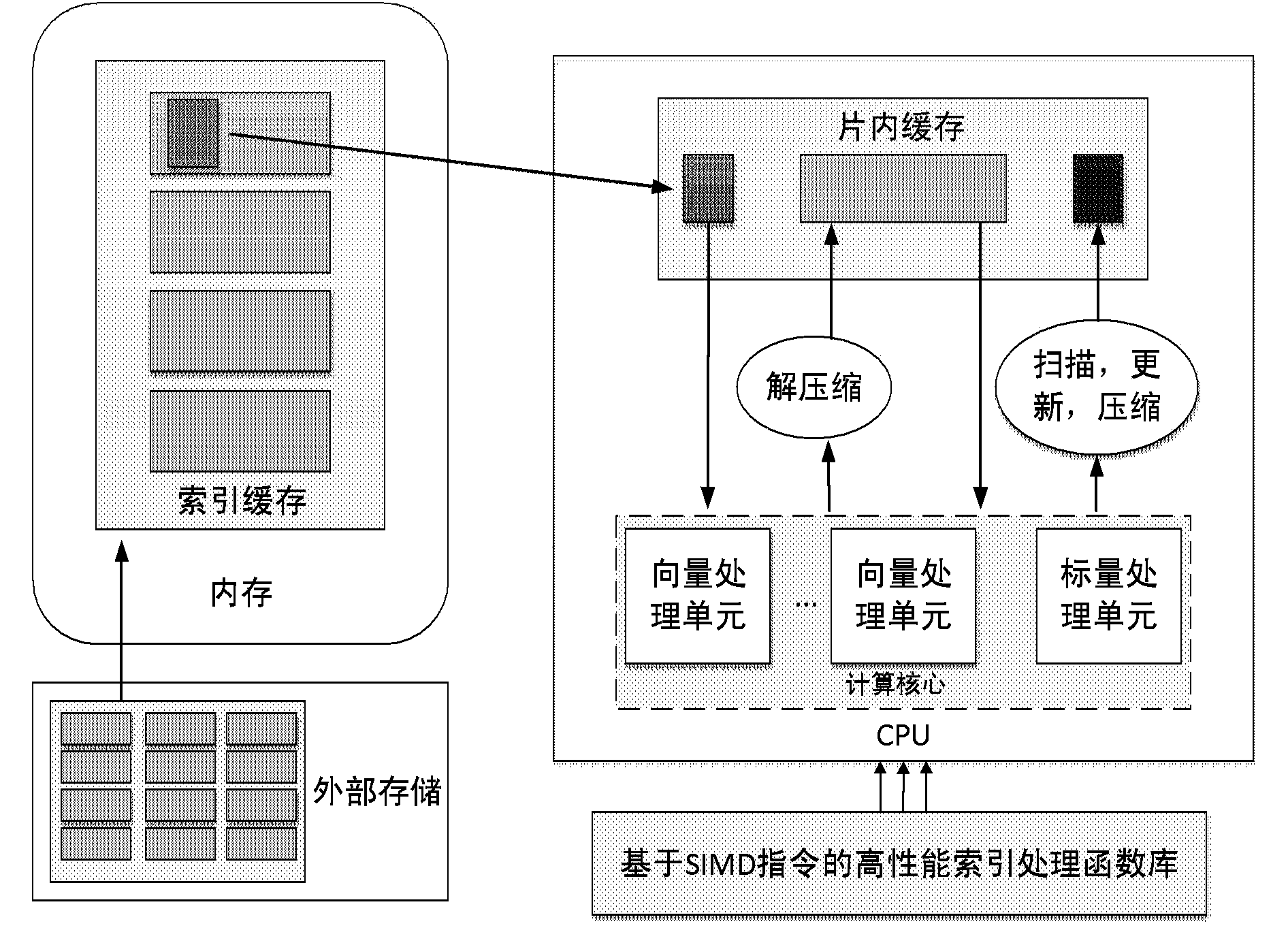

[0039] Figure 1 is a structural block diagram of the file index data parallel processing system propose...
