Graph-based method for detecting salient objects in unconstrained video

A salient object detection technique for video, applied in the field of image processing. It addresses the problems that existing video saliency models are not robust on videos with complex motion and cannot extract the saliency map accurately and completely, both of which limit the wide application of such models.


Embodiment Construction

[0040] Embodiments of the present invention will be described in further detail below in conjunction with the accompanying drawings.

[0041] The simulation experiments of the present invention were implemented in software on a PC test platform with a 3.4 GHz CPU and 8 GB of memory.

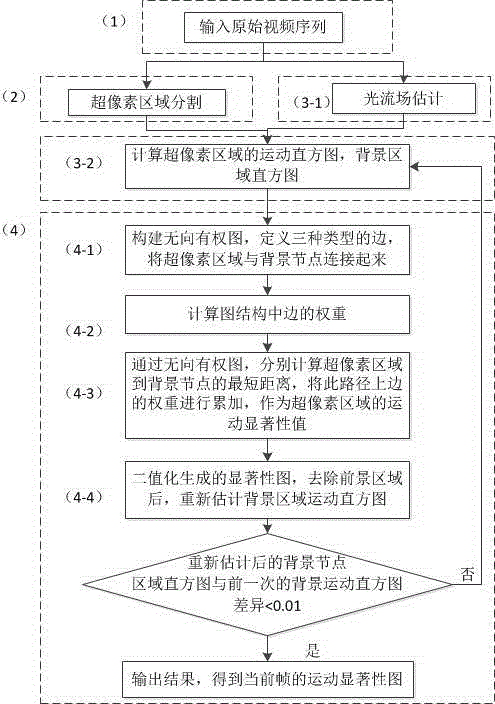

[0042] As shown in Figure 1, the graph-based video saliency detection method of the present invention comprises the following specific steps:

[0043] (1) Input the original video frame sequence and denote its t-th frame as $F_t$, as shown in Figure 2;

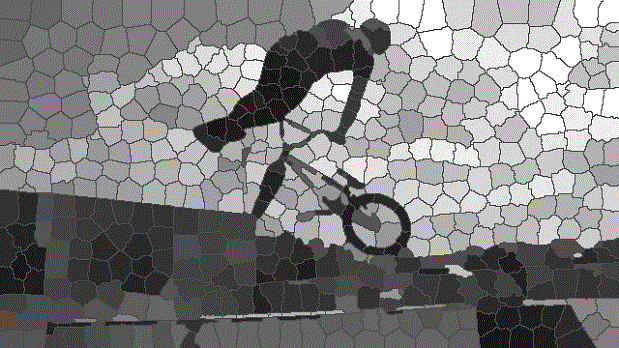

[0044] (2) Using a superpixel region segmentation method, partition the entire video frame $F_t$ into $n_t$ superpixel regions, denoted $sp_{t,i}$ ($i = 1, \ldots, n_t$);

[0045] (2-1) For the video frame $F_t$, denote its width as $w$ and its height as $h$; for a video frame $F_t$ of size $w \times h$, the number of regions to be divided is set to: $n_t = w$ ...
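
The partition in step (2) can be illustrated with a minimal sketch. The excerpt does not name a specific superpixel algorithm, and the formula for $n_t$ is truncated, so the code below assumes a simplified SLIC-style k-means clustering on (intensity, x, y) and takes the region count as an input rather than computing it. The names `segment_frame`, `n_regions`, `n_iters`, and `spatial_weight` are hypothetical and do not come from the patent.

```python
import math


def segment_frame(frame, n_regions, n_iters=5, spatial_weight=0.5):
    """Partition a grayscale frame (list of rows) into at most n_regions regions.

    Assumption: simplified SLIC-style k-means on (intensity, x, y).
    This is an illustrative stand-in, not the patent's segmentation method.
    Returns a label map with the same shape as `frame`.
    """
    h, w = len(frame), len(frame[0])

    # Place the initial cluster centres on a regular grid over the frame.
    cols = min(n_regions, max(1, round(math.sqrt(n_regions * w / h))))
    rows = max(1, n_regions // cols)
    centres = []
    for r in range(rows):
        for c in range(cols):
            y = int((r + 0.5) * h / rows)
            x = int((c + 0.5) * w / cols)
            centres.append([float(frame[y][x]), float(x), float(y)])

    labels = [[0] * w for _ in range(h)]
    for _ in range(n_iters):
        # Assignment step: each pixel joins the nearest centre.
        sums = [[0.0, 0.0, 0.0, 0] for _ in centres]
        for y in range(h):
            for x in range(w):
                best, best_d = 0, float("inf")
                for k, (cv, cx, cy) in enumerate(centres):
                    colour_d = (frame[y][x] - cv) ** 2
                    spatial_d = (x - cx) ** 2 + (y - cy) ** 2
                    d = colour_d + spatial_weight * spatial_d
                    if d < best_d:
                        best, best_d = k, d
                labels[y][x] = best
                acc = sums[best]
                acc[0] += frame[y][x]
                acc[1] += x
                acc[2] += y
                acc[3] += 1
        # Update step: move each non-empty centre to the mean of its pixels.
        for k, (sv, sx, sy, count) in enumerate(sums):
            if count:
                centres[k] = [sv / count, sx / count, sy / count]
    return labels
```

Each entry `labels[y][x]` holds the index $i$ of the region $sp_{t,i}$ containing that pixel. Because a cluster can end up empty, the number of distinct labels may be smaller than `n_regions`.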
