Method for evaluating virtual viewpoint video quality

A video quality evaluation method applied in the field of virtual viewpoint video quality evaluation. It addresses three shortcomings of existing approaches: they consider only the distortion introduced by the rendering process, their calculation is not comprehensive enough, and they do not account for the temporal flicker distortion of the virtual viewpoint video.


Examples

Embodiment 1

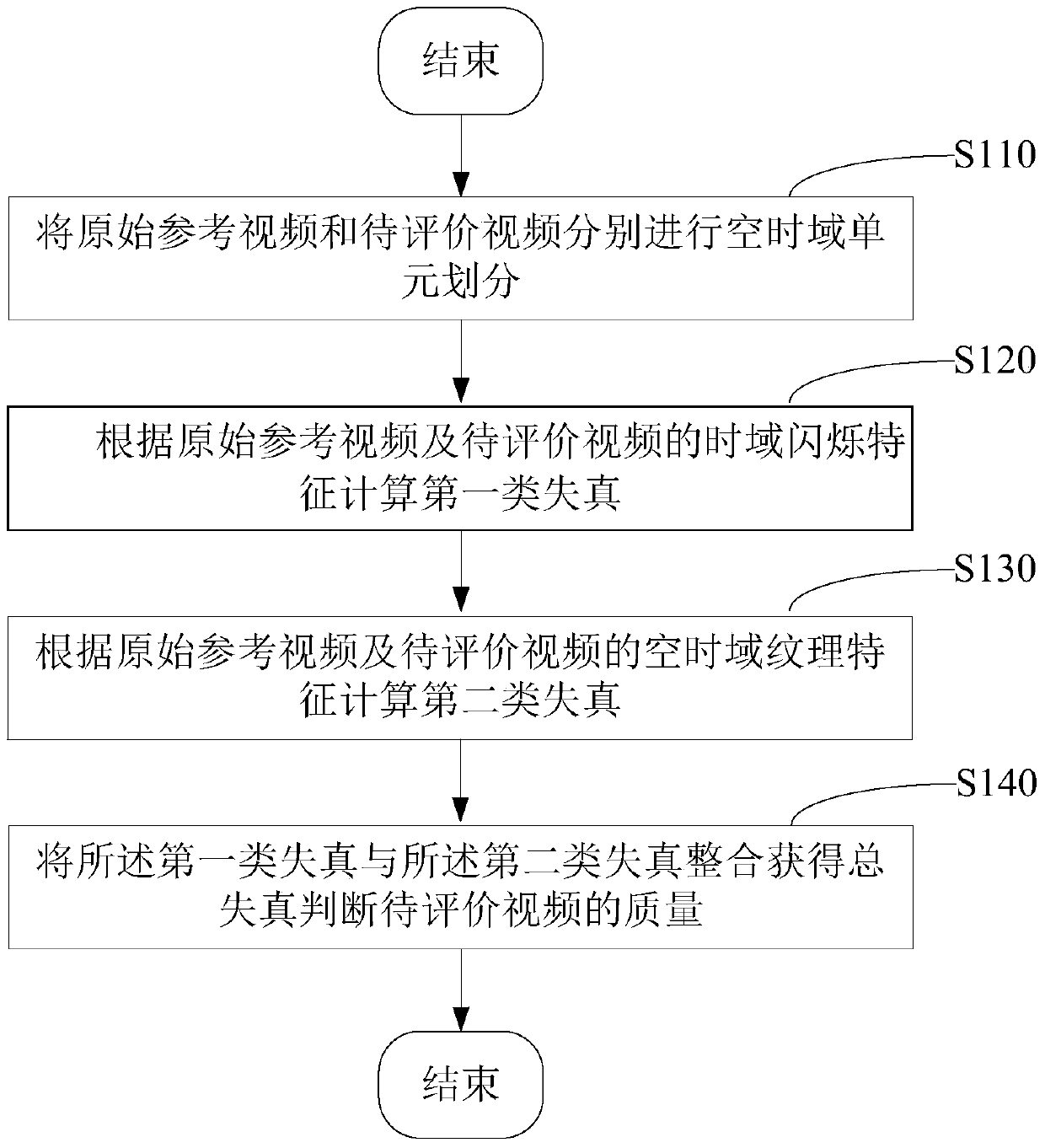

[0056] A method for evaluating the quality of virtual viewpoint video includes the following steps:

[0057] Step S110: The original reference video and the video to be evaluated are each divided into space-time domain units.

[0058] Step S110 includes:

[0059] ① Divide the original reference video and the video to be evaluated into groups of images composed of consecutive frames in the time domain.

[0060] ② Divide each image in the image group into several image blocks; image blocks that are consecutive in the time domain form a space-time domain unit.

[0061] In this embodiment, a space-time domain unit is composed of several temporally consecutive image blocks at the same spatial position.
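As an illustration of sub-steps ① and ②, the division into co-located space-time domain units might be sketched as follows. This is a minimal sketch, not the patent's implementation: the function name `split_into_units`, the parameters `gop_len` and `block`, the representation of a frame as a list of pixel rows, and the choice to drop an incomplete trailing image group or partial border blocks are all assumptions made for the example.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]   # one grayscale frame, stored as rows of pixel values
Block = List[List[int]]   # one square image block cut out of a frame


def split_into_units(
    video: List[Frame], gop_len: int, block: int
) -> Dict[Tuple[int, int, int], List[Block]]:
    """Map (group index, block row, block column) to a space-time domain unit.

    Each unit is the list of co-located blocks, one per frame of the group.
    Assumption: incomplete trailing groups and partial border blocks are dropped.
    """
    units: Dict[Tuple[int, int, int], List[Block]] = {}
    n_groups = len(video) // gop_len
    for g in range(n_groups):
        # Sub-step 1: take gop_len consecutive frames as one image group.
        group = video[g * gop_len:(g + 1) * gop_len]
        height, width = len(group[0]), len(group[0][0])
        # Sub-step 2: cut every frame of the group into block x block tiles
        # and stack the tiles that share the same spatial position.
        for top in range(0, height - block + 1, block):
            for left in range(0, width - block + 1, block):
                units[(g, top // block, left // block)] = [
                    [row[left:left + block] for row in frame[top:top + block]]
                    for frame in group
                ]
    return units
```

The same function would be applied to the original reference video and to the video to be evaluated, so that units with the same key can be compared with each other.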

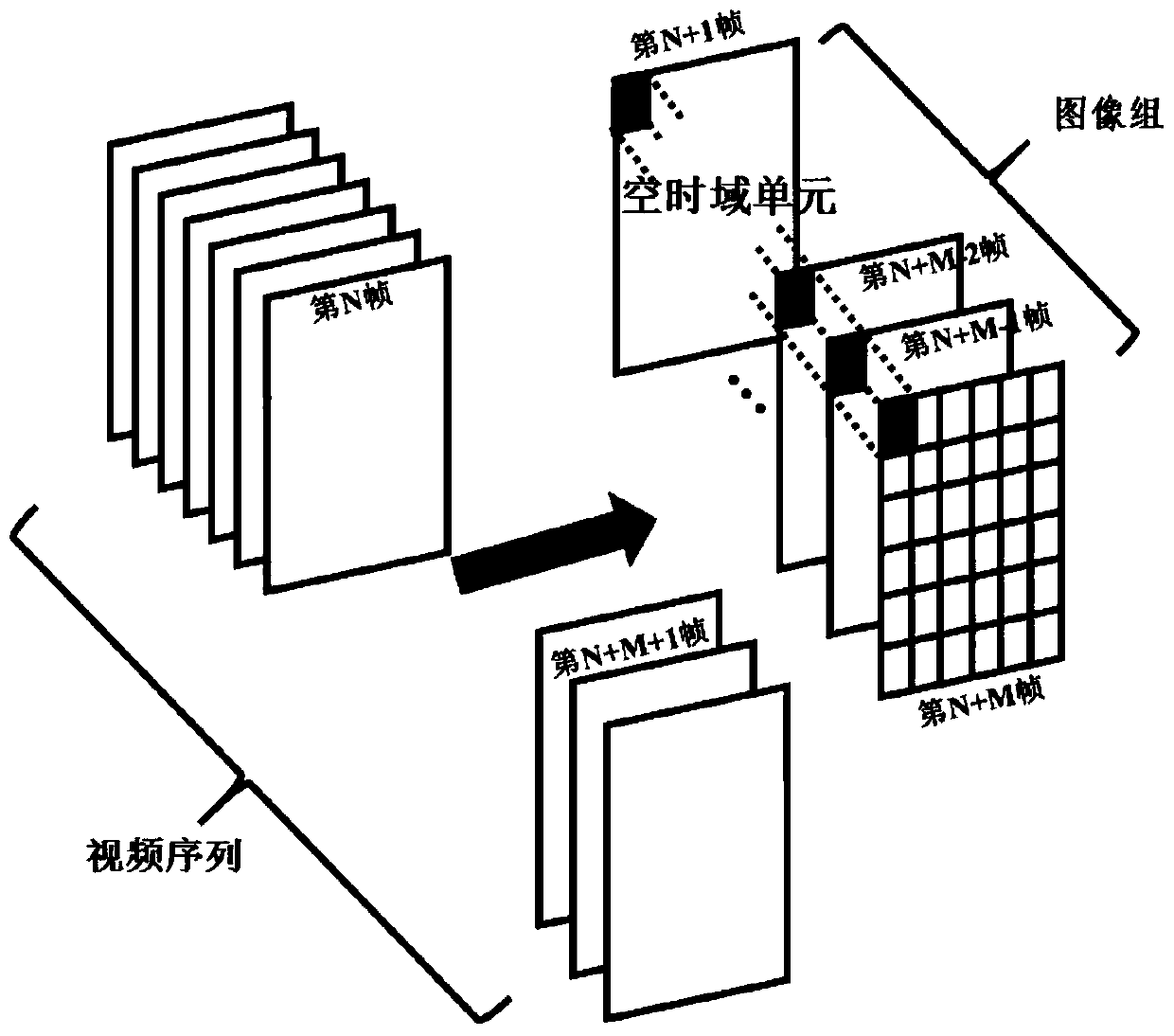

[0062] Specifically, the original reference video and the video to be evaluated must first be divided into space-time domain units. A schematic diagram of the process is shown in Figure 2. The video sequence (the original reference video and the video to be ...

Embodiment 2

[0121] Step S110: The original reference video and the video to be evaluated are each divided into space-time domain units.

[0122] Step S110 includes:

[0123] ① Divide the original reference video and the video to be evaluated into groups of images composed of consecutive frames in the time domain.

[0124] ② Divide each image in the image group into several image blocks; image blocks that are consecutive in the time domain form a space-time domain unit.

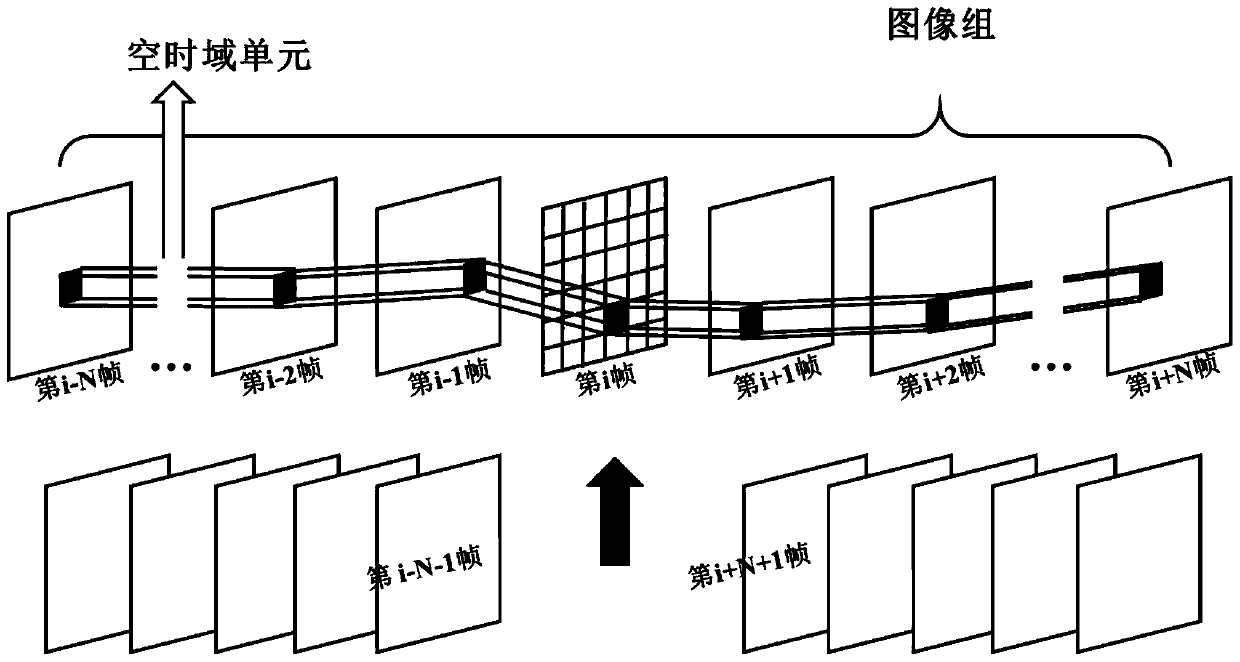

[0125] In this embodiment, a space-time domain unit is composed of several temporally consecutive image blocks whose spatial positions differ from frame to frame and together describe the motion trajectory of the same object.
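A unit that follows an object's motion trajectory might be built as in the sketch below. The excerpt does not say how the trajectory is obtained, so the exhaustive block matching with a sum-of-absolute-differences (SAD) cost used here is an assumption, as are the names `sad`, `track_block` and `unit_along_path` and the `search` radius parameter.

```python
from typing import List, Tuple

Frame = List[List[int]]   # one grayscale frame, stored as rows of pixel values
Block = List[List[int]]   # one square image block cut out of a frame


def sad(a: Frame, ya: int, xa: int, b: Frame, yb: int, xb: int, block: int) -> int:
    """Sum of absolute differences between two block x block regions."""
    return sum(
        abs(a[ya + dy][xa + dx] - b[yb + dy][xb + dx])
        for dy in range(block)
        for dx in range(block)
    )


def track_block(
    group: List[Frame], top: int, left: int, block: int, search: int = 2
) -> List[Tuple[int, int]]:
    """Follow the block at (top, left) in group[0] through the image group.

    Assumed technique: full-search block matching between consecutive frames,
    limited to +/- search pixels and clamped to the frame borders.
    """
    height, width = len(group[0]), len(group[0][0])
    path = [(top, left)]
    for t in range(1, len(group)):
        py, px = path[-1]
        best = None  # (cost, y, x) of the best candidate so far
        for cy in range(max(0, py - search), min(height - block, py + search) + 1):
            for cx in range(max(0, px - search), min(width - block, px + search) + 1):
                cost = sad(group[t - 1], py, px, group[t], cy, cx, block)
                if best is None or cost < best[0]:
                    best = (cost, cy, cx)
        path.append((best[1], best[2]))
    return path


def unit_along_path(
    group: List[Frame], path: List[Tuple[int, int]], block: int
) -> List[Block]:
    """Collect one block per frame at the tracked positions to form the unit."""
    return [
        [row[x:x + block] for row in frame[y:y + block]]
        for frame, (y, x) in zip(group, path)
    ]
```

Compared with the co-located units of Embodiment 1, the only change is that the block position is allowed to move from frame to frame; the grouping into image groups stays the same.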

[0126] Specifically, the original reference video and the video to be evaluated must first be divided into space-time domain units. A schematic diagram of the process is shown in Figure 3. The video sequence (the original reference video and the video to be evaluated) is divided into several image groups, and each image group ...
