Weighted extreme learning machine video target tracking method based on weighted multi-example learning

A target tracking technology that combines an extreme learning machine with multi-instance learning. It addresses the problem of poor tracking accuracy and improves the stability, accuracy and robustness of tracking.


Detailed Description of the Embodiments

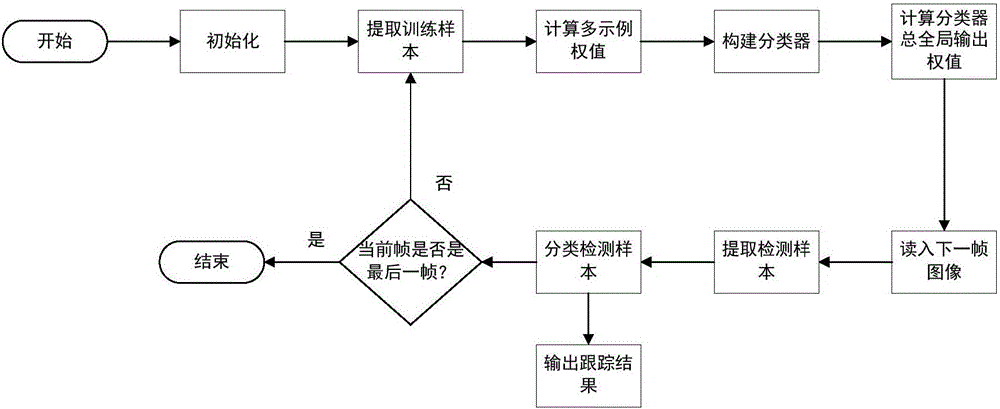

[0038] The technical scheme and effects of the present invention are further described below with reference to the accompanying drawings.

[0039] Referring to Figure 1, the specific implementation steps of the present invention are as follows:

[0040] Step 1. Initialize.

[0041] 1.1) Initialize target features:

[0042] Features commonly used in video tracking include grayscale features; red, green, blue (RGB) color features; hue, saturation, value (HSV) color features; gradient features; scale-invariant feature transform (SIFT) features; local binary pattern (LBP) features; and Haar-like features. This example uses, but is not limited to, the existing Haar-like features as the target feature, and constructs a feature model pool Φ containing M types of Haar-like feature models.
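A Haar-like feature is a weighted sum of rectangle sums, which an integral image makes constant-time to evaluate. The sketch below shows one way a pool Φ of M such feature models might be built and evaluated. It is an illustration under assumptions, not the patented construction: the rectangle encoding `(x, y, w, h, weight)`, the 2 to 4 rectangles per model, the ±1 weights, and the helper names `integral_image`, `rect_sum`, `haar_feature` and `make_feature_pool` are all hypothetical.

```python
import random
from typing import List, Tuple

# Hypothetical encoding of one rectangle term: (x, y, w, h, weight).
# A Haar-like feature model is a list of such terms.
Rect = Tuple[int, int, int, int, float]


def integral_image(img: List[List[float]]) -> List[List[float]]:
    """Return the (H+1) x (W+1) integral image, padded with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[float]], x: int, y: int, w: int, h: int) -> float:
    """Sum of the pixels in the w x h rectangle whose top-left corner is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_feature(ii: List[List[float]], model: List[Rect]) -> float:
    """Response of one Haar-like feature model: weighted sum of its rectangle sums."""
    return sum(wt * rect_sum(ii, x, y, w, h) for x, y, w, h, wt in model)


def make_feature_pool(m: int, patch_w: int, patch_h: int,
                      max_rects: int = 4, seed: int = 0) -> List[List[Rect]]:
    """Hypothetical generator of a pool Phi of m random Haar-like feature models.

    Every rectangle lies inside a patch_w x patch_h patch (both must be >= 2).
    """
    rng = random.Random(seed)
    pool = []
    for _ in range(m):
        model = []
        for _ in range(rng.randint(2, max_rects)):
            x = rng.randrange(patch_w - 1)
            y = rng.randrange(patch_h - 1)
            w = rng.randint(1, patch_w - x)  # guarantees x + w <= patch_w
            h = rng.randint(1, patch_h - y)  # guarantees y + h <= patch_h
            model.append((x, y, w, h, rng.choice((-1.0, 1.0))))
        pool.append(model)
    return pool


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    ii = integral_image(img)
    # Two-rectangle edge feature: left column minus right column.
    edge = [(0, 0, 1, 3, 1.0), (2, 0, 1, 3, -1.0)]
    print(haar_feature(ii, edge))  # (1+4+7) - (3+6+9) = -6.0
    pool = make_feature_pool(m=5, patch_w=3, patch_h=3)
    print([haar_feature(ii, model) for model in pool])
```

Computing the integral image once per frame lets every model in Φ be evaluated with four lookups per rectangle, which is why Haar-like features are a common choice when many candidate patches must be scored.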

[0043] 1.2) Randomly assign the feature models in the feature model pool Φ to obtain a total of E groups of feature model blocks, where e is the index of a feature model block and takes the values 1, ..., E, and E is ...
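The text above is cut off before the block size and the allocation rule are given, so the following is only a minimal sketch under an explicit assumption: the M models in Φ are shuffled and dealt into E disjoint blocks whose sizes differ by at most one. The function name `assign_blocks` and the `seed` parameter are hypothetical, and blocks are indexed from 0 in code rather than from 1 as in the text.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def assign_blocks(pool: Sequence[T], num_blocks: int, seed: int = 0) -> List[List[T]]:
    """Randomly split a feature model pool into num_blocks disjoint, near-equal blocks.

    Assumption: blocks do not overlap and their sizes differ by at most one;
    the source does not state the actual allocation rule.
    """
    if not 1 <= num_blocks <= len(pool):
        raise ValueError("num_blocks must be between 1 and len(pool)")
    order = list(range(len(pool)))
    random.Random(seed).shuffle(order)
    # Block e (0-based) takes every num_blocks-th position of the shuffled order,
    # so sizes are either ceil(M / E) or floor(M / E).
    return [[pool[i] for i in order[e::num_blocks]] for e in range(num_blocks)]


if __name__ == "__main__":
    blocks = assign_blocks(list(range(10)), num_blocks=3, seed=42)
    print([len(b) for b in blocks])  # [4, 3, 3]
```

Dealing from a shuffled order keeps the partition random while guaranteeing every model lands in exactly one block; if the method instead allows a model to appear in several blocks, each block would be drawn independently with `random.sample`.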
