114 results about "Learning by example" patented technology

Scenario image annotation method based on active learning and multi-label multi-instance learning

Inactive | CN105117429A | Guaranteed accuracy | High precision | Character and pattern recognition | Special data processing applications | Pattern recognition | Manual annotation

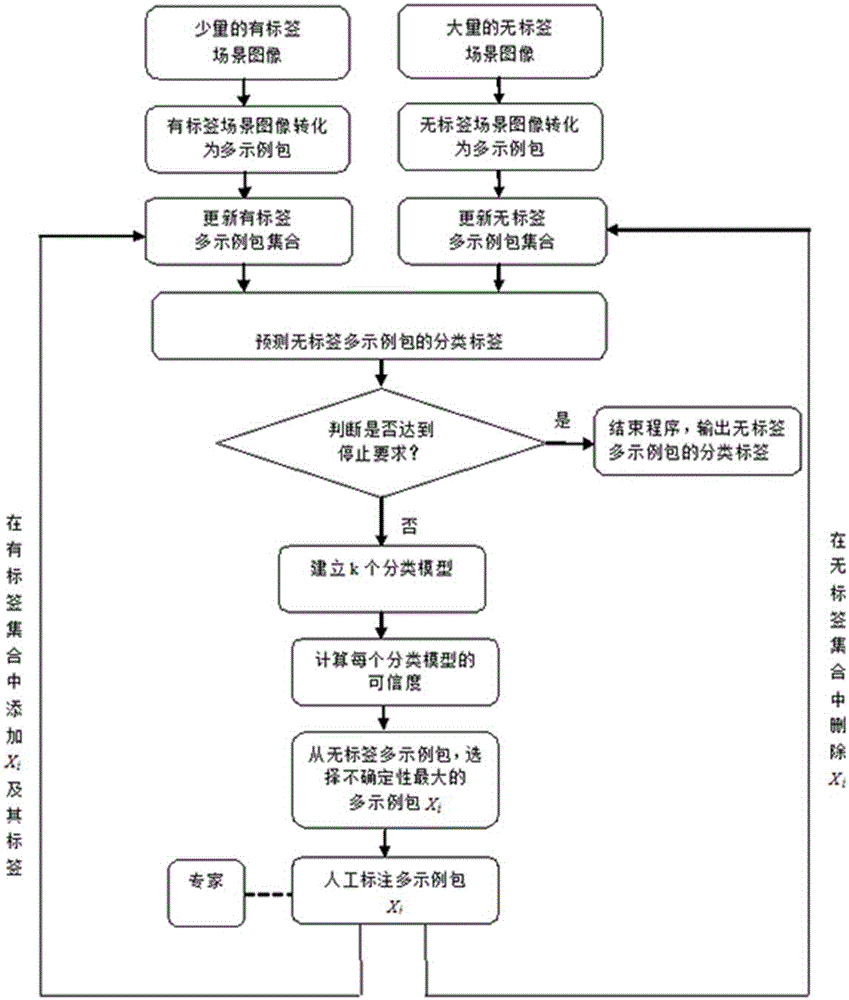

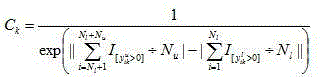

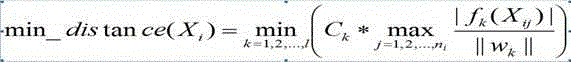

The invention addresses two fundamental characteristics of scene images: (1) a scene image often contains complex semantics; and (2) manually annotating a large number of images incurs a high labor cost. It discloses a scene image annotation method based on active learning and multi-label multi-instance learning. The method comprises: training an initial classification model on labeled images; predicting labels for the unlabeled images; calculating the confidence of the classification model; selecting the unlabeled image with the greatest uncertainty; having experts annotate that image manually; updating the image set; and stopping once the algorithm meets the requirements. The active learning strategy preserves the accuracy of the classification model while significantly reducing the number of scene images that must be annotated manually, thereby lowering the annotation cost. Moreover, the method converts each image into multi-label multi-instance data, so the complex semantics of the image are represented reasonably and annotation accuracy improves.
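As a rough illustration of the loop described above, the following Python sketch performs uncertainty sampling with entropy as the uncertainty measure. The abstract does not say how confidence is computed, so the entropy score, the fixed `budget` stopping rule, and the `train`, `predict_proba`, and `ask_expert` callables are all assumptions introduced for this example; the multi-label multi-instance representation is not modeled.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def active_learning(labeled, unlabeled, train, predict_proba, ask_expert, budget):
    """Query the most uncertain unlabeled item, up to `budget` times.

    labeled       -- dict mapping item -> label (items must be hashable)
    unlabeled     -- iterable of items without labels
    train         -- hypothetical callable: dict of labeled items -> model
    predict_proba -- hypothetical callable: (model, item) -> class probabilities
    ask_expert    -- hypothetical callable: item -> label (the human annotator)
    budget        -- assumed stopping rule: maximum number of expert queries
    """
    labeled = dict(labeled)
    unlabeled = list(unlabeled)
    model = train(labeled)
    for _ in range(budget):
        if not unlabeled:
            break
        # Score every unlabeled item by the entropy of its predicted labels.
        scores = {item: entropy(predict_proba(model, item)) for item in unlabeled}
        query = max(unlabeled, key=scores.get)
        # The expert labels the most uncertain item; the sets are updated.
        labeled[query] = ask_expert(query)
        unlabeled.remove(query)
        # Retrain on the enlarged labeled set.
        model = train(labeled)
    return model, labeled
```

Any other uncertainty score (for example, the margin between the two most probable labels) could replace `entropy` without changing the structure of the loop.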

Owner:GUANGDONG UNIV OF TECH

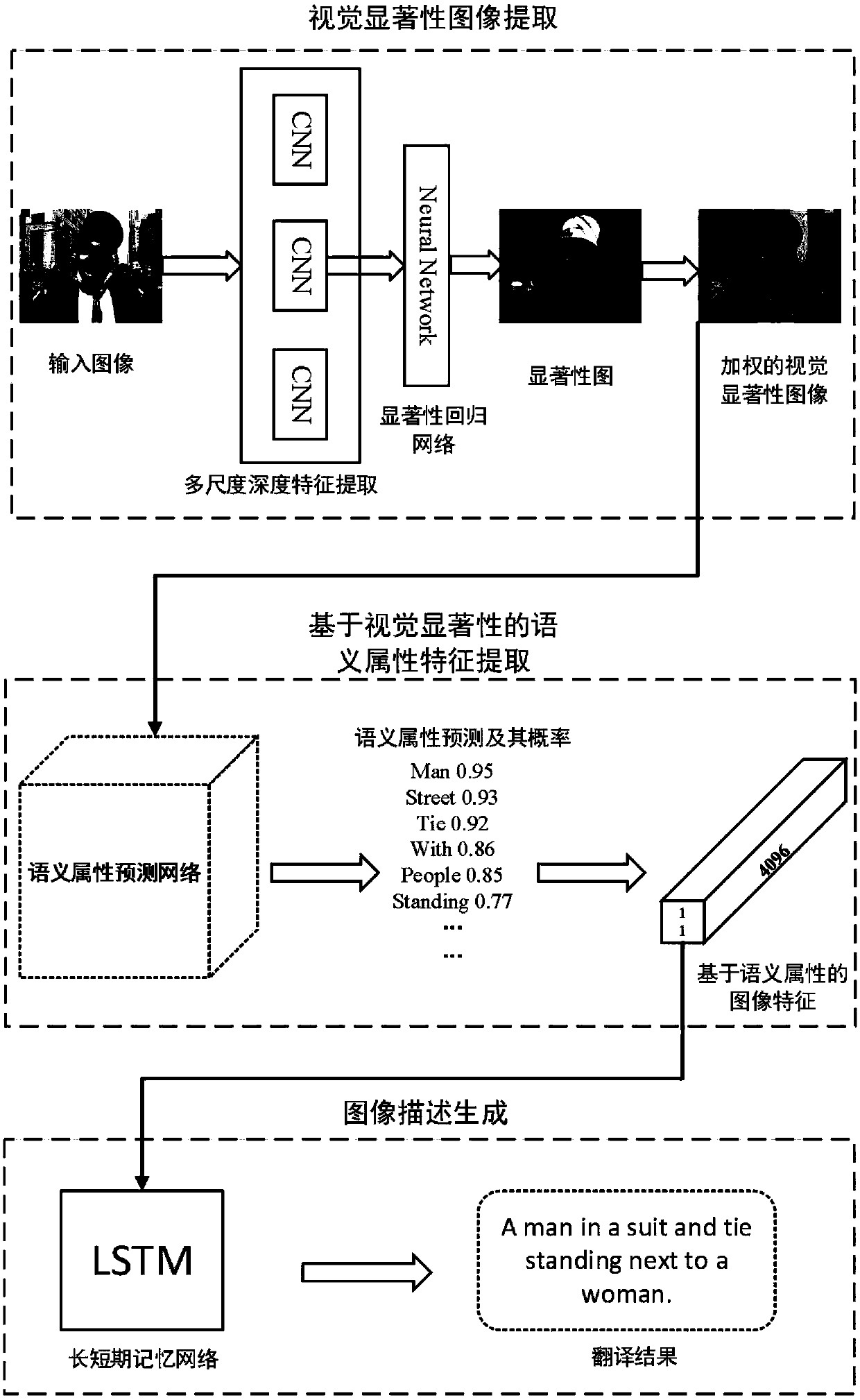

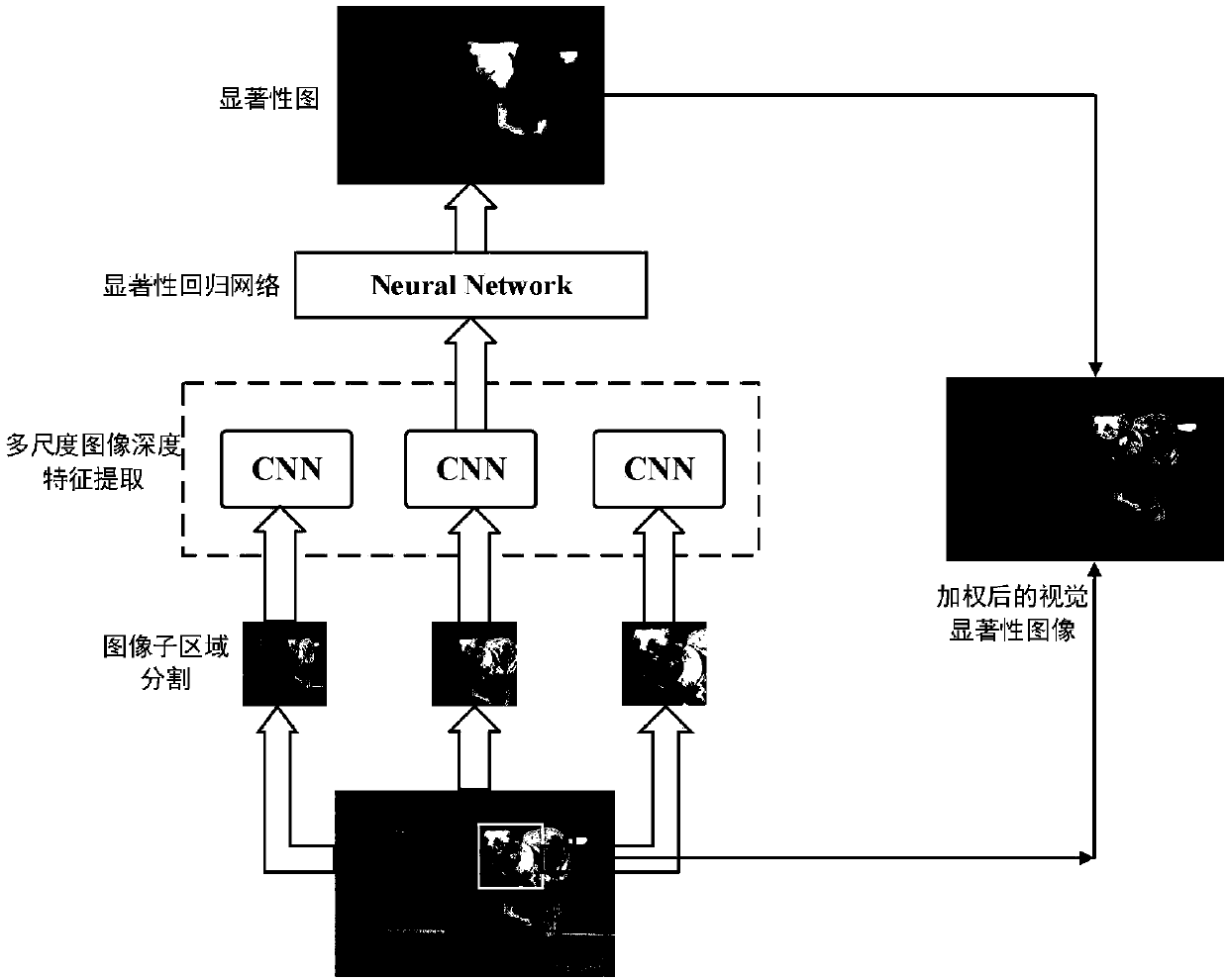

Visual salience and semantic attribute based cross-modal image natural language description method

Active | CN107688821A | Increase the importance | Reduce contribution | Character and pattern recognition | Neural learning methods | Semantic property | Vision based

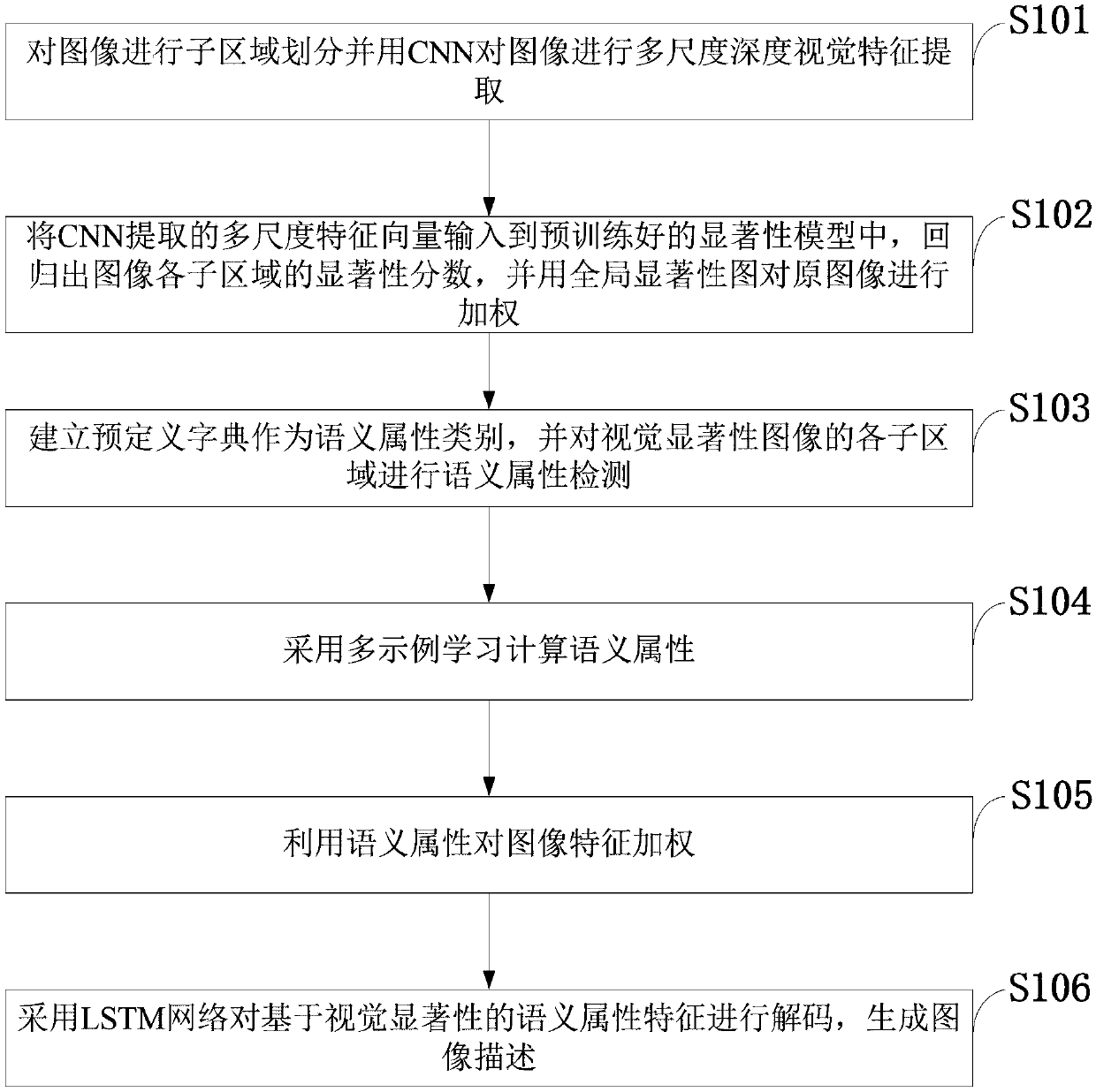

The invention belongs to the technical field of computer vision and natural language processing, and discloses a cross-modal image natural language description method based on visual saliency and semantic attributes. The method comprises the following steps: multiscale deep visual features of all regions are extracted with a convolutional neural network; a pre-trained saliency model returns an image saliency map, which is used to weight the original image; a predefined dictionary is built to serve as the set of semantic attribute categories, and semantic attribute detection is performed on the saliency-weighted image; the semantic attributes are computed through multi-instance learning; the image features are weighted by the semantic attributes; and the saliency-based semantic attribute features are decoded by a long short-term memory network to generate the image description. The method achieves high accuracy and can be used for image retrieval in complex scenes, semantic understanding of multi-object images, and similar tasks.

Owner:XIDIAN UNIV

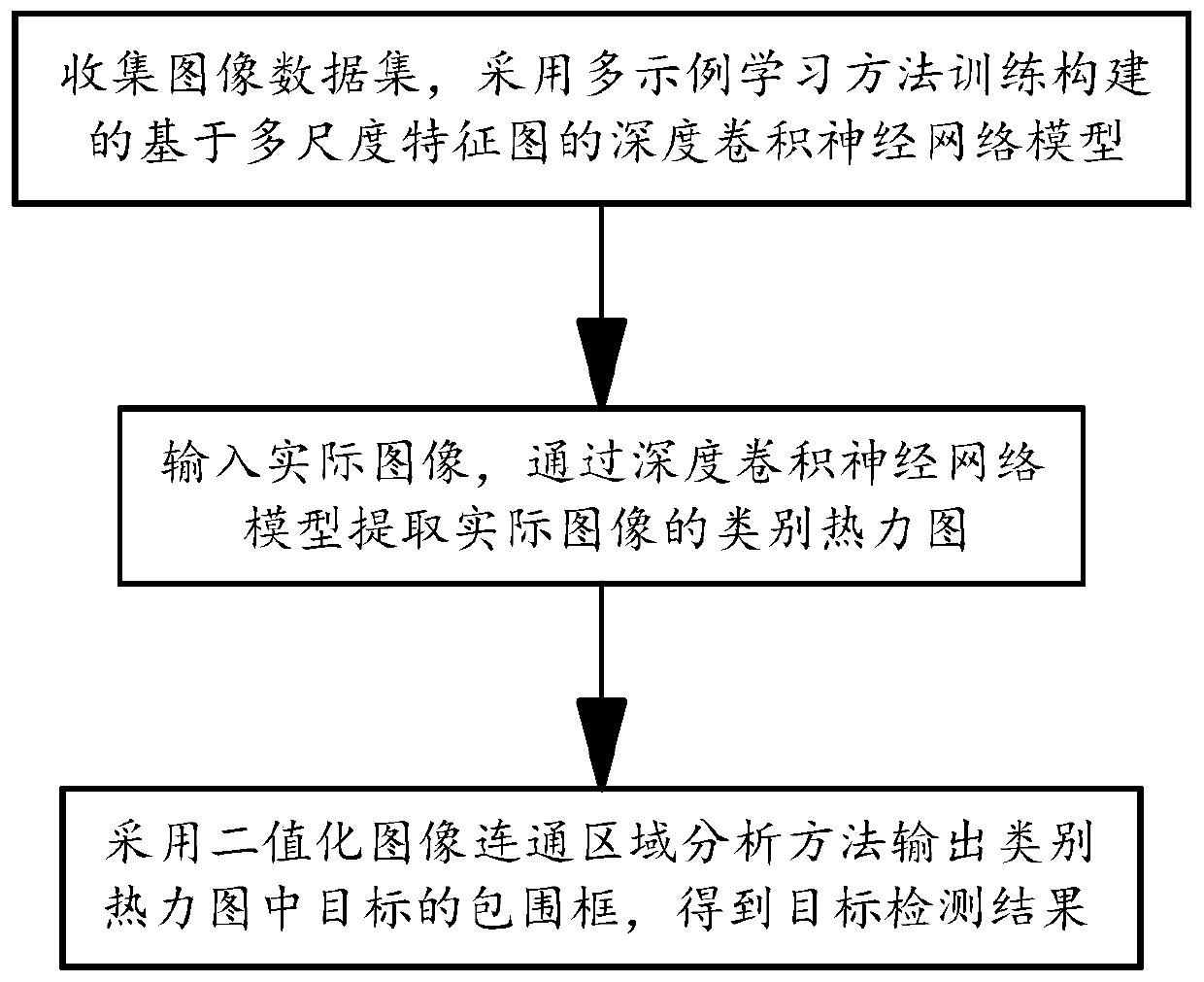

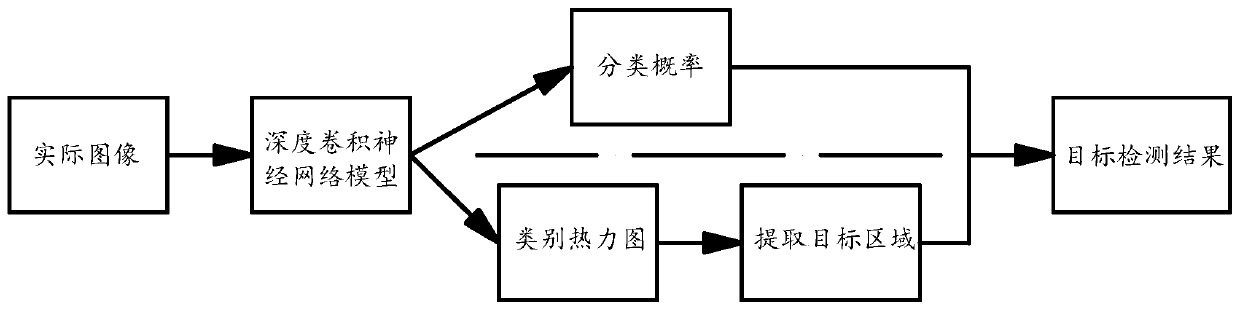

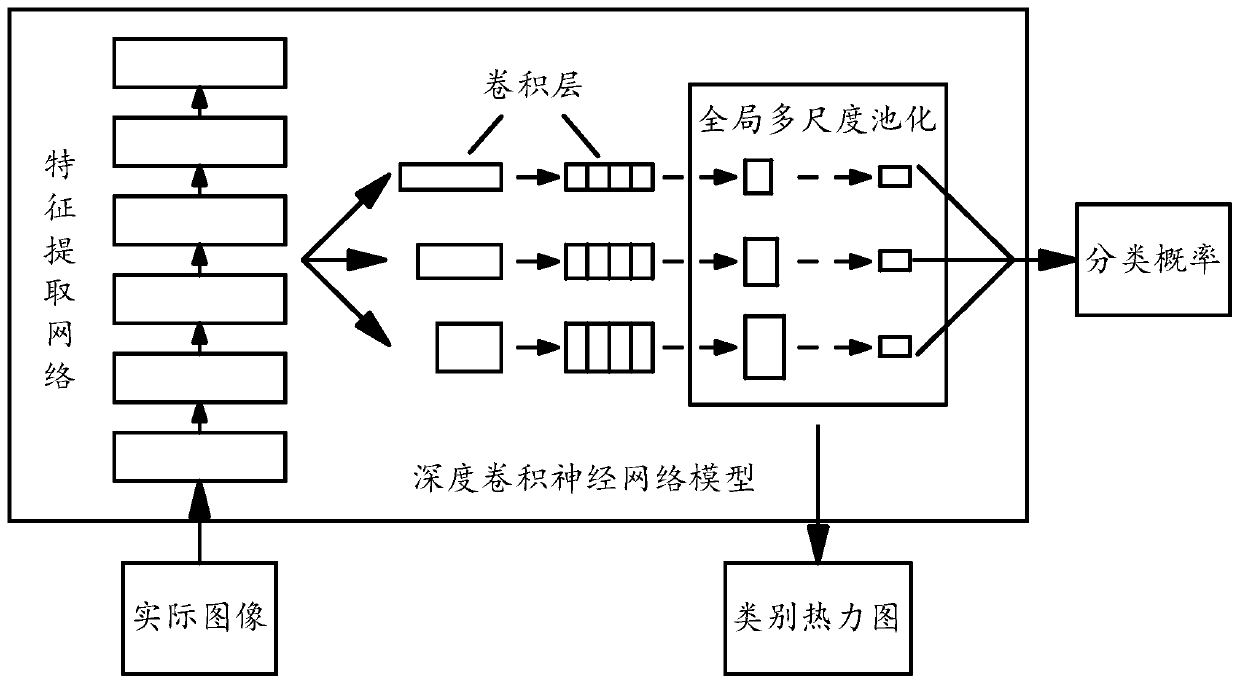

Image target detection method based on weak supervised learning

Pending | CN110349148A | Work less | Guaranteed accuracy | Image analysis | Character and pattern recognition | Data set | Machine vision

The invention discloses an image object detection method based on weakly supervised learning, and belongs to the technical field of machine vision. The method comprises the following steps: first, an image data set is collected and a deep convolutional neural network model built on multi-scale feature maps is trained with a multi-instance learning method; next, an actual image is input and its class heat map is extracted by the model; finally, the bounding boxes of the objects in the class heat map are output by connected-region analysis of the binarized image, giving the detection result. The detection task is thus accomplished by weakly supervised learning: training the convolutional neural network requires only image-level class labels, rather than the object bounding-box annotations required in the prior art. This greatly reduces the manual work of annotating objects in images and makes the detection task more economical to complete.
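The last step (binarize the heat map, then read bounding boxes off the connected regions) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the heat map is a nested list of scores, `threshold` is a hypothetical parameter, and 4-connectivity is assumed because the abstract does not specify the connectivity rule.

```python
from collections import deque


def heatmap_to_boxes(heatmap, threshold):
    """Return one (row_min, col_min, row_max, col_max) box per connected
    region of cells whose score is at least `threshold` (4-connectivity)."""
    rows = len(heatmap)
    cols = len(heatmap[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Breadth-first search over one foreground region.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and heatmap[ny][nx] >= threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes
```

Boxes are returned in the order in which their regions are first encountered during a row-by-row scan.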

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

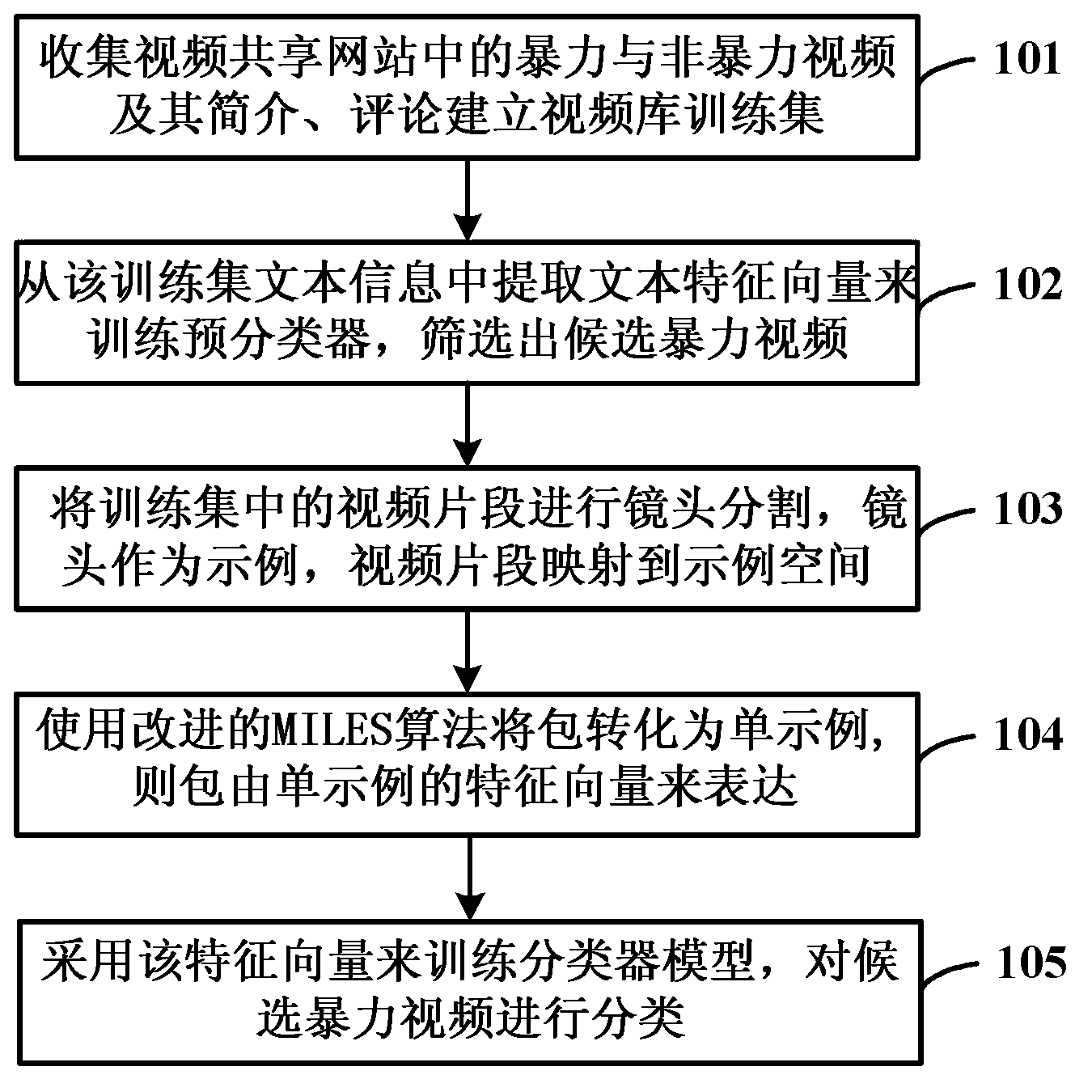

Network violent video identification method

Active | CN103218608A | Reduce dimensionality | Reduce space complexity | Character and pattern recognition | Feature vector | Video sharing

The invention discloses a method for identifying violent online videos based on multiple instances and multiple features. The method comprises: crawling violent videos, non-violent videos, the comments on each, and the brief introductions of each from a video sharing site, and building a video training set; extracting text features from the textual information of the training set, forming text feature vectors to train a text pre-classifier, and screening out candidate violent videos with the pre-classifier; segmenting the video clips of the candidate violent videos with a shot segmentation algorithm based on an adaptive dual threshold, extracting the relevant visual and audio features of each scene to represent it, and treating each scene as an instance for multi-instance learning and each video clip as a bag; and converting each bag into a single instance with the MILES algorithm, training a classifier model on the resulting feature vectors, and classifying the candidate violent videos with that model. The method substantially reduces the harm caused by the unrestricted spread of violent online videos.
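The MILES step maps each bag to a single feature vector. The sketch below shows that embedding as it is commonly defined: each output feature is the largest Gaussian similarity between any instance in the bag and one instance from a pool. The abstract gives neither the scene features, the instance pool, nor the bandwidth, so `instance_pool` and `sigma` are placeholders chosen for illustration.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def embed_bag(bag, instance_pool, sigma):
    """Map a bag (list of instance vectors) to one feature vector.

    Feature k is the maximum, over the instances in the bag, of
    exp(-||instance - instance_pool[k]||^2 / sigma^2).
    """
    return [
        max(
            math.exp(-squared_distance(instance, prototype) / sigma ** 2)
            for instance in bag
        )
        for prototype in instance_pool
    ]
```

Once every bag is embedded this way, any ordinary single-instance classifier can be trained on the resulting vectors.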

Owner:人民中科(北京)智能技术有限公司

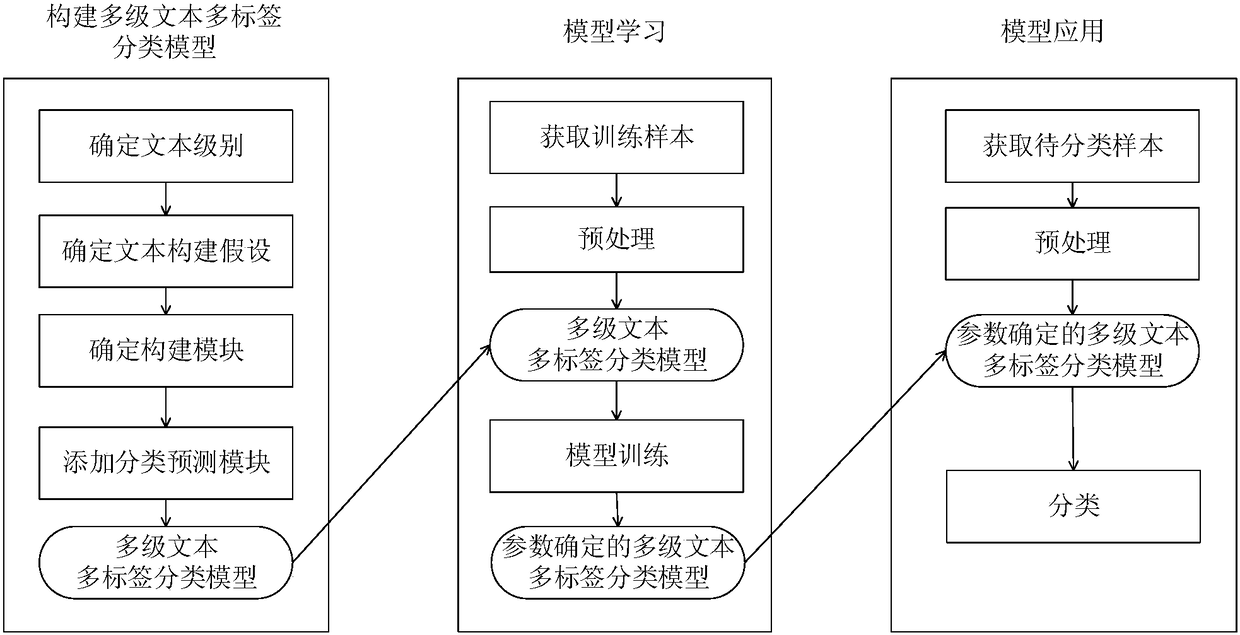

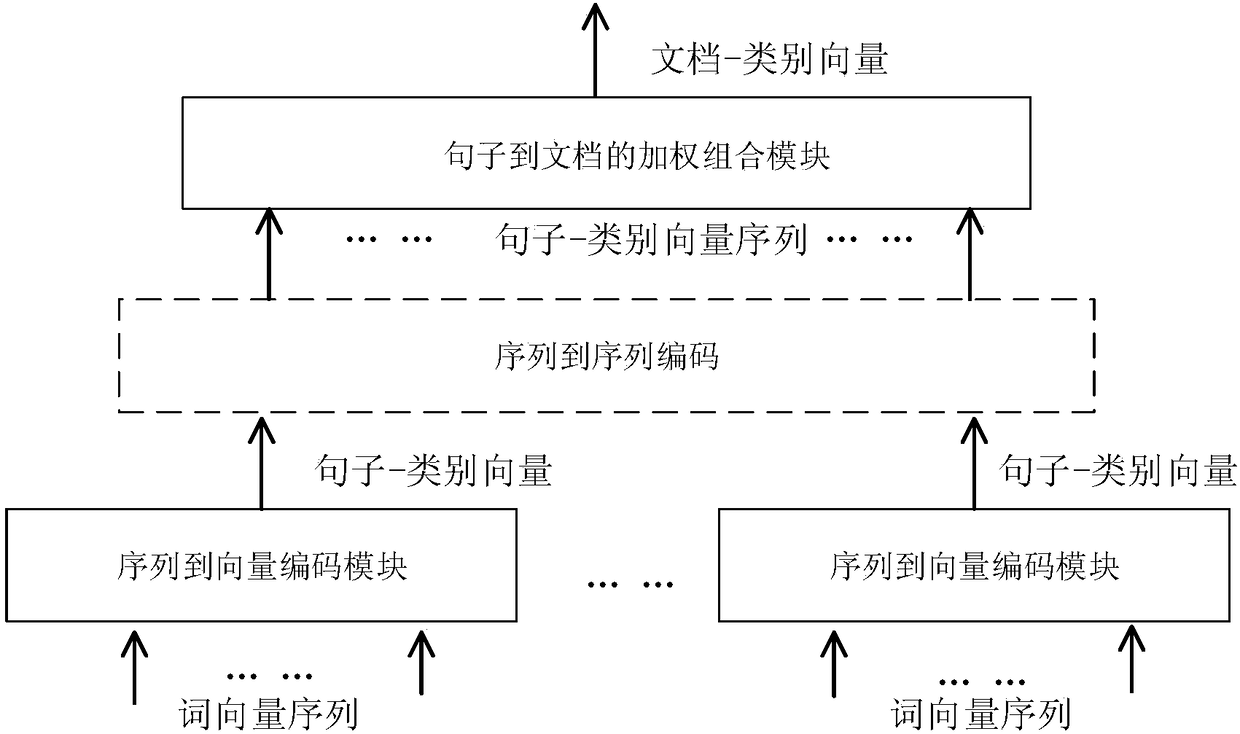

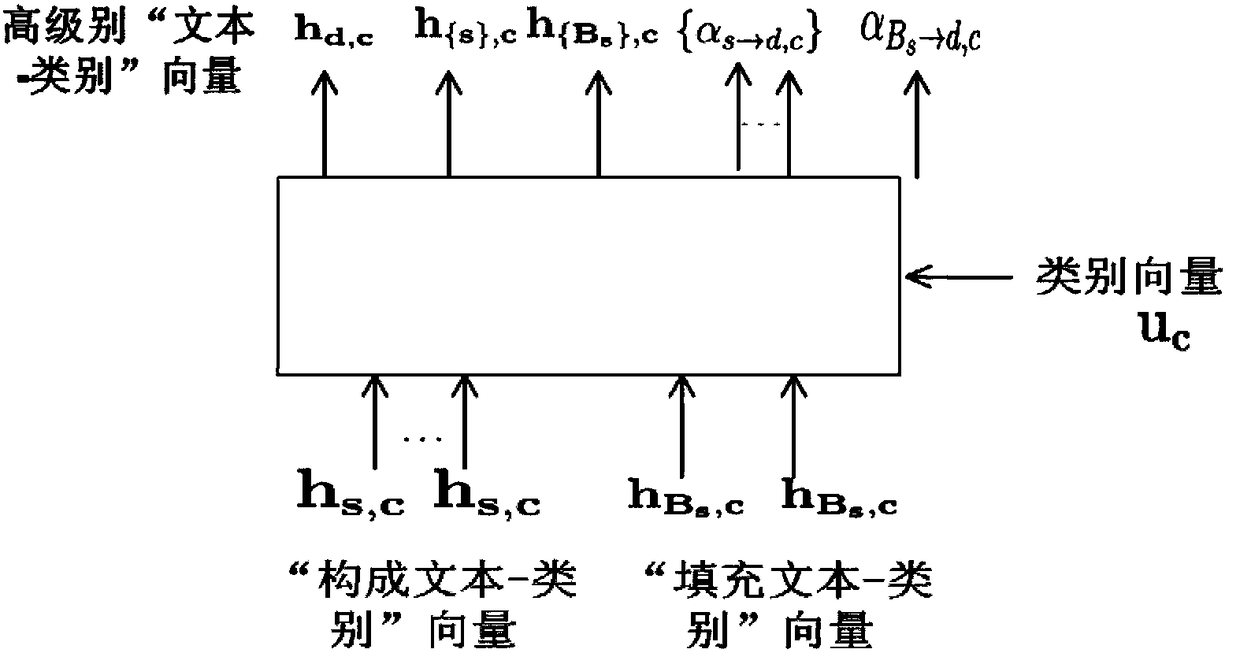

Artificial intelligence-based multi-label classification method and system of multi-level text

Active | CN108073677A | Fit closely | Improve scalability | Character and pattern recognition | Special data processing applications | Extensibility | Multi-label classification

The invention relates to an artificial intelligence-based multi-label classification method and system for multi-level text. The method includes: 1) constructing a multi-label classification model of the multi-level text with a neural network, and obtaining text class predictions for the training text from the model; 2) learning the parameters of the model from the existing text class labels in the training text and the predictions obtained in step 1), which yields a multi-label classification model with determined parameters; and 3) classifying the text to be classified with that model. The method infers the labels of the constituent text from document-level labels alone, so it applies well to scenarios where labels for the constituent text are difficult to collect. Compared with traditional multi-instance learning (MIL) methods, it introduces minimal assumptions and fits actual data better, and it scales well.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

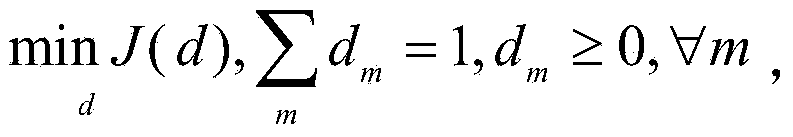

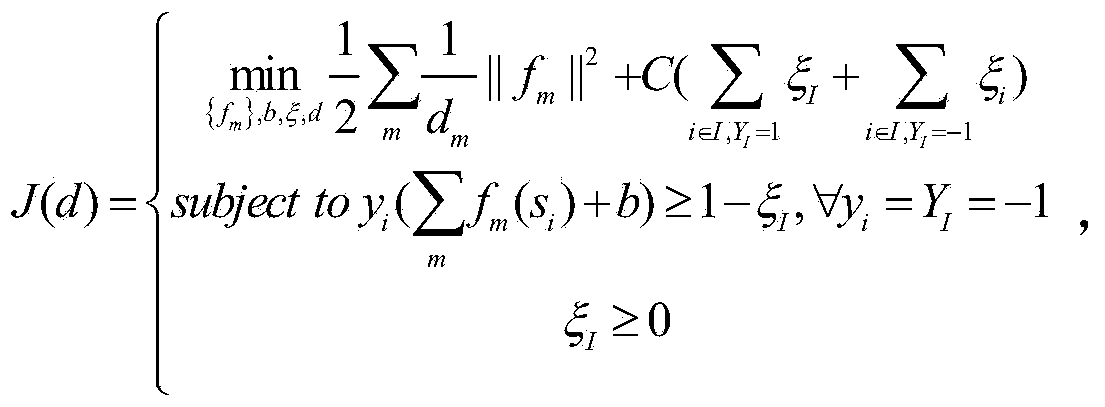

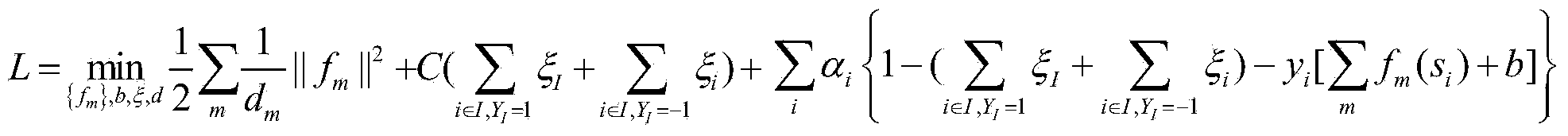

Multi-kernel support vector machine multi-instance learning algorithm applied to pedestrian re-identification

Active | CN103839084A | Improve recognition rate | Character and pattern recognition | Re identification | Pedestrian

The invention discloses a multi-kernel support vector machine multi-instance learning algorithm applied to pedestrian re-identification. The algorithm has two main steps: multi-feature description, and multi-instance learning with a multi-kernel SVM model. HSV color features and SIFT local features are extracted from two pictures of the same pedestrian, taken by camera A and camera B, to construct a bag of words; the difference vectors of the two kinds of features represent the transformation between the two cameras, serve as two instance samples, and are encapsulated as a bag. A multi-kernel support vector machine model is then optimized: the bags are used for training with a linear fusion of a Gaussian kernel and a polynomial kernel, optimal parameters are obtained through multi-instance learning, and a high identification rate is achieved.
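A linear fusion of a Gaussian and a polynomial kernel can be written as a weighted sum of the two. The sketch below is one plausible reading, not the patent's formulation: the mixing `weight`, `gamma`, `degree`, and `coef0` are hypothetical parameters that the abstract does not specify.

```python
import math


def gaussian_kernel(x, y, gamma):
    """exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def polynomial_kernel(x, y, degree, coef0):
    """(x . y + coef0) ** degree."""
    return (sum(a * b for a, b in zip(x, y)) + coef0) ** degree


def fused_kernel(x, y, weight, gamma, degree, coef0=1.0):
    """Weighted sum of the two kernels; `weight` in [0, 1] is assumed."""
    return (weight * gaussian_kernel(x, y, gamma)
            + (1.0 - weight) * polynomial_kernel(x, y, degree, coef0))
```

With `weight` in [0, 1], a non-negative `coef0`, and a positive integer `degree`, the fused function is a convex combination of two valid kernels and is therefore itself a valid kernel.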

Owner:HUZHOU TEACHERS COLLEGE

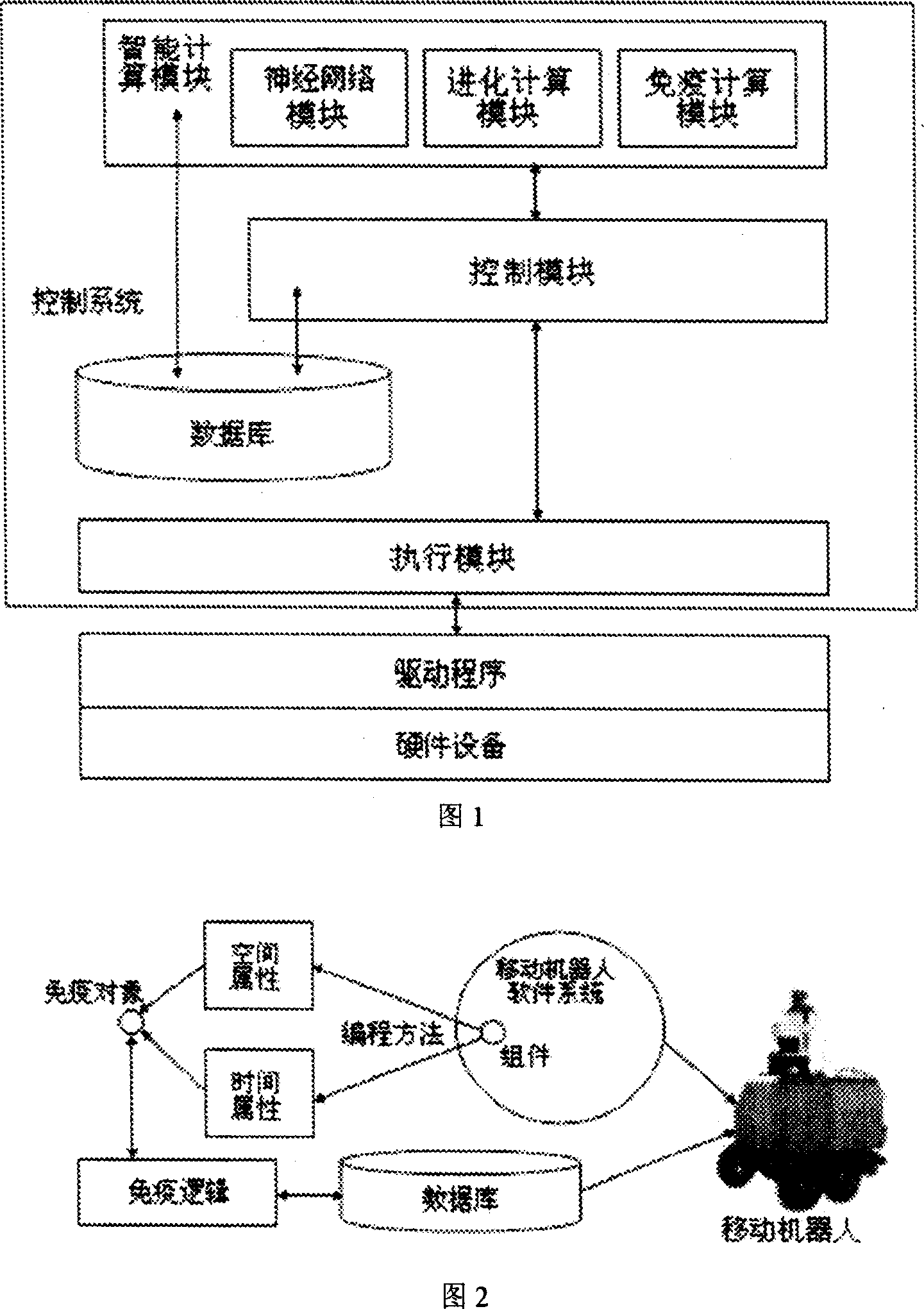

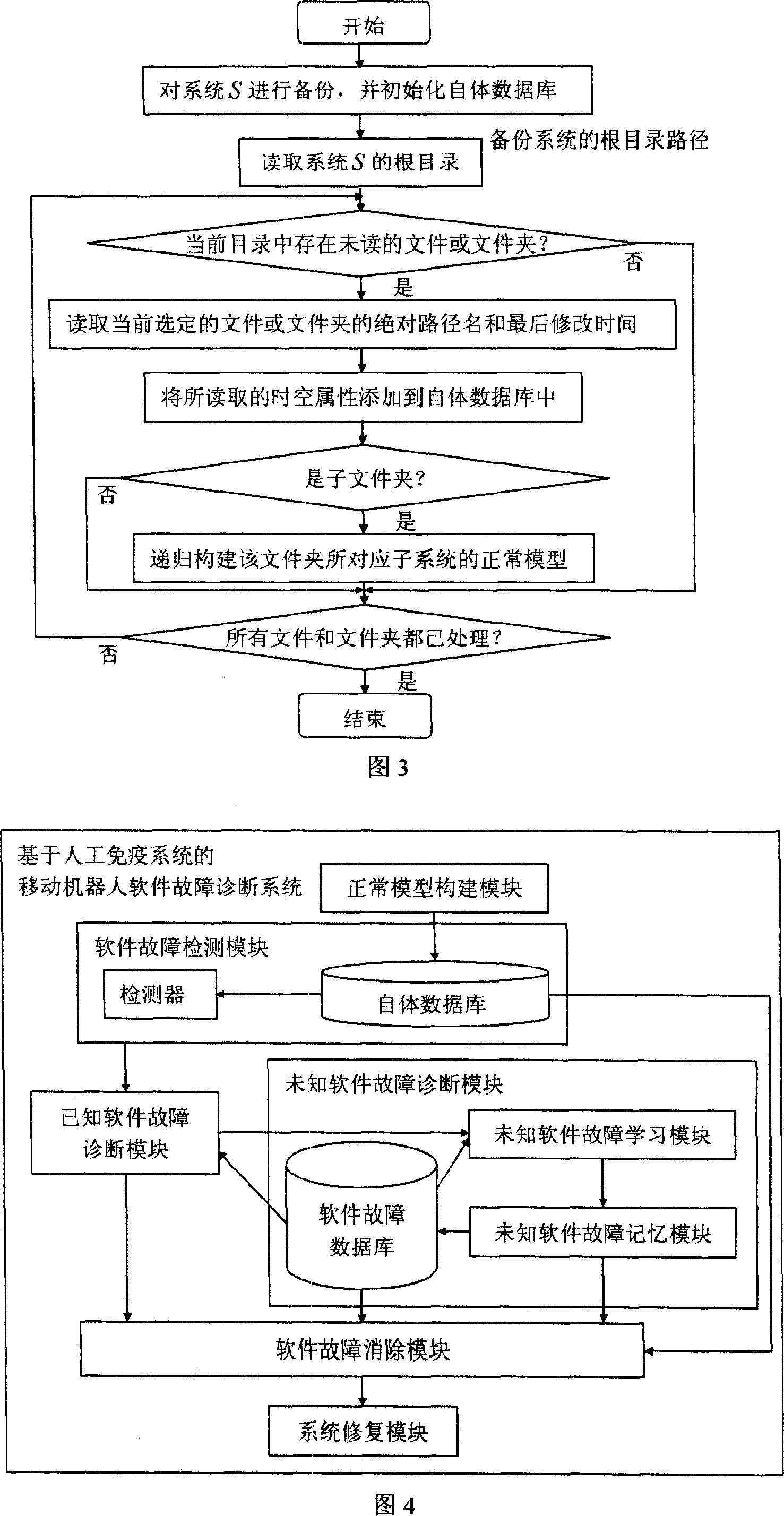

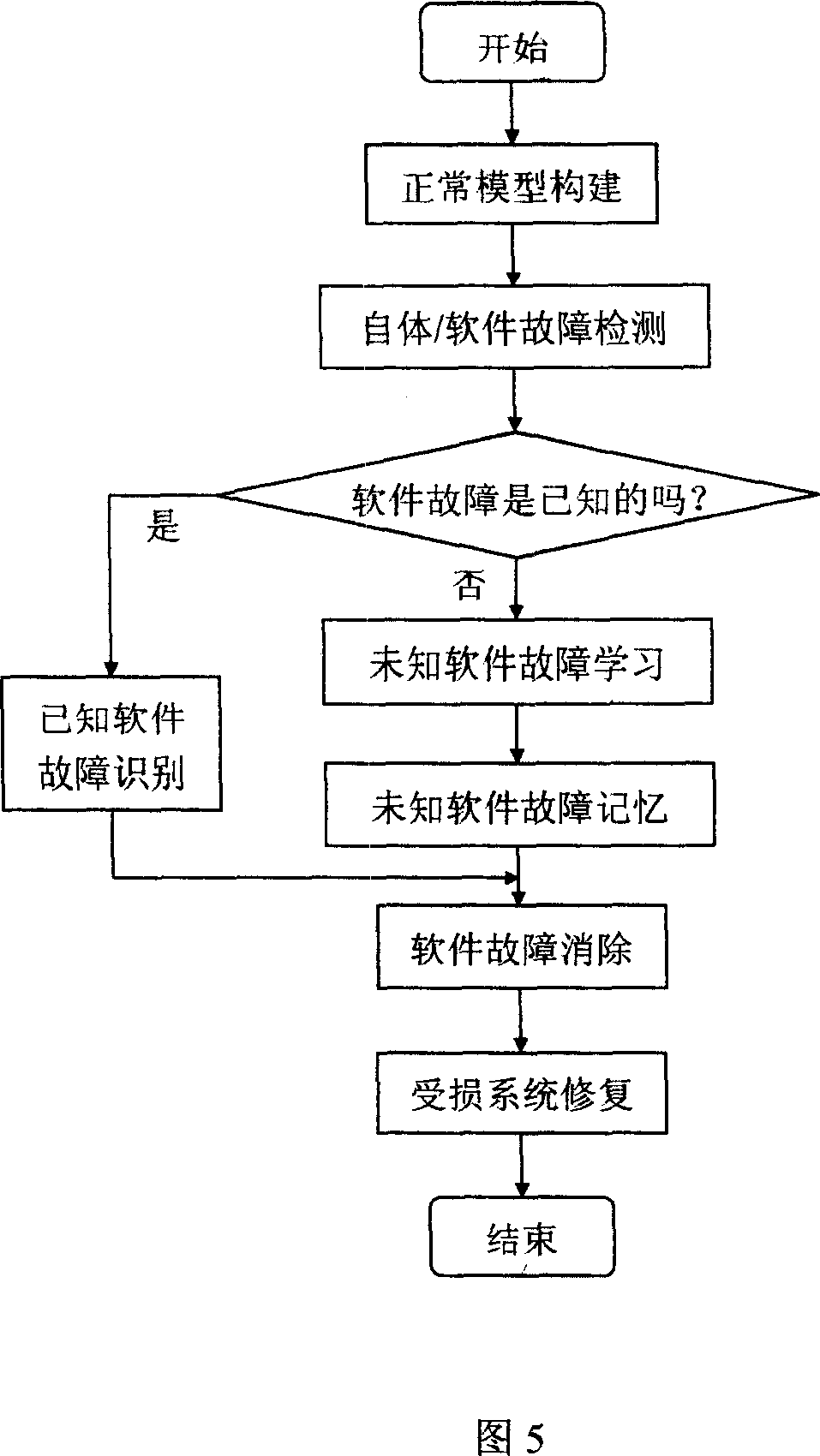

Fault diagnostic system of mobile robot software based on artificial immune system

Inactive | CN101008917A | Solving automation problems | Software testing/debugging | Special data processing applications | Artificial immune system | Nerve network

The invention relates to a mobile robot software fault diagnosis system based on an artificial immune system. It comprises a normal-model construction module, a software fault detection module, a known-fault diagnosis module, an unknown-fault diagnosis module, a software fault removal module, and a system restore module. The normal-model construction module builds a database from the mobile robot software system; the software fault detection module checks the whole software for faults; and the normal model is determined by the time and space properties of all normal components.

Owner:CENT SOUTH UNIV

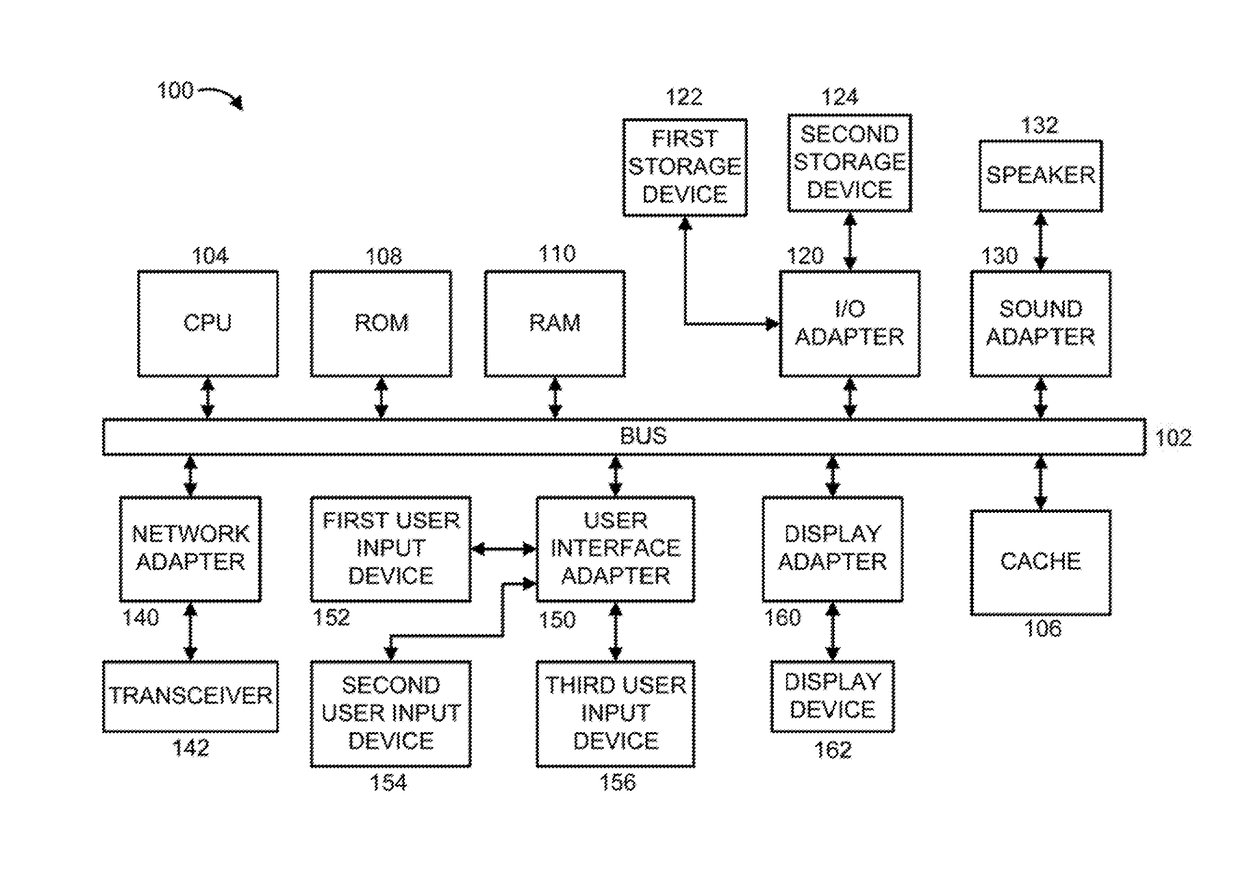

Deep high-order exemplar learning for hashing and fast information retrieval

Inactive | US20170293838A1 | Improve efficiency | Still image data retrieval | Neural architectures | Feature vector | Data set

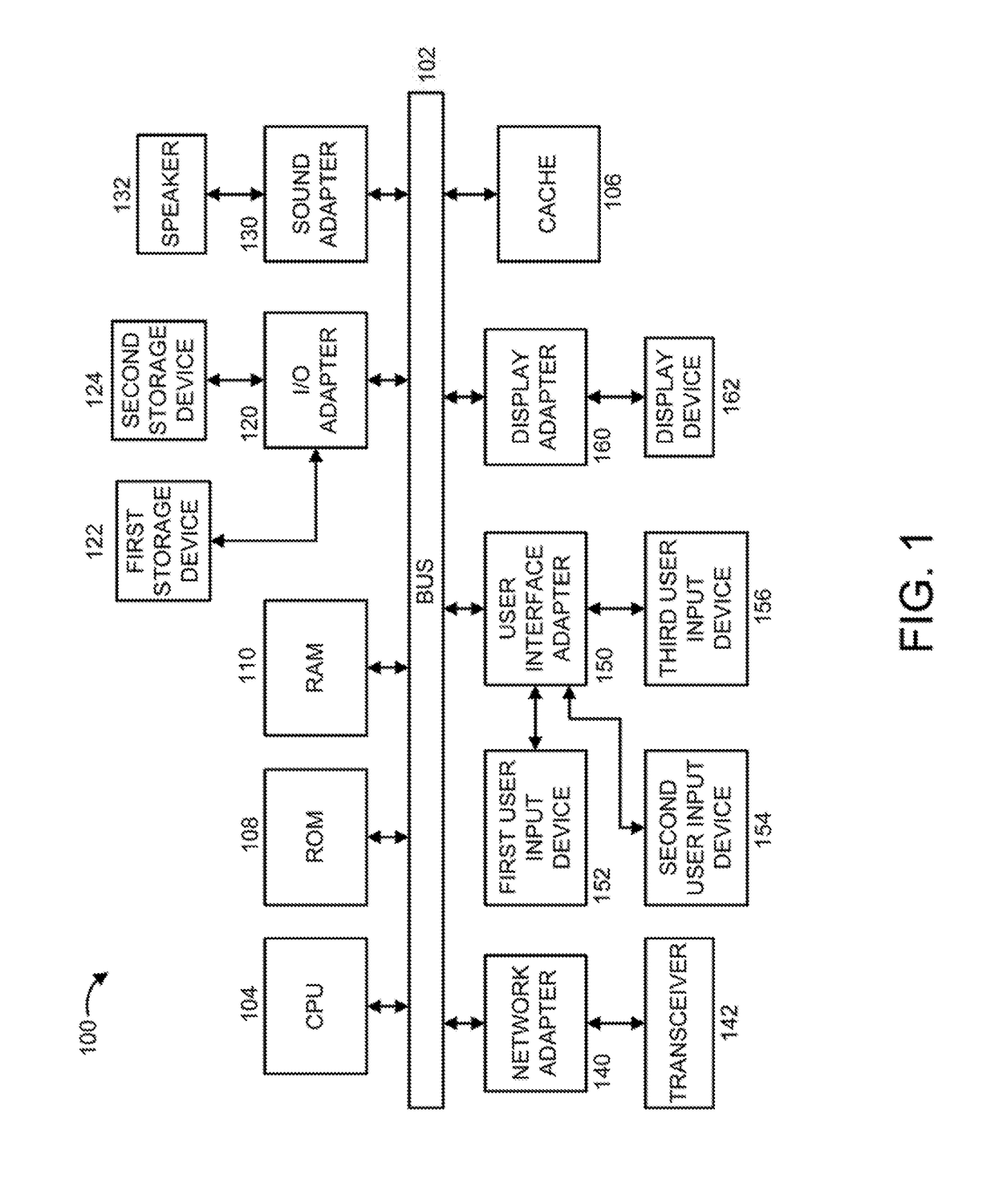

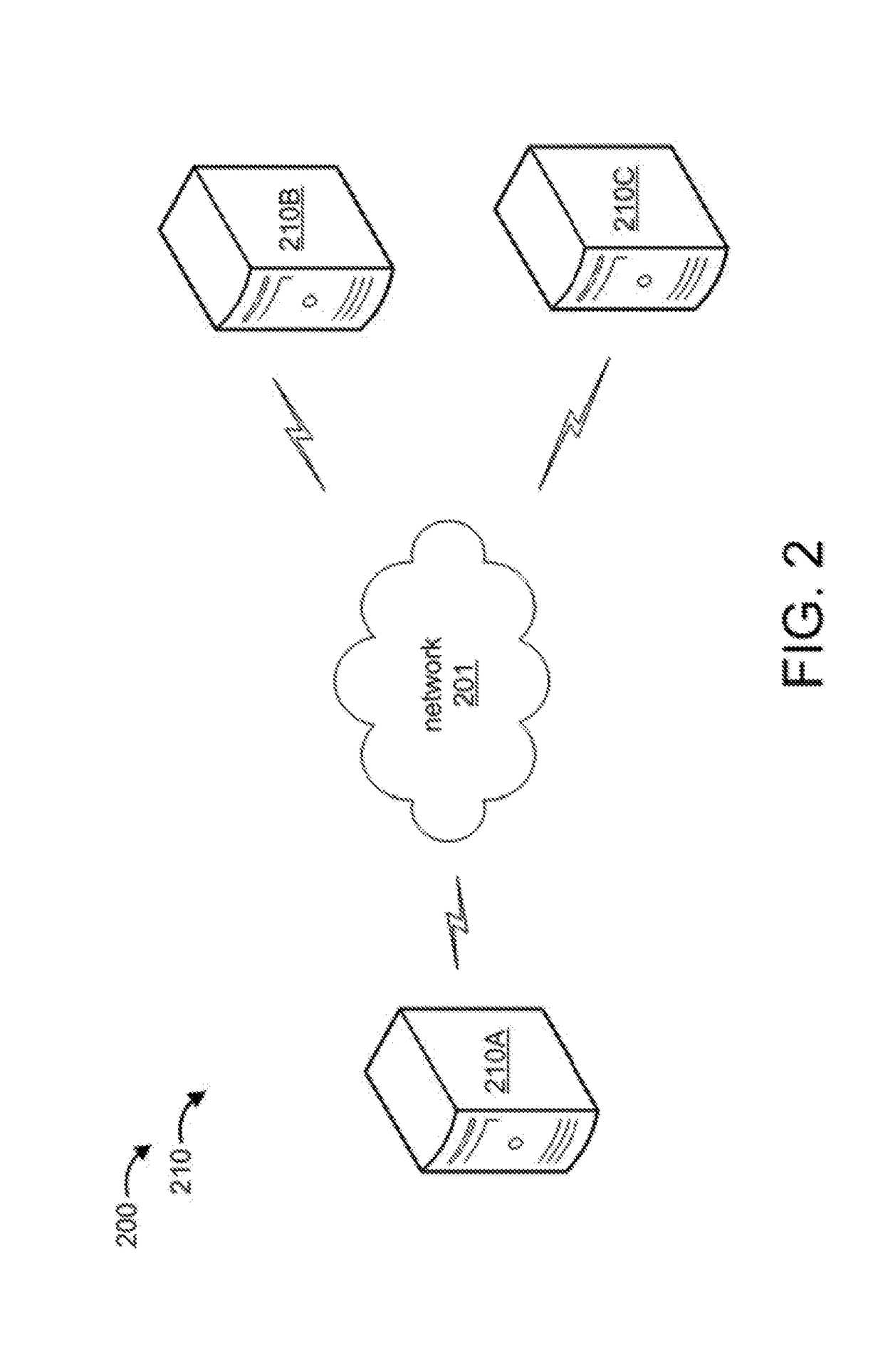

A system and method are provided for deep high-order exemplar learning of a data set. Feature vectors and class labels are received. Each of the feature vectors represents a respective one of a plurality of high-dimensional data points of the data set. The class labels represent classes for the high-dimensional data points. Each of the feature vectors is processed, using a deep high-order convolutional neural network, to obtain respective low-dimensional embedding vectors within each class. A minimization operation is performed on high-order embedding parameters of the high-dimensional data points to output a set of synthetic exemplars. A binarizing operation is performed on the low-dimensional embedding vectors and the set of synthetic exemplars to output hash codes representing the data set. The hash codes are utilized as a search key to increase the efficiency of a processor-based machine searching the data set.
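To make the final two sentences concrete, the sketch below binarizes low-dimensional embeddings into hash codes and searches them by Hamming distance. It covers only that step: the deep network and the exemplar minimization are not shown, and thresholding each coordinate at zero is an assumption, since the abstract does not define the binarizing operation.

```python
def binarize(embedding):
    """Turn a real-valued embedding into a tuple of bits (assumed rule: > 0)."""
    return tuple(1 if value > 0 else 0 for value in embedding)


def hamming(code_a, code_b):
    """Number of positions at which two equal-length codes differ."""
    return sum(a != b for a, b in zip(code_a, code_b))


def nearest(query_code, database):
    """Return the key in `database` whose code is closest to `query_code`.

    `database` maps an item key to its hash code.
    """
    return min(database, key=lambda key: hamming(query_code, database[key]))
```

Comparing short binary codes is far cheaper than comparing the original high-dimensional vectors, which is where the search-efficiency gain comes from.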

Owner:NEC LAB AMERICA

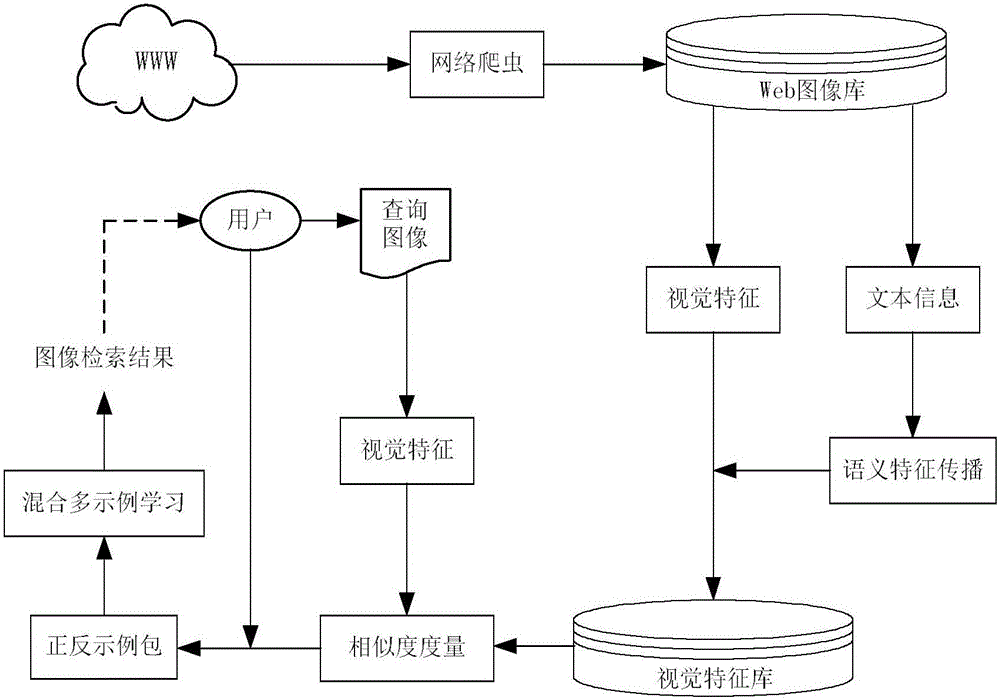

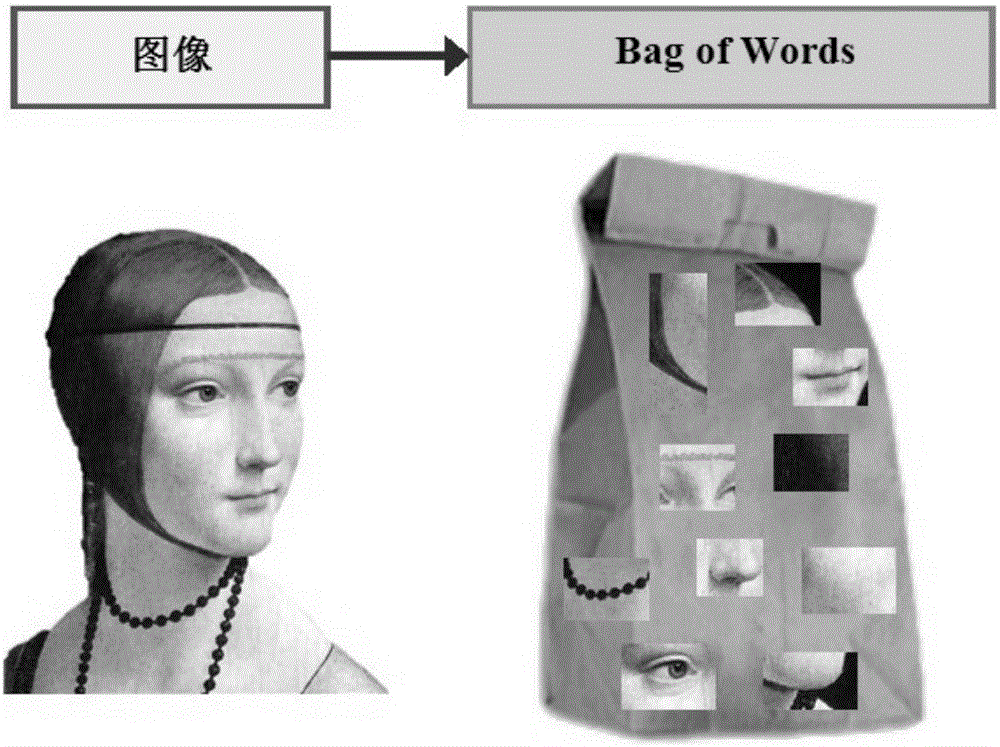

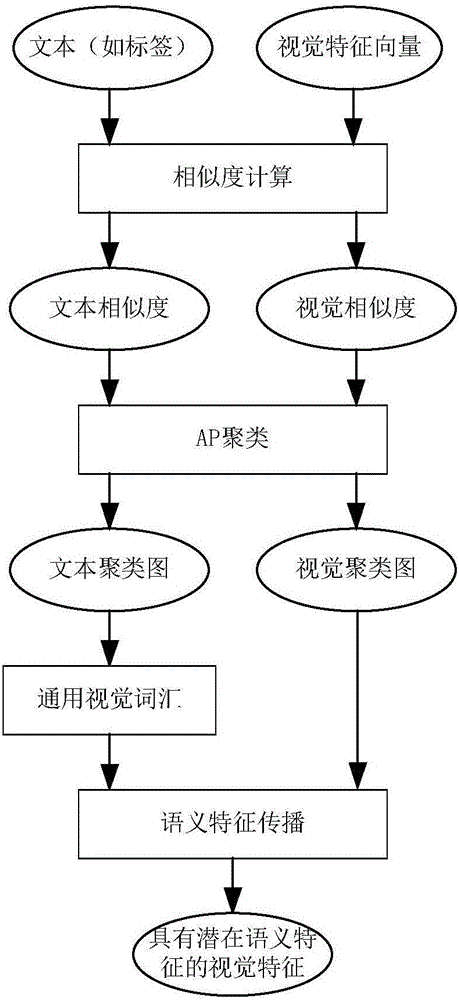

Semantic propagation and mixed multi-instance learning-based Web image retrieval method

Active | CN106202256A | Reduce Extraction Complexity | Improve classification performance | Character and pattern recognition | Special data processing applications | Small sample | The Internet

The invention belongs to the technical field of image processing and provides a Web image retrieval method based on semantic propagation and hybrid multi-instance learning. Web image retrieval is performed by combining the visual features of images with their text information. The method first represents the images as bag-of-words (BoW) models, then clusters them by visual similarity and text similarity, and propagates the semantic features of the images into their visual feature vectors through the visual vocabulary shared within a text class. In the relevance feedback stage, a hybrid multi-instance learning algorithm is introduced to address the small-sample problem that arises in actual retrieval. Compared with a conventional content-based image retrieval (CBIR) framework, the method uses the text information of internet images to propagate semantic features to visual features across modalities, and introduces semi-supervised learning into multi-instance relevance feedback to cope with the small-sample problem, so the semantic gap is effectively narrowed and Web image retrieval performance improves.

Owner:XIDIAN UNIV

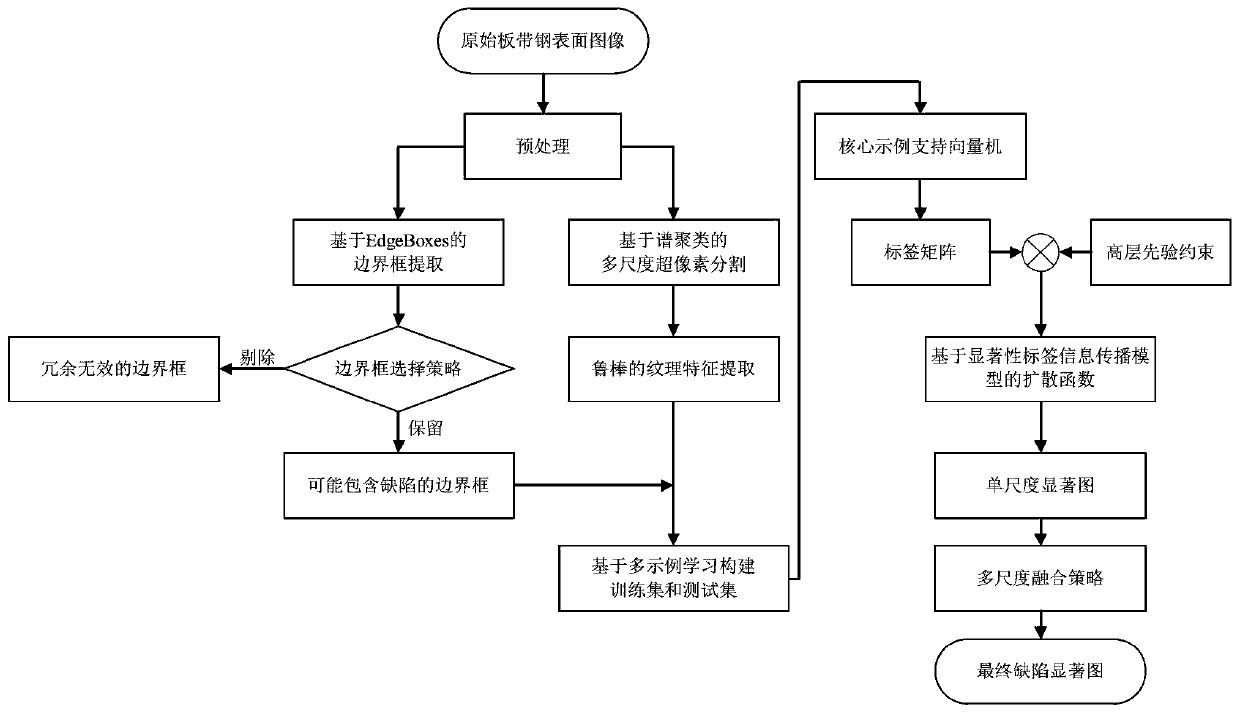

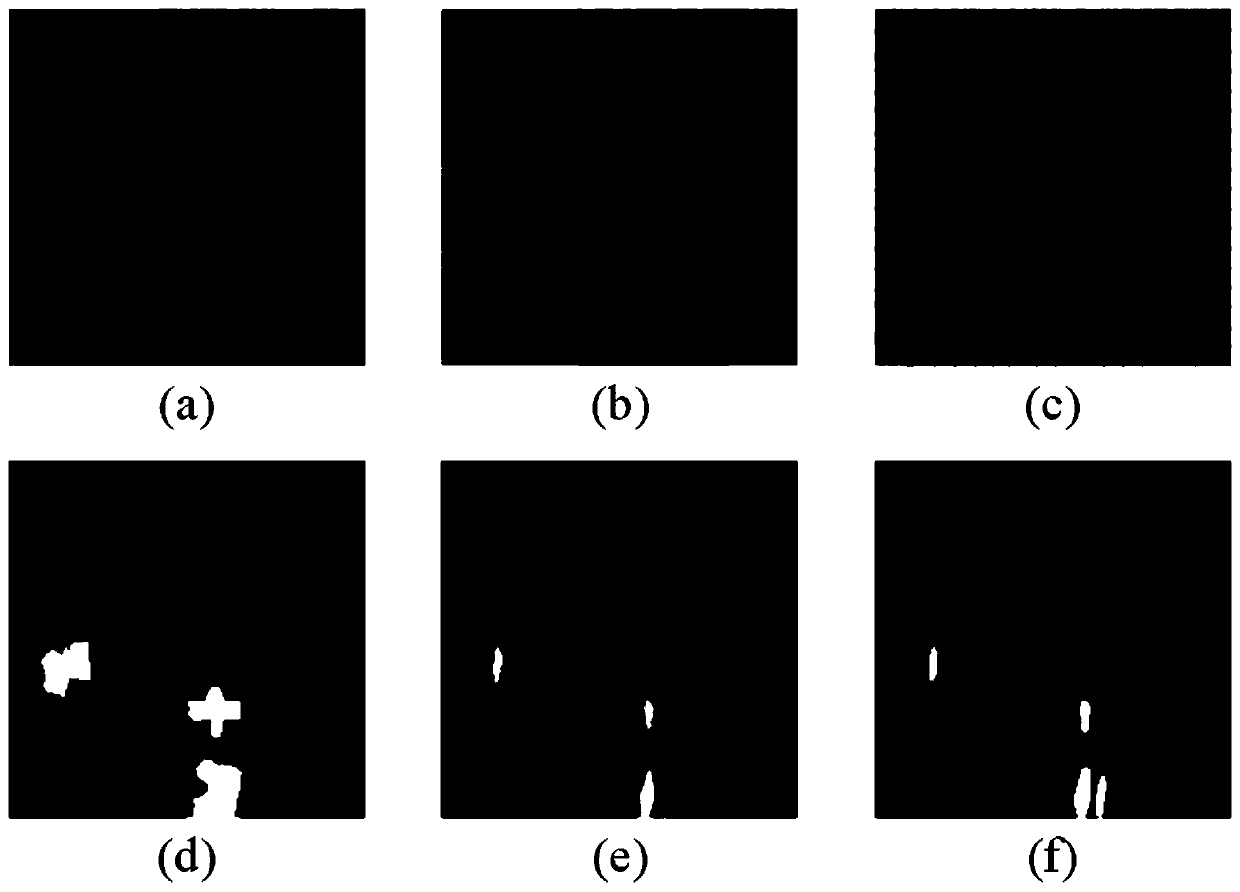

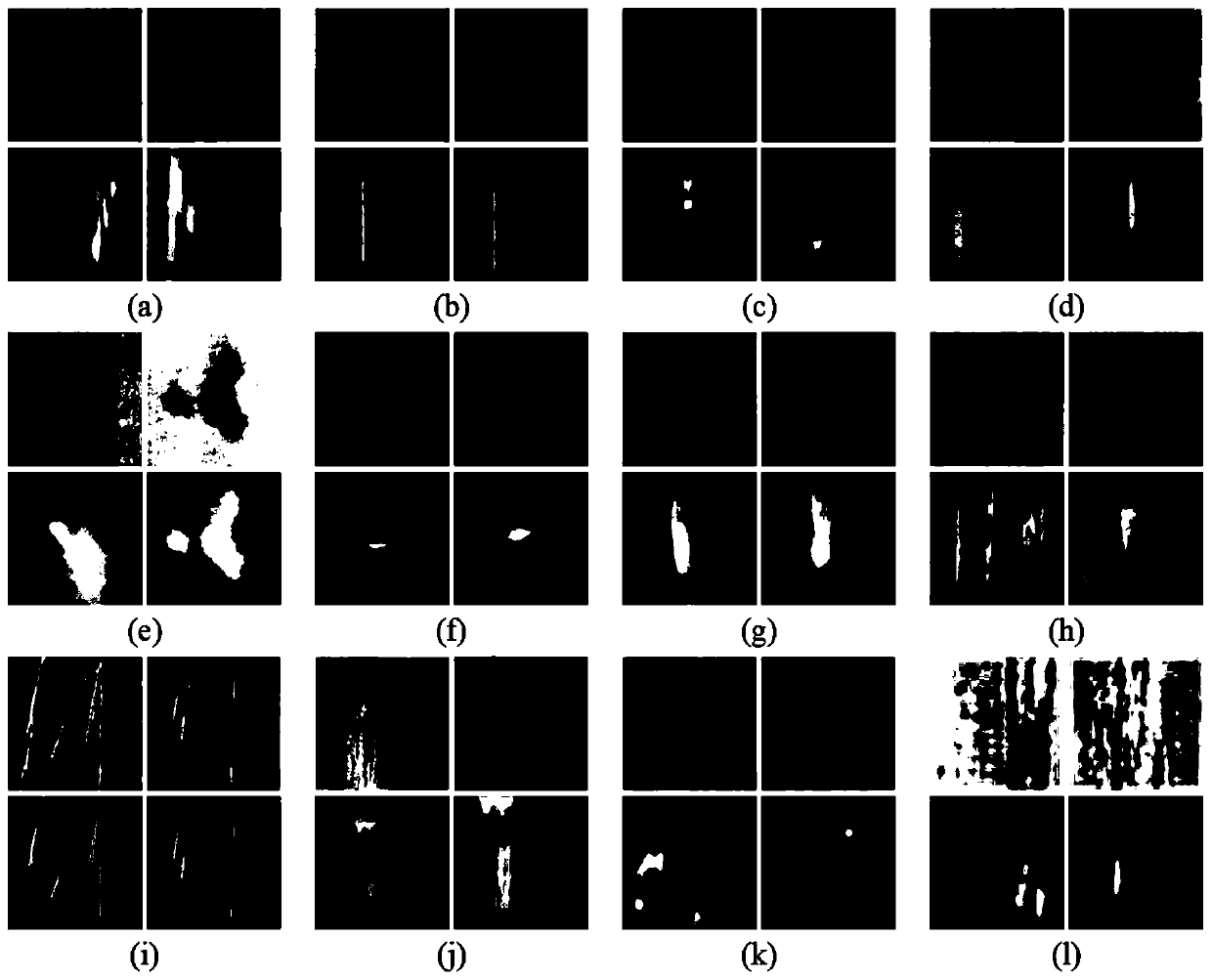

Plate and strip steel surface defect detection method based on saliency label information propagation model

Active | CN110717896A | Improve detection accuracy | No human intervention required | Image enhancement | Image analysis | Feature vector | Saliency map

The invention relates to the technical field of industrial surface defect detection, and provides a method for detecting surface defects of plate and strip steel based on a saliency label information propagation model. The method comprises the following steps: first, a surface image I of the plate or strip steel is acquired; next, bounding boxes are extracted from image I and a bounding box selection strategy is executed; then, superpixel segmentation is performed on image I and a feature vector is extracted from each superpixel; then, the saliency label information propagation model is constructed: a training set is built under a multi-instance learning framework to train a KISVM-based classification model, the test set is classified with the trained model to obtain a category label matrix, a smoothness constraint term and a high-level prior constraint term are calculated, and the diffusion function is optimized and solved; finally, a single-scale saliency map is computed at each of several scales, and the final defect saliency map is obtained through multi-scale fusion. Surface defects of strip steel can be detected efficiently, accurately, and adaptively; a complete defect target is highlighted uniformly, and non-salient background regions are effectively suppressed.

Owner:NORTHEASTERN UNIV

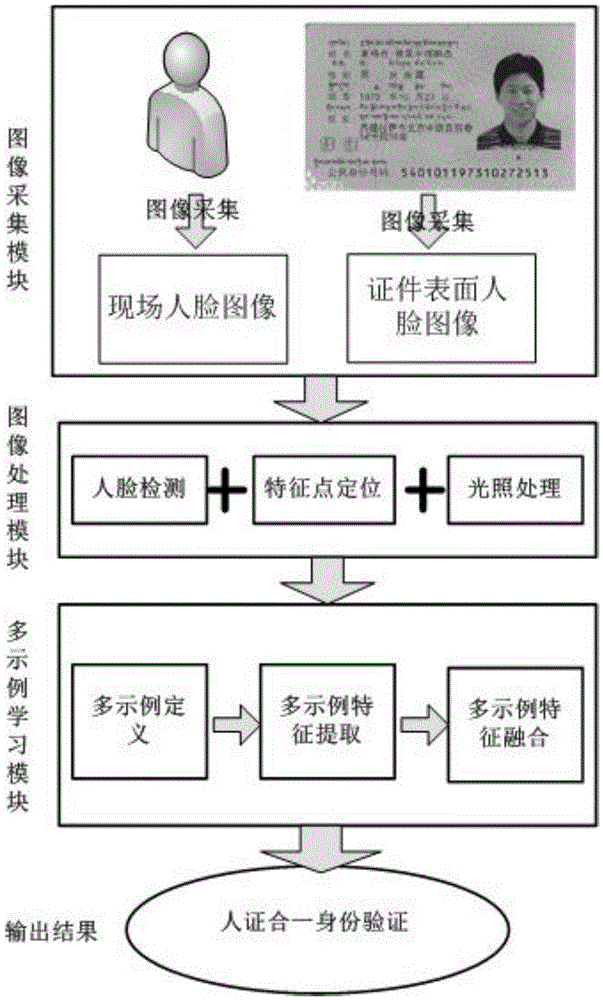

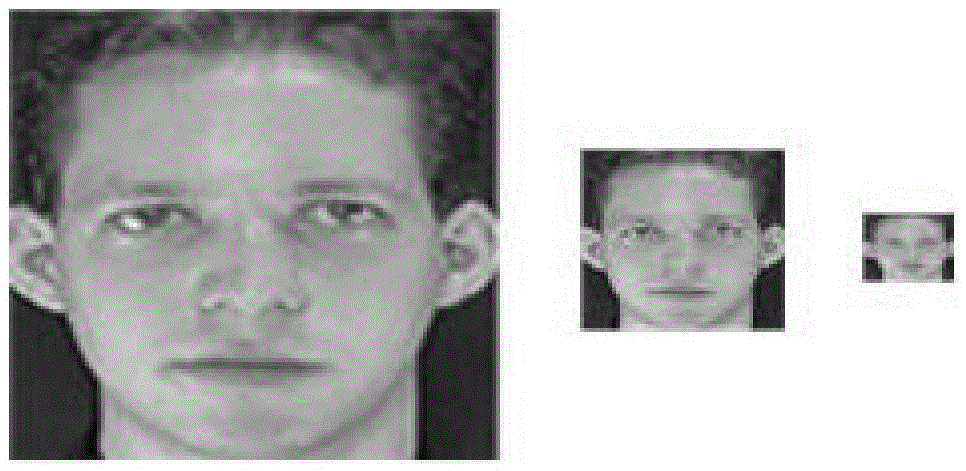

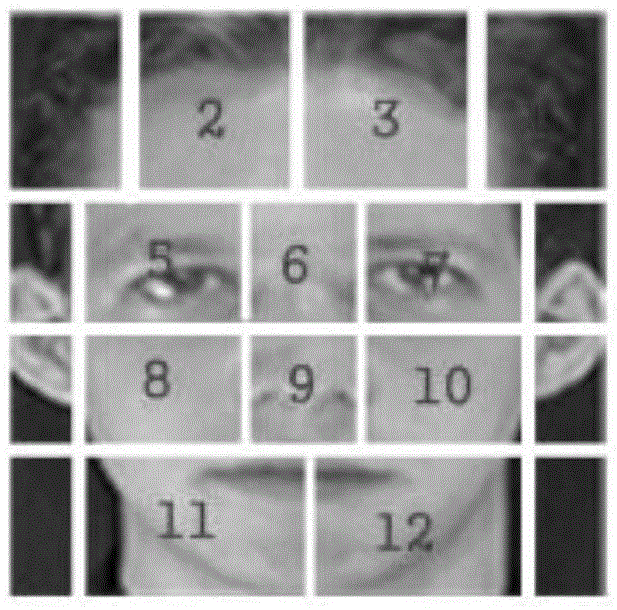

Face comparing verification method based on multi-instance learning

Active | CN105469076A | Complementary | Fast | Character and pattern recognition | Face detection | Validation methods

The invention discloses a face comparison and verification method based on multi-instance learning, applied to scenarios that check whether a person matches an identity document. The method performs face verification based on the idea of multi-instance learning, and comprises the following steps: S1, face image preprocessing; S2, face multi-instance learning training; and S3, face verification. The preprocessing step comprises face detection, feature point localization, and DoG illumination processing; the training step comprises face multi-instance definition, multi-instance feature extraction, and multi-instance feature fusion; and the verification step checks face consistency according to the weight of each instance and the similarity of the matched instances from step S2. The method addresses the difficulty that changes in hairstyle, skin color, makeup, minor cosmetic surgery, and the like pose for face comparison, provides an effective algorithm and approach for face verification, and improves its reliability. The method can be widely applied to checking whether documents such as a second-generation ID card, a passport, a driving license, or a student ID card are held by their owners.

Owner:GUANGDONG MICROPATTERN SOFTWARE CO LTD

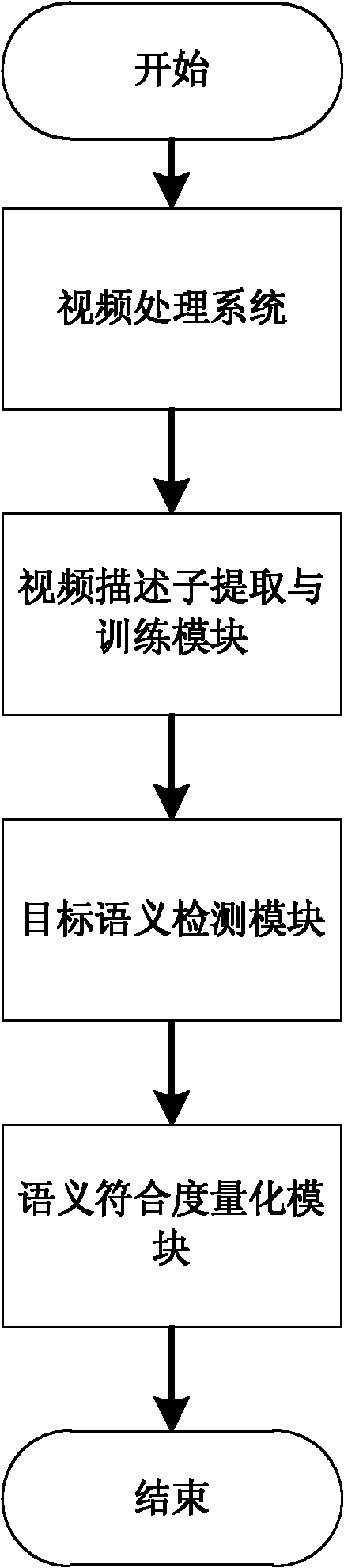

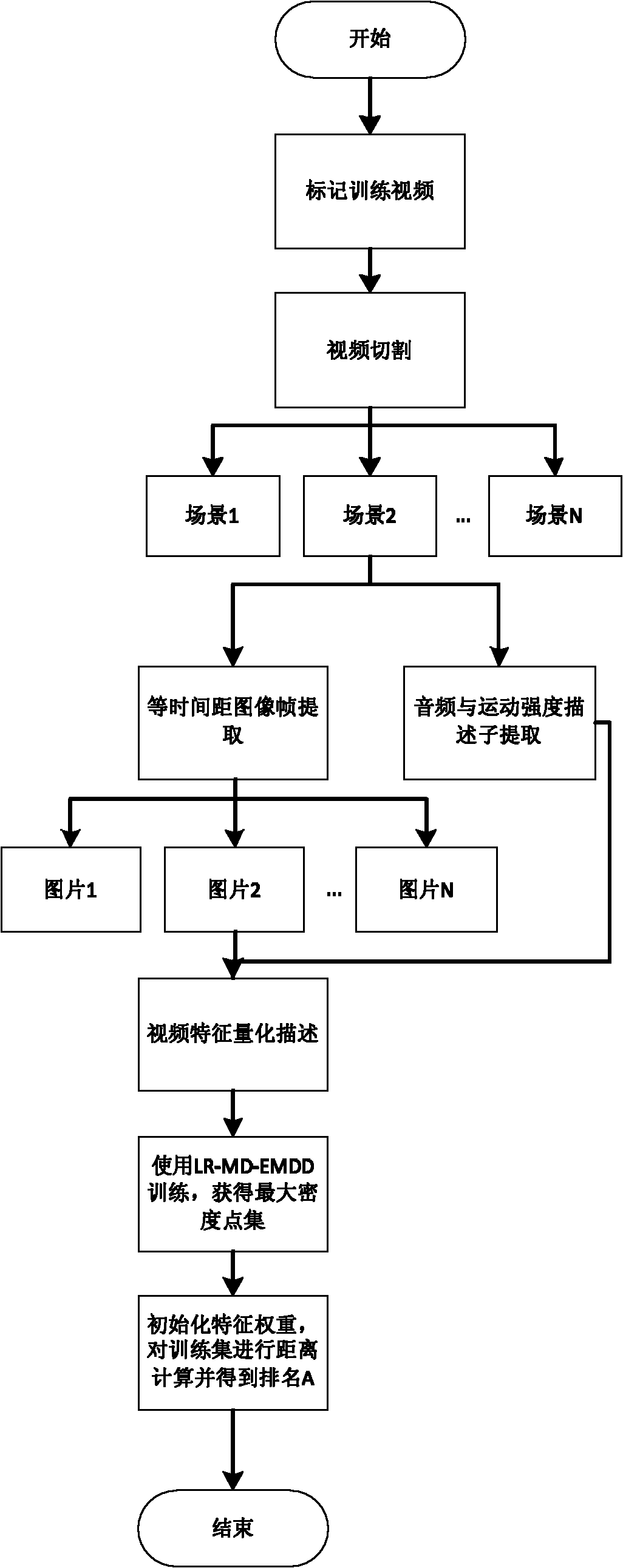

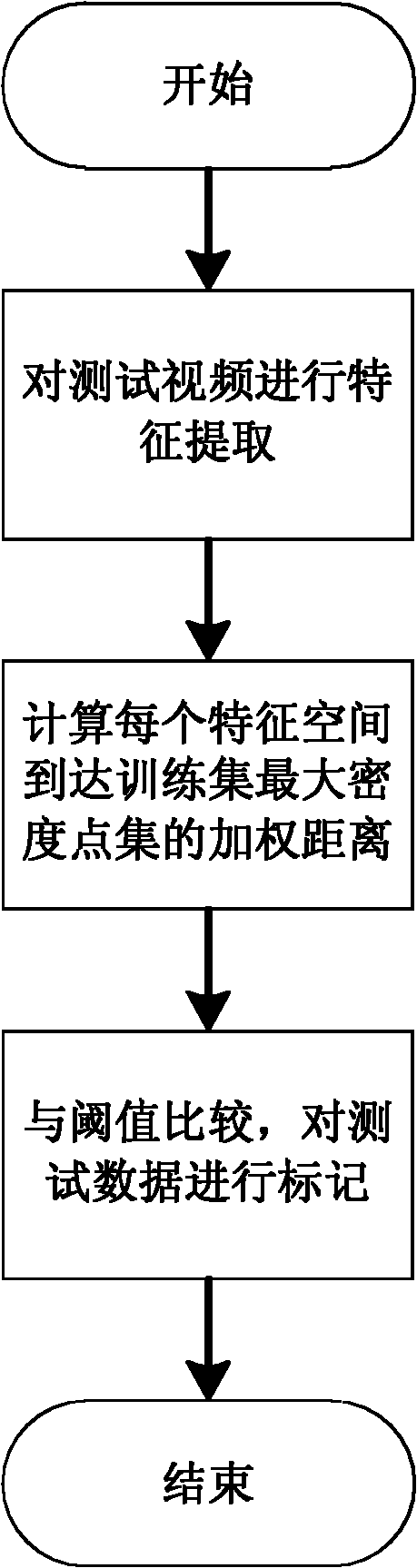

Method for detecting specific contained semantics of video based on grouped multi-instance learning model

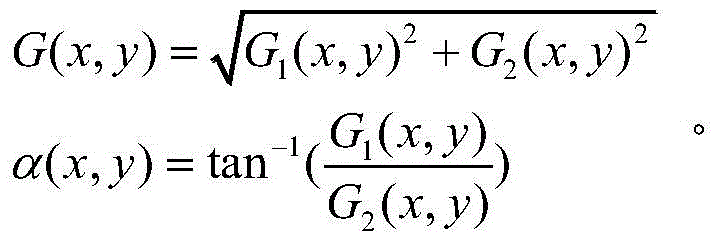

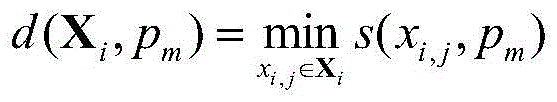

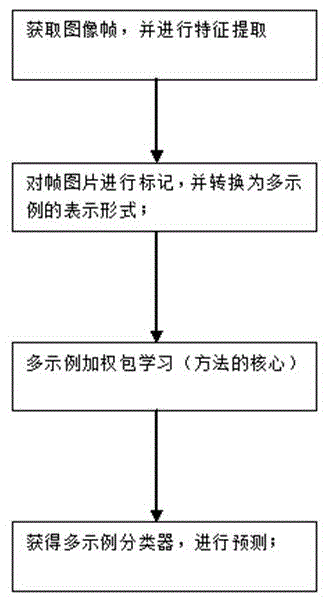

The invention relates to a method, in the technical field of computer video processing, for detecting specific semantic content in a video based on a grouped multi-instance learning model. It comprises the following steps: cutting the video at shot boundaries to obtain a plurality of video segments; using the FFMPEG tool to extract image descriptors for each video segment Sij, with an average of 25 pictures captured from each segment at equal intervals; extracting the related audio descriptors from the video's audio track, the video descriptors from the set of video screenshots, and the degree of motion from the video; performing machine learning on each set of descriptors; and, once the learning result is obtained, computing the Euclidean distance between the learning result and the corresponding descriptor of a target video, and taking the minimum value obtained as the closeness of the original video to the target video under that descriptor.

Owner:SHANGHAI JIAO TONG UNIV

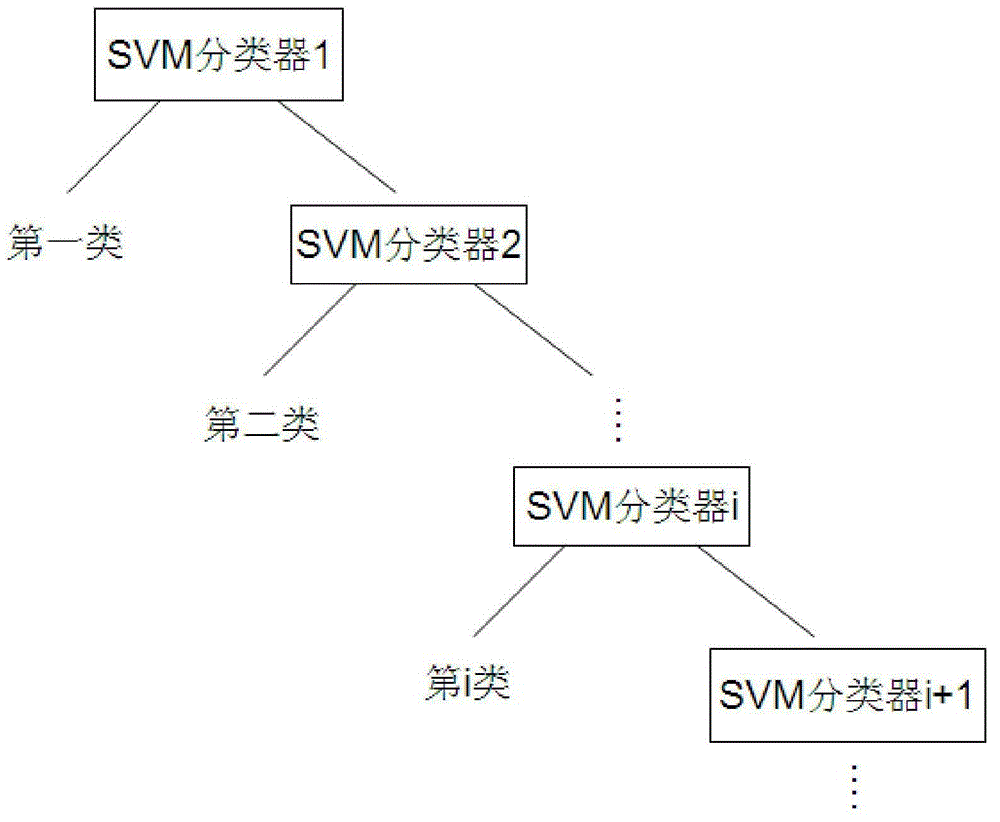

Image multi-tag marking algorithm based on multi-example package feature learning

Active | CN105678309A | Improve discrimination ability | Enhanced Semantic Information | Image enhancement | Image analysis | Feature learning | Histogram of oriented gradients

The invention discloses an image multi-label annotation algorithm based on multi-instance bag feature learning. The algorithm comprises: obtaining the set of image blocks of all training images; extracting color histogram features and histogram-of-oriented-gradients features from each image block of the training set; treating each training image as an image bag, which yields the bag structure required by the multi-instance learning framework; pooling the instances of all image bags in the set into a projection instance set, projecting each image bag onto that set, and obtaining the projection features of the image bags; selecting the most discriminative features as the classification features of the image bags; and feeding the learned classification features of the training set into an SVM classifier for training, obtaining the parameters of the trained model, and predicting the labels of a test image with the trained SVM classifier. The algorithm is simple to implement, its training procedure is mature and reliable, prediction is fast, and it handles multiple image labels well.

Owner:SHANDONG INST OF BUSINESS & TECH

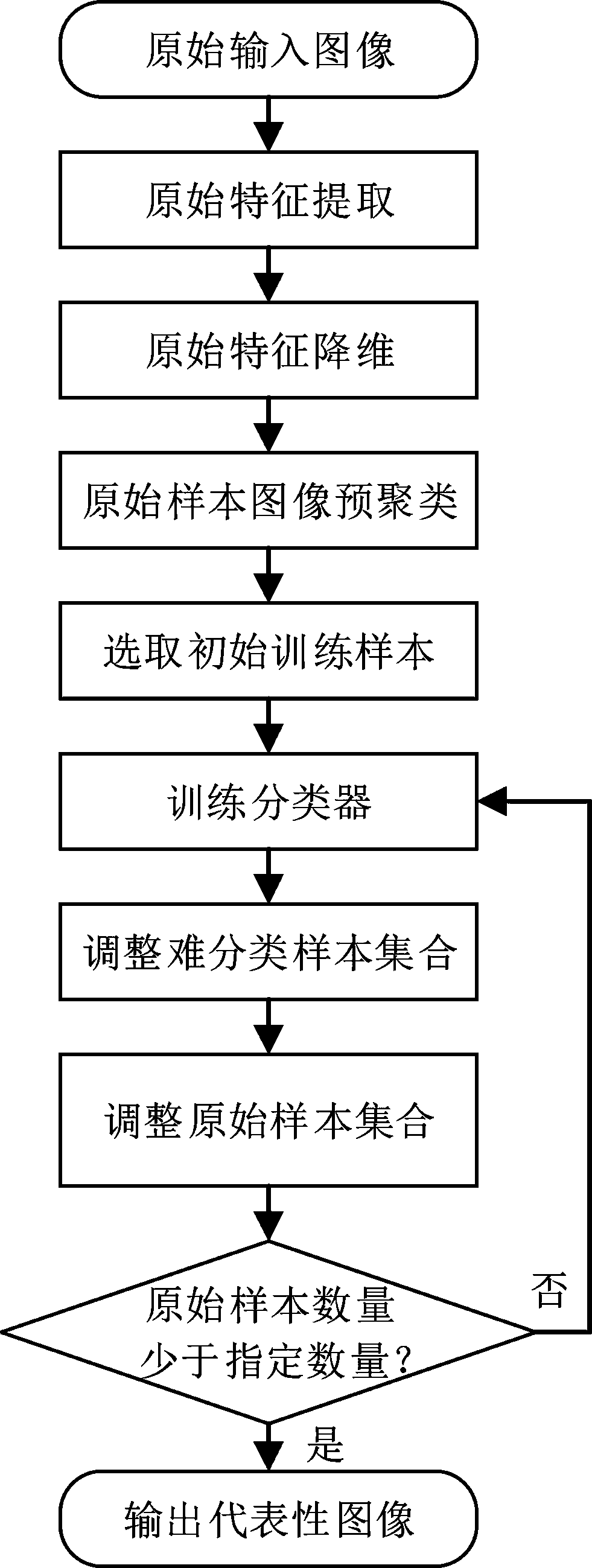

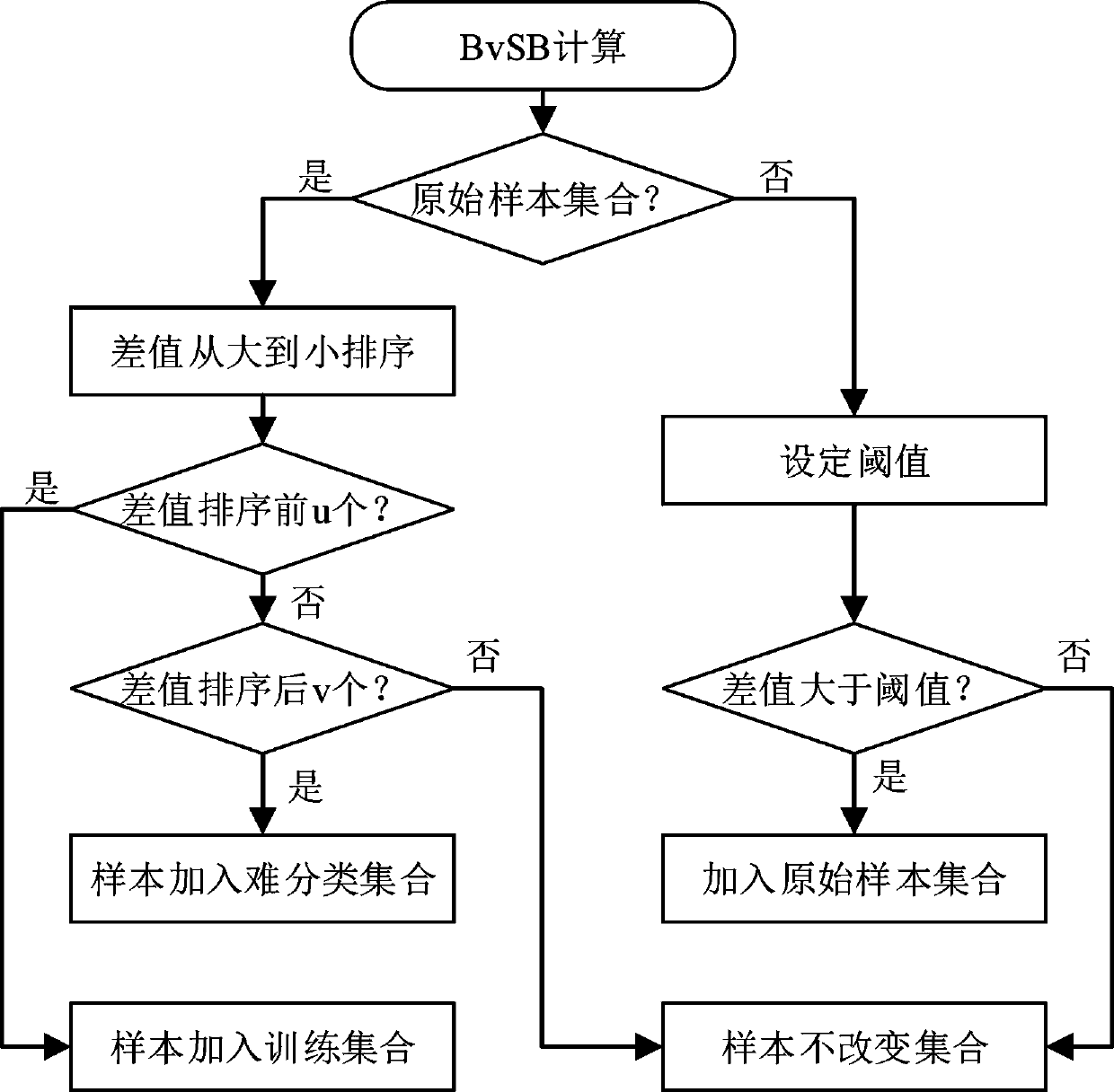

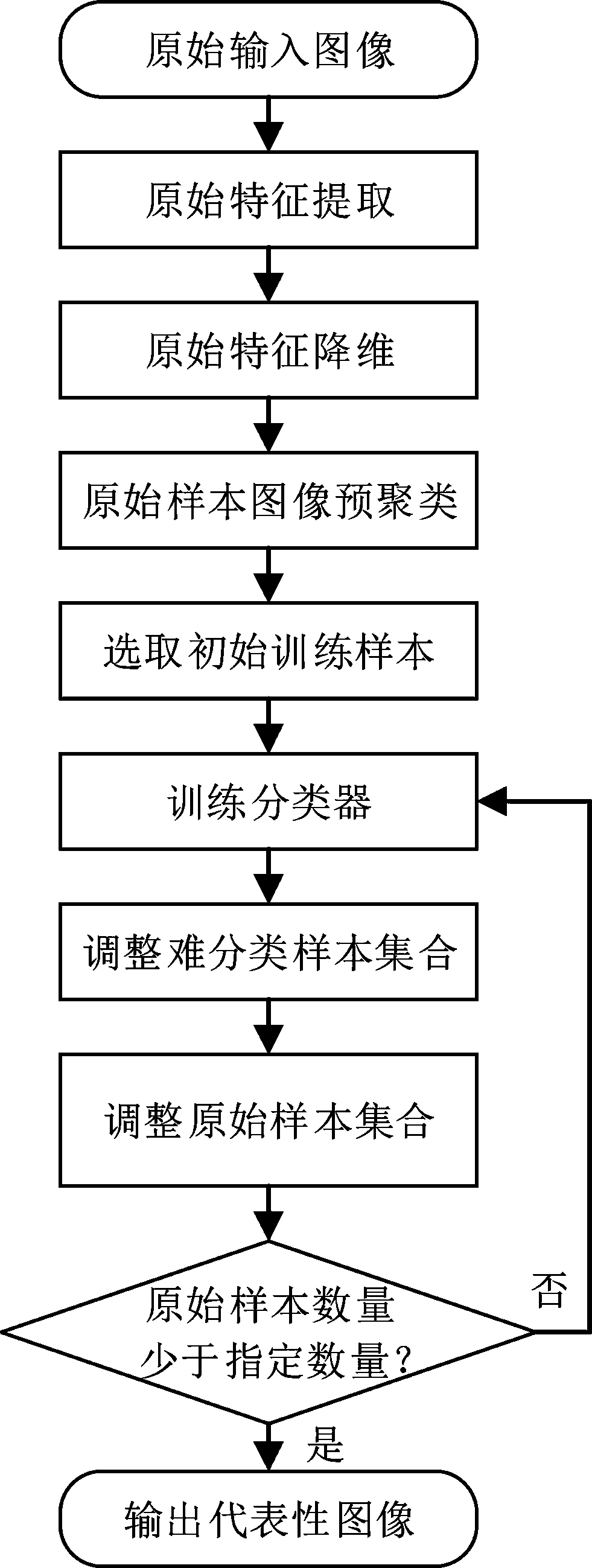

Representative image selection method based on multi-example active learning

The invention relates to the field of machine learning, and specifically to a representative image selection method based on multi-instance active learning, which comprises the following steps: (1) extracting the original features of the images; (2) reducing the dimensionality of the original features; (3) pre-clustering the original sample images using the reduced features; (4) selecting initial training samples; (5) training a classifier; (6) adjusting the set of hard-to-classify samples; (7) adjusting the original sample set; (8) repeating steps (5) to (7) iteratively until convergence; and (9) outputting the representative images. Through pre-clustering, multi-instance learning, and active learning, the method screens out of the original samples the subset that contributes most to the classifier's accuracy. Labeling only these samples for other machine learning tasks reduces the manual labeling effort, filters out part of the noisy samples, and keeps those tasks running effectively.

Owner:ZHEJIANG UNIV OF TECH

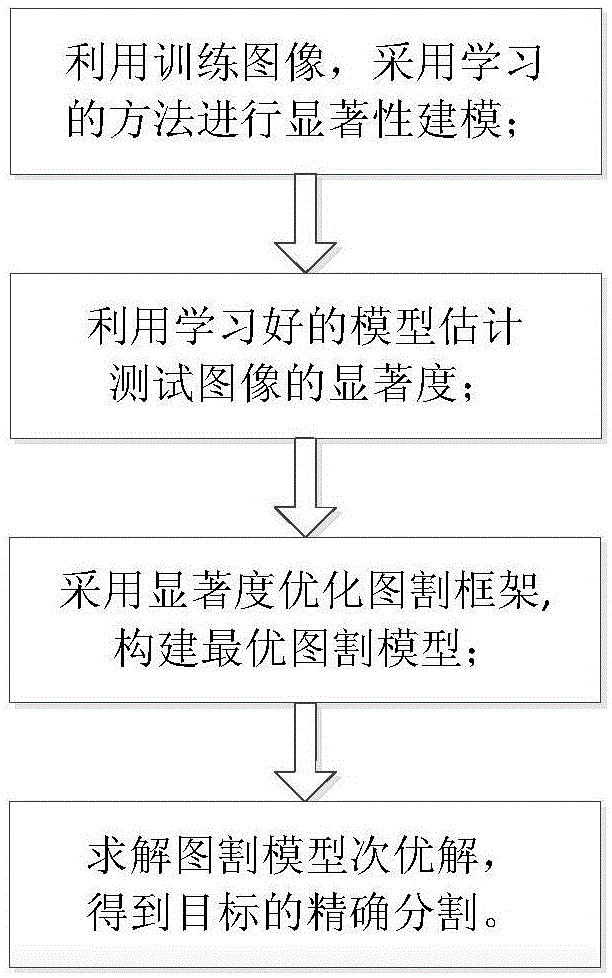

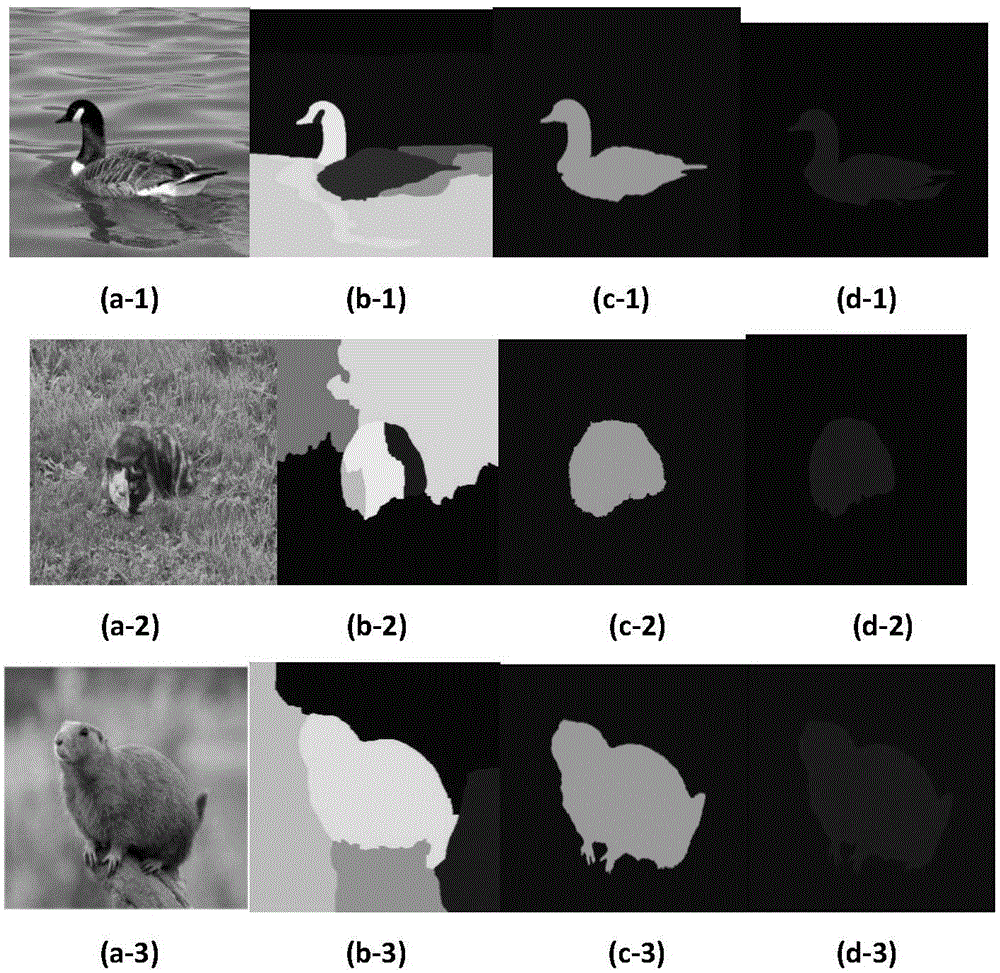

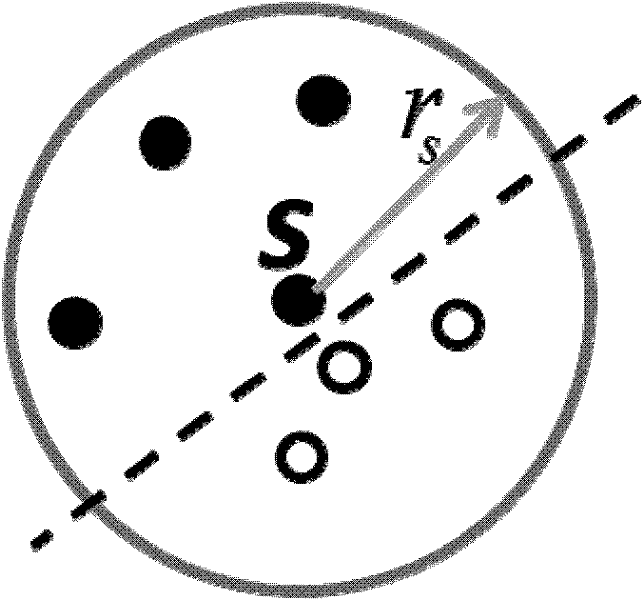

Object segmentation method based on multiple-instance learning and graph cuts optimization

Active | CN105069774A | Image analysis | Character and pattern recognition | Pattern recognition | Cluster algorithm

The invention discloses an object segmentation method based on multiple-instance learning and graph cuts optimization. In the first step, a saliency model is constructed by applying a multiple-instance learning method to training images, and the model is used to predict the bags and instances of a test image, giving a saliency detection result for that image. In the second step, the saliency detection result is introduced into a graph-cut framework, the framework is optimized according to the instance feature vectors and the labels of the instance bags, a suboptimal solution of the graph cuts optimization is obtained, and the object is segmented precisely. Because the saliency detection model is built with multiple-instance learning, it suits images of specific types; the saliency detection result guides a graph-theoretic image segmentation method, the graph-cut model framework is optimized, and an agglomerative hierarchical clustering algorithm is used for solving, so that the segmentation agrees well with semantically aware output and an accurate object segmentation result is obtained.

Owner:CHANGAN UNIV

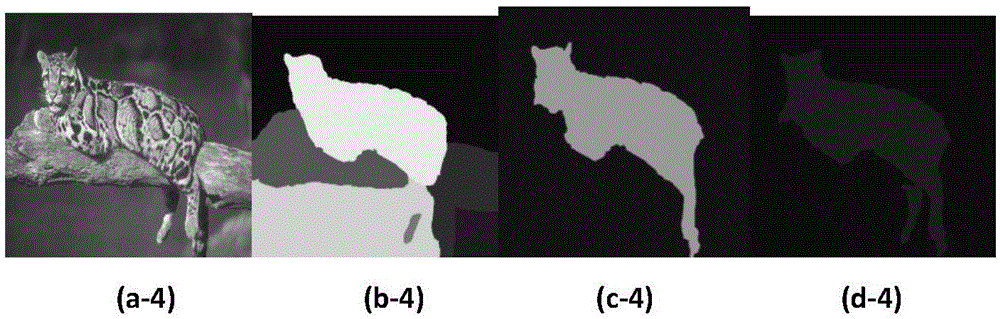

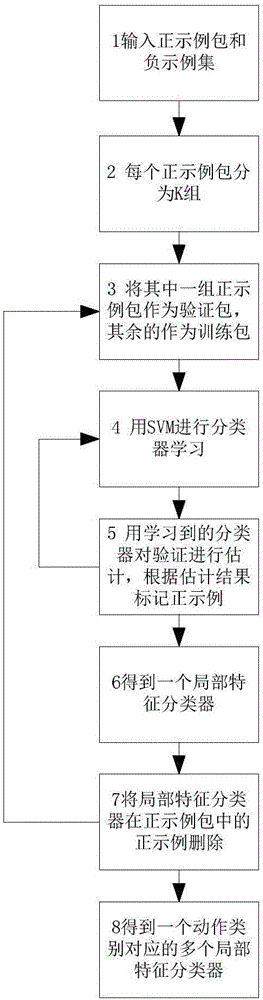

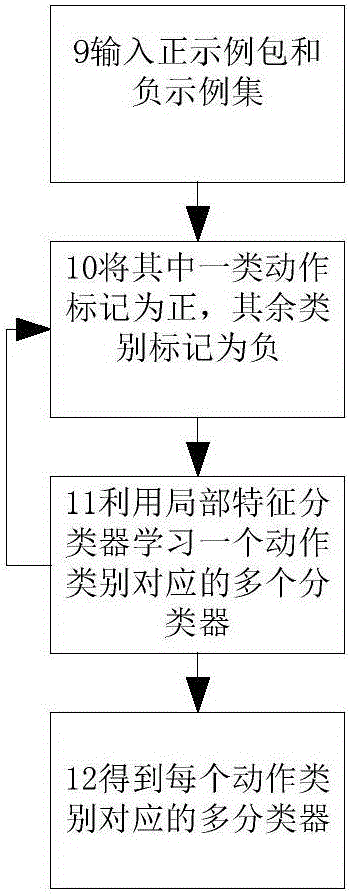

Human action classification method based on video local feature dictionary

Inactive | CN105930792A | Avoid memory | Improve accuracy | Character and pattern recognition | Feature vector | Positive sample

The invention discloses a human action classification method based on a video local feature dictionary. The method comprises: extracting local features from training videos with category labels, the set of feature vectors in each video forming a feature bag; grouping the feature bags and learning local feature classifiers with a multi-instance learning method, in which cross-validation is used and, when the positive instances are updated, the several top-ranked instances in each bag are marked as positive; using the learned classifiers as the dictionary for feature encoding and max-pooling the local feature responses to obtain a global vector representation of a video; and learning a classifier for each action category from the global feature vectors and using it to classify the action in a new video. The method helps improve estimation accuracy, avoids the classifier memorizing its initial values, and at the same time ensures the accuracy of the estimated positive samples.
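The max-pooling step can be illustrated with a short Python sketch. It assumes each local feature has already been scored by every classifier in the dictionary, so `responses` holds one row per local feature and one column per dictionary entry; that layout is an assumption made for the example, not something the abstract states.

```python
def max_pool(responses):
    """Collapse per-feature response rows into one global vector by taking,
    for each dictionary entry (column), the largest response in the video."""
    return [max(column) for column in zip(*responses)]
```

For instance, `max_pool([[0.1, 0.9], [0.5, 0.2]])` keeps the strongest response per dictionary entry and yields `[0.5, 0.9]`, so videos with different numbers of local features all map to vectors of the same length.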

Owner:WUHAN UNIV

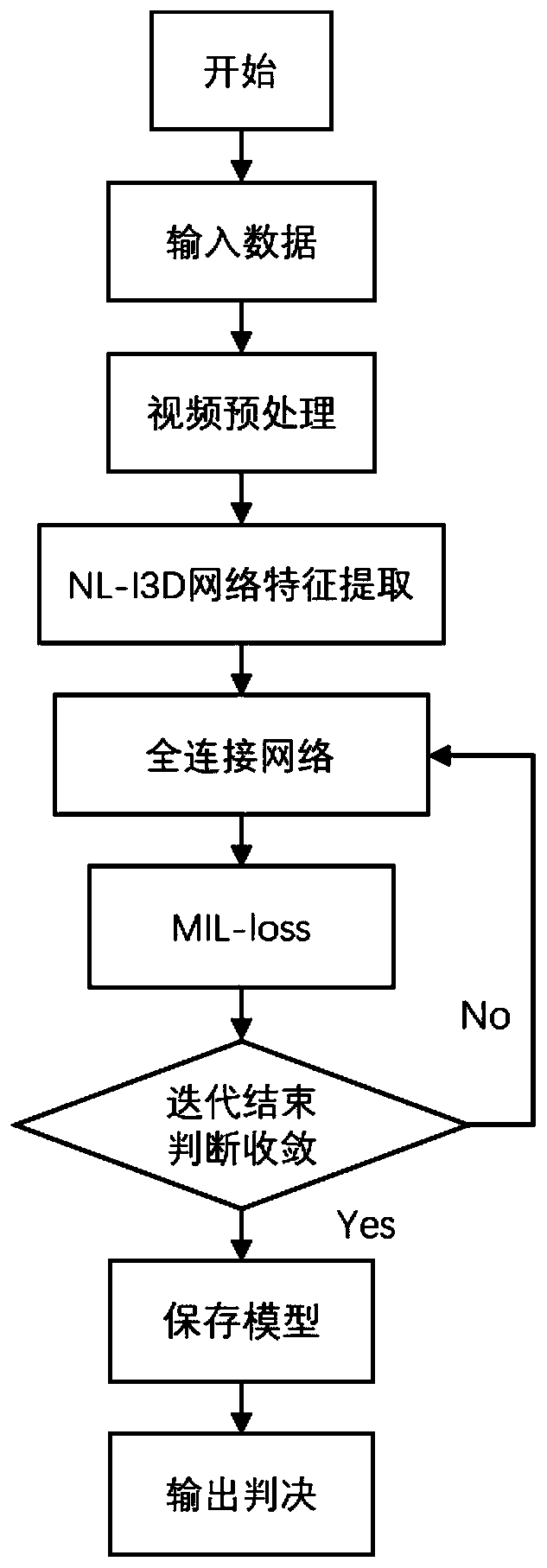

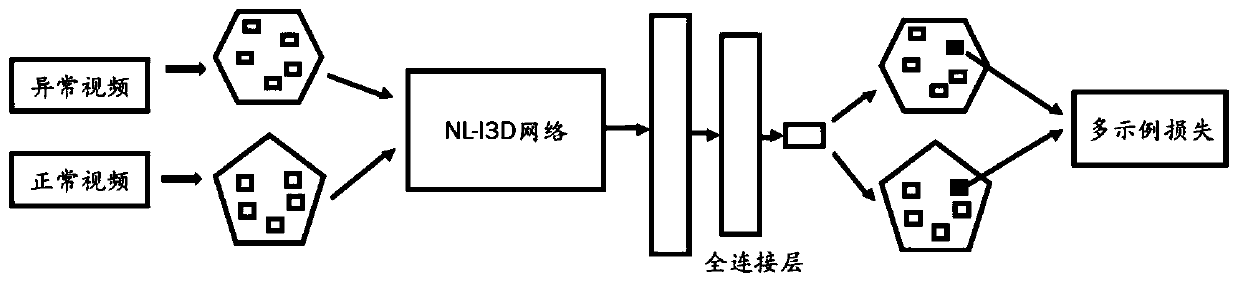

Video abnormal behavior discrimination method based on non-local network deep learning

Active | CN110084151A | Meeting recall requirements | Has engineering application value | Character and pattern recognition | Convolution filter | Anomalous behavior

The invention discloses a method for discriminating abnormal behavior in video based on non-local network deep learning, and belongs to the fields of computer vision, artificial intelligence, and multimedia signal processing. A training set is constructed following the idea of multi-instance learning, and the positive and negative bags and instances of the video data are defined and labeled. A non-local network is adopted for feature extraction from video samples: an I3D network with a residual structure serves as the convolution filter that extracts spatio-temporal information, and non-local network blocks fuse long-range dependency information, so that the temporal and spatial requirements of video feature extraction are met. Once the features are obtained, a regression task is set up through a weakly supervised learning method and the model is trained. The method can judge categories that were never labeled, and suits anomaly detection tasks in which positive samples are rare and intra-class diversity is high. It meets the recall requirement for abnormal scenes and has engineering application value.

Owner:SOUTHEAST UNIV

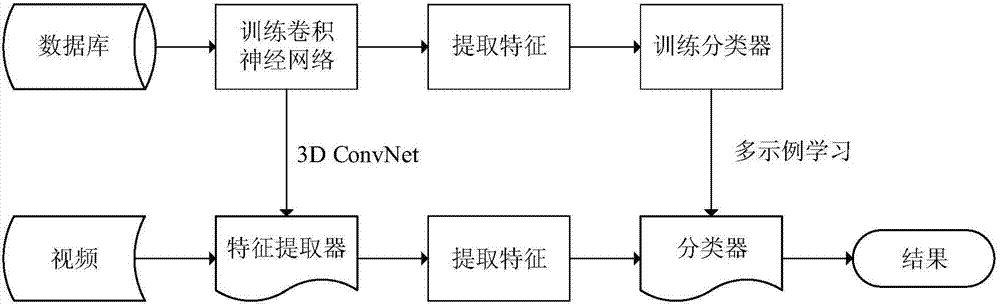

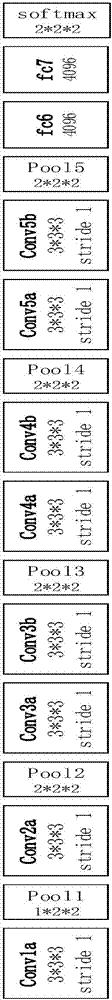

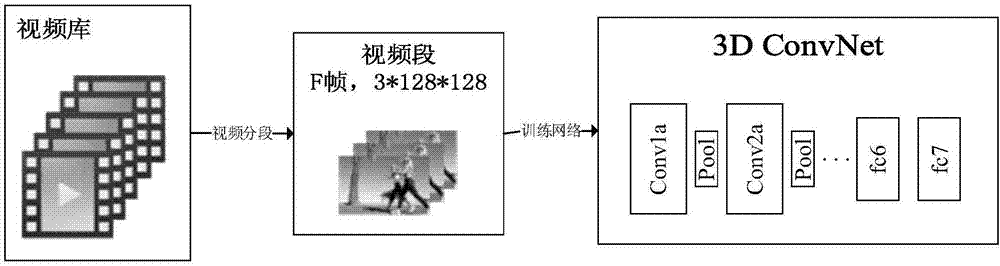

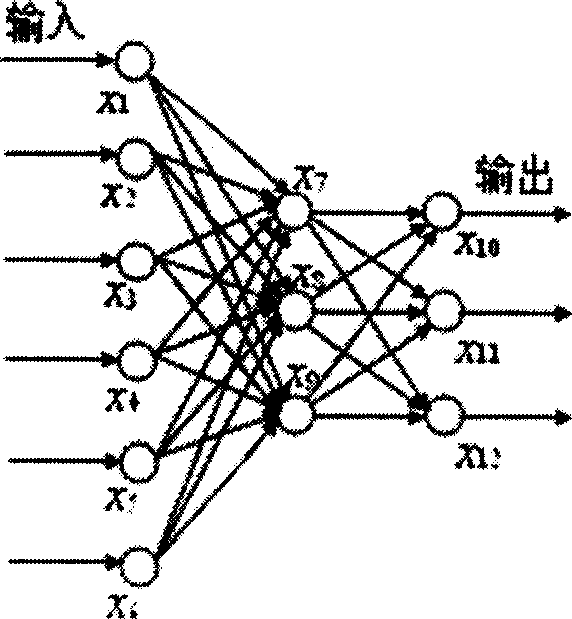

Neural network-based movement recognition method

Inactive | CN106980826A | Reduce the impact of classification results | Avoid negative effects | Character and pattern recognition | Neural learning methods | Human body | Feature extraction

The invention discloses a neural network-based human body movement recognition method. The method includes the following steps: training N mutually independent 3D convolutional neural networks on a video database to serve as video feature extractors; training a multi-instance learning classifier on the extracted features; and, for a video to be recognized, extracting video features with the trained networks and classifying the movement with the classifier. The scheme avoids the influence of a large number of noisy features on the classification result, as well as the negative effect of a fixed sample length on the recognition result.

Owner:TIANJIN UNIV

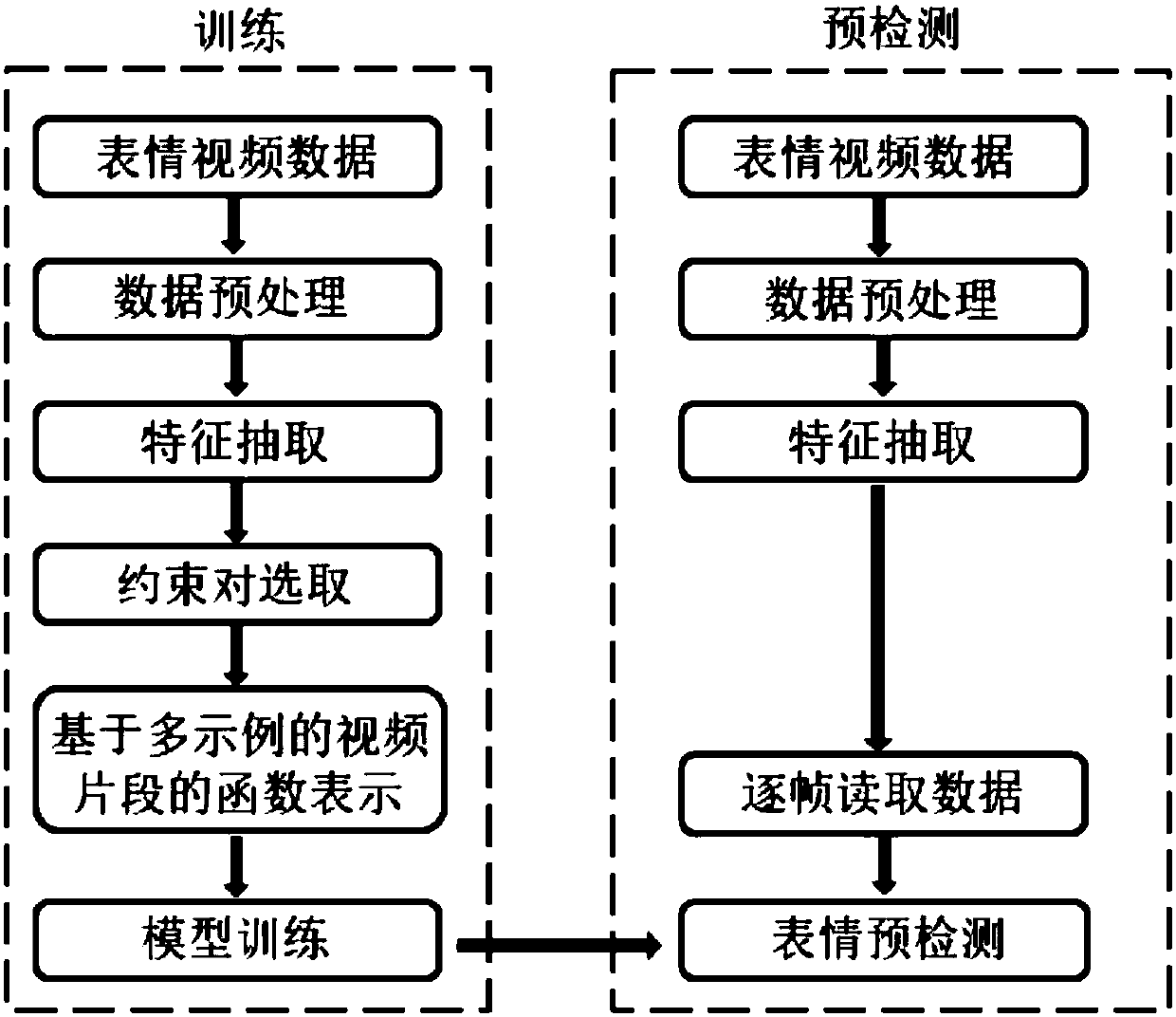

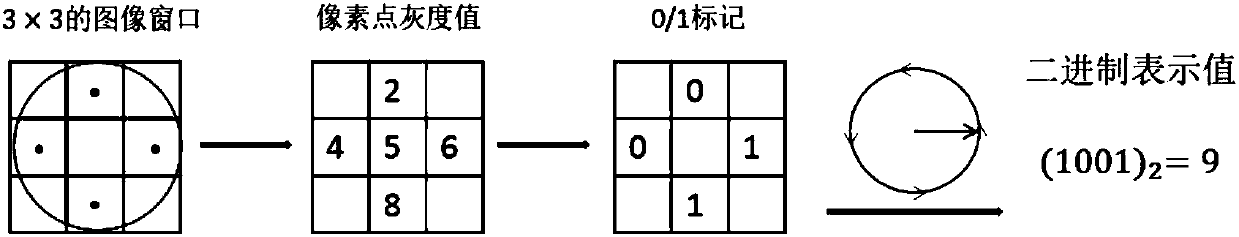

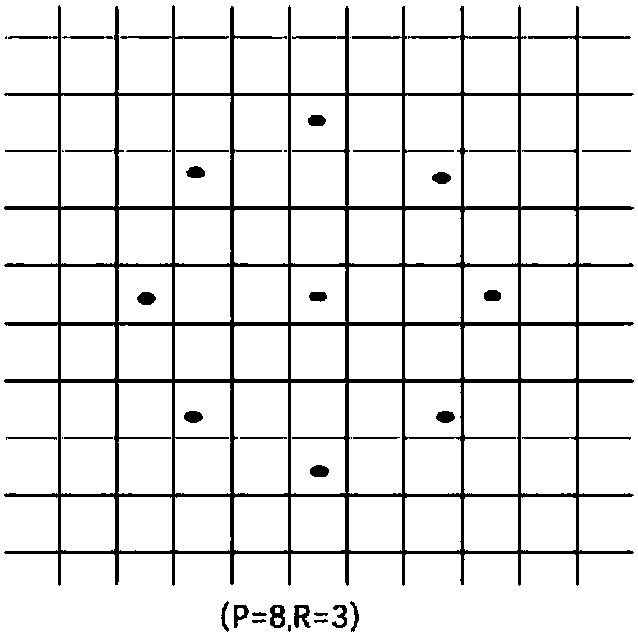

Video facial expression early detection method based on multi-instance learning

Active | CN108038434A | Fully excavated | Improve accuracy | Character and pattern recognition | Neural architectures | Support vector machine | Feature vector

The invention discloses an early detection method for facial expressions in video based on multi-instance learning. The method comprises the following steps: (1) the video data of the training samples and the samples to be tested are preprocessed, and the facial region in each frame of a video is extracted; (2) an LBP descriptor is used to extract features from the preprocessed facial region of each frame, giving a feature vector per frame; (3) an early expression detection function is solved from the feature vectors of the training samples with an extended structured-output support vector machine based on multi-instance learning; and (4) the detection function obtained in step (3) is applied to the feature vectors of the samples to be tested from step (2), giving the early expression detection result. With this method, expressions can be monitored in real time, and the recognition rate is high.

Owner:SOUTHEAST UNIV

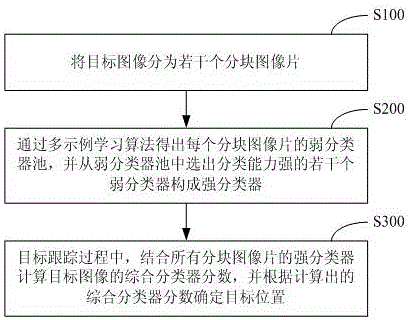

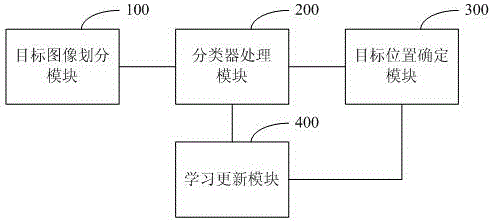

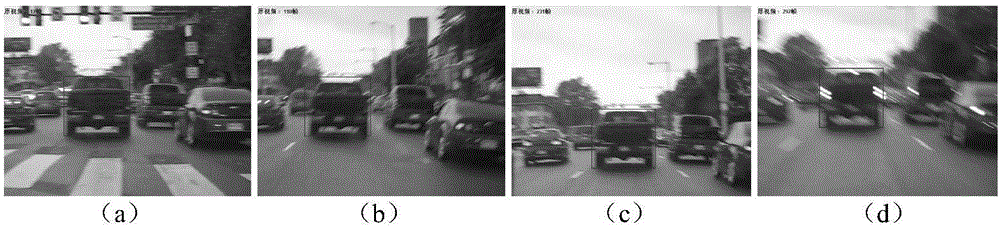

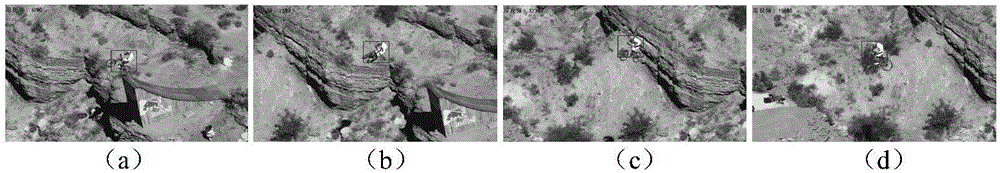

Target tracking method and system based on partitioned multi-example learning algorithm

Active | CN105976401A | Improve object tracking performance | Target Tracking Continues | Image enhancement | Image analysis | Pattern recognition | Tracking system

The invention discloses a target tracking method based on a partitioned multi-instance learning algorithm. The target image is divided into a plurality of image patches; a pool of weak classifiers is obtained for each patch through the multi-instance learning algorithm, and several weak classifiers with high classification capability are selected from the pool to form a strong classifier; during tracking, the score of an integrated classifier for the target image is calculated by combining the strong classifiers of all patches, and the target position is determined from that score. The method tracks accurately and stably, and copes with severe changes in lighting and pose as well as with occlusion. The invention further discloses a target tracking system based on the partitioned multi-instance learning algorithm.

Owner:HEBEI COLLEGE OF IND & TECH

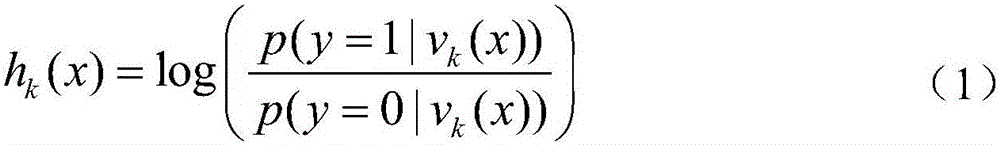

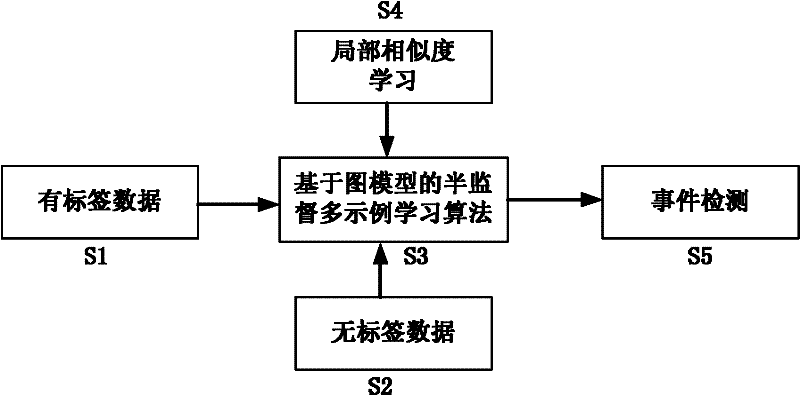

Video event detection method based on multimedia analysis

Inactive | CN102298604A | Achieve retrieval | Achieving Semantic Understanding | Special data processing applications | Mathematical model | Event model

The invention is a video event detection method based on multimedia analysis. It includes: analyzing the video with text analysis to obtain a small amount of automatically labeled video data; using multiple keywords to obtain a large amount of event-related video data through a web video search engine; training a graph-based semi-supervised multi-instance learning algorithm on the small amount of automatically labeled data and the large amount of event-related data, constructing the mathematical description model of the event from a similarity criterion on event instances and a positive/negative attribute criterion on event bags, and solving it over the large amount of event-related video data with a constrained concave-convex procedure; and learning an effective similarity with a local similarity measure learning method that exploits the spatial distribution of the samples. The event model is used for event identification and localization: based on the event model, the video content is analyzed semantically to obtain the location of the event in the video.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Non-supervision joint visual concept learning system and method based on images and characters

Active | CN106227836A | No diversity | Solve the complicated problem of manual calibration | Character and pattern recognition | Special data processing applications | Social media | Study methods

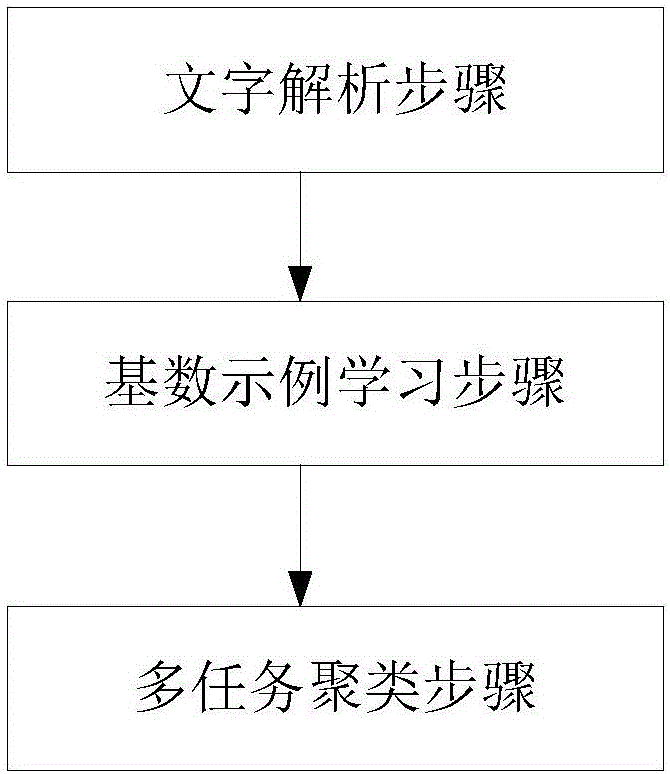

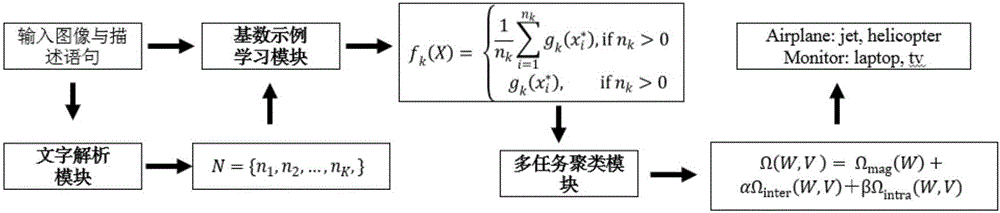

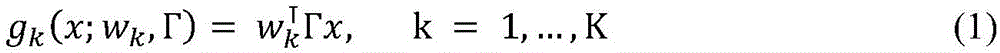

The invention discloses an unsupervised joint visual concept learning system and method based on images and text. The system comprises a text parsing module, a cardinality-guided instance learning module, and a multi-task clustering module. The text parsing module takes the sentence descriptions that accompany images on social media and extracts the nouns to serve as visual concepts and the cardinal numbers to serve as additional constraint information for the next module; the cardinality-guided instance learning module trains classifiers for the visual concepts with a multi-instance learning method guided by the cardinal numbers; and the multi-task clustering module handles cross-concept diversity, that is, it clusters nouns that denote similar objects into larger classes that serve as the visual concepts. Because learning is unsupervised and automatic, the complexity of manual annotation on large-scale data is effectively avoided.

Owner:SHANGHAI JIAO TONG UNIV

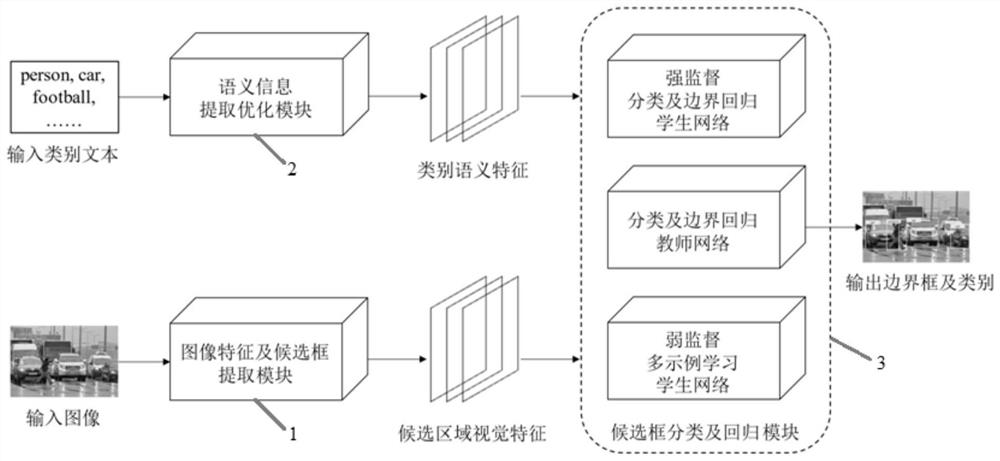

Weak supervision target detection method and system based on transfer learning

Active · CN113239924A · Easy to detect · Promote aggregation · Character and pattern recognition · Neural learning methods · Data set · Feature extraction

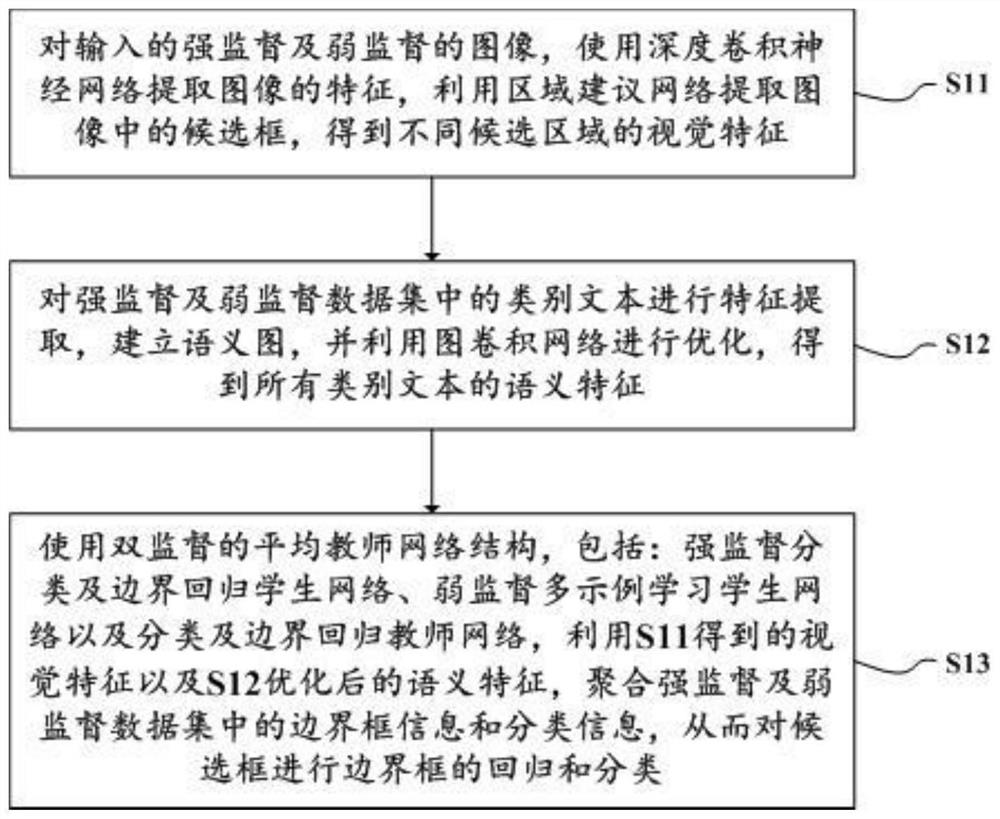

The invention discloses a weakly supervised target detection method and system based on transfer learning. The method comprises the following steps: extracting features of the input strongly supervised and weakly supervised images with a deep convolutional neural network, extracting candidate boxes with a region proposal network, and obtaining the visual features of the candidate regions; extracting features from the category texts of the strongly supervised and weakly supervised data sets, building a semantic graph, and optimizing it with a graph convolutional network to obtain semantic features for all category texts; employing a dual-supervised mean teacher network structure that comprises a strongly supervised classification and bounding-box regression student network, a weakly supervised multi-instance learning student network, and a classification and bounding-box regression teacher network; and aggregating the bounding-box and classification information of the two data sets through the visual features and the optimized semantic features, so as to perform bounding-box regression and classification on the candidate boxes. The invention improves weakly supervised target detection.

Owner:SHANGHAI JIAO TONG UNIV
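
The abstract above does not say how the teacher network is derived from the two student networks. In the commonly used mean teacher scheme, the teacher's weights are an exponential moving average (EMA) of a student's weights. The following is a minimal sketch of that update under this assumption; the function name `ema_update`, the `decay` value and the flat dictionary of scalar weights are illustrative and do not come from the patent.

```python
from typing import Dict


def ema_update(
    teacher: Dict[str, float],
    student: Dict[str, float],
    decay: float = 0.99,
) -> Dict[str, float]:
    """Return new teacher weights: decay * teacher + (1 - decay) * student.

    Assumption: the teacher tracks a single student by EMA, as in the
    standard mean teacher scheme. The patent's own rule is not given.
    """
    if not 0.0 <= decay <= 1.0:
        raise ValueError("decay must lie in [0, 1]")
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }


# Toy weights standing in for network parameters (hypothetical values).
teacher_weights = {"w": 1.0, "b": 0.0}
student_weights = {"w": 0.0, "b": 2.0}
teacher_weights = ema_update(teacher_weights, student_weights, decay=0.9)
```

A high `decay` makes the teacher change slowly, which is what lets it act as a stable target for the students between training steps.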

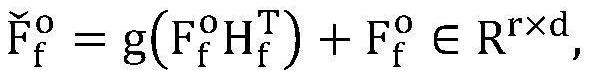

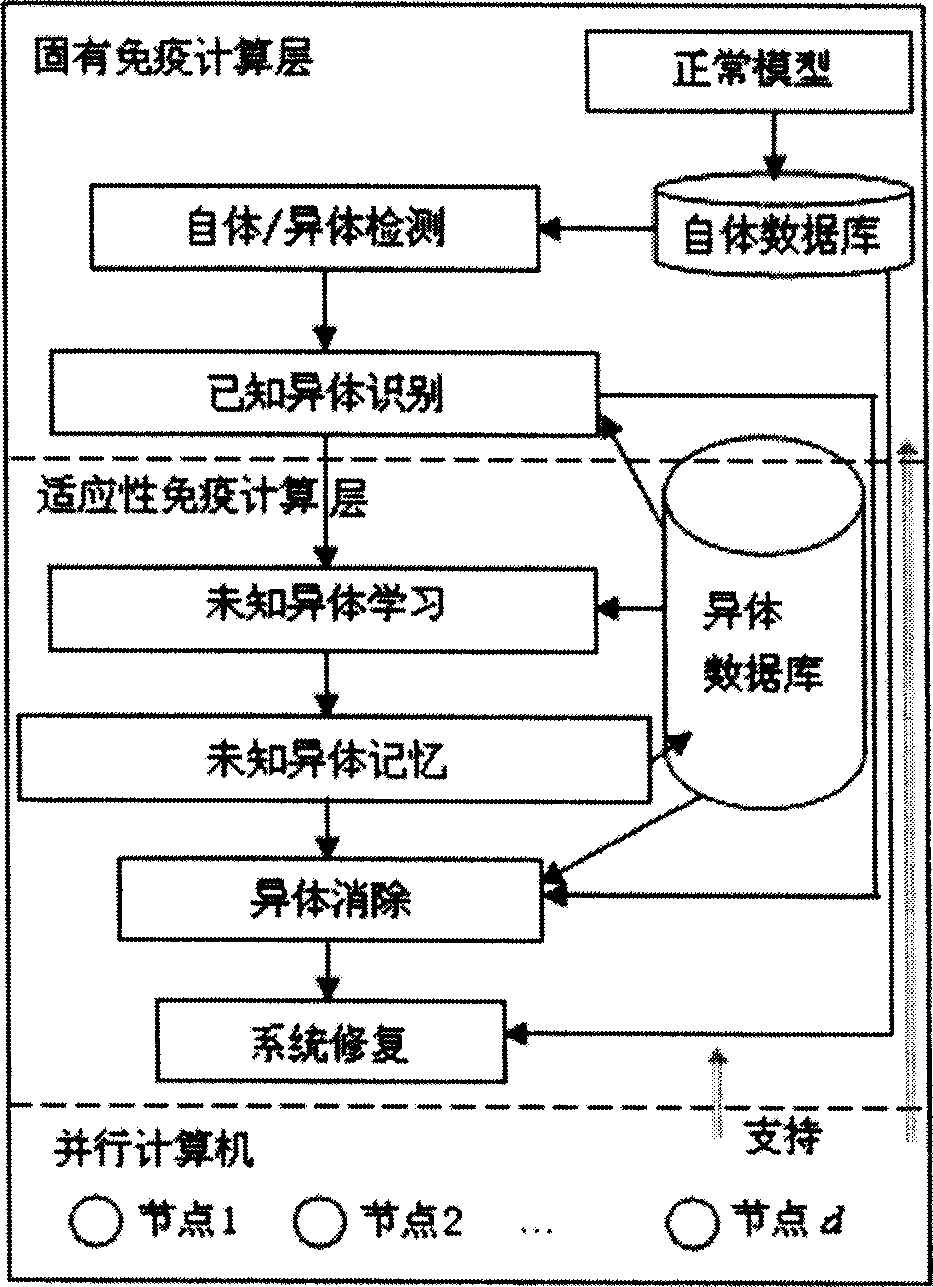

Artificial immune system based on normal model

Inactive · CN1866267A · Error detection/correction · Platform integrity maintenance · Computer resources · Pattern recognition

The disclosed artificial immune system based on a normal model comprises: an innate immune computing layer that builds a self database from a normal model determined solely by the space-time attributes of all normal components; an adaptive immune computing layer that recognizes and stores unknown variants through a neural network or a learning-by-example mechanism and then eliminates them; and a parallel immune computing layer that balances the load across the computers.

Owner:龚涛

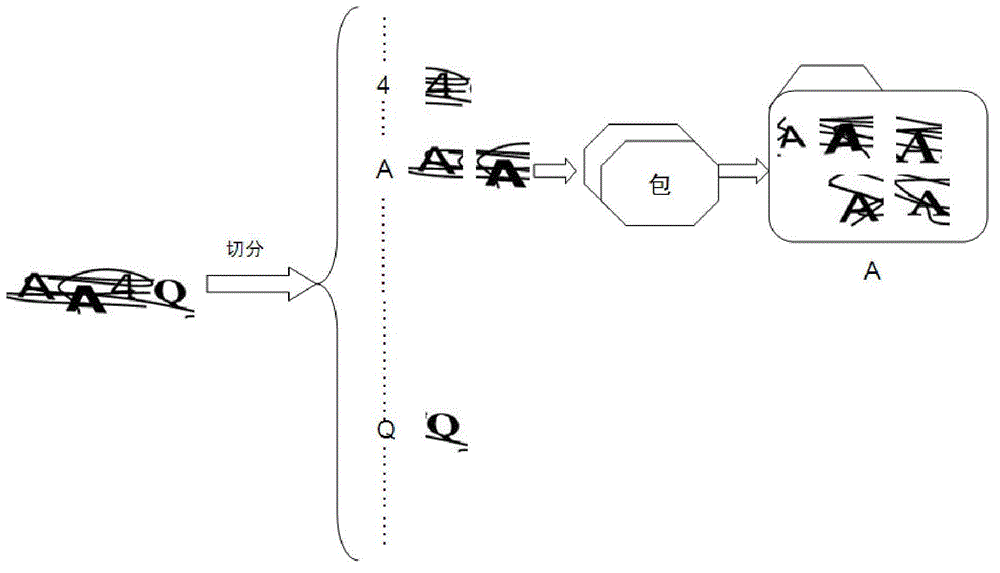

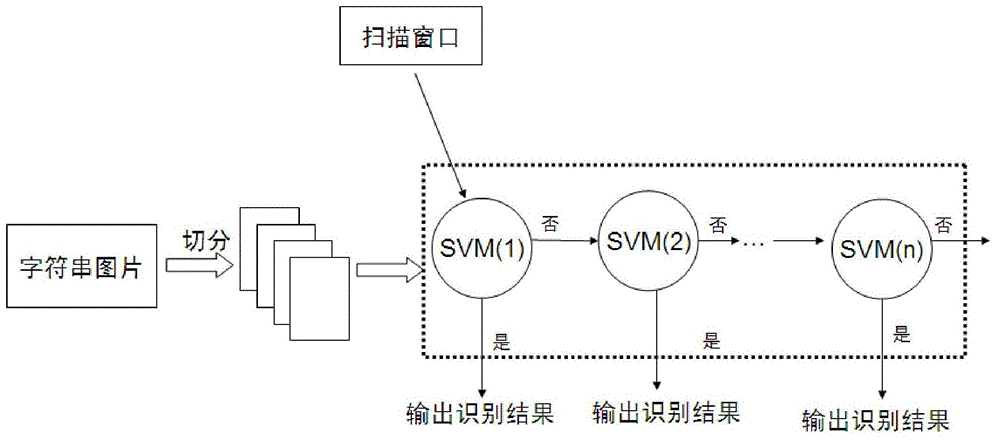

Method for splitting and identifying character strings at complex interference

Inactive · CN102722736A · Realize automatic acquisition · Improve work efficiency · Character and pattern recognition · Support vector machine · Algorithm

The invention discloses a method for segmenting and recognizing character strings under complex interference, comprising a learning phase and a recognition phase. In the learning phase, an image containing m characters is split into m pictures that form a multi-instance learning bag (packet); identical characters are treated as one category, and each bag is labeled and stored in a library. The integral image of each bag is then computed, Haar-like features are extracted as the instances of the bag, the key instances of each category are found with the diversity density algorithm, and finally the key instances are learned with an SVM (support vector machine) classifier. In the recognition phase, the learned result is used to predict the category of a new bag and thereby recognize the character string. The method recognizes character strings under complex interference automatically, with higher recognition speed and efficiency.

Owner:HEFEI UNIV OF TECH
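
The key-instance step in the abstract above relies on diversity density. As a rough illustration, the sketch below uses the standard noisy-or formulation: a candidate point scores highly when every positive bag has an instance near it and no negative bag does. The Gaussian-like closeness `exp(-squared distance)`, the restriction of candidates to instances of positive bags, and all names are assumptions for illustration; the patent's feature extraction and its SVM stage are not reproduced.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]
Bag = List[Point]


def closeness(instance: Sequence[float], target: Sequence[float]) -> float:
    """Similarity in (0, 1]: equals 1.0 when instance and target coincide."""
    squared = sum((a - b) ** 2 for a, b in zip(instance, target))
    return math.exp(-squared)


def bag_hit_probability(bag: Bag, target: Sequence[float]) -> float:
    """Noisy-or: probability that at least one instance matches the target."""
    miss = 1.0
    for instance in bag:
        miss *= 1.0 - closeness(instance, target)
    return 1.0 - miss


def diversity_density(
    target: Sequence[float], positive_bags: List[Bag], negative_bags: List[Bag]
) -> float:
    """Product of hit probabilities on positive bags and miss probabilities on negative bags."""
    density = 1.0
    for bag in positive_bags:
        density *= bag_hit_probability(bag, target)
    for bag in negative_bags:
        density *= 1.0 - bag_hit_probability(bag, target)
    return density


def best_key_instance(positive_bags: List[Bag], negative_bags: List[Bag]) -> Point:
    """Pick the positive-bag instance with the highest diversity density."""
    candidates = [instance for bag in positive_bags for instance in bag]
    return max(
        candidates,
        key=lambda t: diversity_density(t, positive_bags, negative_bags),
    )


# Hypothetical 2-D features: every positive bag has an instance near (0, 0).
positive = [
    [(0.0, 0.0), (5.0, 5.0)],
    [(0.1, 0.0), (9.0, 9.0)],
    [(0.0, 0.1), (5.0, 9.0)],
]
negative = [[(5.0, 5.0), (9.0, 9.0)]]
key = best_key_instance(positive, negative)
```

In this toy data the instance at `(5.0, 5.0)` also appears in a negative bag, so its density collapses to zero even though it sits in a positive bag.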

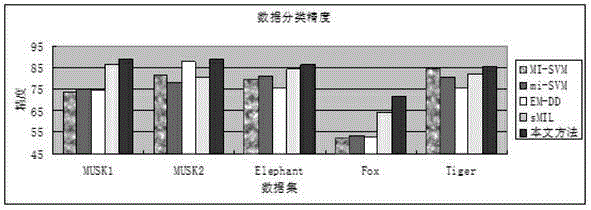

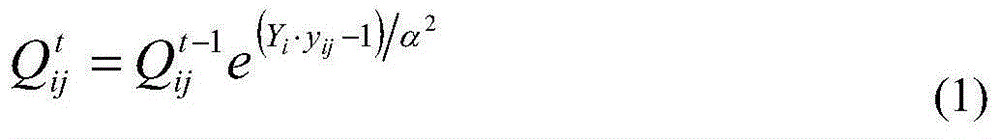

Multi-instance weighted packet learning method for online uncertain image recognition

Active · CN105069473A · Reduce distractions · Improve classification accuracy · Character and pattern recognition · Study methods · Learning methods

The invention provides a multi-instance weighted packet learning method for online uncertain image recognition. It improves the multi-instance learning algorithm to reduce the influence of noisy data on classification results in online image recognition, and puts forward the idea of assigning different weights to the instances in a packet (bag), continuously updating the classifier, and adjusting the weights to increase classification accuracy. In traditional multi-instance learning for image recognition, the training set is composed of a plurality of packets, each packet comprises a plurality of instances, and the labels of the individual instances are unknown. The proposed method differs in that it weights the instances to reduce the interference of noisy instances on classification, iteratively trains the classifier and the instance weights, and uses the weighted instances to represent the packets, thereby achieving higher classification accuracy.

Owner:SHENZHEN TISMART TECH CO LTD
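
One simple way to read "weighting the instances to represent the packet" is a weighted average of instance feature vectors, so that an instance with a small weight contributes little to the packet representation. The sketch below shows only that aggregation step. The function name, the feature values and the weights are hypothetical, and the patent's rule for updating the weights during training is not given in the abstract and is not modeled here.

```python
from typing import List, Sequence


def weighted_bag_vector(
    instances: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """Represent a packet as the weight-normalized mean of its instance vectors."""
    if not instances or len(instances) != len(weights):
        raise ValueError("need one weight per instance and at least one instance")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(instances[0])
    return [
        sum(w * inst[d] for inst, w in zip(instances, weights)) / total
        for d in range(dim)
    ]


# Hypothetical packet: the third instance is treated as noise (weight 0).
packet = [[1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]
weights = [1.0, 1.0, 0.0]
representation = weighted_bag_vector(packet, weights)  # [0.5, 0.5]
```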

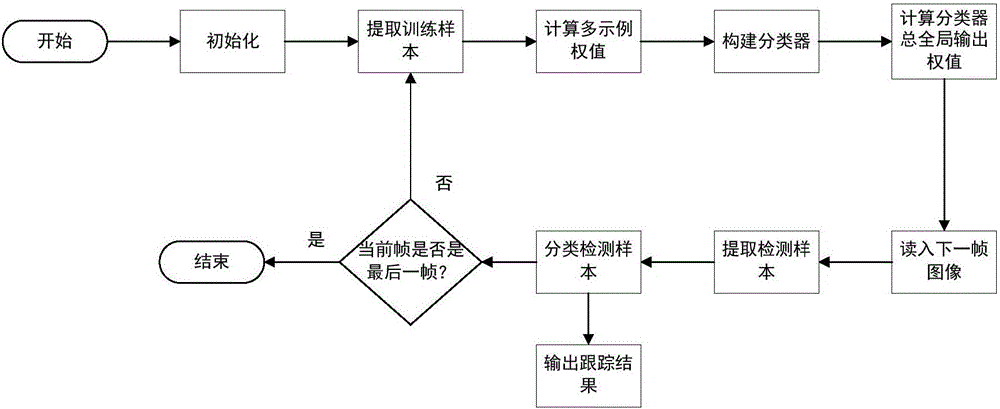

Weighted extreme learning machine video target tracking method based on weighted multi-example learning

The invention discloses a weighted extreme learning machine video target tracking method based on weighted multi-instance learning, which addresses the poor tracking accuracy of the prior art. The method includes: (1) initializing a Haar-like feature model pool, constructing a variety of feature model blocks, and setting the parameters of the weighted extreme learning machine network; (2) extracting the training samples in the current frame and the feature blocks that correspond to the different feature model blocks; (3) calculating the weighted multi-instance learning weights; (4) constructing a plurality of networks corresponding to the different feature blocks, and selecting the network with the largest bag similarity function value together with its feature model block; (5) calculating the global output weights of the network; (6) extracting the detection samples in the next frame and the feature blocks that correspond to the selected feature model block; (7) classifying the detection samples with the selected network to obtain the target position in the next frame; and (8) repeating the above steps until the video ends. The invention improves tracking accuracy and achieves robust target tracking.

Owner:XIDIAN UNIV

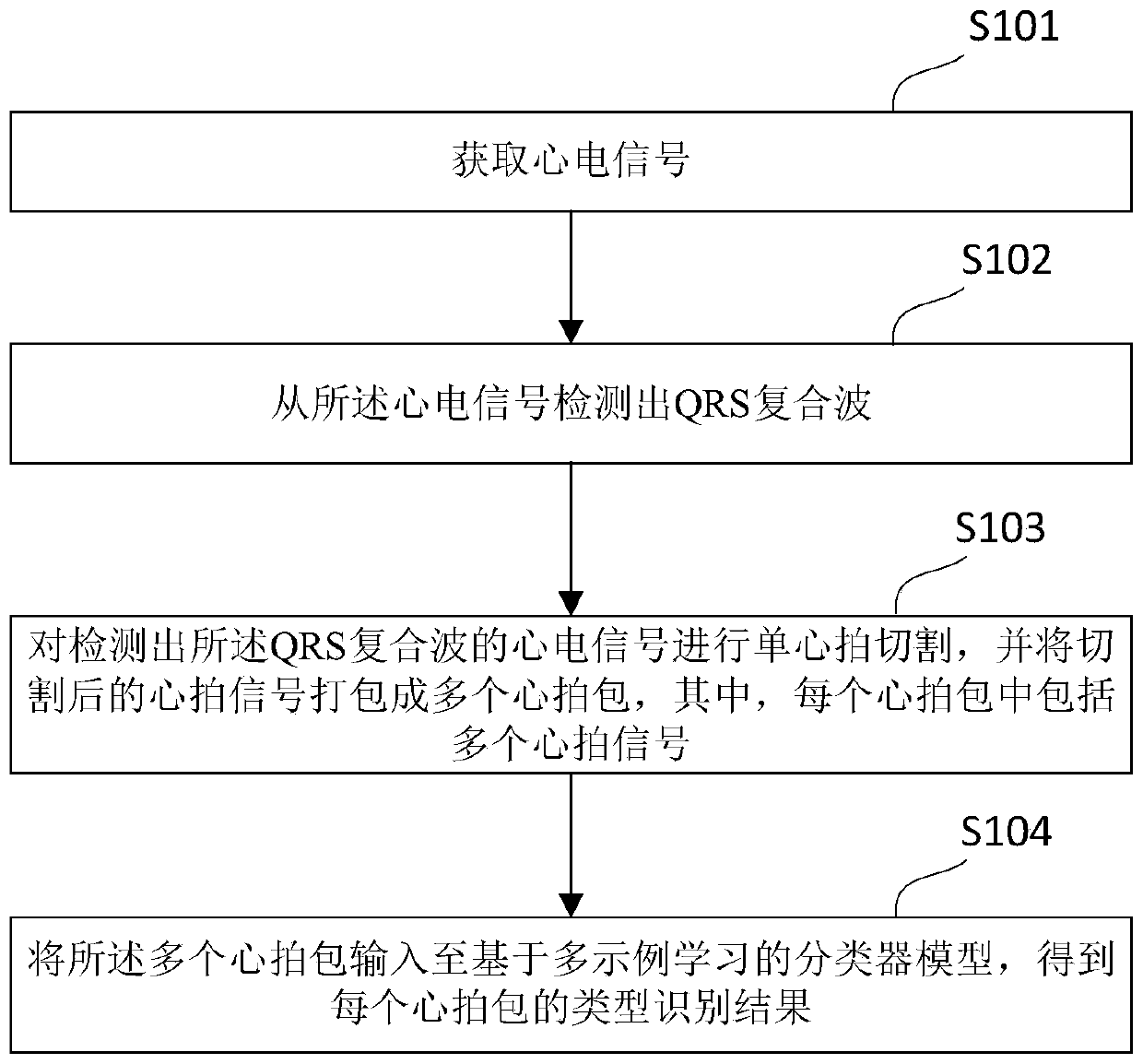

Electrocardiosignal classification method and device, electronic equipment and storage medium

Pending · CN111488793A · Avoid time cost · Reduce labor costs · Character and pattern recognition · Neural architectures · ECG signal · Classification methods

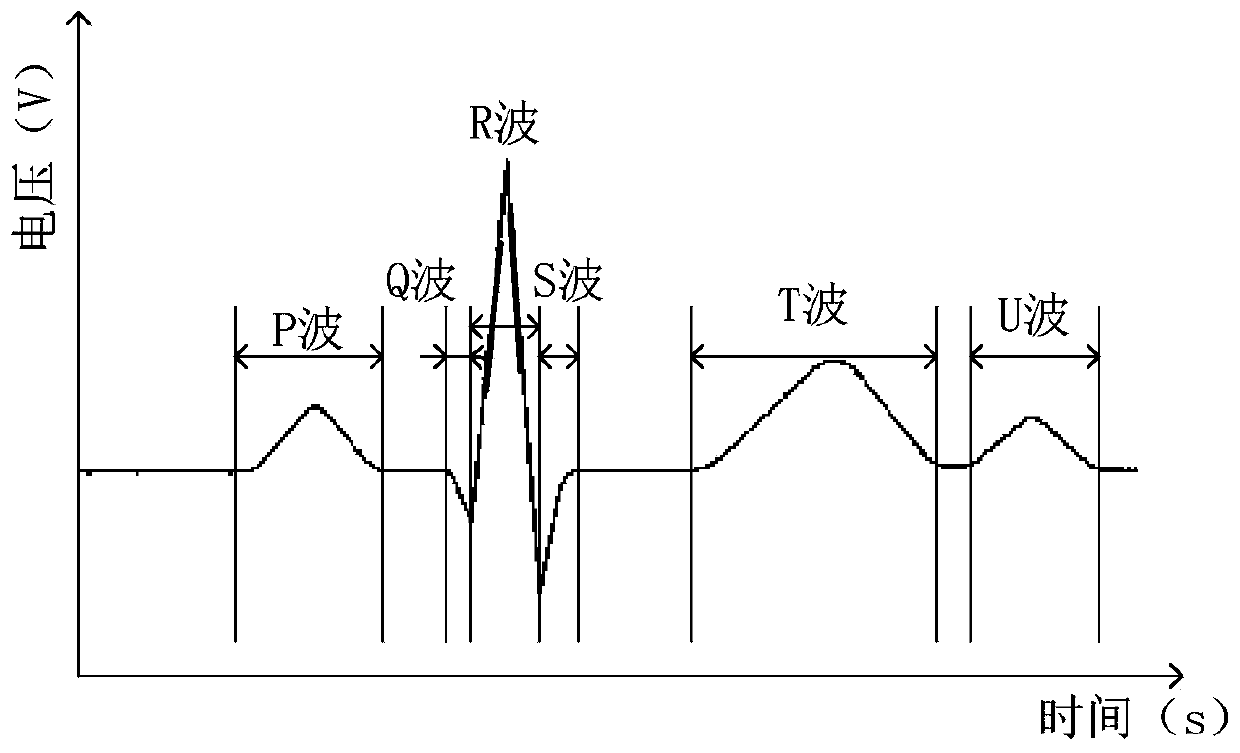

The embodiment of the invention relates to an electrocardiosignal (ECG signal) classification method and device, electronic equipment and a storage medium. The classification method comprises the following steps: acquiring an electrocardiosignal; detecting the QRS complexes in the signal; cutting the signal into single heart beats around the detected QRS complexes and packaging the beats into a plurality of heart beat packets; and inputting the packets into a classifier model based on multi-instance learning to obtain a type identification result for each packet. During classification, each heart beat packet is treated as a bag and each heart beat signal in the packet as an instance of that bag; the type identification result is either a normal rhythm type or an abnormal rhythm type. The method effectively reduces the time and labor cost that manual beat-by-beat labeling would otherwise require for machine learning classification.

Owner:GUANGZHOU SHIYUAN ELECTRONICS CO LTD
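
The cut-and-package steps described above can be sketched as follows. The sketch assumes the R-peak sample indices are already available from some QRS detector, uses a fixed window around each peak, and labels a packet with the standard multi-instance assumption (a packet is abnormal if any beat scores as abnormal). The function names, the window size, the packet size and the threshold are all illustrative assumptions; the abstract does not specify the patent's classifier or its aggregation rule.

```python
from typing import List, Sequence


def cut_beats(
    signal: Sequence[float], r_peaks: Sequence[int], half_width: int
) -> List[List[float]]:
    """Cut one fixed-length window per R peak, skipping peaks too close to the edges."""
    beats = []
    for peak in r_peaks:
        start, end = peak - half_width, peak + half_width + 1
        if start >= 0 and end <= len(signal):
            beats.append(list(signal[start:end]))
    return beats


def pack_beats(beats: List[List[float]], bag_size: int) -> List[List[List[float]]]:
    """Group consecutive beats into packets (bags) of at most bag_size beats."""
    if bag_size <= 0:
        raise ValueError("bag_size must be positive")
    return [beats[i:i + bag_size] for i in range(0, len(beats), bag_size)]


def classify_bag(instance_scores: Sequence[float], threshold: float = 0.5) -> str:
    """Standard MIL assumption: one abnormal beat makes the whole packet abnormal."""
    if not instance_scores:
        raise ValueError("a packet needs at least one beat score")
    return "abnormal" if max(instance_scores) >= threshold else "normal"


# Toy signal and hypothetical R-peak positions (the first is too close to the edge).
signal = [float(i) for i in range(20)]
beats = cut_beats(signal, r_peaks=[1, 5, 10, 15], half_width=2)
packets = pack_beats(beats, bag_size=2)
label = classify_bag([0.1, 0.7])  # per-beat abnormality scores from some model
```

Because only the packet carries a label, an annotator needs to mark a recording segment as normal or abnormal rather than label every beat, which is where the claimed saving in labeling effort comes from.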

Three-branch convolutional network fabric defect detection method based on weak supervised learning

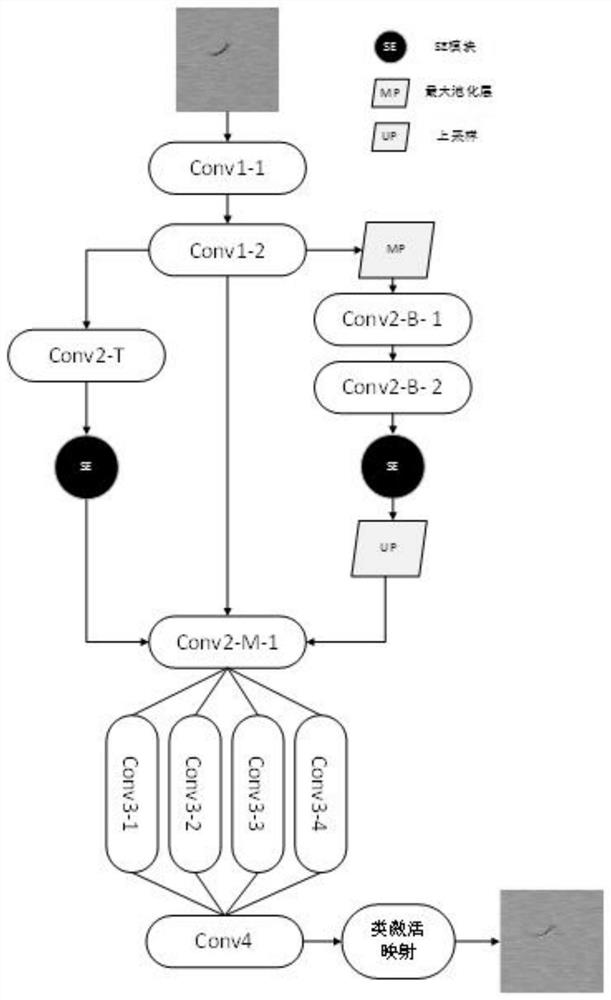

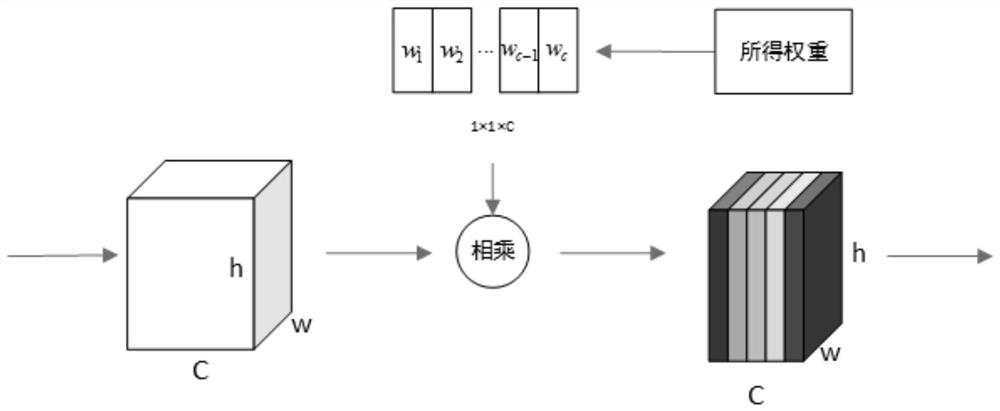

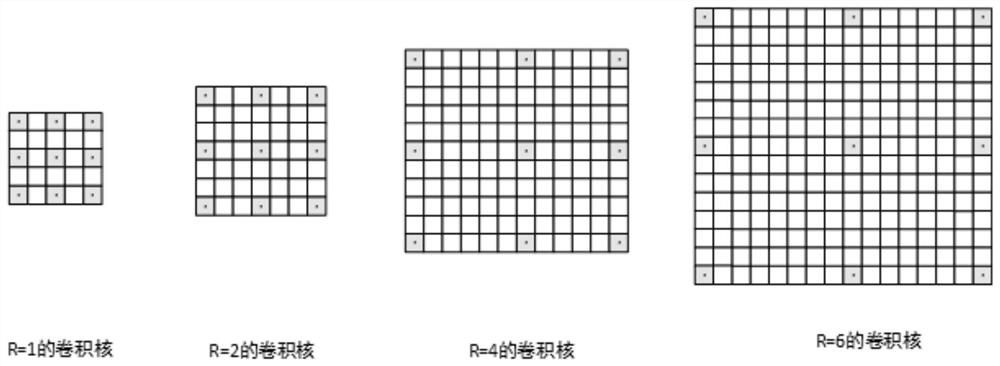

Active · CN111882546A · Avoid interference · Precise positioning · Image enhancement · Image analysis · Class activation mapping · Engineering

The invention provides a three-branch convolutional network fabric defect detection method based on weakly supervised learning. First, a multi-instance learning detection network based on a mutual exclusion principle is built within the weakly supervised network, so that training can be carried out with image-level labels. Next, a three-branch network framework is established, and a long-connection structure is adopted to extract and fuse multi-level convolutional feature maps; a squeeze-and-excitation (SE) module and dilated convolution are used to learn the correlation between channels and to enlarge the convolutional receptive field. Finally, the localization information of the target is calculated with a class activation mapping method to obtain the attention map of the defect image. The method takes into account both the rich texture features of fabric pictures and the lack of defect labels; by adopting a weakly supervised network mechanism and the mutual exclusion principle, it improves the representation capability for fabric pictures while reducing the dependence on labels, so that the detection results have higher precision and adaptivity.

Owner:ZHONGYUAN ENGINEERING COLLEGE
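
For the localization step, the basic class activation mapping computation is a weighted sum of the final convolutional feature maps, using the classifier weights of the class of interest: M_c(x, y) = Σ_k w_k^c · f_k(x, y). The sketch below implements that basic form on plain nested lists. It is an assumption that the patent uses this basic variant; the function names and the toy feature maps are illustrative.

```python
from typing import List, Sequence

Map2D = List[List[float]]


def class_activation_map(
    feature_maps: Sequence[Map2D], class_weights: Sequence[float]
) -> Map2D:
    """Weighted sum over K feature maps (each H x W) with one weight per map."""
    if not feature_maps or len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [
            sum(w * fmap[y][x] for fmap, w in zip(feature_maps, class_weights))
            for x in range(width)
        ]
        for y in range(height)
    ]


def normalize(cam: Map2D) -> Map2D:
    """Min-max scale to [0, 1]; a constant map is returned as all zeros."""
    flat = [v for row in cam for v in row]
    low, high = min(flat), max(flat)
    if high == low:
        return [[0.0 for _ in row] for row in cam]
    return [[(v - low) / (high - low) for v in row] for row in cam]


# Two hypothetical 2x2 feature maps and the weights of the "defect" class.
maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
cam = normalize(class_activation_map(maps, [1.0, 0.5]))
```

High values in the resulting map mark the regions that contributed most to the class score, which is what allows defect localization from image-level labels alone.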

Few-sample pedestrian re-identification method based on deep multi-example learning

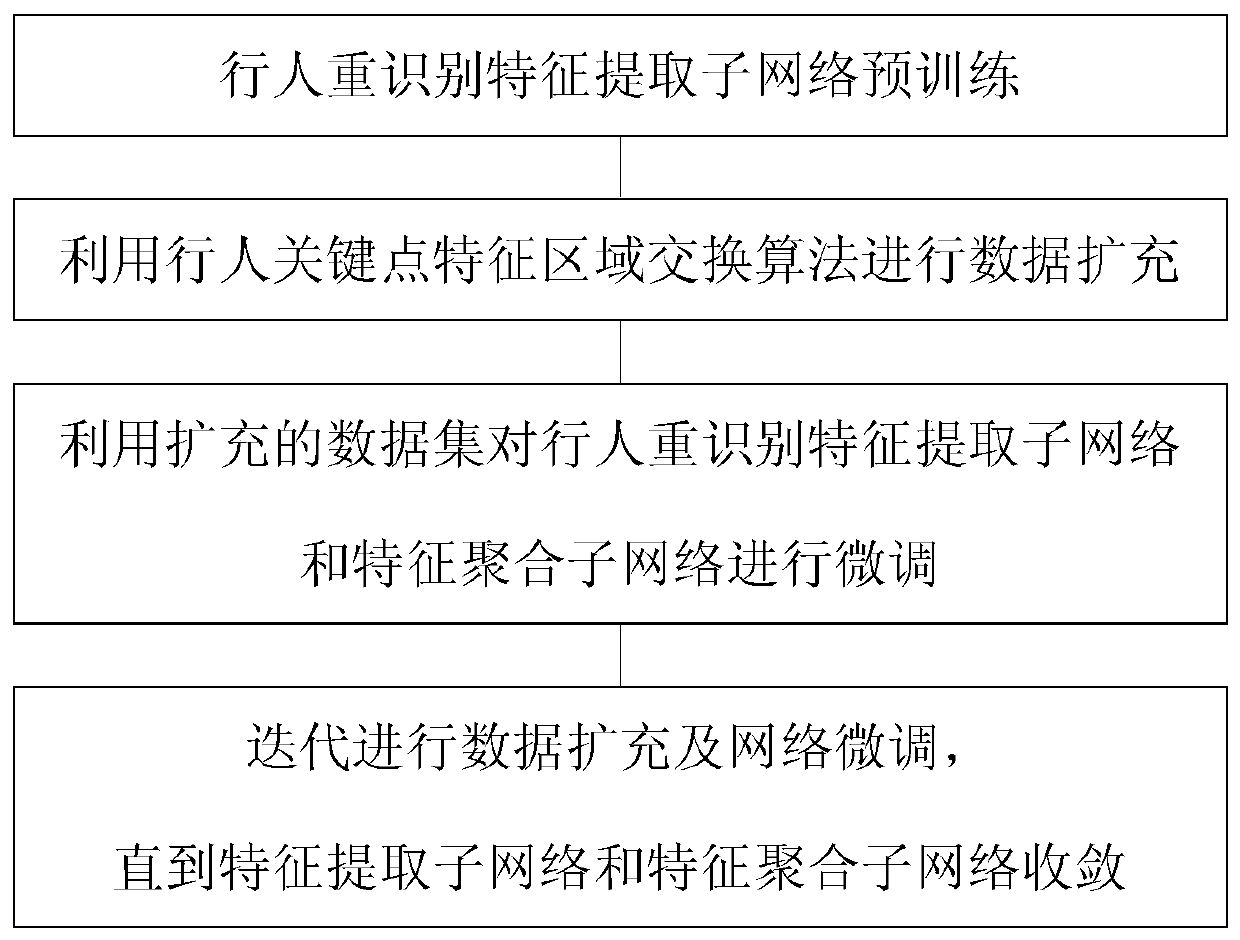

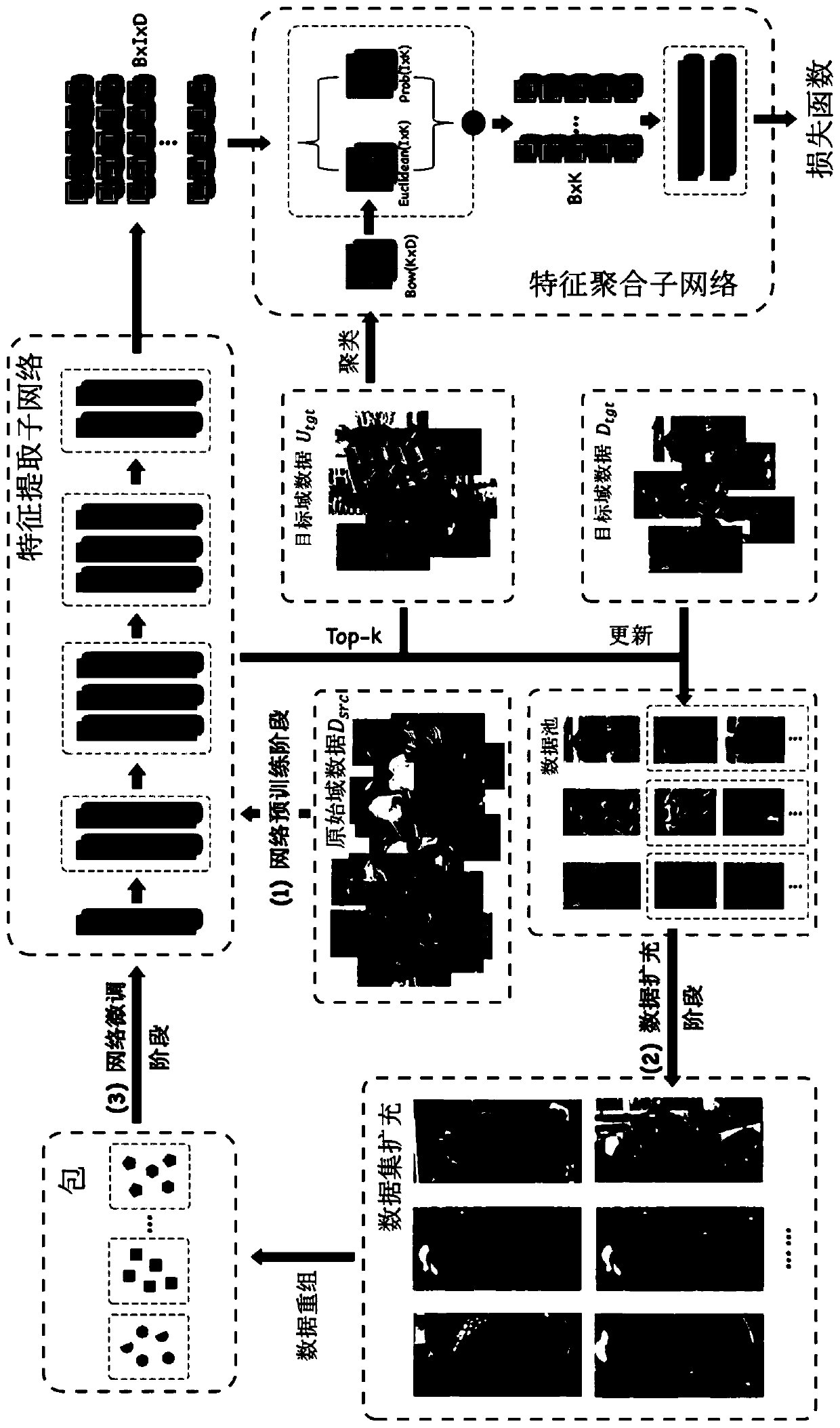

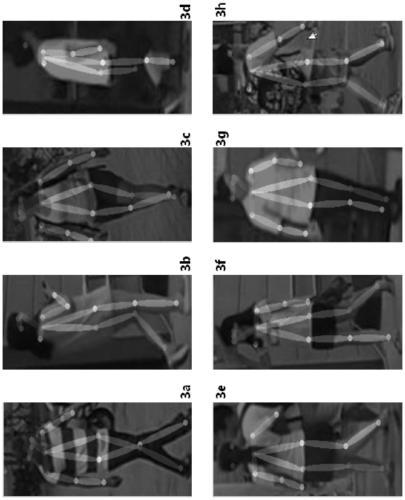

Active · CN111488760A · Strong representation ability · Improve recognition accuracy · Internal combustion piston engines · Character and pattern recognition · Data set · Data expansion

The invention relates to a few-sample pedestrian re-identification method based on deep multi-instance learning. The method comprises three stages: network pre-training, data set expansion and network fine-tuning. After the pedestrian re-identification feature extraction sub-network is pre-trained, the data set is expanded with a pedestrian keypoint feature region exchange algorithm; the feature extraction sub-network and the feature aggregation sub-network are then fine-tuned on the expanded data set; and data set expansion and fine-tuning are repeated iteratively until both sub-networks converge. Once training is complete, the pedestrian re-identification model from the original domain has been transferred and extended to the target domain using only a few samples. Given a small number of learning samples in the target domain, the model can be effectively transferred and extended to the target domain's surveillance network, and the method offers high accuracy, good robustness, and good extensibility and transferability.

Owner:FUDAN UNIV

© 2025 PatSnap. All rights reserved.