Indoor scene cognition method based on a bag-of-visual-words model

An indoor scene cognition technique based on the bag-of-visual-words model. It addresses problems such as the inability to complete high-intelligence tasks, and it improves the scene recognition rate while keeping the algorithm fast.

Embodiment Construction

[0026] The present invention will be further described below in conjunction with the accompanying drawings.

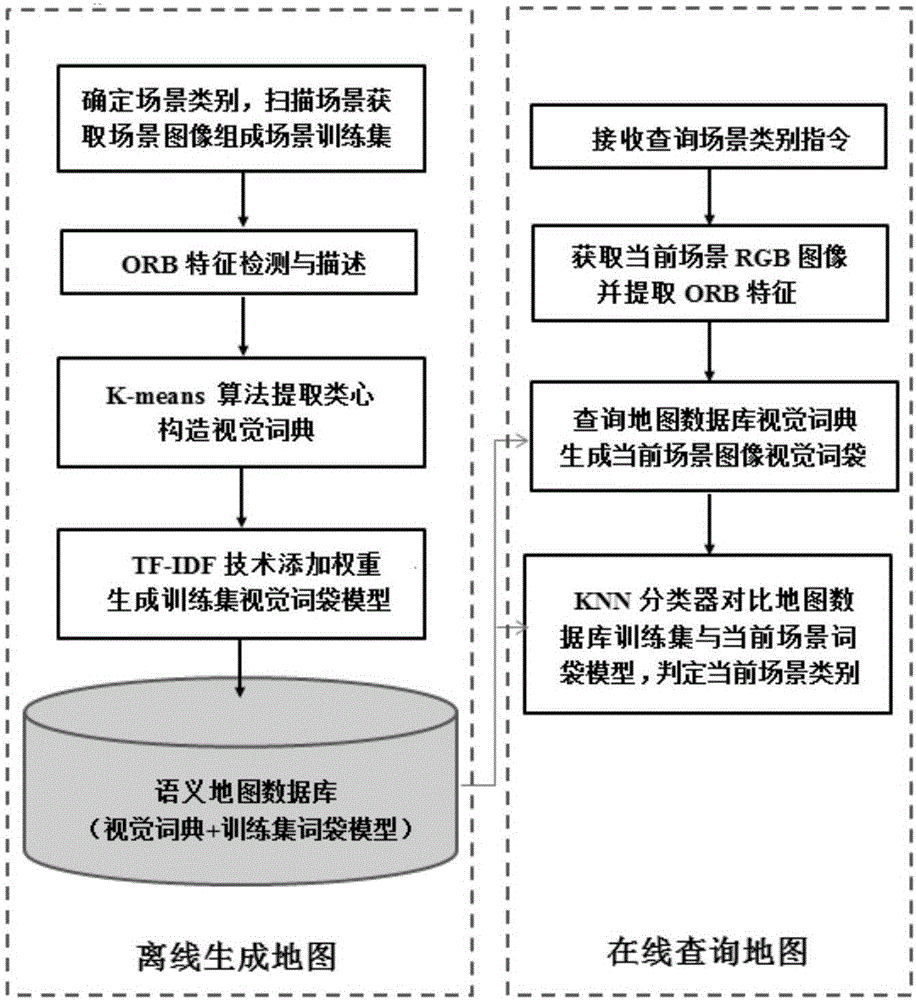

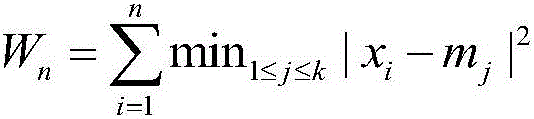

[0027] The invention discloses an indoor scene cognition method based on a bag-of-visual-words model. The method has two parts: offline map generation and online map query. The offline map generation part includes: scanning the scene to obtain a scene training set; detecting and describing ORB features; clustering the descriptors with K-means and taking the cluster centroids as the visual dictionary; and applying TF-IDF weighting to generate the bag-of-visual-words model database for the training set. The online map query part includes: receiving a scene query instruction; acquiring an RGB image of the current scene and extracting its ORB features; looking up the visual dictionary in the map database to generate a bag-of-visual-words model for the current scene image; using a KNN classifier to compare the training set of the map database with the bag-of-words model of the current scene ...
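The offline and online stages described in [0027] can be sketched in a few lines of plain Python. This is an illustrative sketch only, not the patented implementation. ORB extraction is left out, and each image is represented by a list of small real-valued tuples standing in for descriptors (real ORB descriptors are binary and are usually compared with Hamming distance). The function names, the farthest-point seeding of K-means, the smoothed IDF formula, and the cosine similarity used by the KNN vote are all assumptions made for this example; the source does not specify them.

```python
import math
from collections import Counter


def sq_dist(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(p, centroids):
    """Index of the visual word (centroid) closest to descriptor p."""
    return min(range(len(centroids)), key=lambda i: sq_dist(p, centroids[i]))


def kmeans(points, k, iters=20):
    """Lloyd's K-means; the k centroids form the visual dictionary.

    Seeding is deterministic farthest-point selection (an assumption,
    chosen so the example is reproducible)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = [sum(axis) / len(members) for axis in zip(*members)]
    return centroids


def word_counts(descriptors, vocab):
    """Histogram of visual words for one image."""
    return Counter(nearest(d, vocab) for d in descriptors)


def tfidf(counts, idf):
    """TF-IDF weighted bag-of-visual-words vector."""
    total = sum(counts.values()) or 1
    return [counts[w] / total * idf[w] for w in range(len(idf))]


def build_database(train_images, k):
    """Offline stage. train_images: list of (scene_label, descriptors)."""
    all_desc = [d for _, descs in train_images for d in descs]
    vocab = kmeans(all_desc, k)
    counts = [word_counts(descs, vocab) for _, descs in train_images]
    n = len(train_images)
    # Smoothed IDF (assumed form): rarer words get larger weights.
    idf = [math.log((1 + n) / (1 + sum(1 for c in counts if w in c))) + 1.0
           for w in range(k)]
    vectors = [tfidf(c, idf) for c in counts]
    labels = [label for label, _ in train_images]
    return vocab, idf, vectors, labels


def cosine(a, b):
    """Cosine similarity; 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def query(descriptors, db, k_neighbors=3):
    """Online stage: KNN majority vote over the most similar training images."""
    vocab, idf, vectors, labels = db
    q = tfidf(word_counts(descriptors, vocab), idf)
    ranked = sorted(range(len(vectors)),
                    key=lambda i: cosine(q, vectors[i]), reverse=True)
    votes = Counter(labels[i] for i in ranked[:k_neighbors])
    return votes.most_common(1)[0][0]
```

In a real system the descriptor lists would come from an ORB detector run on each training and query image, and `build_database` would run once offline so that `query` only has to quantize the new image's descriptors and compare one vector against the stored ones.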
