A fine-grained task scheduling method in a cloud environment

A fine-grained task scheduling technology, applied in multiprogramming arrangements, program control design, instruments, etc., that improves throughput and addresses high-latency problems.

Embodiment Construction

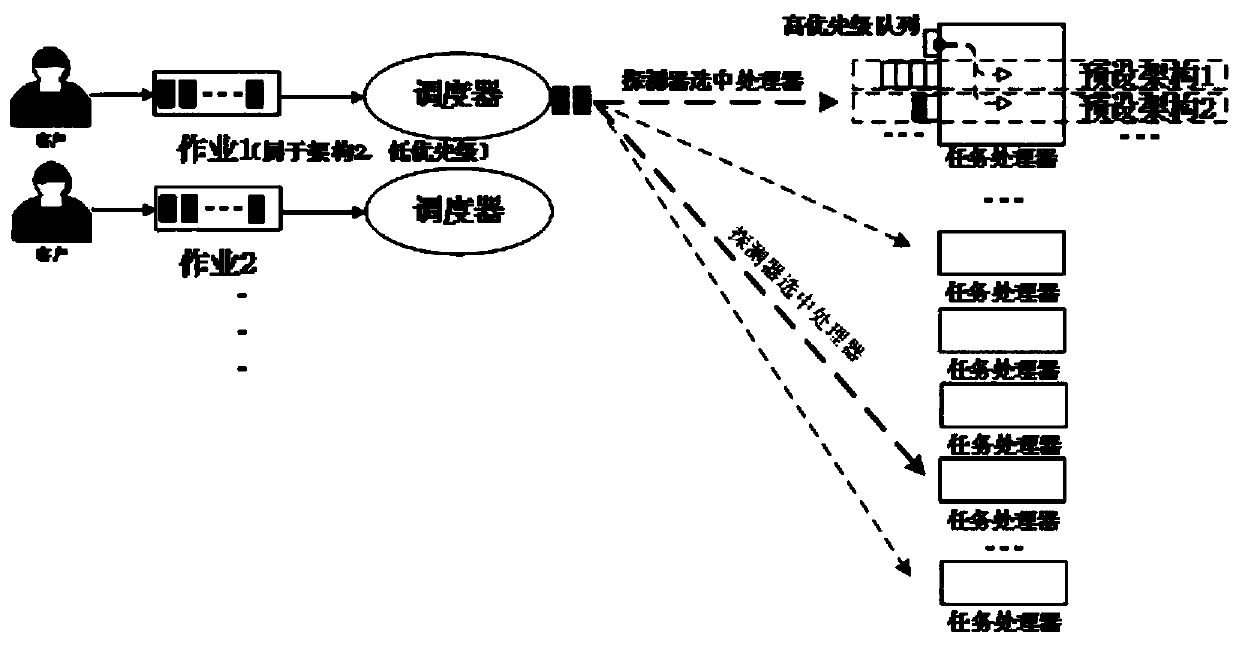

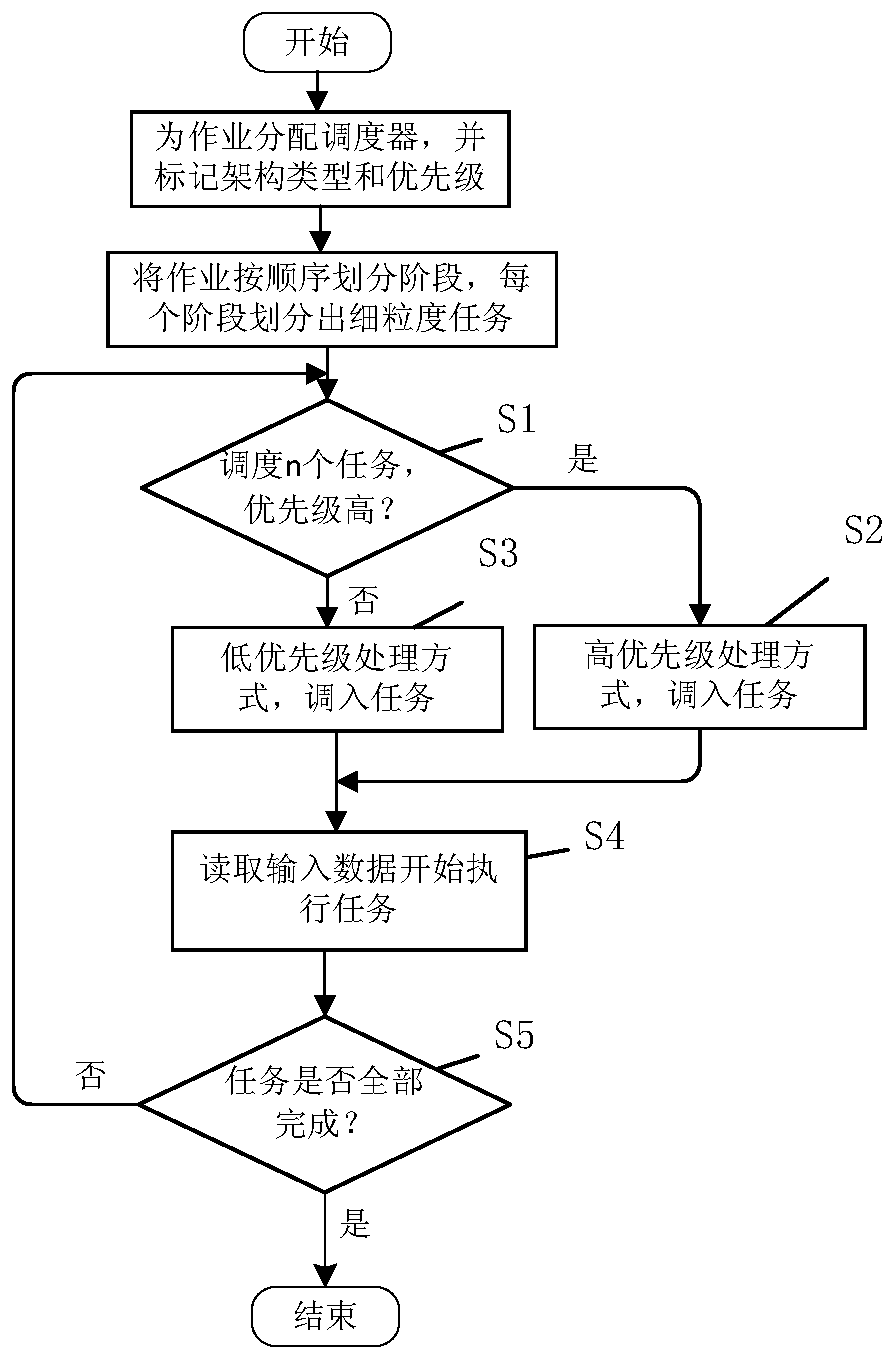

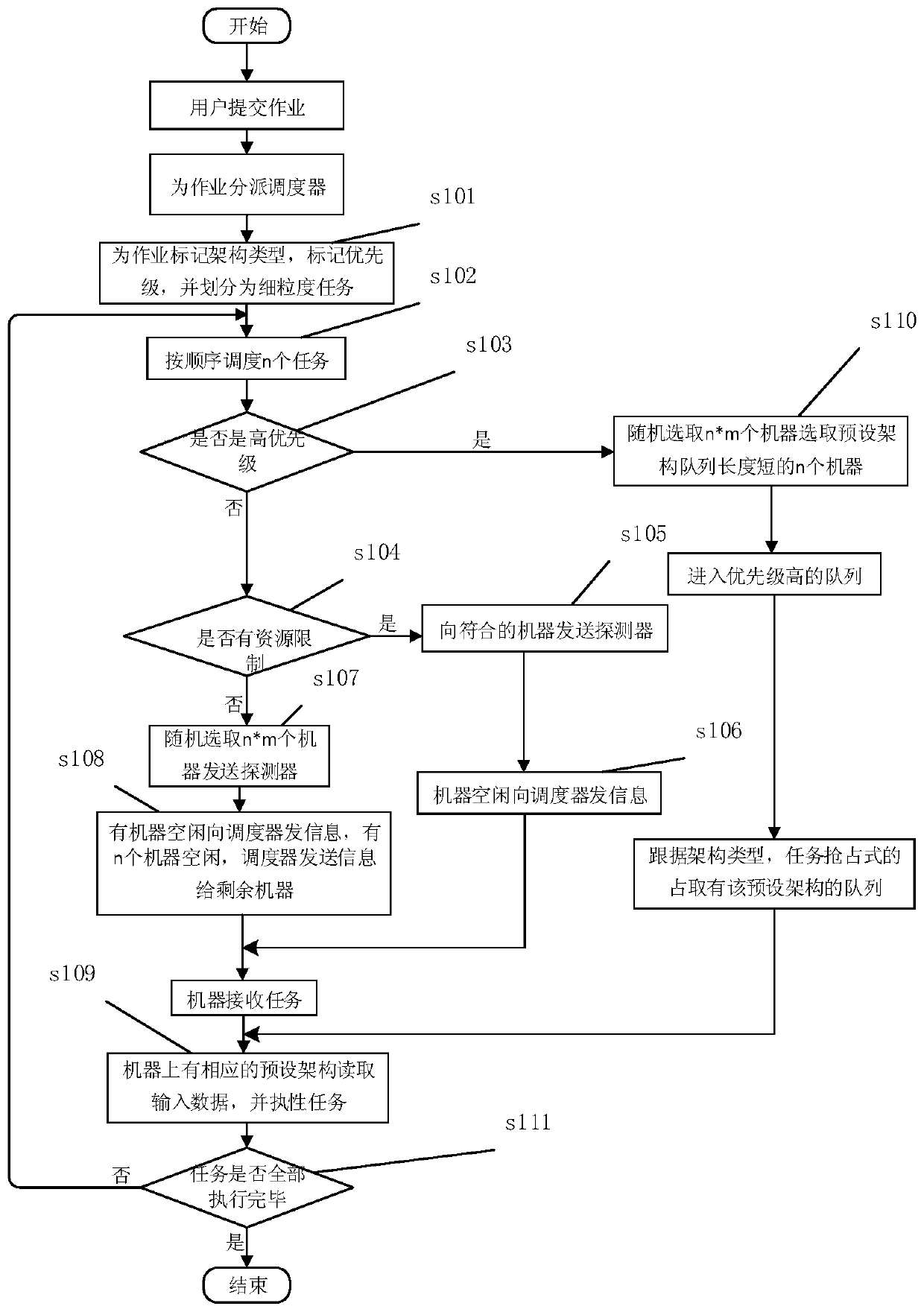

[0029] As shown in Figure 1 and Figure 2, a fine-grained task scheduling method in a cloud environment includes the following steps:

[0030] (1) Divide the job into fine-grained tasks, determine the priority and resource constraints of each fine-grained task, and schedule the tasks onto different machines and different queues according to priority and whether resources are constrained. A scheduler is assigned to each job the user submits; the job is marked with its architecture type and its priority; the job is divided into stages according to execution order, and the fine-grained tasks are scheduled as a directed acyclic graph, with each stage containing a task set of several tasks;

[0031] (2) Executors for different architectures are preset on each machine. The preset architecture is the data processing model of Spark or the data processing model of MapReduce; queue up in the queue a...
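
The staging and queueing described in step (1) can be illustrated with a minimal Python sketch. It is not the patented implementation. The names `Job`, `Machine`, `split_into_stages`, and `dispatch` are hypothetical, and so are three conventions: a lower number means higher priority, each machine has a fixed `slots` limit, and a task goes to the least-loaded machine that is not yet resource-limited. The text does not specify the actual placement rule.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+
import heapq


@dataclass
class Job:
    name: str
    architecture: str          # marked architecture type, e.g. "spark" or "mapreduce"
    priority: int              # assumption: lower number = more urgent
    deps: dict[str, set[str]]  # task -> set of tasks it depends on (a DAG)


@dataclass
class Machine:
    name: str
    slots: int                 # hypothetical resource limit
    load: int = 0              # tasks queued on this machine so far
    queues: dict[str, list] = field(default_factory=dict)  # one queue per architecture


def split_into_stages(deps):
    """Group tasks into stages by execution order.

    Each stage holds the tasks whose prerequisites all finished in earlier stages.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()           # raises CycleError if deps is not acyclic
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


def dispatch(job, machines):
    """Place every task of a job on a machine queue; return task -> machine name."""
    placement = {}
    seq = 0                    # tie-breaker so heap entries never compare equal
    for stage_no, stage in enumerate(split_into_stages(job.deps)):
        for task in stage:
            # Prefer machines that are not resource-limited, then the least loaded.
            target = min(machines, key=lambda m: (m.load >= m.slots, m.load))
            queue = target.queues.setdefault(job.architecture, [])
            heapq.heappush(queue, (job.priority, stage_no, seq, task))
            target.load += 1
            placement[task] = target.name
            seq += 1
    return placement


if __name__ == "__main__":
    job = Job("wordcount", "spark", 1,
              {"map1": set(), "map2": set(), "reduce": {"map1", "map2"}})
    machines = [Machine("A", slots=1), Machine("B", slots=1)]
    print(split_into_stages(job.deps))
    print(dispatch(job, machines))
```

Keeping one priority queue per architecture on each machine mirrors the per-architecture executors mentioned in step (2). How those executors drain the queues is not covered here because the source text is truncated at that point.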
