A Cross-camera Pedestrian Trajectory Matching Method

A cross-camera technique, applied in the field of cross-camera pedestrian trajectory matching, that addresses problems such as measurement errors and low tracking accuracy and resolves the alignment of trajectory points.



Examples

Embodiment 1

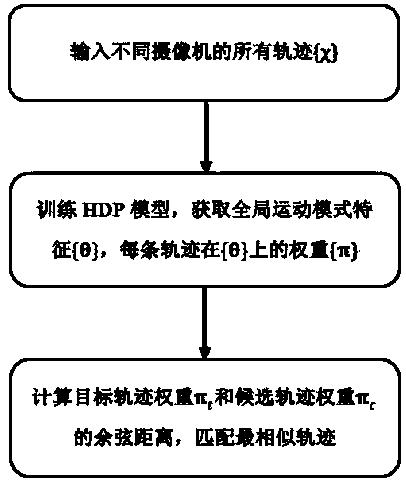

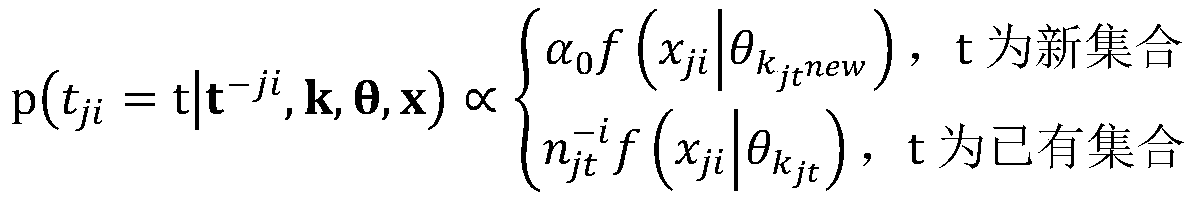

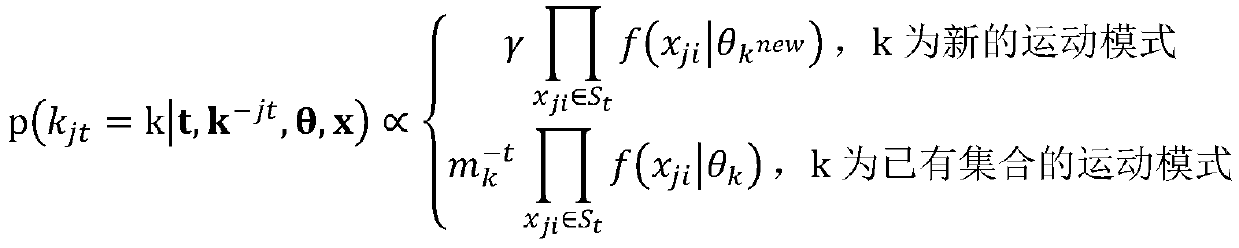

[0036] As shown in Figure 1, the method provided by the invention specifically comprises the following steps:

[0037] A pedestrian trajectory is represented as $\{x\} = (x_1, x_2, \ldots, x_L)$, where $x_i$ is an observation and $L$ is the length of the trajectory. Each observation $x_i$ consists of the position of the current point and the motion direction between adjacent points. The position takes values in a 160×120 range, and the motion direction between adjacent points is divided into four directions: up, down, left, and right. Each observation is therefore a three-dimensional vector, $x_i \in \mathbb{R}^3$.
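The representation in [0037] can be illustrated with a minimal Python sketch. Several details are assumptions not stated in the source: the integer codes for the four directions, the image-coordinate convention (y grows downward), the rule that the dominant axis of a displacement decides its direction, and the choice to give the first point the direction of the step that leaves it. The names `quantize_direction` and `build_trajectory` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical direction codes; the source only names the four directions.
UP, DOWN, LEFT, RIGHT = 0, 1, 2, 3
WIDTH, HEIGHT = 160, 120  # position range given in the text

Observation = Tuple[int, int, int]  # (x, y, direction)


def quantize_direction(dx: int, dy: int) -> int:
    """Map a displacement to one of four directions.

    Assumptions: image coordinates (y grows downward), the axis with the
    larger absolute displacement wins, and a zero displacement falls back
    to RIGHT.
    """
    if abs(dx) >= abs(dy):
        return RIGHT if dx >= 0 else LEFT
    return DOWN if dy > 0 else UP


def build_trajectory(points: List[Tuple[int, int]]) -> List[Observation]:
    """Turn a list of (x, y) positions into three-dimensional observations."""
    observations: List[Observation] = []
    for i, (px, py) in enumerate(points):
        if not (0 <= px < WIDTH and 0 <= py < HEIGHT):
            raise ValueError(f"point {(px, py)} lies outside the {WIDTH}x{HEIGHT} range")
        # Direction of the step arriving at this point; the first point
        # borrows the direction of the step leaving it (an assumption).
        a, b = (i - 1, i) if i > 0 else (0, min(1, len(points) - 1))
        dx = points[b][0] - points[a][0]
        dy = points[b][1] - points[a][1]
        observations.append((px, py, quantize_direction(dx, dy)))
    return observations


trajectory = build_trajectory([(10, 10), (12, 10), (12, 15), (11, 15), (11, 9)])
```

Here `len(trajectory)` plays the role of $L$, and each tuple is one observation $x_i$.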

[0038] On this basis, the method provided by the invention comprises the following steps:

[0039] S1. Extract a pedestrian trajectory of the target camera as the target trajectory, and then use all the trajectories of the remaining cameras within the time period as candidate trajectories; ...
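The visible part of step S1 can be sketched as follows. This is only an illustration under stated assumptions: the source does not define how the time period is chosen or how a trajectory is stored, so the `Track` record, its `start` and `end` timestamps, the overlap test, and the function `select_candidates` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Track:
    """Hypothetical record for one pedestrian trajectory."""
    camera_id: str
    start: float  # time of the first observation (assumed field)
    end: float    # time of the last observation (assumed field)
    observations: List[Tuple[int, int, int]] = field(default_factory=list)


def select_candidates(target: Track, tracks: List[Track],
                      window: Tuple[float, float]) -> List[Track]:
    """Return trajectories from the other cameras that overlap the window.

    Assumption: "within the time period" is read as any temporal overlap
    with the closed interval `window`.
    """
    w_start, w_end = window
    return [
        t for t in tracks
        if t.camera_id != target.camera_id  # only the remaining cameras
        and t.start <= w_end and t.end >= w_start
    ]


target = Track("cam_A", 0.0, 10.0)
tracks = [
    target,
    Track("cam_A", 2.0, 8.0),    # same camera as the target: excluded
    Track("cam_B", 5.0, 15.0),   # overlaps the window: kept
    Track("cam_C", 30.0, 40.0),  # outside the window: excluded
]
candidates = select_candidates(target, tracks, window=(0.0, 20.0))
```

With this input, only the `cam_B` trajectory is kept as a candidate.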
