Unmanned vehicle real-time positioning method based on laser reflection strength

A real-time positioning technology based on laser reflection intensity, applied in the field of radio wave measurement systems and instruments. It addresses the problems of positioning algorithm failure and false detection, and achieves high detection accuracy with a reduced calculation amount.

Embodiment

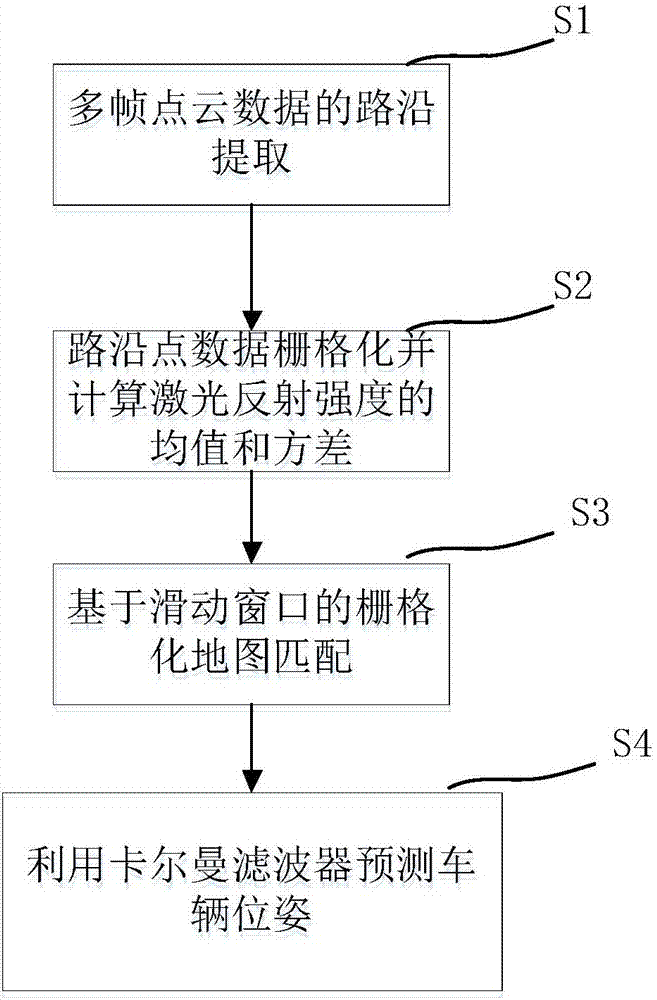

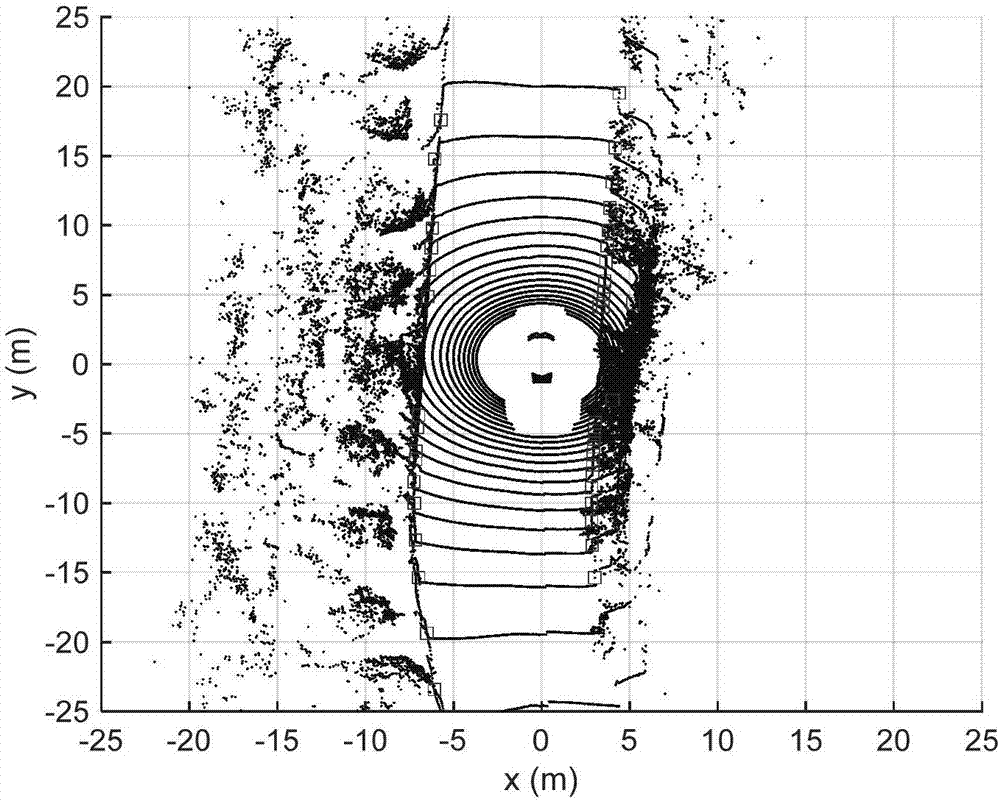

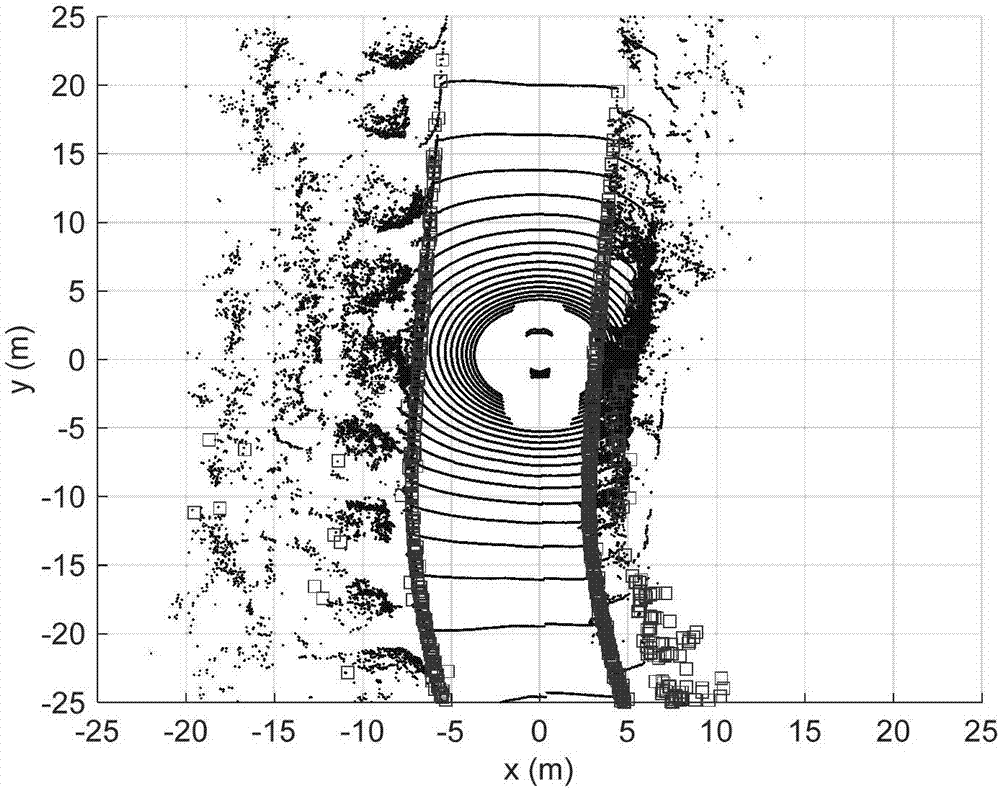

[0035] This embodiment provides a real-time positioning method for unmanned vehicles based on laser reflection intensity. The method first extracts laser reflection intensity features from single-frame laser point cloud data, then uses the vehicle kinematics model to transform the coordinates of roadside feature points detected over multiple frames into the current unmanned vehicle coordinate system. It then uses a regional probability matching search algorithm to match the resulting reflection intensity distribution against the reflection intensity distribution in the high-precision map, and calculates the lateral, longitudinal, and heading angle deviations of the current unmanned vehicle. These deviations are input to the Kalman filter as observation values for pose estimation. This embodiment is innovative and practical: it can realize real-time precise positioning in a complex environment and can effectively improve the driving safety of unmanned vehicles.
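Two of the stages named in paragraph [0035] can be illustrated with a minimal Python sketch: re-expressing feature points from an earlier frame in the current vehicle coordinate system, and fusing the measured deviations in a Kalman update. This is not the patent's implementation. The function names `to_current_frame` and `kalman_update`, the planar `(x, y, yaw)` pose representation, and the diagonal-covariance simplification are all assumptions made for illustration. The regional probability matching search is not shown, since the source text does not describe it in enough detail here.

```python
import math


def to_current_frame(points, past_pose, current_pose):
    """Re-express 2D points from a past vehicle frame in the current vehicle frame.

    Assumption: poses are (x, y, yaw) in a shared odometry frame, e.g. obtained
    by integrating a vehicle kinematics model between laser frames.
    """
    px, py, pyaw = past_pose
    cx, cy, cyaw = current_pose
    transformed = []
    for x, y in points:
        # Past vehicle frame -> shared odometry frame.
        wx = px + x * math.cos(pyaw) - y * math.sin(pyaw)
        wy = py + x * math.sin(pyaw) + y * math.cos(pyaw)
        # Shared odometry frame -> current vehicle frame (inverse rigid transform).
        dx, dy = wx - cx, wy - cy
        transformed.append((
            dx * math.cos(cyaw) + dy * math.sin(cyaw),
            -dx * math.sin(cyaw) + dy * math.cos(cyaw),
        ))
    return transformed


def kalman_update(state, var, measurement, meas_var):
    """Per-component scalar Kalman measurement update.

    Assumptions: the state is [lateral, longitudinal, heading] deviation, each
    component is observed directly, and both covariances are diagonal. Heading
    values are assumed small enough that angle wrap-around can be ignored.
    """
    new_state, new_var = [], []
    for x, p, z, r in zip(state, var, measurement, meas_var):
        gain = p / (p + r)               # Kalman gain for this component
        new_state.append(x + gain * (z - x))
        new_var.append((1.0 - gain) * p)
    return new_state, new_var
```

For example, a point 1 m ahead of the vehicle at an earlier pose `(0, 0, 0)` lands at the origin of the current frame once the vehicle has driven 1 m forward to `(1, 0, 0)`. A full filter would also include a prediction step and cross-covariance terms, which this sketch leaves out.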

[0036] Specific steps are as follows:

[0037] 1. Roads...
