Cross-modal hash retrieval method based on mapping dictionary learning

This technology relates to dictionary learning and cross-modal retrieval, and specifically to cross-modal hash retrieval based on mapping dictionary learning. It can address problems such as limits on where the algorithm can be applied, difficulty in learning hash functions, and uneven distribution of hash codes.
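For orientation, cross-modal hash retrieval generally encodes images and texts into binary codes of a shared length and ranks database items of one modality by Hamming distance to a query code from the other modality. The sketch below shows only that generic ranking stage. It assumes codes are already available as equal-length 0/1 sequences; the function names `hamming_distance` and `hamming_rank` are hypothetical, and how the codes are learned (the mapping dictionary learning itself) is not shown here and is not claimed to be the patent's procedure.

```python
from typing import List, Sequence


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of bit positions where two equal-length 0/1 codes differ."""
    if len(a) != len(b):
        raise ValueError("hash codes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def hamming_rank(query: Sequence[int], database: Sequence[Sequence[int]]) -> List[int]:
    """Return database indices ordered from nearest to farthest in Hamming distance.

    sorted() is stable, so items at equal distance keep their database order.
    """
    return sorted(range(len(database)), key=lambda i: hamming_distance(query, database[i]))


# Hypothetical example: a text-query code ranked against three image codes.
text_query = [1, 0, 1, 1]
image_db = [
    [1, 0, 1, 0],  # distance 1
    [0, 1, 0, 0],  # distance 4
    [1, 0, 1, 1],  # distance 0
]
print(hamming_rank(text_query, image_db))  # [2, 0, 1]
```

Hamming ranking is used here because comparing short binary codes is cheap, which is the usual motivation for hashing in large-scale retrieval.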


Specific Embodiment

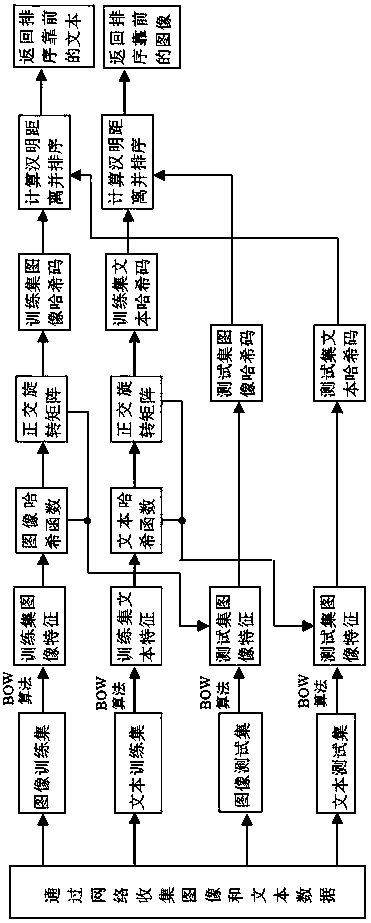

[0067] Specific embodiment: a specific embodiment of the present invention is described in detail below with reference to the accompanying drawing.

[0068] Although the present invention specifies two modalities, image and text, the algorithm can easily be extended to other modalities and to settings with more than two modalities. For convenience of description, the present invention considers only the image and text modalities.

[0069] Referring to Figure 1, a cross-modal hash retrieval method based on mapping dictionary learning implements the following steps on a computer device:

[0070] Step S1: collect image and text samples from the network, build an image-text data set for cross-media retrieval, and divide this data set into a training set and a test set;

[0071] Step S1 includes collecting image and text samples from social networking, shopping, and other websites on the Internet, and forming image-text sample pairs from image...
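Step S1 divides the paired image-text data set into a training set and a test set. The sketch below illustrates one way to do that while keeping each image together with its paired text. The function name `split_pairs`, the 20% default test ratio, and the fixed shuffle seed are all hypothetical choices for illustration; the source does not state how the split is made.

```python
import random
from typing import List, Tuple, TypeVar

I = TypeVar("I")  # image sample type (e.g. a file path); assumption
T = TypeVar("T")  # text sample type (e.g. a caption string); assumption


def split_pairs(
    images: List[I],
    texts: List[T],
    test_ratio: float = 0.2,  # hypothetical default, not from the source
    seed: int = 0,            # fixed seed so the split is reproducible
) -> Tuple[List[Tuple[I, T]], List[Tuple[I, T]]]:
    """Shuffle image-text pairs together and split them into (train, test)."""
    if len(images) != len(texts):
        raise ValueError("every image needs exactly one paired text")
    if not 0.0 < test_ratio < 1.0:
        raise ValueError("test_ratio must be in (0, 1)")
    # Zip first so shuffling never separates an image from its text.
    pairs = list(zip(images, texts))
    random.Random(seed).shuffle(pairs)
    n_test = int(round(len(pairs) * test_ratio))
    return pairs[n_test:], pairs[:n_test]


# Hypothetical example with 10 image-text pairs.
images = [f"img_{i}.jpg" for i in range(10)]
texts = [f"caption {i}" for i in range(10)]
train, test = split_pairs(images, texts, test_ratio=0.2)
print(len(train), len(test))  # 8 2
```

Shuffling the zipped pairs, rather than the two lists separately, is the design choice that preserves image-text correspondence across both sets.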
