Motion-sensing game interaction method and system based on deep learning and big data

A deep learning and somatosensory game technology in the field of human-computer interaction. It addresses problems such as obstacles to popularizing and promoting somatosensory games, demanding recognition accuracy requirements, and complex configuration environments, and it achieves strong adaptive recognition ability, a good somatosensory experience, and reduced costs.

Examples

Embodiment 1

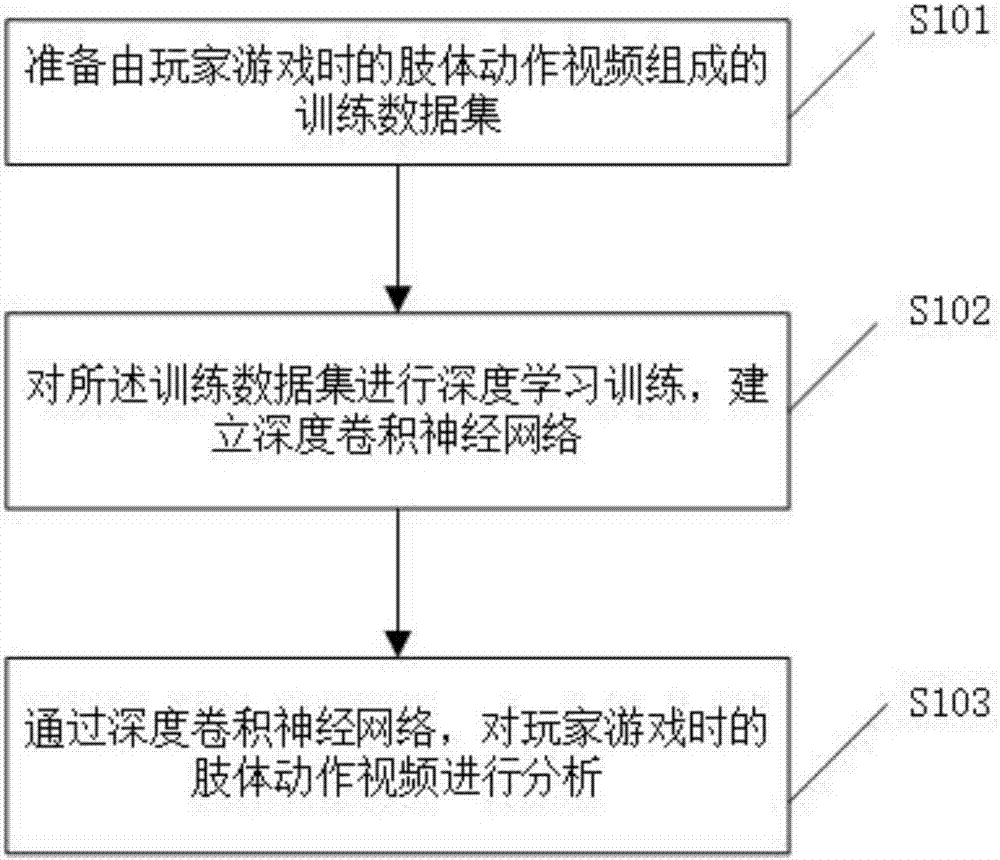

[0041] As shown in Figure 1, an embodiment of the present invention provides a deep learning-based somatosensory game interaction method, comprising:

[0042] Step S101: collect body-movement videos from different games in advance to build an action video sample database, and label each sample with its corresponding action tag, where the action tags correspond one-to-one to game object control instructions;

[0043] Step S102: perform deep learning training on the above video sample dataset to build a deep convolutional neural network; during training, backpropagate the error and update the network weight parameters by stochastic gradient descent until the network's loss function reaches a minimum. The deep convolutional neural network takes as input the video of the player's body movements during the game and outputs the player's action prediction result; the action prediction result includes action cl...
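
The training described in Step S102 can be sketched as follows. This is a minimal illustration, not the patent's network: it assumes each video clip has already been reduced to a fixed-length feature vector, and it swaps the deep convolutional neural network for a single softmax layer so that the backpropagated error and the stochastic gradient descent (SGD) update stay visible. The function names, learning rate, and epoch count are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax: turns raw scores into probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights, biases, x):
    """Probability distribution over action classes for one feature vector."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


def mean_loss(weights, biases, samples):
    """Average cross-entropy loss that training tries to minimize."""
    return sum(-math.log(predict_proba(weights, biases, x)[y])
               for x, y in samples) / len(samples)


def train_sgd(samples, num_classes, lr=0.1, epochs=200, seed=0):
    """Per-sample SGD on cross-entropy loss.

    samples: list of (feature_vector, action_tag_index) pairs.
    """
    rng = random.Random(seed)
    dim = len(samples[0][0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # stochastic: visit samples in random order
        for x, y in data:
            probs = predict_proba(weights, biases, x)
            for k in range(num_classes):
                # Error backpropagated to logit k: d(loss)/d(z_k) = p_k - [k == y]
                err = probs[k] - (1.0 if k == y else 0.0)
                for i in range(dim):
                    weights[k][i] -= lr * err * x[i]
                biases[k] -= lr * err
    return weights, biases
```

Each pair in `samples` stands for one labeled clip from the Step S101 database, with the action tag stored as a class index. In a real convolutional network, the same `p - one_hot(y)` error would be propagated further back through the convolutional layers before each weight update.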

Embodiment 2

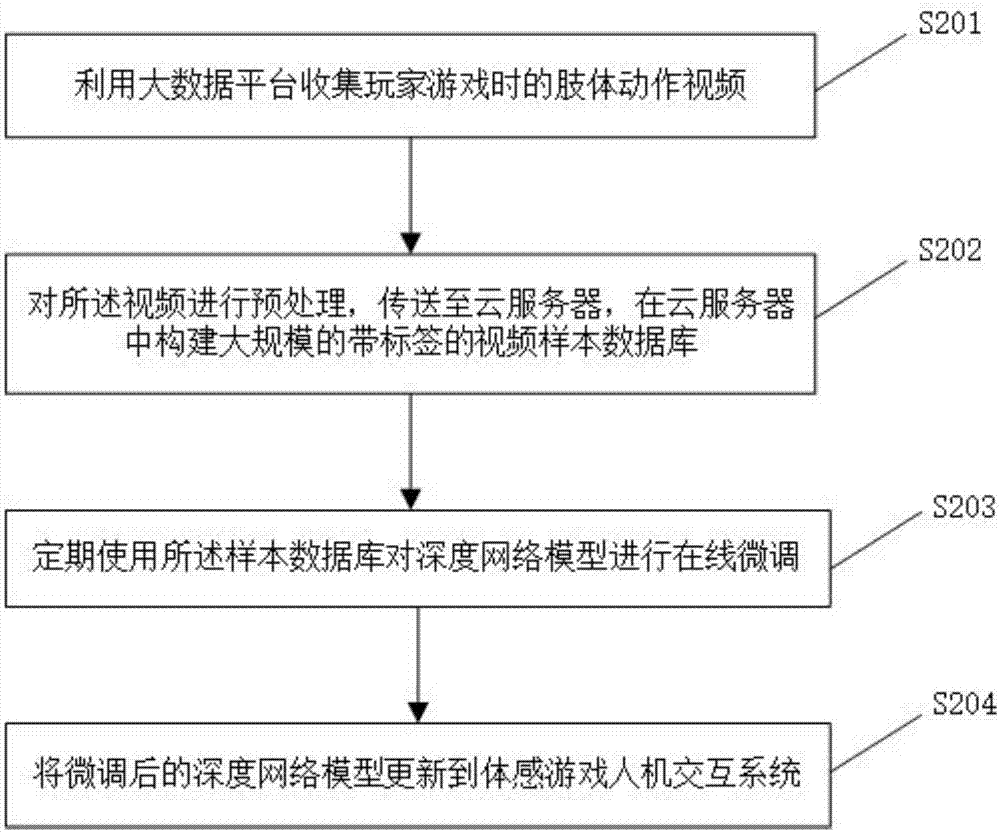

[0054] As shown in Figure 2, an embodiment of the present invention provides a big-data-based method for online optimization of a deep learning network model, comprising:

[0055] Step S201: use the big data platform to collect videos of players' body movements during gameplay;

[0056] Step S202: preprocess the videos, send them to the cloud server, and build an action video sample database on the cloud server;

[0057] Step S203: periodically fine-tune the offline-trained deep convolutional neural network model on the video sample database to further improve the network's recognition accuracy;

[0058] Step S204: periodically deploy the online fine-tuned network model to the somatosensory game interaction system so that players get a better somatosensory experience.
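
Steps S203 and S204 can be illustrated with a small sketch. It assumes that fine-tuning means continuing gradient descent from the offline-trained weights with a small learning rate, and that deployment is a version check between a cloud-side registry and the game client. `ModelRegistry`, `fine_tune`, and `GameClient` are hypothetical names, the model is reduced to a flat parameter list, and the scheduling of the periodic runs (for example a timer on the cloud server) is left out.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Hypothetical cloud-side store of fine-tuned model versions."""
    versions: List[list] = field(default_factory=list)

    def publish(self, params: list) -> int:
        """Store a copy of params and return its 1-based version number."""
        self.versions.append(copy.deepcopy(params))
        return len(self.versions)

    def latest(self):
        """Return (version, params) of the newest model, or (0, None) if empty."""
        if not self.versions:
            return 0, None
        return len(self.versions), self.versions[-1]


def fine_tune(params, grad_fn, batch, lr=0.01, steps=100):
    """Step S203 sketch: continue gradient descent from the offline-trained
    params on newly collected data, using a small learning rate so the model
    adapts instead of being retrained from scratch."""
    tuned = list(params)
    for _ in range(steps):
        grads = grad_fn(tuned, batch)
        tuned = [p - lr * g for p, g in zip(tuned, grads)]
    return tuned


@dataclass
class GameClient:
    """Hypothetical somatosensory game client that pulls newer models (S204)."""
    version: int = 0
    params: Optional[list] = None

    def sync(self, registry: ModelRegistry) -> bool:
        """Install the registry's newest model if it is newer; report whether it was."""
        latest_version, latest_params = registry.latest()
        if latest_version > self.version:
            self.version = latest_version
            self.params = copy.deepcopy(latest_params)
            return True
        return False
```

For example, with a one-parameter model `y = w * x` trained offline to `w = 1.0`, fine-tuning on new samples where `y = 2 * x` moves `w` close to 2.0, and publishing the result lets `GameClient.sync` pick up version 2 on its next check.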

[0059] In this embodiment, preprocessing the video includes removing frames irrelevant to the game player's operation co...
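
The source text is truncated here, so the exact relevance criterion is unknown. As one possible illustration, the sketch below assumes that "irrelevant" frames are those in which the player barely moves, measured as the mean absolute pixel change against the last kept frame. Frames are plain 2-D lists of grayscale values, and `select_motion_frames` and its threshold are hypothetical.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute pixel difference between two same-sized grayscale frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def select_motion_frames(frames, threshold=5.0):
    """Return indices of frames to keep.

    Hypothetical relevance rule: a frame is kept only if it differs from the
    last kept frame by more than `threshold` (mean absolute pixel change),
    so stretches where the player is not moving are dropped.
    """
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[kept[-1]], frames[i]) > threshold:
            kept.append(i)
    return kept
```

Comparing against the last kept frame, rather than the immediately preceding one, means a slow but sustained movement eventually accumulates enough change to be kept.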

Embodiment 3

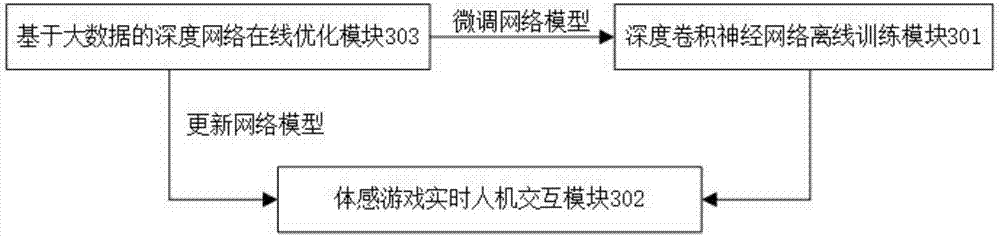

[0062] As shown in Figure 3 and Figure 4, an embodiment of the present invention provides a somatosensory game interaction system based on deep learning and big data, comprising:

[0063] The deep convolutional neural network offline training module 301 is configured as follows:

[0064] Deep learning training is performed on a training sample dataset composed of videos of players' body movements during gameplay, and a deep convolutional neural network is established. During training, the error is backpropagated and the network weight parameters are updated by stochastic gradient descent until the network's loss function reaches a minimum. The deep convolutional neural network takes the game player's action video as input and outputs the player's action prediction result, which includes the action classification and its probability distribution data;
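
The prediction output described above (an action classification plus its probability distribution), combined with the one-to-one mapping from action tags to control instructions in Step S101, can be sketched as follows. The action names, command strings, and the `min_confidence` cutoff are hypothetical, and the network's raw output scores (logits) are assumed to be given.

```python
import math

# Hypothetical action tags and control instructions; the source only states
# that tags correspond one-to-one to game object control instructions.
ACTION_TO_COMMAND = {
    "jump": "CMD_JUMP",
    "squat": "CMD_CROUCH",
    "wave_left": "CMD_MOVE_LEFT",
    "wave_right": "CMD_MOVE_RIGHT",
}
ACTIONS = list(ACTION_TO_COMMAND)  # class index -> action tag

# One-to-one check: no two action tags share a control instruction.
assert len(set(ACTION_TO_COMMAND.values())) == len(ACTION_TO_COMMAND)


def softmax(logits):
    """Numerically stable softmax over the network's raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_prediction(logits):
    """Return (action classification, probability distribution over actions)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ACTIONS[best], dict(zip(ACTIONS, probs))


def to_command(logits, min_confidence=0.6):
    """Map the top action to its control instruction, or None if unsure."""
    action, dist = to_prediction(logits)
    if dist[action] < min_confidence:
        return None
    return ACTION_TO_COMMAND[action]
```

Returning `None` for low-confidence predictions is one design choice for avoiding spurious game commands when the player's movement is ambiguous; the source does not say how such cases are handled.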

[0065] The real-time human-compute...
