A Feature Extraction Method Fused with Inter-class Standard Deviation in Acoustic Scene Classification

A scene classification and feature extraction technology, applied in the fields of speech analysis, speech recognition, and instruments. It addresses inconsistent perceptual resolution and insufficient feature expression, both of which lower the acoustic scene classification and recognition rate. Its stated benefits are convenient implementation, improved recognition performance, and a simple system structure.

Embodiment Construction

[0031] The present invention is further described below with reference to an embodiment:

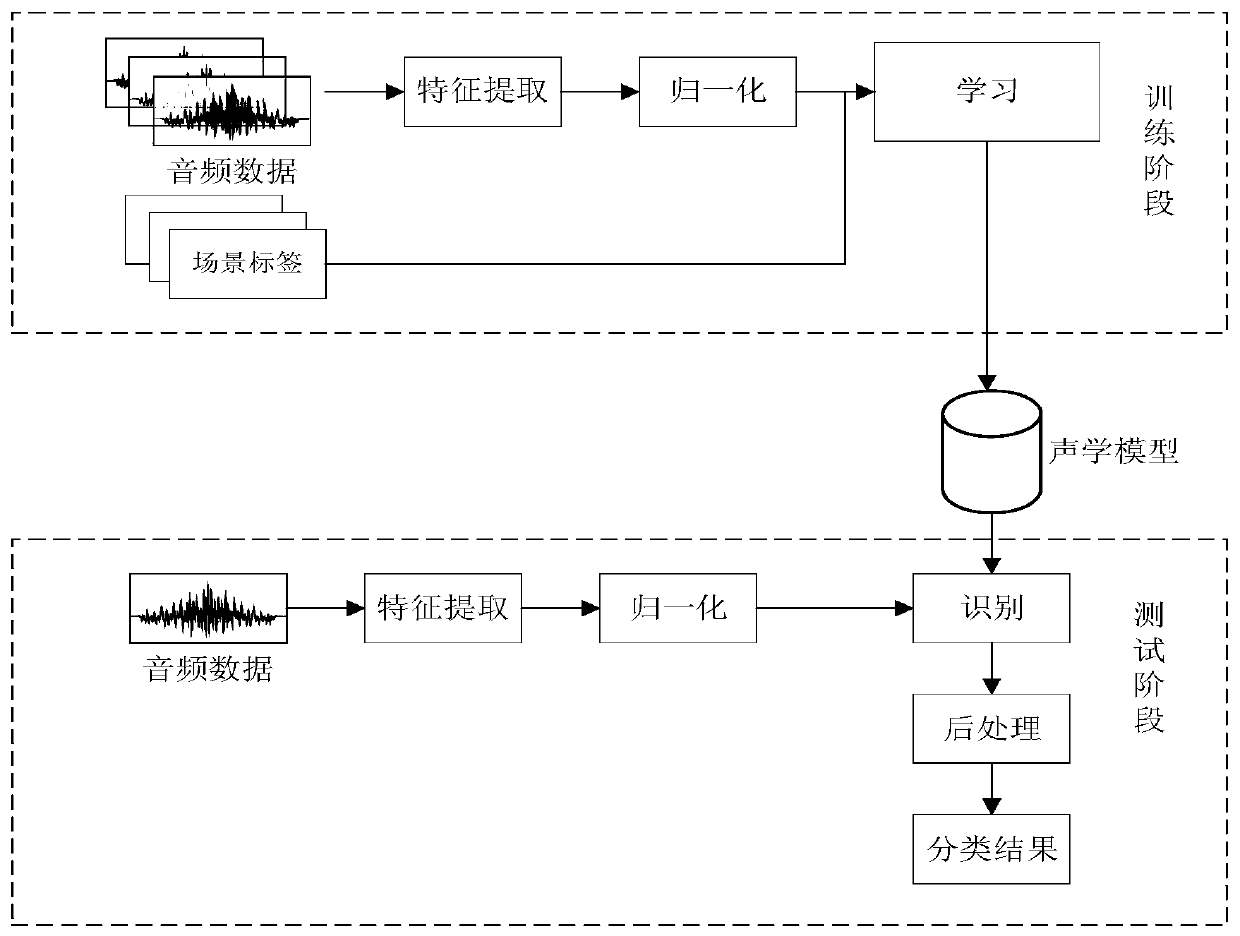

[0032] The acoustic scene classification system based on fused inter-class standard deviation features, provided by the embodiment of the present invention, includes the modules described below. In a specific implementation, each module may be realized using software solidification (firmware) technology.

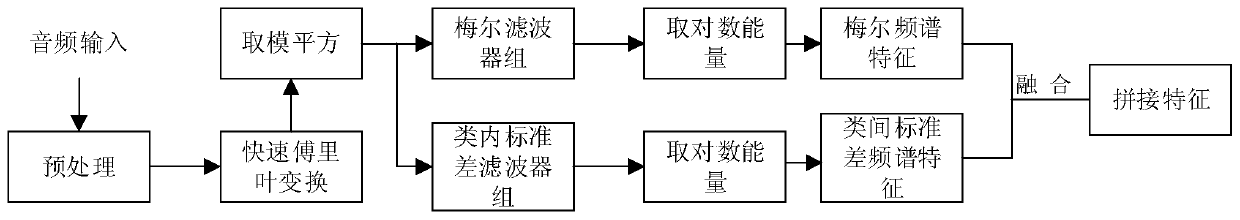

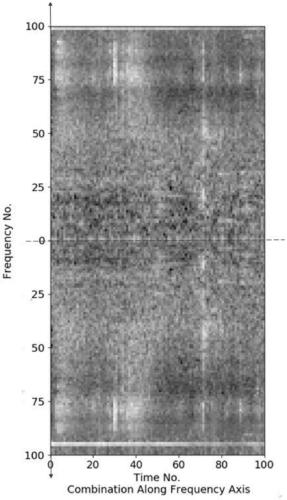

[0033] Feature generation module based on non-linear mapping of the inter-class frequency-domain standard deviation: it takes audio as input and outputs a spectral image feature that represents the sound scene on the basis of the frequency-domain standard deviation (Frequency Standard Deviation based SIF, FSD-SIF). The spectral image feature is generated from the frequency-domain standard deviation as follows:

[0034] Step 1: take the audio of DCASE2017 as the reference original audio training set, denoted original training set A;

[0035] Step 2: ...
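
The text above stops before defining how the inter-class standard deviation is computed or how the non-linear mapping is built, so the following is only a minimal sketch of one plausible reading, not the patented procedure. It assumes magnitude spectra have already been extracted from training set A and grouped by scene label. It takes the mean spectrum of each class, measures the standard deviation of those class means in every frequency bin, and then warps the frequency axis so that each output band carries an equal share of the total inter-class standard deviation (loosely analogous to how a mel filterbank warps the axis by pitch perception). The function names `interclass_std`, `std_band_edges`, and `pool_frame`, and the equal-share banding rule itself, are hypothetical stand-ins for the patent's non-linear mapping.

```python
from statistics import mean, pstdev


def interclass_std(spectra_by_class):
    """Per-frequency-bin standard deviation of the class-mean spectra.

    spectra_by_class maps a scene label to a list of magnitude spectra,
    each an equal-length list of floats (one value per frequency bin).
    """
    class_means = []
    for spectra in spectra_by_class.values():
        n_bins = len(spectra[0])
        # Mean spectrum of this class, bin by bin.
        class_means.append([mean([s[k] for s in spectra]) for k in range(n_bins)])
    n_bins = len(class_means[0])
    # Spread of the class means in each bin: large values mark bins that separate classes.
    return [pstdev([m[k] for m in class_means]) for k in range(n_bins)]


def std_band_edges(sigma, n_bands):
    """Split the bins into n_bands contiguous bands with roughly equal shares of sum(sigma).

    Returns edges such that band b covers bins edges[b] .. edges[b + 1] - 1.
    HYPOTHETICAL mapping rule, assumed for illustration only.
    """
    n_bins = len(sigma)
    if not 1 <= n_bands <= n_bins:
        raise ValueError("need 1 <= n_bands <= number of bins")
    cumulative, running = [], 0.0
    for s in sigma:
        running += s
        cumulative.append(running)
    total = running
    if total == 0:
        # No inter-class spread anywhere: fall back to a uniform split.
        return [b * n_bins // n_bands for b in range(n_bands + 1)]
    edges = [0]
    for b in range(1, n_bands):
        target = total * b / n_bands
        # Close the band after the first bin whose cumulative sum reaches the target.
        k = next(i for i, c in enumerate(cumulative) if c >= target)
        edge = max(k + 1, edges[-1] + 1)          # every band keeps at least one bin
        edge = min(edge, n_bins - (n_bands - b))  # leave one bin per remaining band
        edges.append(edge)
    edges.append(n_bins)
    return edges


def pool_frame(frame, edges):
    """Average one spectral frame within each band, giving one time column of the warped image."""
    return [mean(frame[edges[b]:edges[b + 1]]) for b in range(len(edges) - 1)]
```

Under this assumed rule, bins where the class means differ most end up in narrow bands (finer frequency resolution), while bins that barely separate the scene classes are merged into wide ones. Applying `pool_frame` to successive frames and stacking the results gives a two-dimensional spectral image.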
