Voice conversion method based on deep learning

A voice conversion method based on deep learning technology, applied in voice analysis, instruments, etc. It can solve problems such as computing resources and equipment that are unsuited to the task, poor voice quality, and a sudden increase in calculation volume, so as to save terminal computing and storage resources and improve the voice conversion effect.



Embodiment Construction

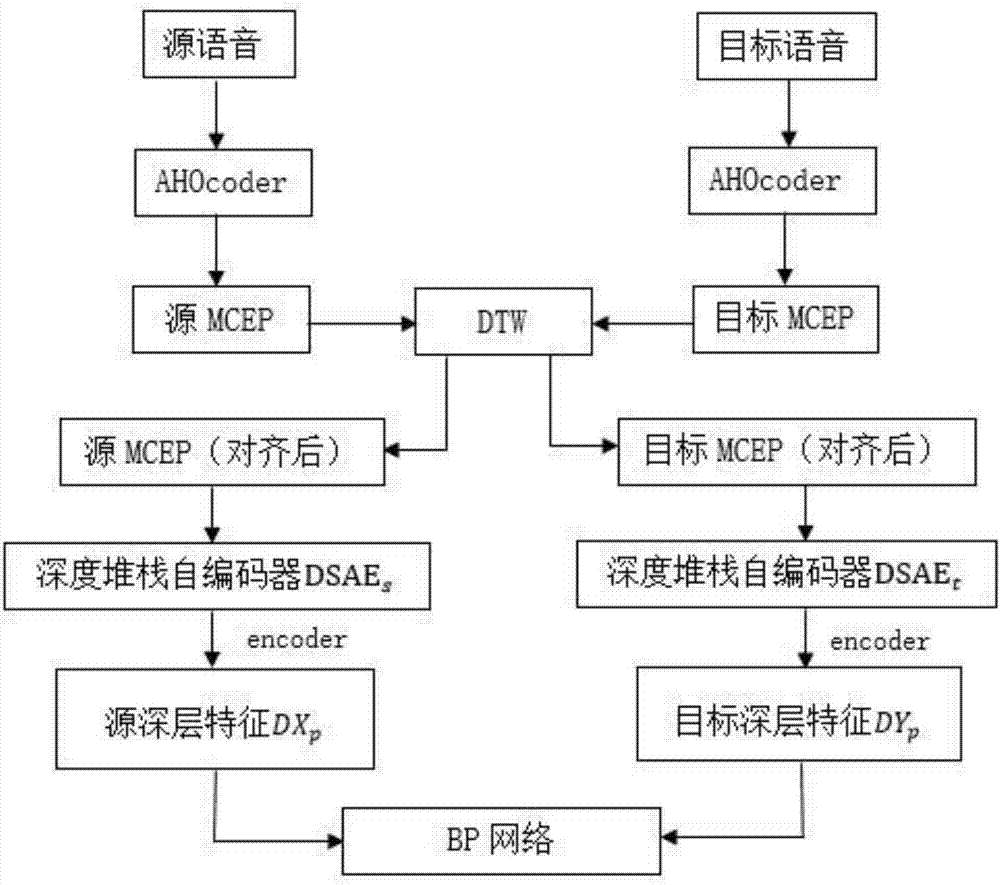

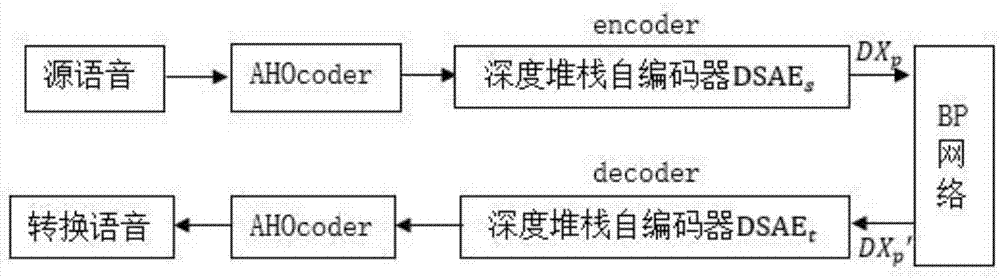

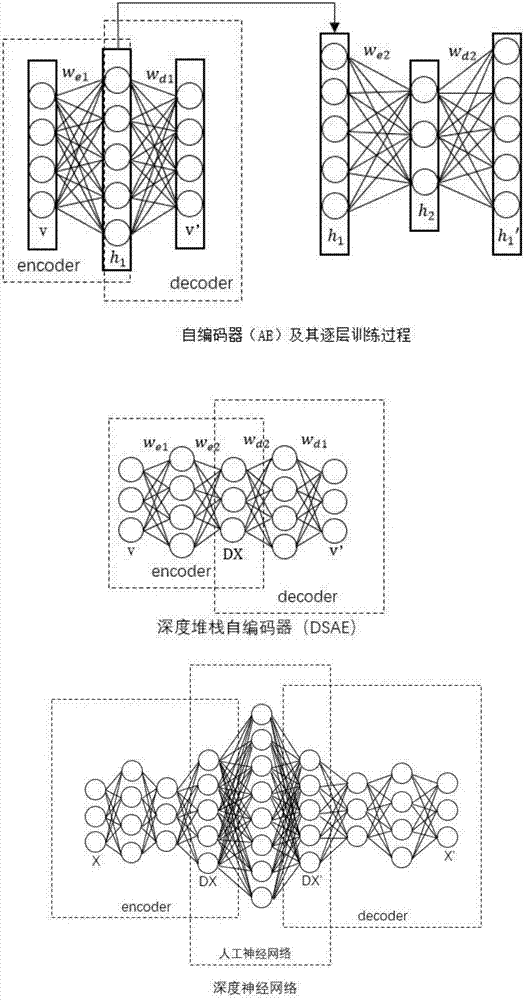

[0041] The technical scheme of the present invention is described in further detail below with reference to the accompanying drawings:

[0042] Those skilled in the art will understand that, unless otherwise defined, all terms (including technical and scientific terms) used herein have the same meaning as commonly understood by one of ordinary skill in the art to which this invention belongs. It should also be understood that terms such as those defined in commonly used dictionaries should be understood to have a meaning consistent with their meaning in the context of the prior art and, unless specifically defined herein, are not to be interpreted in an idealized or overly formal sense.

[0043] The AHOcoder feature parameter extraction model is a speech codec (speech analysis / synthesis system) developed by Daniel Erro at the AHOLAB signal processing laboratory of the University of the Basque Country. AHOcoder decomposes 16 kHz, 16-bit mono WAV speech into three parts: fundamental ...
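The input format named above (16 kHz, 16-bit, mono WAV) can be checked before a file is handed to the analysis stage. The following is a minimal sketch using only Python's standard `wave` module; it is not part of the patent or of AHOcoder itself, and the function names `is_ahocoder_compatible` and `write_silent_wav` are hypothetical helpers introduced for illustration.

```python
import os
import tempfile
import wave


def is_ahocoder_compatible(path, rate=16000, sample_width_bytes=2, channels=1):
    """Return True if the WAV file is 16 kHz, 16-bit (2 bytes/sample), mono.

    Hypothetical helper: it only inspects the WAV header and does not
    call AHOcoder.
    """
    with wave.open(path, "rb") as wav:
        return (
            wav.getframerate() == rate
            and wav.getsampwidth() == sample_width_bytes
            and wav.getnchannels() == channels
        )


def write_silent_wav(path, rate, sample_width_bytes, channels, n_frames=160):
    """Write a short all-zero PCM WAV file (illustration only)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(sample_width_bytes)
        wav.setframerate(rate)
        wav.writeframes(b"\x00" * (n_frames * sample_width_bytes * channels))


# Usage: one file in the stated format, one stereo 44.1 kHz file that is not.
with tempfile.TemporaryDirectory() as tmp_dir:
    good_path = os.path.join(tmp_dir, "good.wav")
    bad_path = os.path.join(tmp_dir, "bad.wav")
    write_silent_wav(good_path, rate=16000, sample_width_bytes=2, channels=1)
    write_silent_wav(bad_path, rate=44100, sample_width_bytes=2, channels=2)
    good_ok = is_ahocoder_compatible(good_path)
    bad_ok = is_ahocoder_compatible(bad_path)
```

A file that fails this check would need to be resampled or down-mixed before analysis; how that is done is outside the scope of this sketch.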

