GPU box PCIE extended interconnection topology device

A topology and high-speed interconnection technology, applied in the field of instruments and electrical digital data processing. It addresses oversized servers, bottlenecks in server processing performance, and the inability to adjust the interconnection topology between CPU and GPU, and it achieves low transmission delay and good scalability.


Examples

Embodiment 1

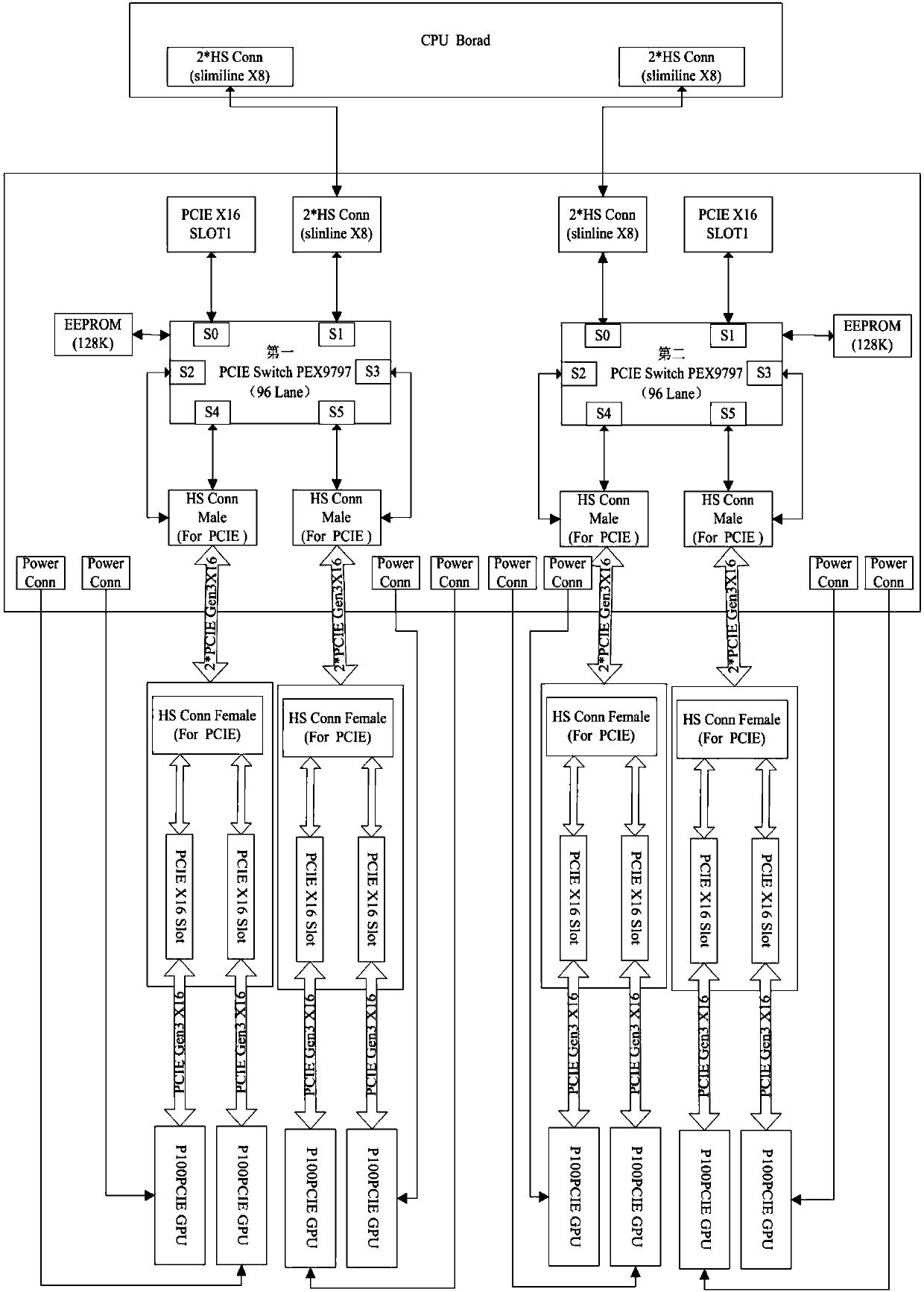

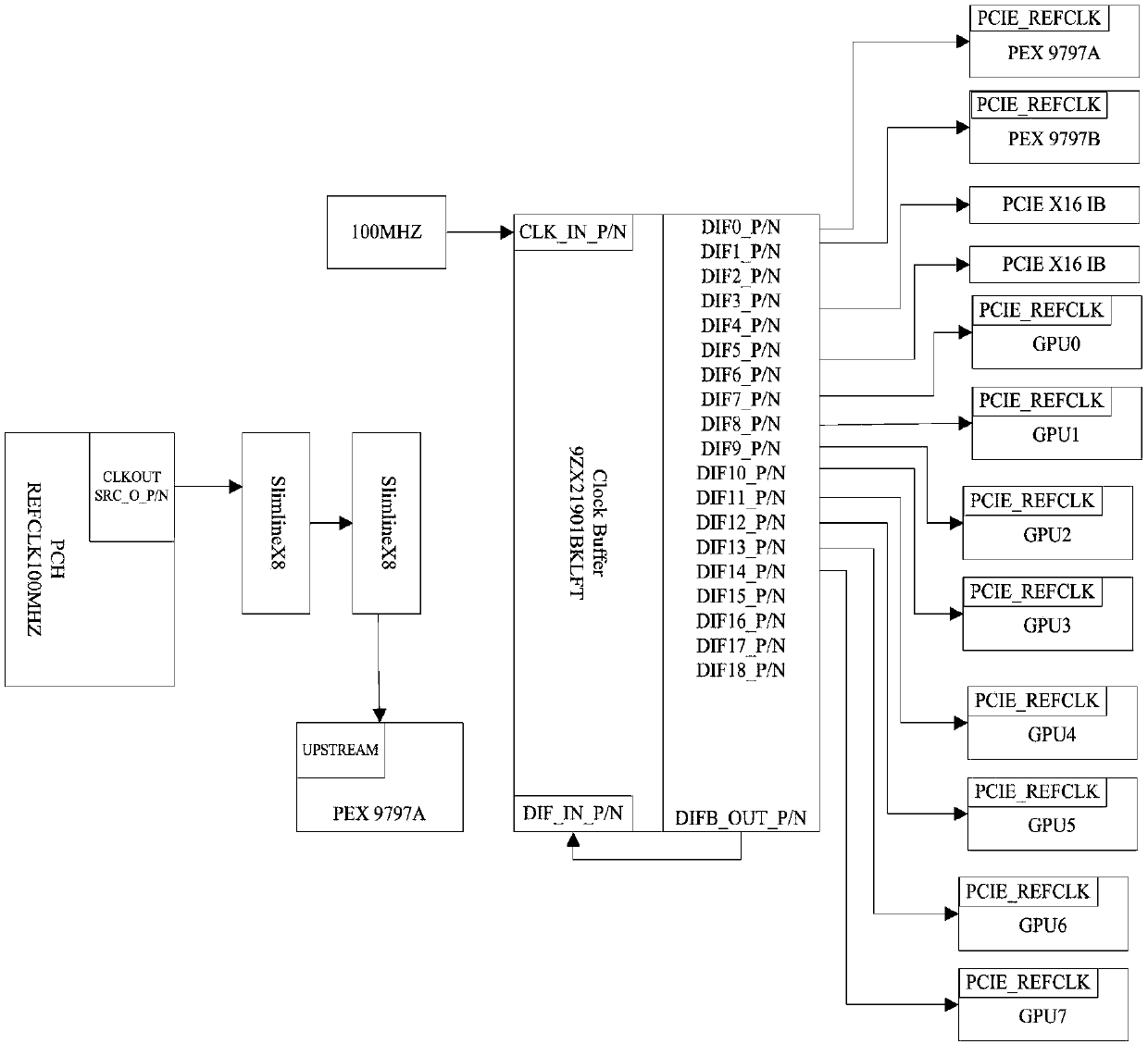

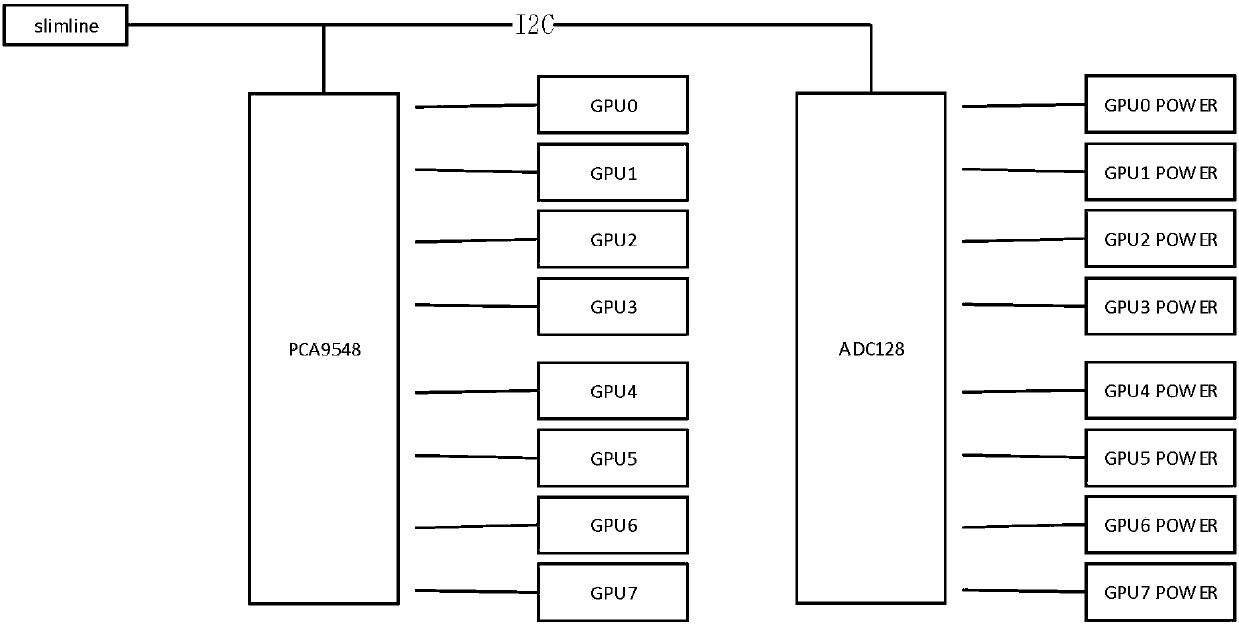

[0023] As shown in Figure 1, the device includes a GPU box, a CPU server, a first PCIE switch chip PEX9797, and a second PCIE switch chip PEX9797. The GPU box contains 8 groups of interconnected GPUs, and its uplink is configured with two sets of PCIE X16 ports that connect to the CPU server. Port S0 of the first PCIE switch chip PEX9797 is connected to the first high-speed interconnection cable card through PCIE slot1, and port S1 of the first PCIE switch chip PEX9797 is connected to the CPU server through a slimline interface. Ports S2 and S4 are connected to a PCIE connection terminal, which is connected to another PCIE connection terminal through two PCIE Gen3 X16 buses. That second PCIE connection terminal is connected to two PCIE slot chips through two PCIE X16 buses, and the two PCIE slot chips are connected to GPU0 and GPU1, respectively, through PCIE X16 buses. Similarly, ports S3 and S5 of the first PCIE switch chip PEX9797 are co...
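The wiring described in paragraph [0023] can be sketched as a small undirected graph, which makes it easy to trace the hops between the CPU server and a GPU. This is only an illustrative model under stated assumptions: the node names (`switch1`, `terminal_a`, `slot_chip0`, and so on) are hypothetical labels, the two parallel buses between the connection terminals are collapsed into one edge, and only the links listed before the paragraph is cut off are included.

```python
from collections import deque

# Links stated in paragraph [0023]. Node names are hypothetical labels,
# and the third field is just a human-readable note about the link.
LINKS = [
    ("cpu_server", "switch1", "port S1, slimline interface"),
    ("switch1", "cable_card1", "port S0, via PCIE slot1"),
    ("switch1", "terminal_a", "ports S2 and S4"),
    ("terminal_a", "terminal_b", "two PCIE Gen3 X16 buses (collapsed)"),
    ("terminal_b", "slot_chip0", "PCIE X16"),
    ("terminal_b", "slot_chip1", "PCIE X16"),
    ("slot_chip0", "GPU0", "PCIE X16"),
    ("slot_chip1", "GPU1", "PCIE X16"),
    # Links for ports S3/S5 and the second switch chip are not modeled
    # because the source text is truncated at that point.
]


def build_graph(links):
    """Build an undirected adjacency list from (a, b, note) triples."""
    graph = {}
    for a, b, _note in links:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph


def shortest_path(graph, src, dst):
    """Breadth-first search; returns the list of nodes or None."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


GRAPH = build_graph(LINKS)
print(" -> ".join(shortest_path(GRAPH, "cpu_server", "GPU0")))
```

Because the connection terminals are modeled as ordinary nodes, the graph is suitable for tracing CPU-to-GPU wiring but should not be read as a statement about how GPU-to-GPU traffic is routed.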

Embodiment 2

[0029] Embodiment 2 differs from Embodiment 1 in that, when the uplink of the GPU box is configured with one set of PCIE X16 ports to connect to the CPU server, port S0 of the first PCIE switch chip and port S1 of the second PCIE switch chip are connected to the slimline interface of the CPU server through a PCIE-to-slimline adapter card.
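The difference between the two embodiments can be summarized as a lookup keyed by the number of uplink PCIE X16 port sets. The following is a minimal sketch, not the patent's implementation: the table `UPLINK_WIRING`, the function `uplink_wiring`, and the labels `switch1` and `switch2` are hypothetical, and the entry for Embodiment 1 holds only the first switch chip's ports because the rest of that description is truncated in the source.

```python
SLIMLINE = "CPU server slimline interface"
ADAPTER = "PCIE-to-slimline adapter card"

# Keyed by the number of PCIE X16 uplink port sets on the GPU box.
# Each entry maps (switch chip, port) to (target, connection medium).
UPLINK_WIRING = {
    # Embodiment 1: two sets of uplink ports. Only the first switch chip's
    # ports are listed; the second chip's wiring is truncated in the source.
    2: {
        ("switch1", "S0"): ("first high-speed interconnection cable card", "PCIE slot1"),
        ("switch1", "S1"): ("CPU server", "slimline interface"),
    },
    # Embodiment 2: one set of uplink ports.
    1: {
        ("switch1", "S0"): (SLIMLINE, ADAPTER),
        ("switch2", "S1"): (SLIMLINE, ADAPTER),
    },
}


def uplink_wiring(uplink_port_sets):
    """Return the stated uplink wiring for the given number of port sets."""
    try:
        return UPLINK_WIRING[uplink_port_sets]
    except KeyError:
        raise ValueError(
            f"no embodiment describes {uplink_port_sets} uplink port set(s)"
        ) from None


for (switch, port), (target, medium) in uplink_wiring(1).items():
    print(f"{switch} {port} -> {target} via {medium}")
```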

