66 results about "Interconnection architecture" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

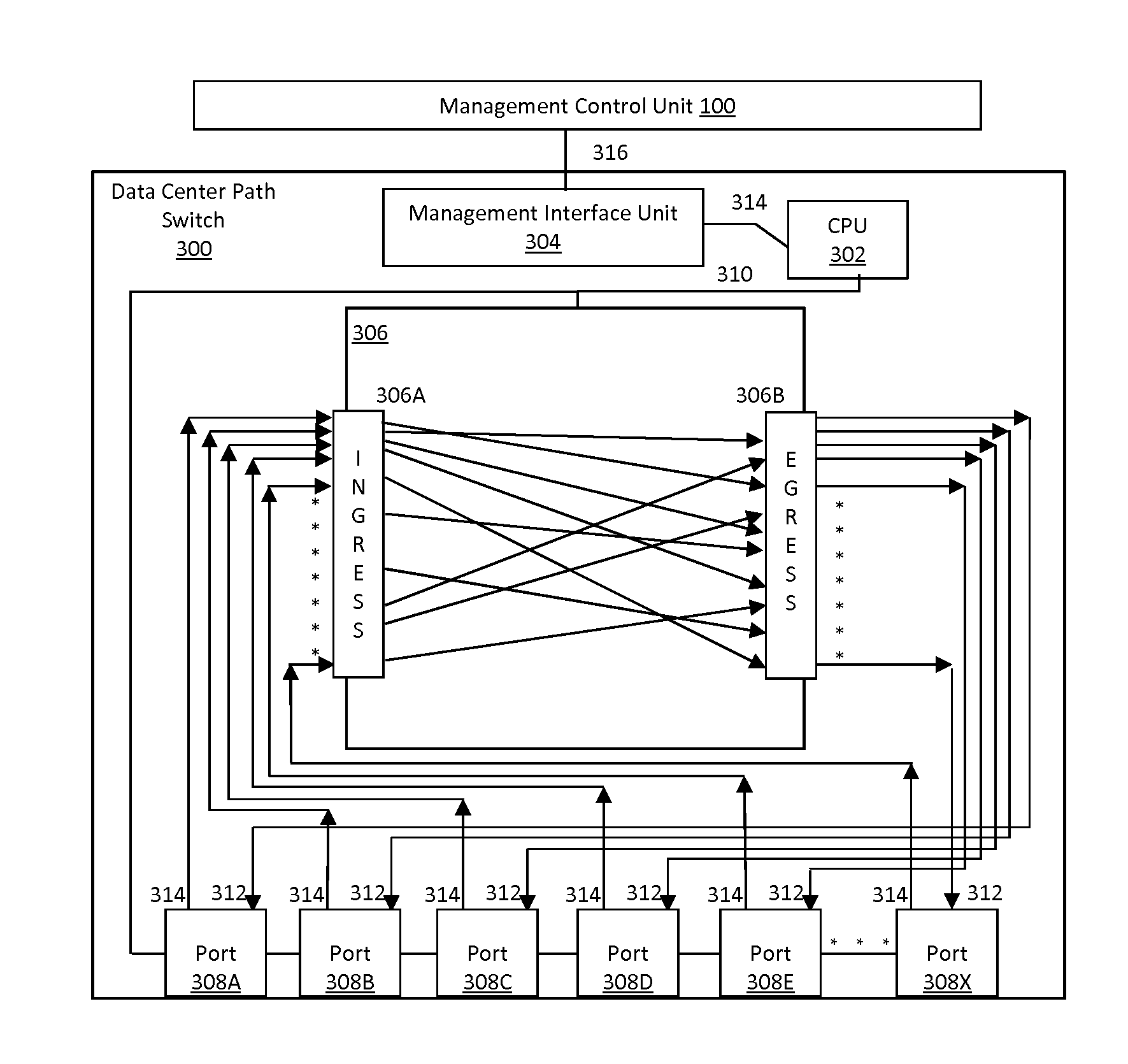

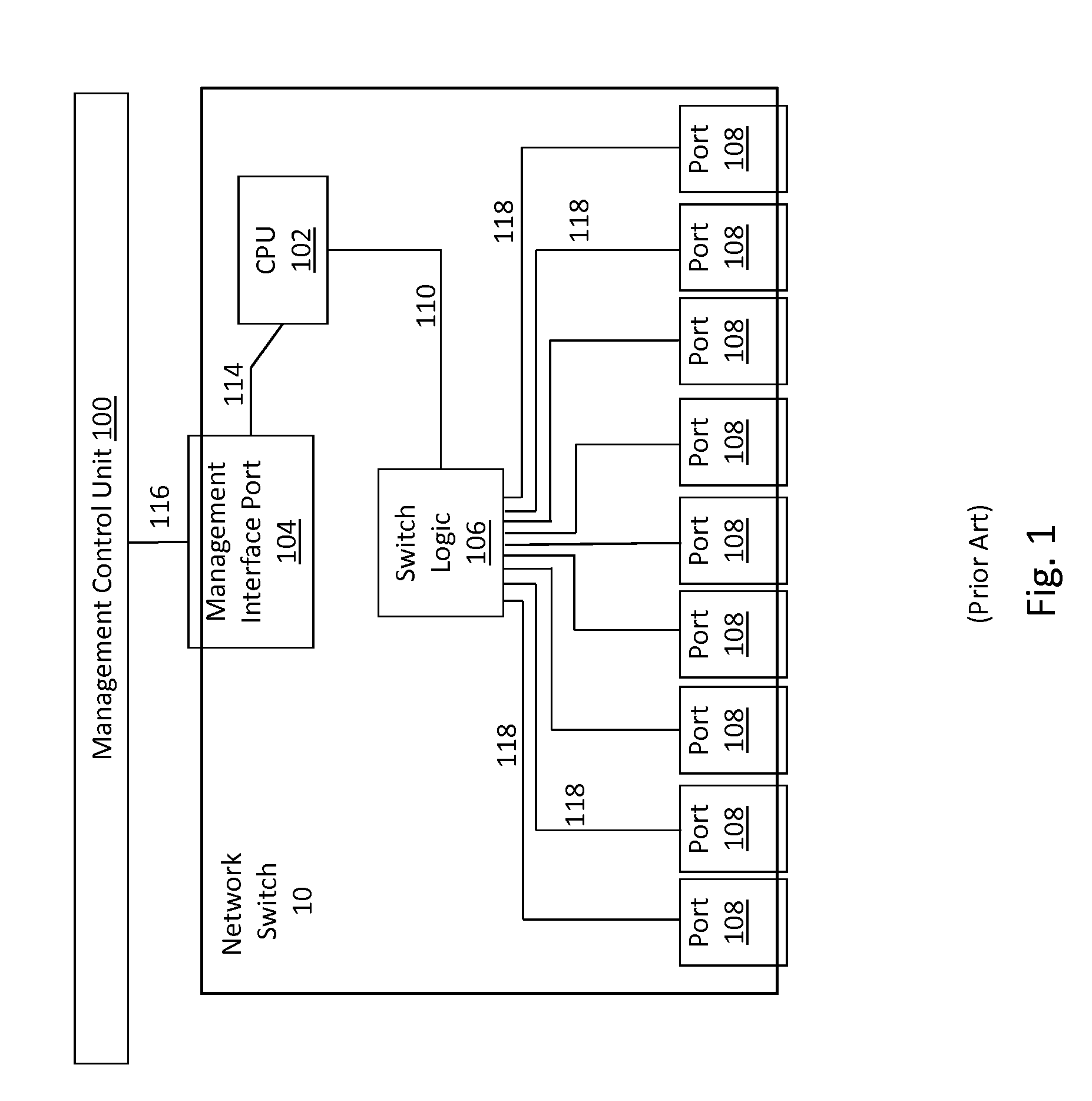

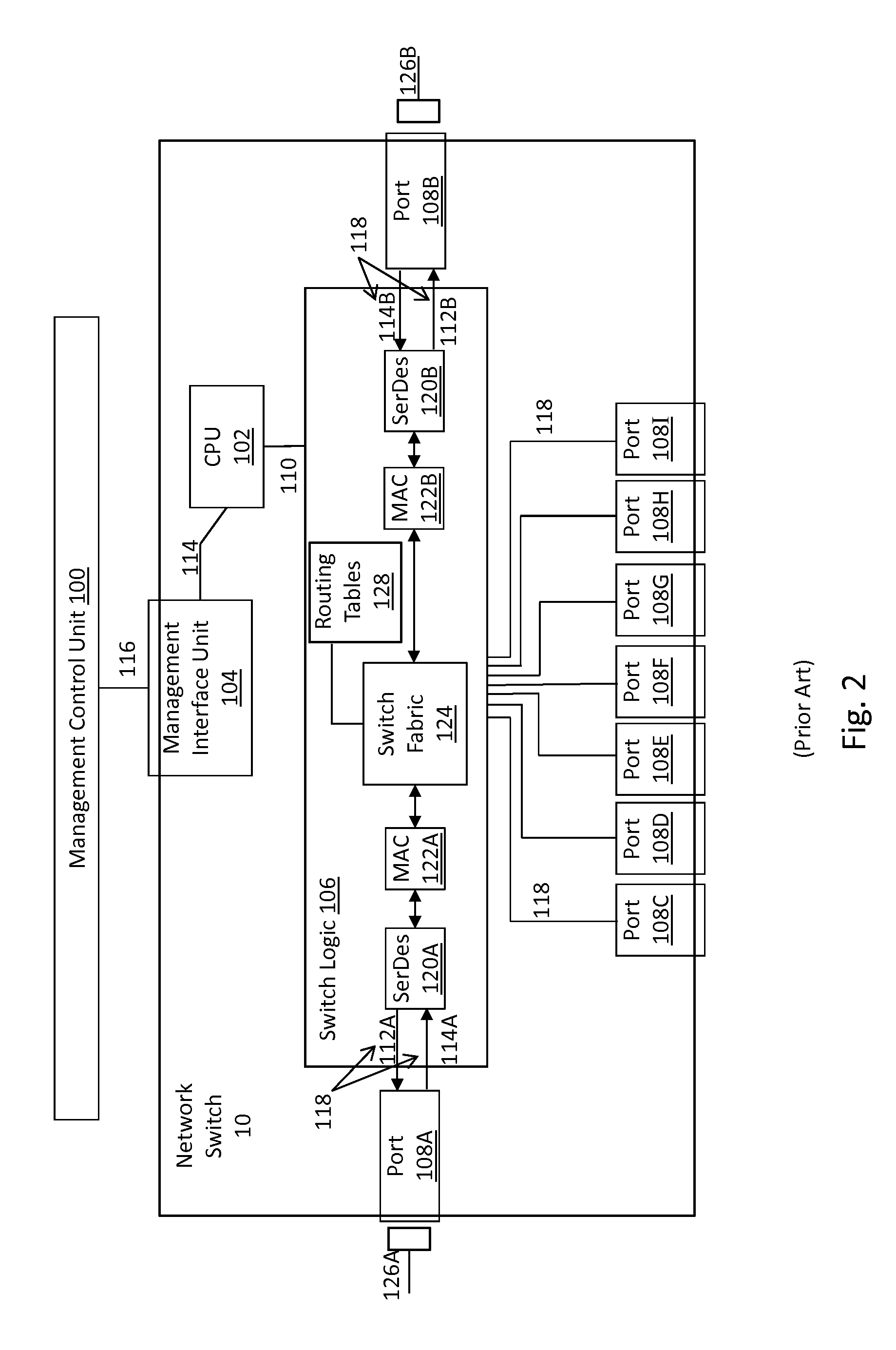

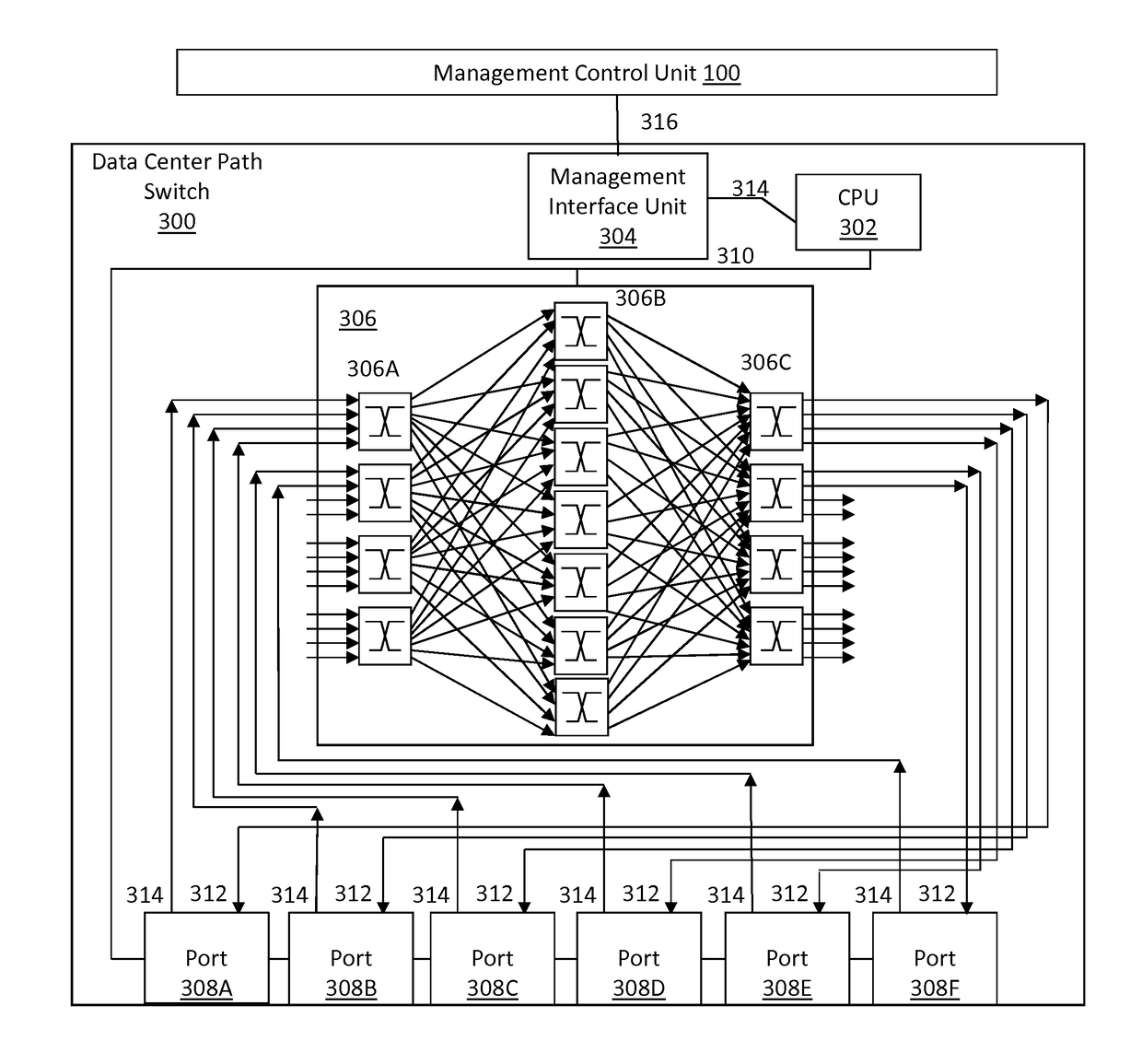

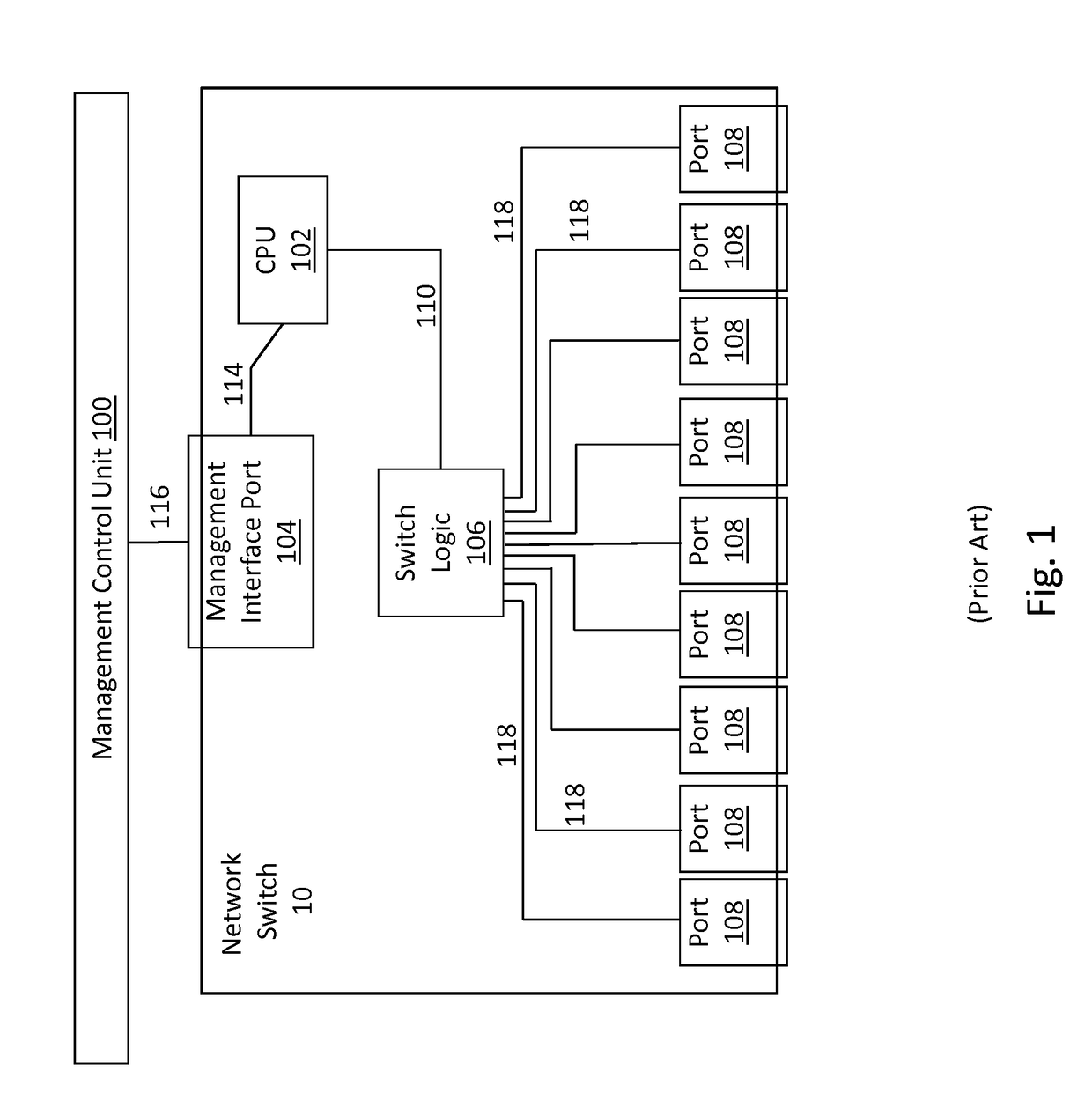

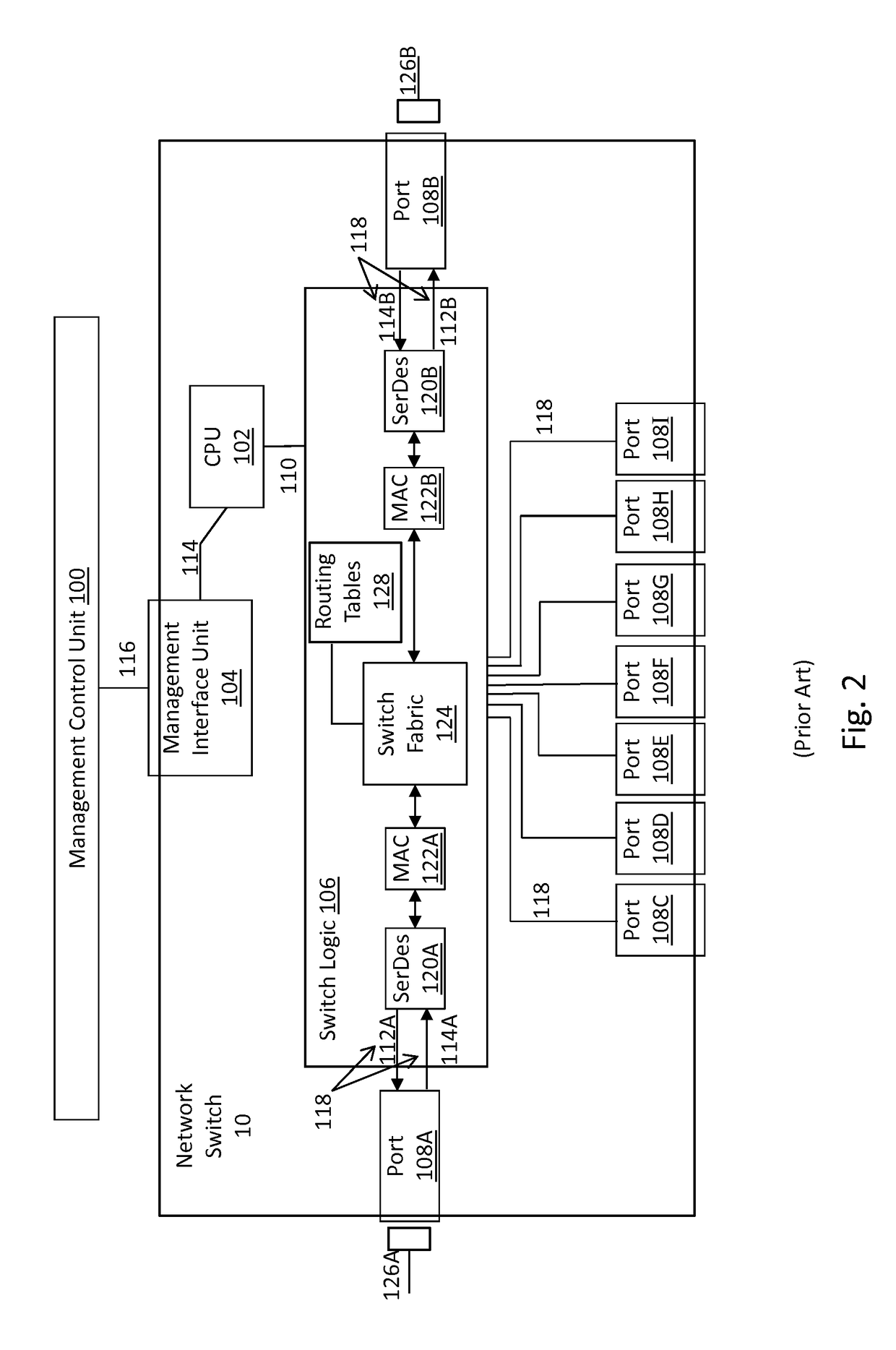

Data center path switch with improved path interconnection architecture

Active | US20160007102A1 | Easy to control | Simplifying interconnection | Multiplex system selection arrangements | Optical multiplex | Data center | Switching signal

A data center path switch architecture permits path switching of the signal path of incoming signals to one or more output paths in real time without the need for manual intervention, and without the delays associated with current data center network switches. In this architecture, a switching core is capable of switching signals directly from the ingress of the switching core to alternate destination ports in real time, under either software or hardware control.

Owner: FIBER MOUNTAIN INC

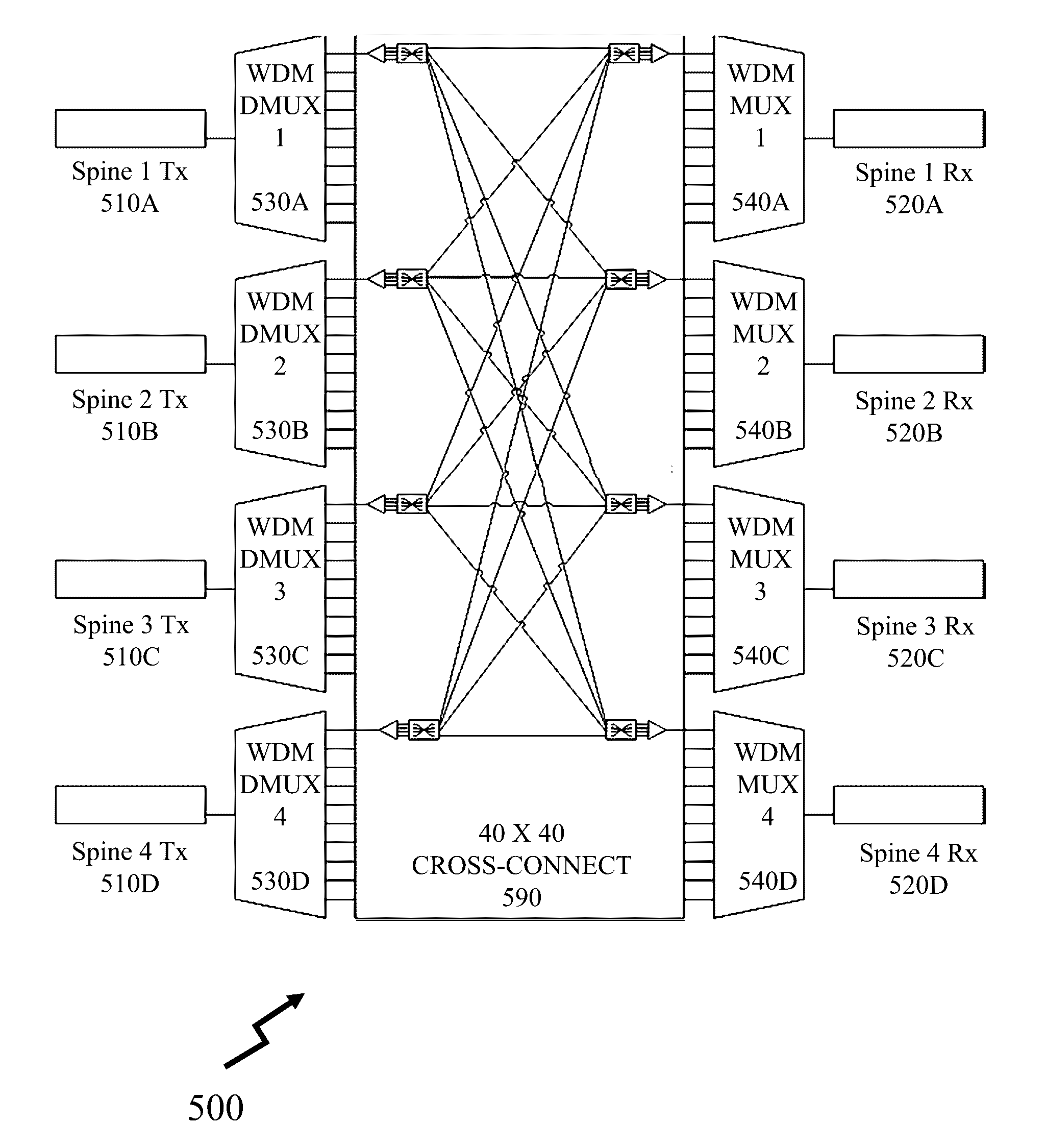

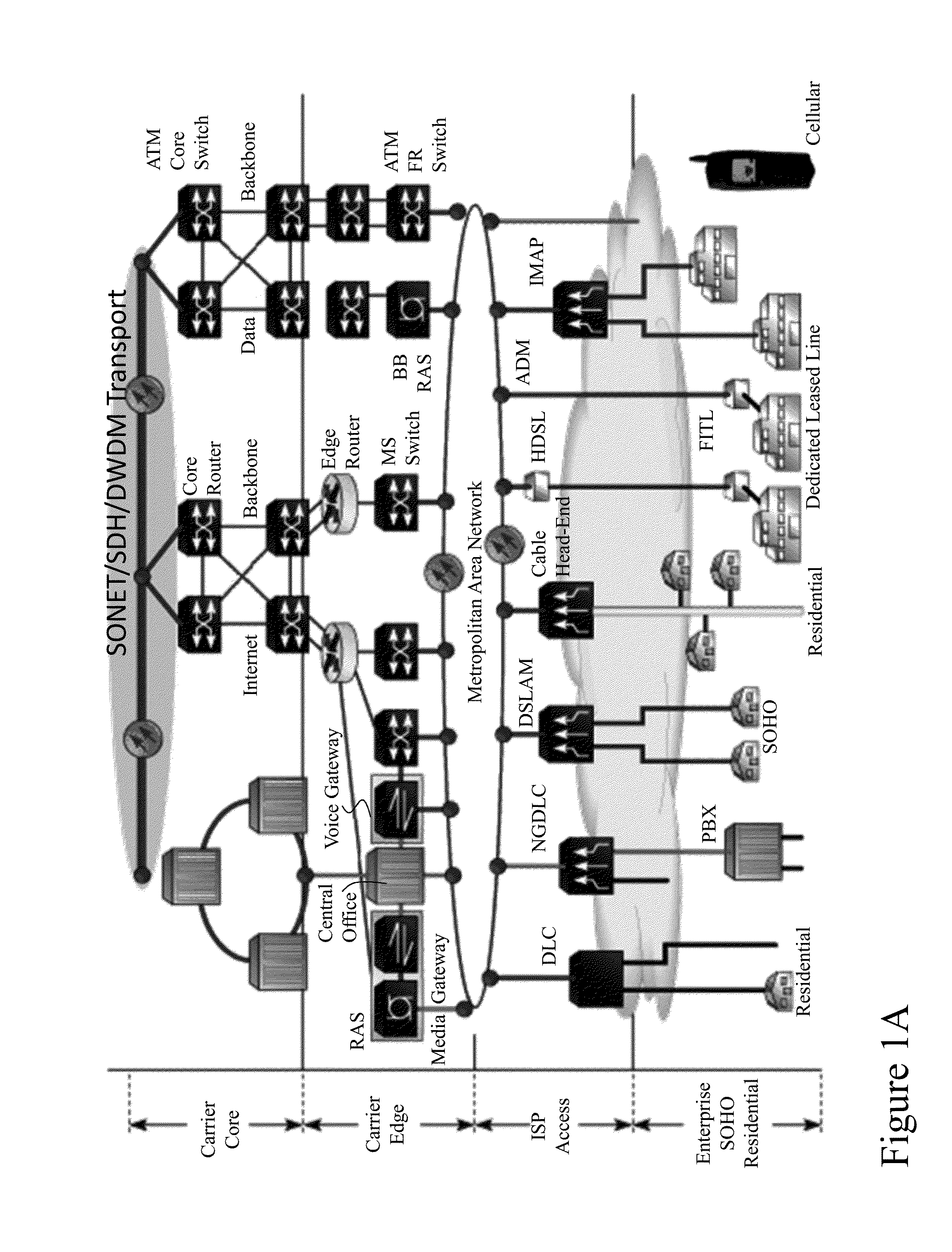

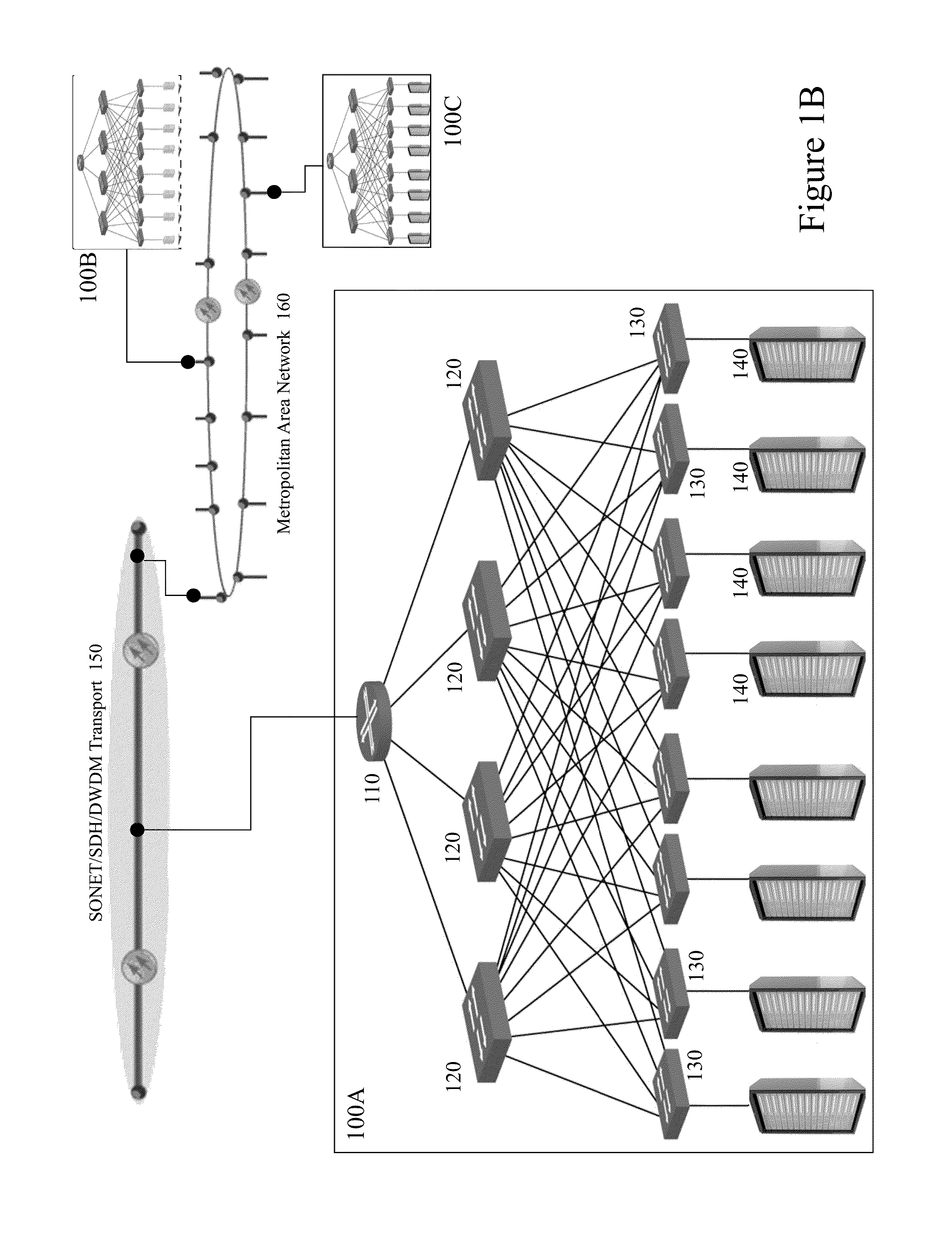

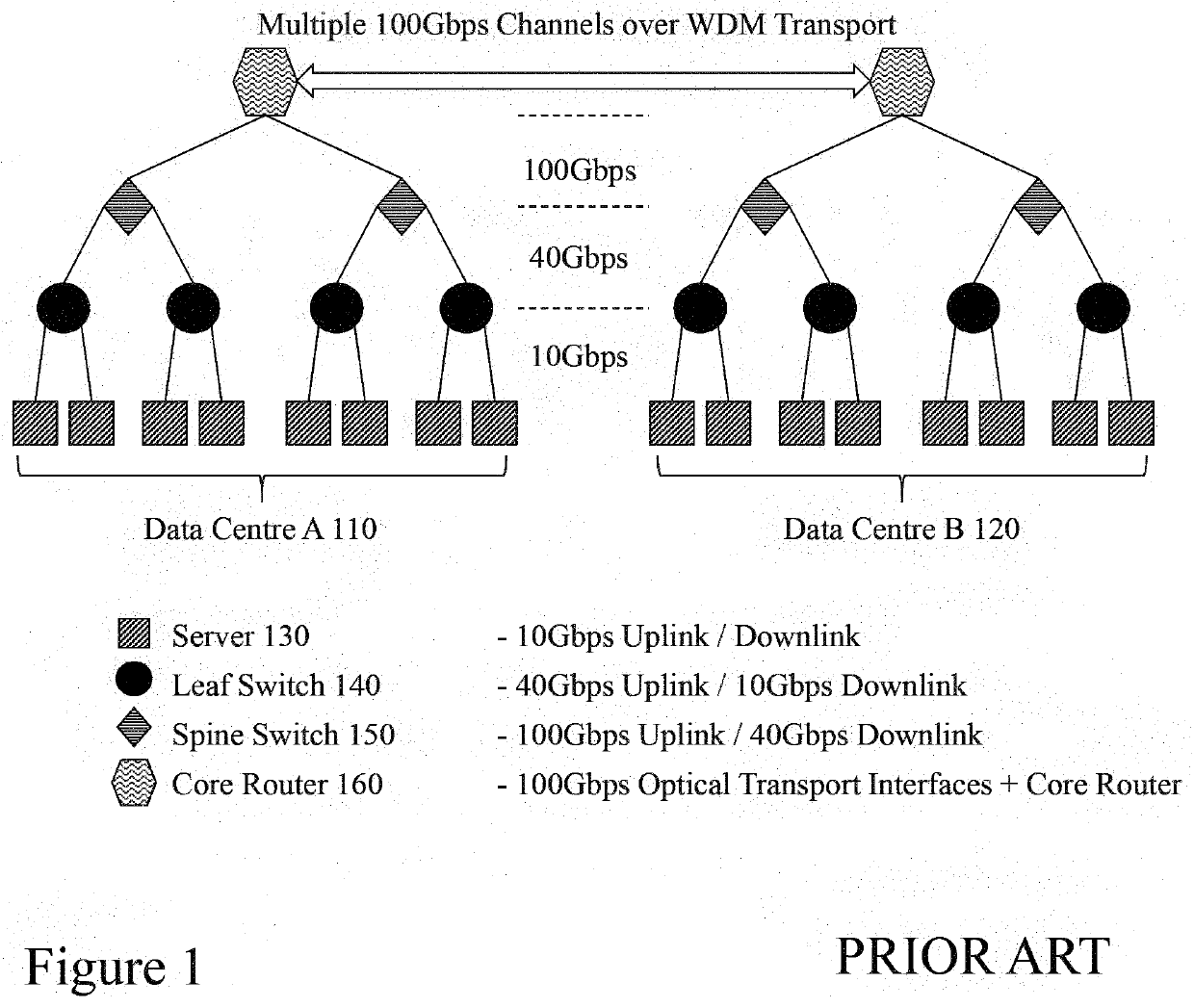

Methods and systems relating to optical networks

Active | US20170019168A1 | Reduce restrictions | Multiplex system selection arrangements | Wavelength-division multiplex systems | Fiber | Data center

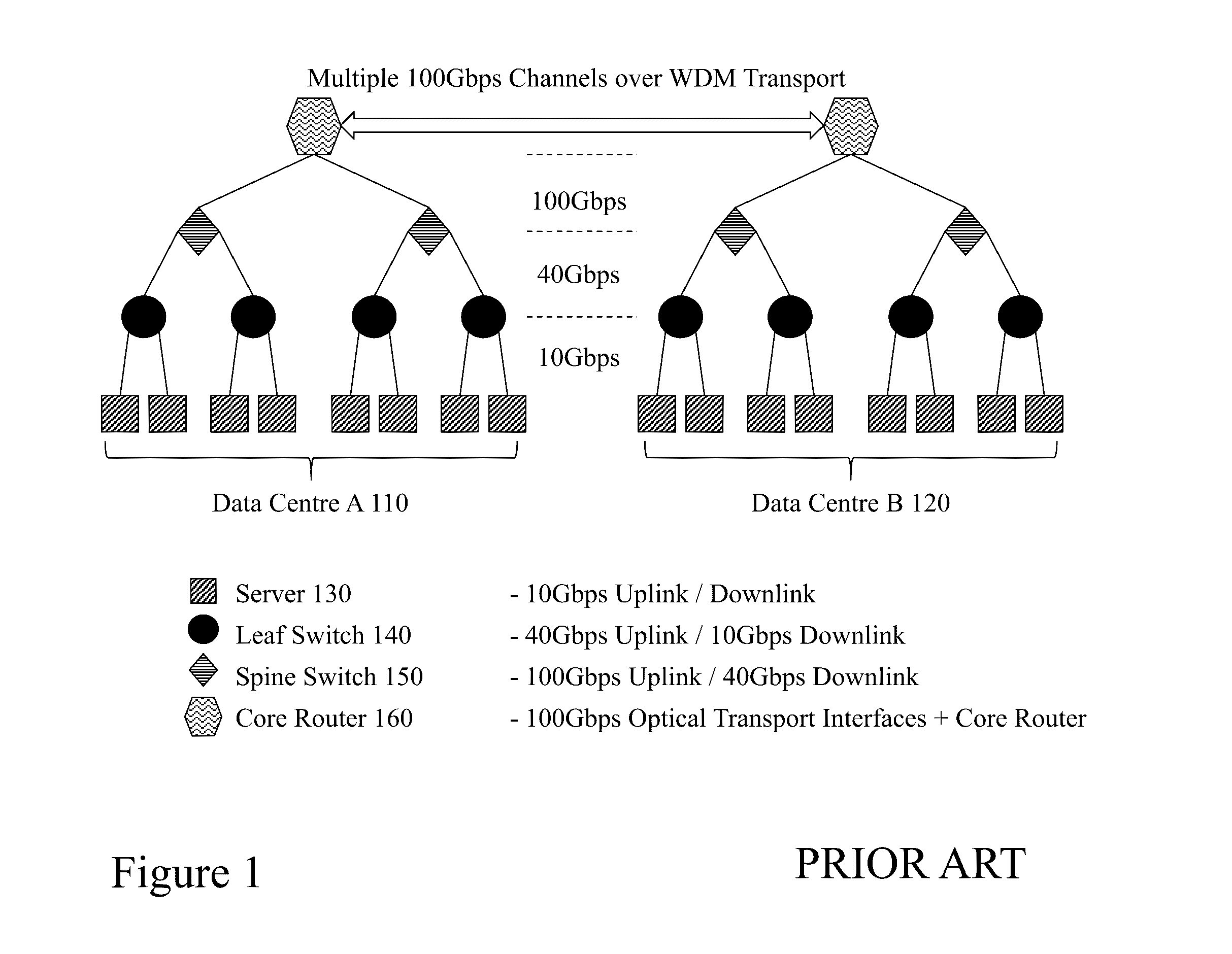

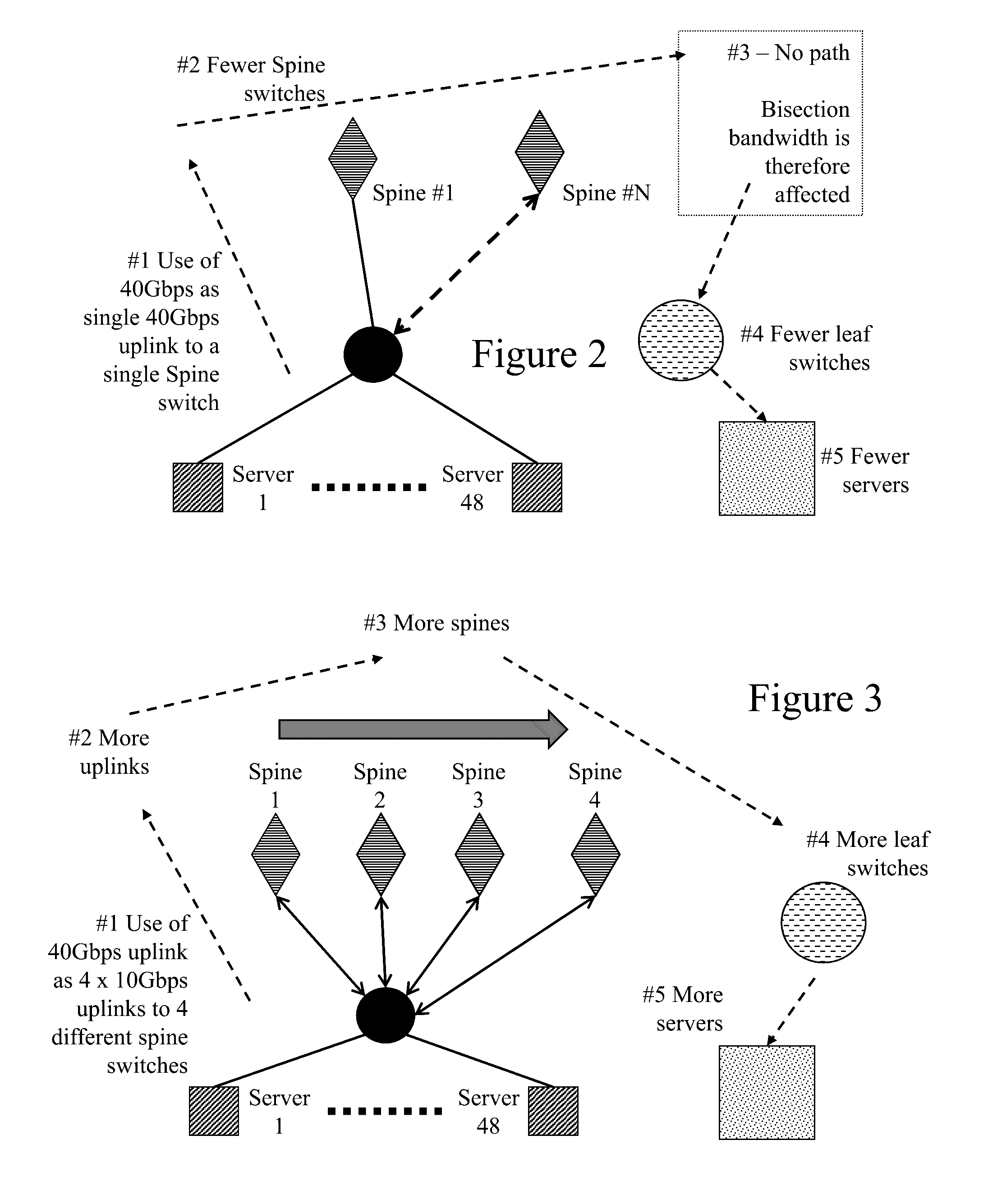

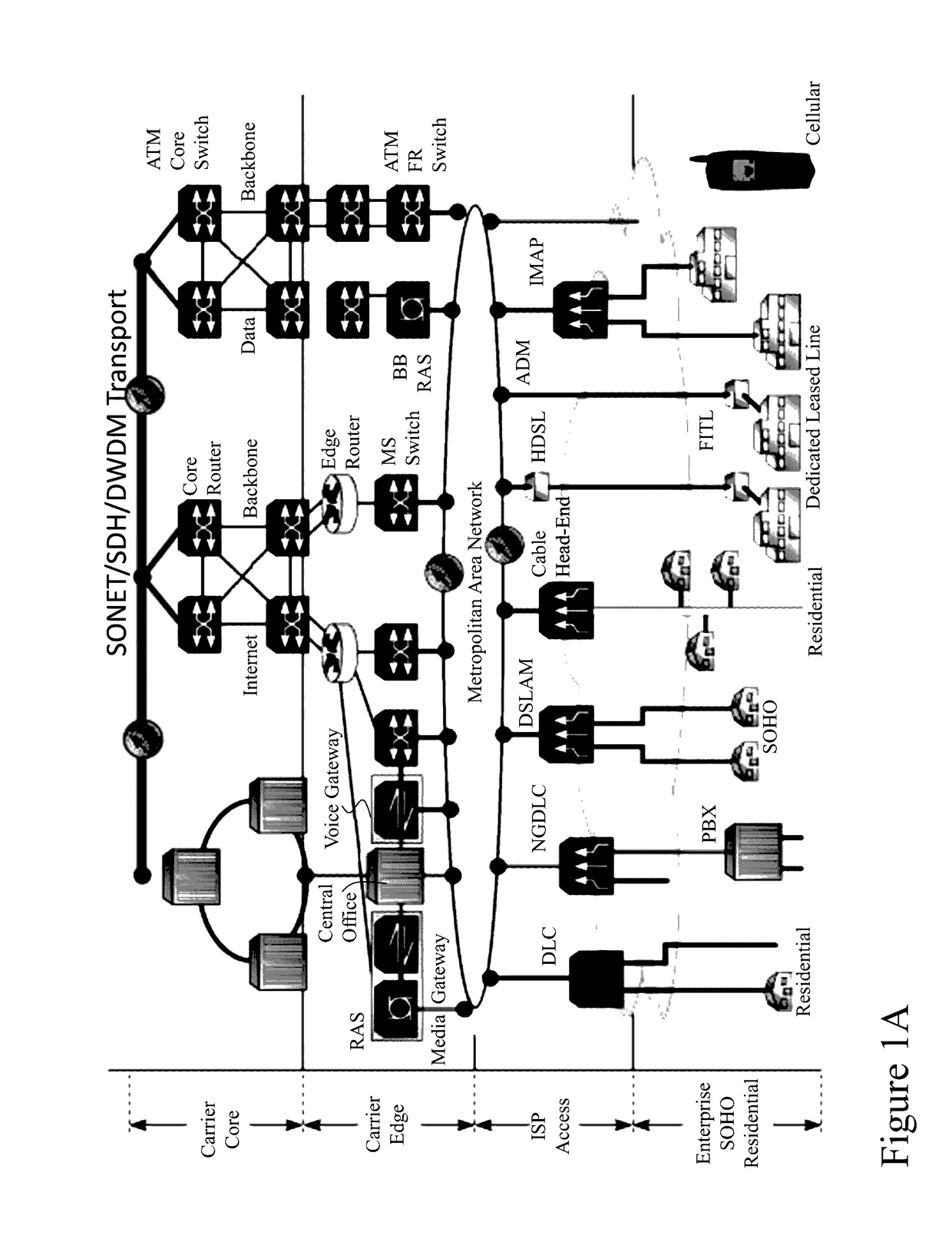

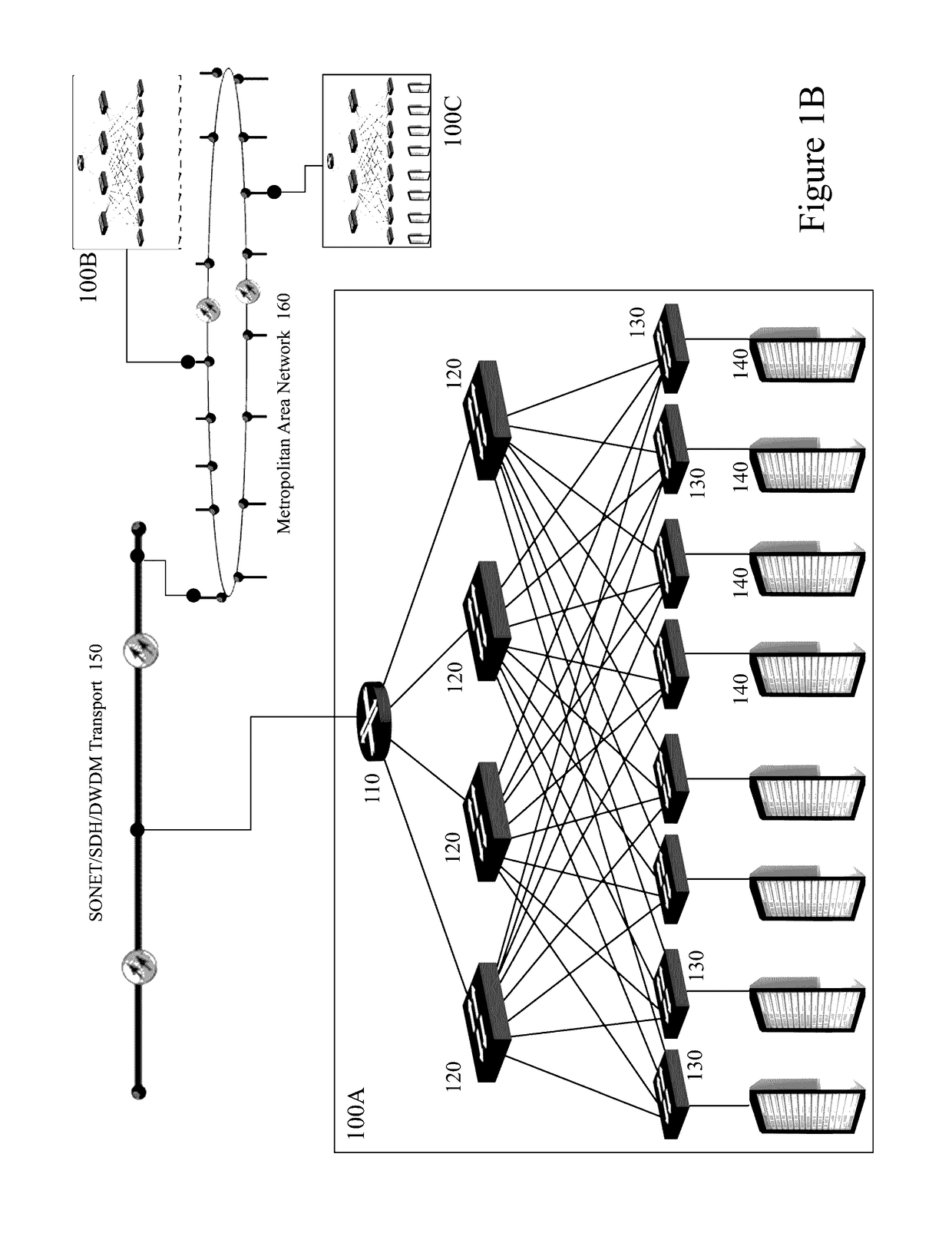

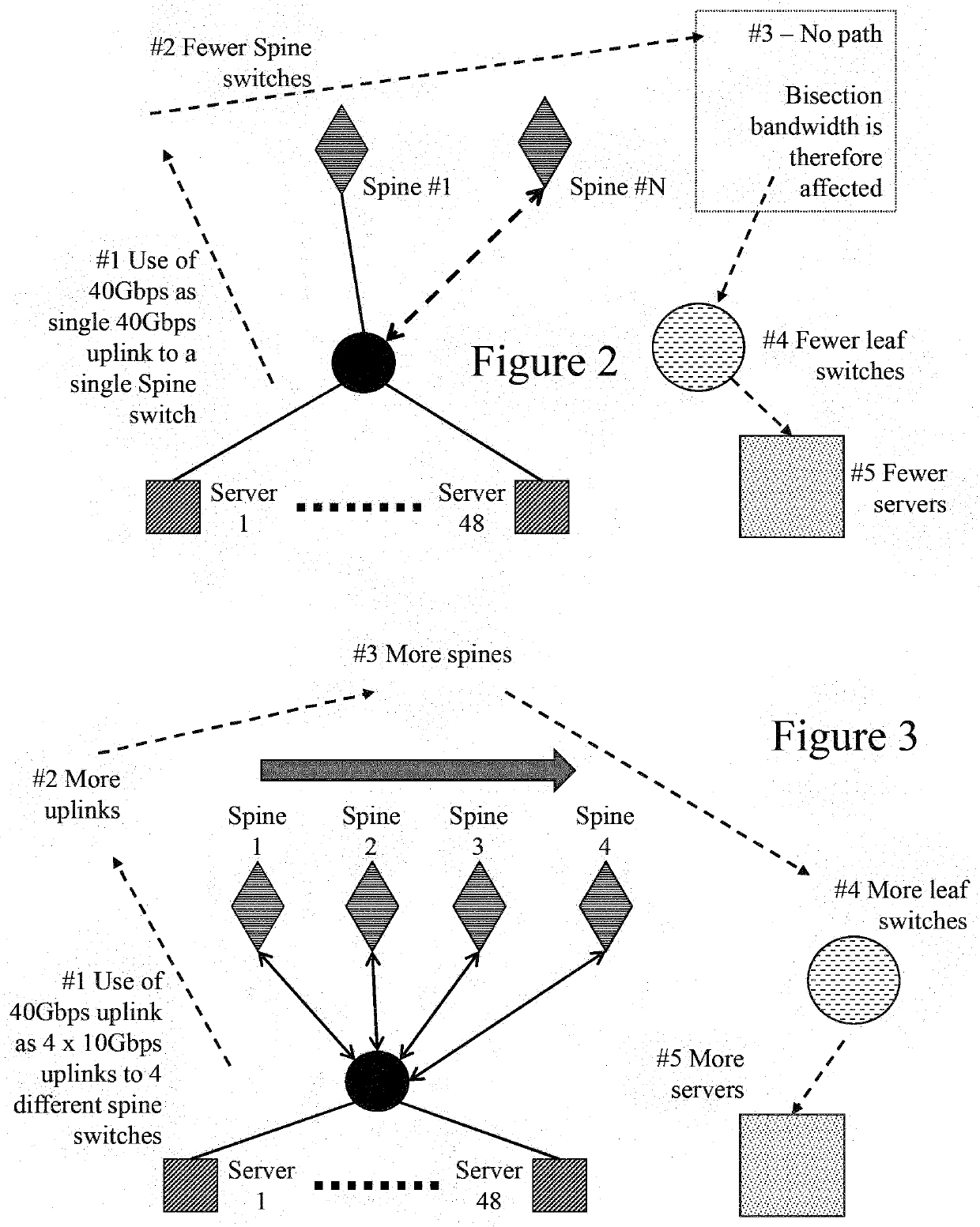

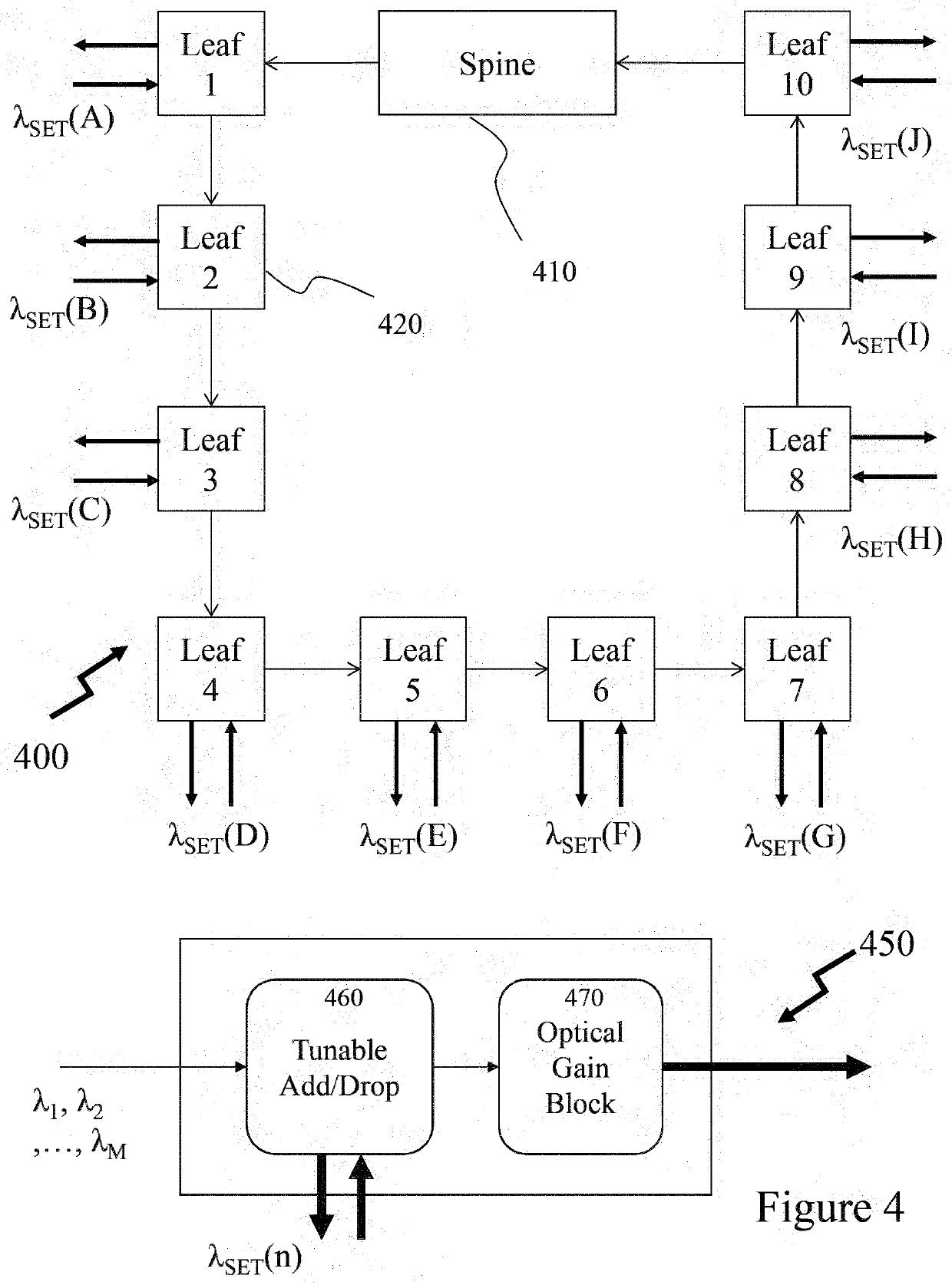

Data center interconnections, which encompass WSCs as well as traditional data centers, have become both a bottleneck and a cost / power issue for cloud computing providers, cloud service providers and cloud users generally. Fiber optic technologies already play critical roles in data center operations and will increasingly do so in the future. The goal is to move data as fast as possible with the lowest latency, the lowest cost and the smallest space consumption on the server blade and throughout the network. Accordingly, it would be beneficial for new fiber optic interconnection architectures to address the traditional hierarchical time-division multiplexed (TDM) routing and interconnection and to provide reduced latency, increased flexibility, lower cost and lower power consumption, with interconnections exploiting N×M×D Gbps photonic interconnects in which N channels are provided, each carrying M wavelength division signals at D Gbps.

Owner: AEPONYX

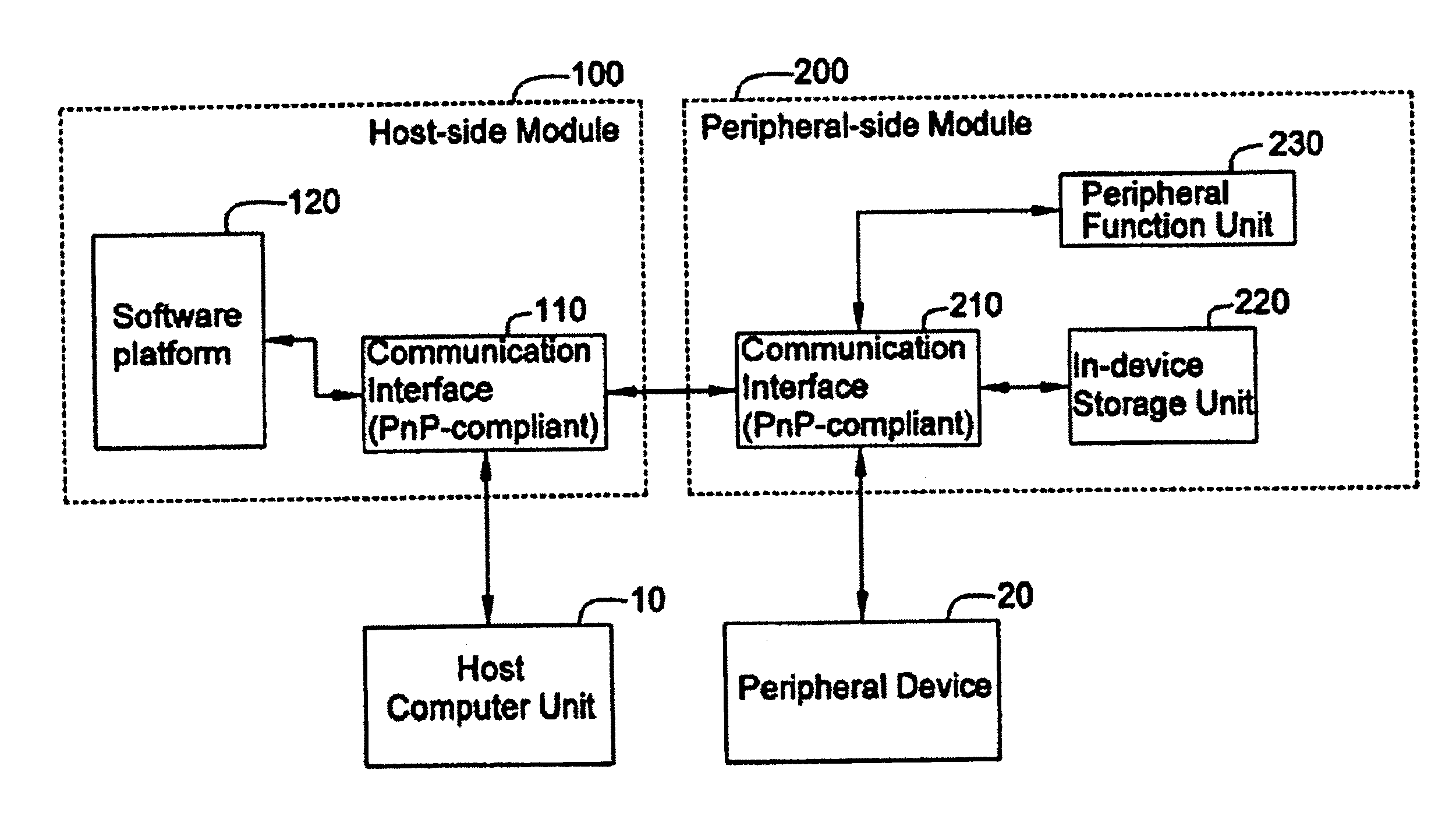

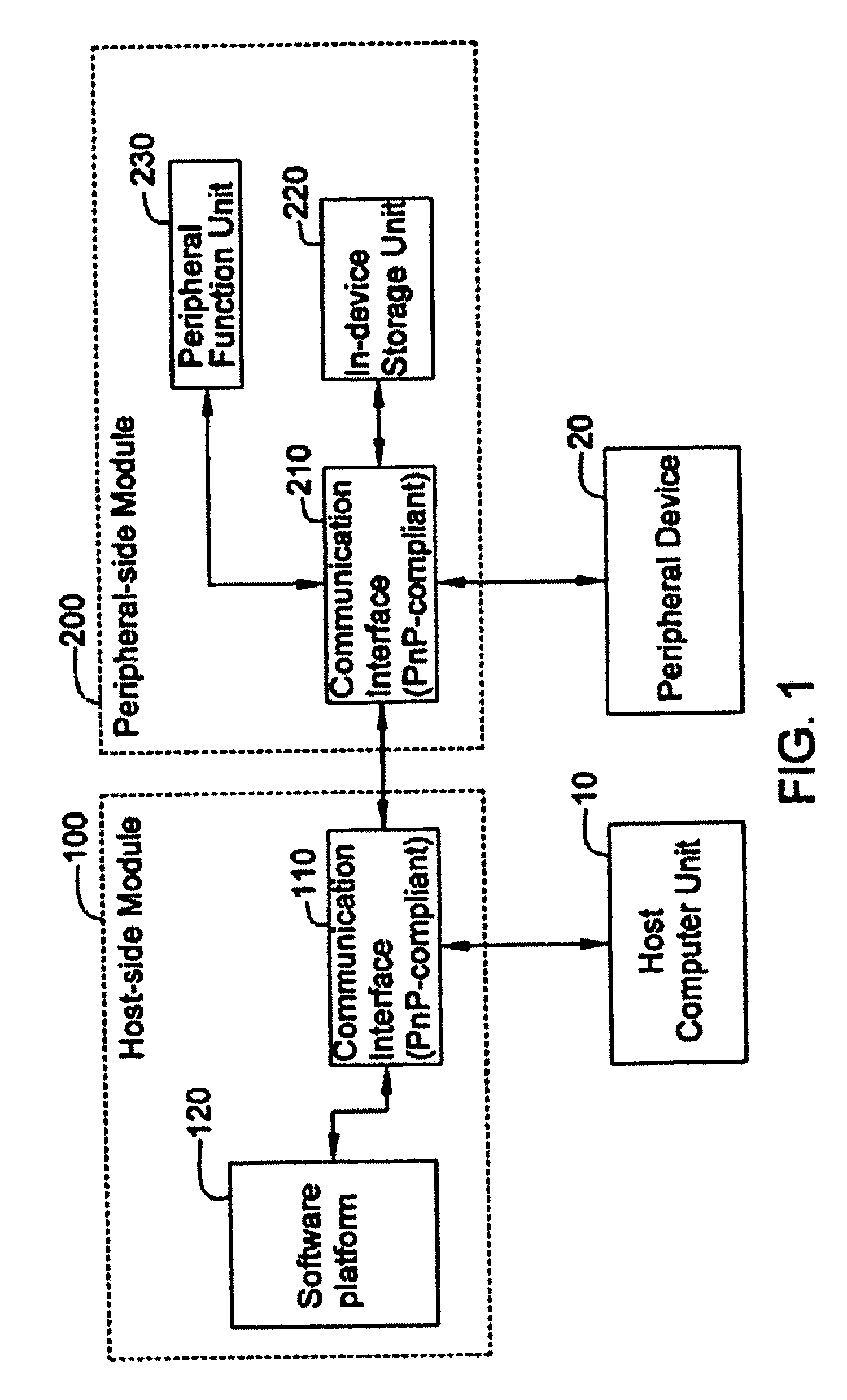

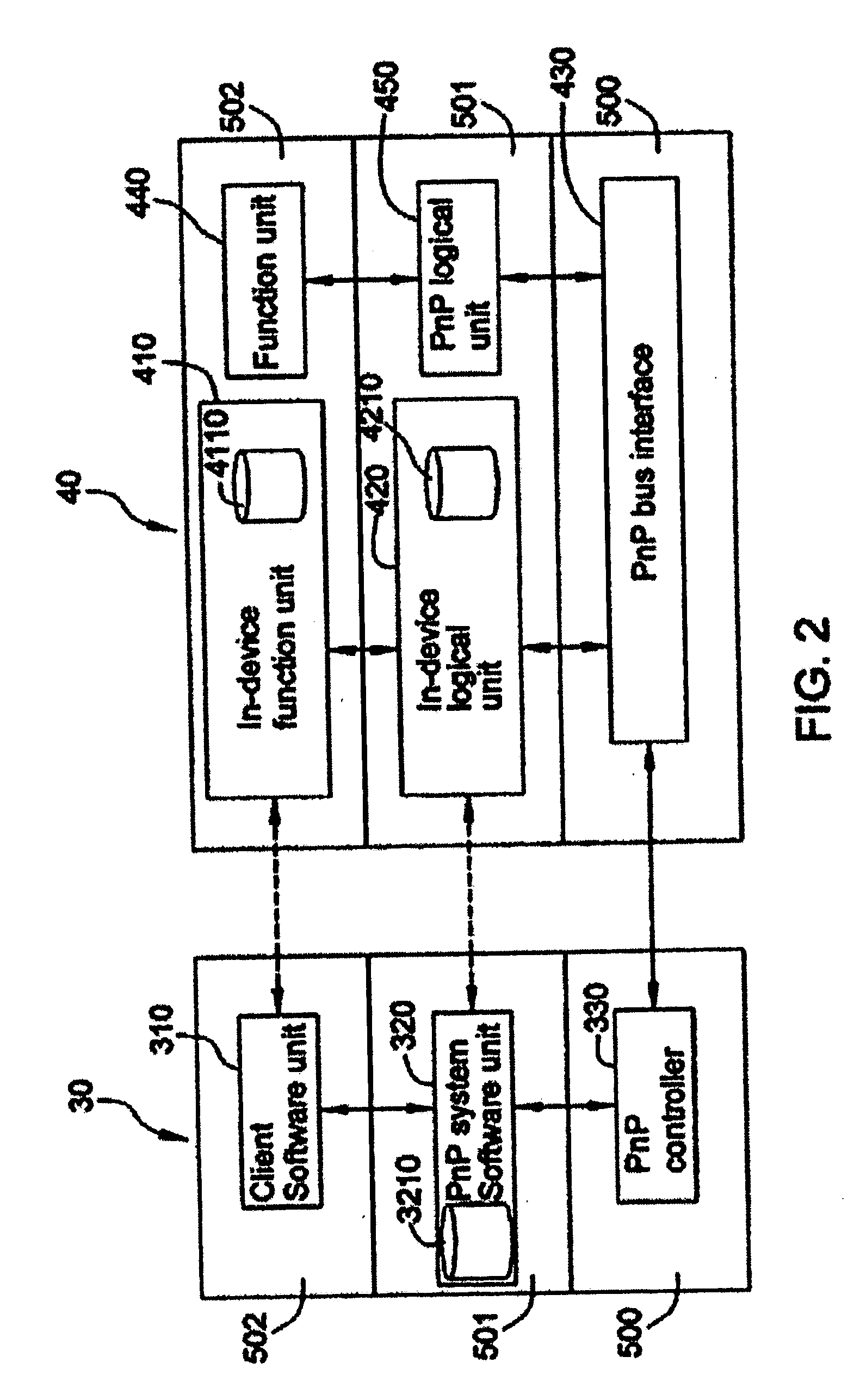

Plug-and-play interconnection architecture and method with in-device storage module in peripheral device

Inactive | US6898653B2 | Enhance performance and function | Enhanced PnP (ePnP) | Multiple digital computer combinations | Component plug-in assemblages | Storage cell | Layered structure

A plug-and-play (PnP) interconnection architecture and method with an in-device storage module in a peripheral device are proposed for interconnecting a peripheral device with a host computer unit. The proposed architecture is characterized by the integration of an in-device storage unit with the internal functional modules of the peripheral device for storing device-specific data and software, such as the dedicated device driver of the peripheral device. An enhanced plug-and-play (ePnP) layered structure is proposed based on the in-device storage architecture. The ePnP provides a mechanism for customizing the functions of PnP peripheral devices. One application of the ePnP is a mechanism that brings up the device driver automatically when the peripheral device is connected to the host computer unit. This auto-installation mechanism provides a truly plug-and-play capability to the user.

Owner: SCIENTRONIC

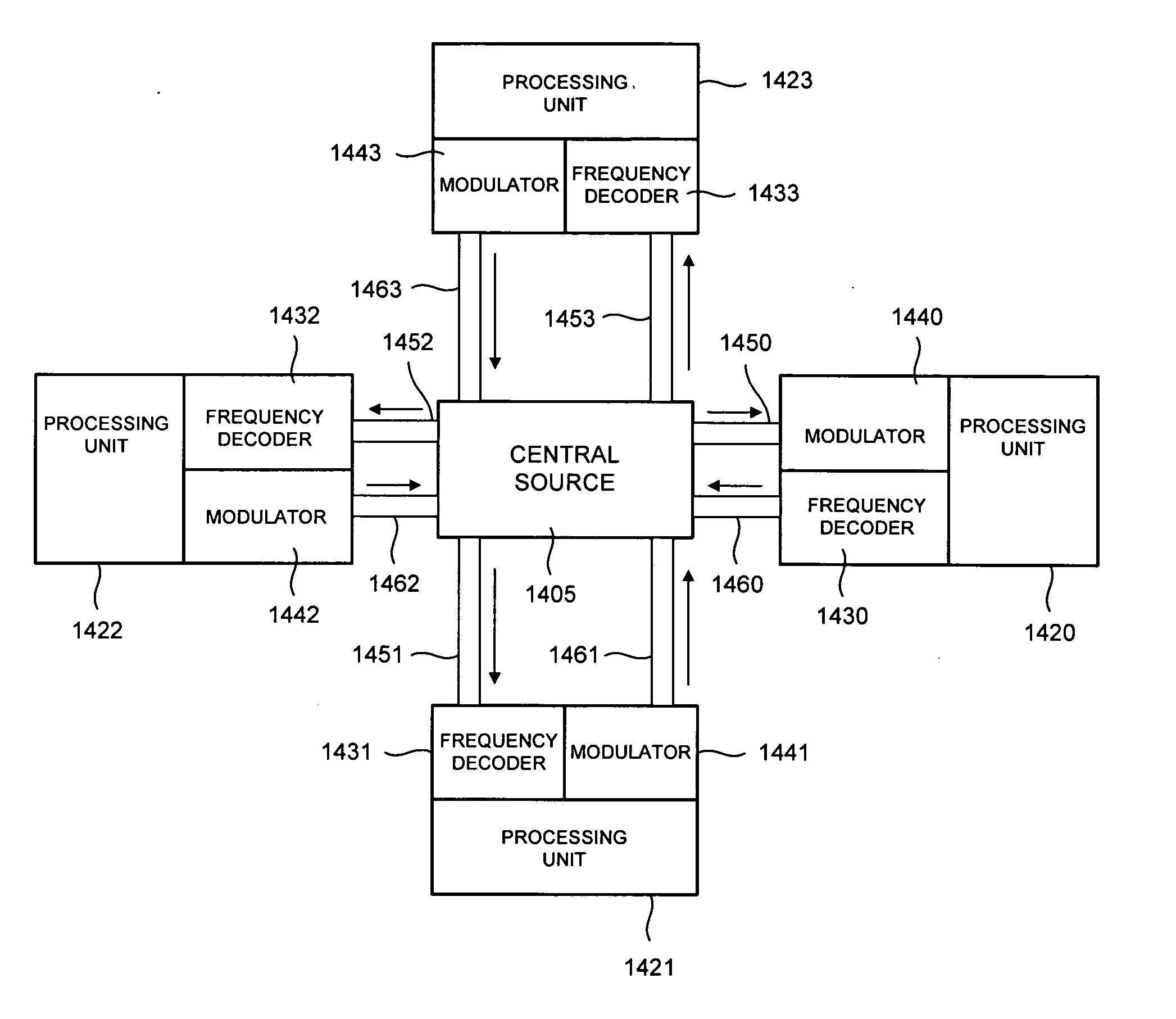

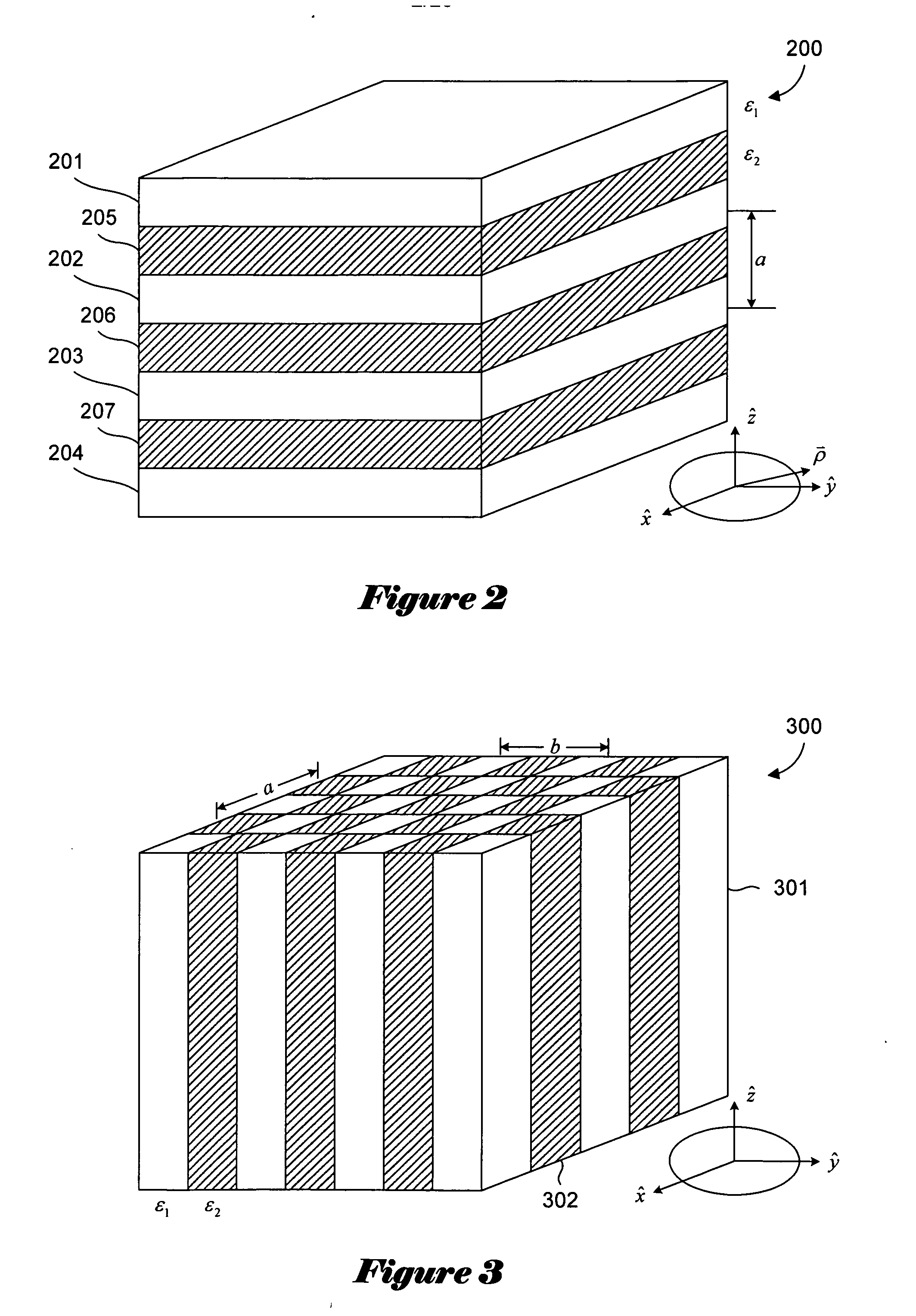

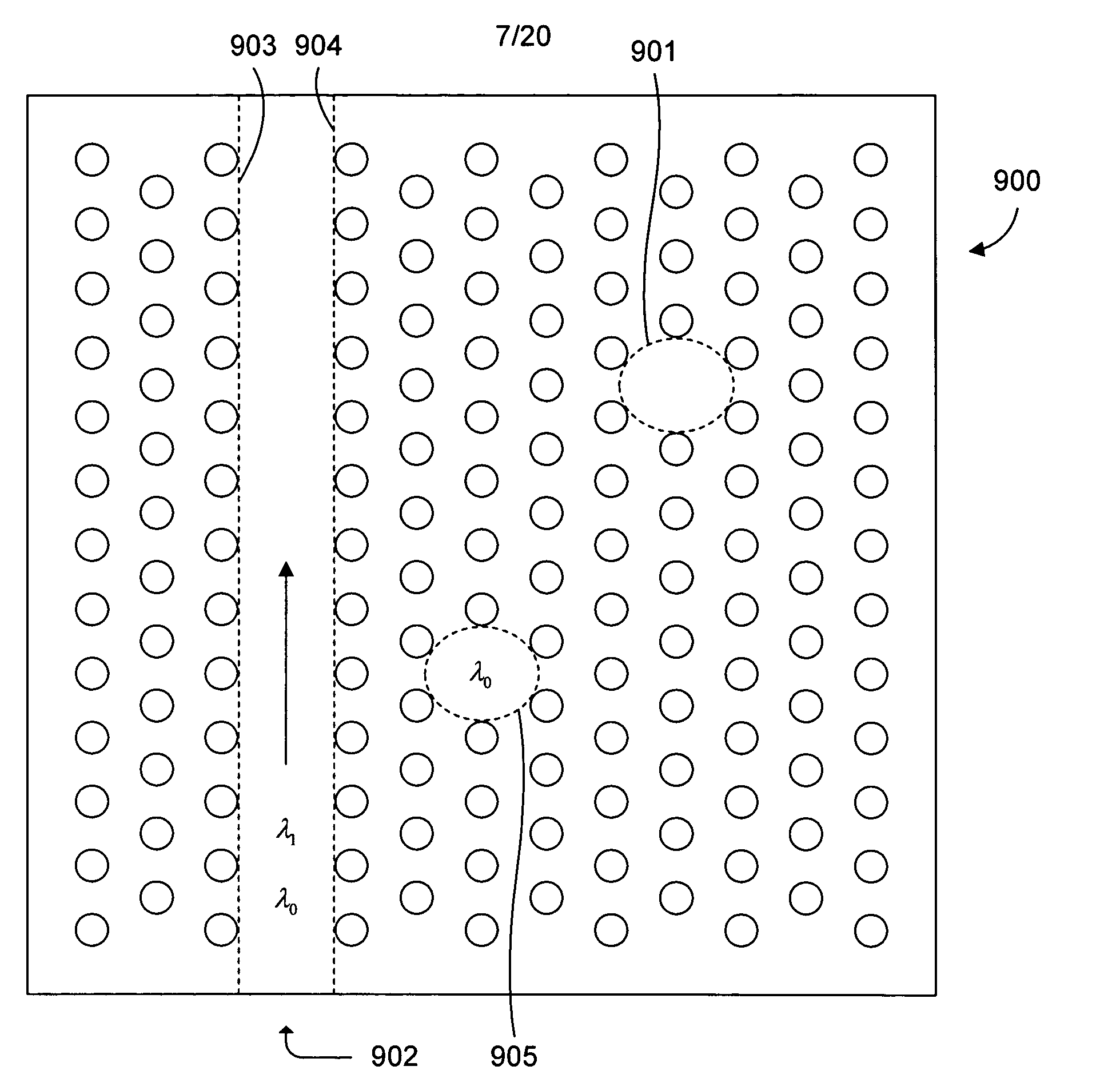

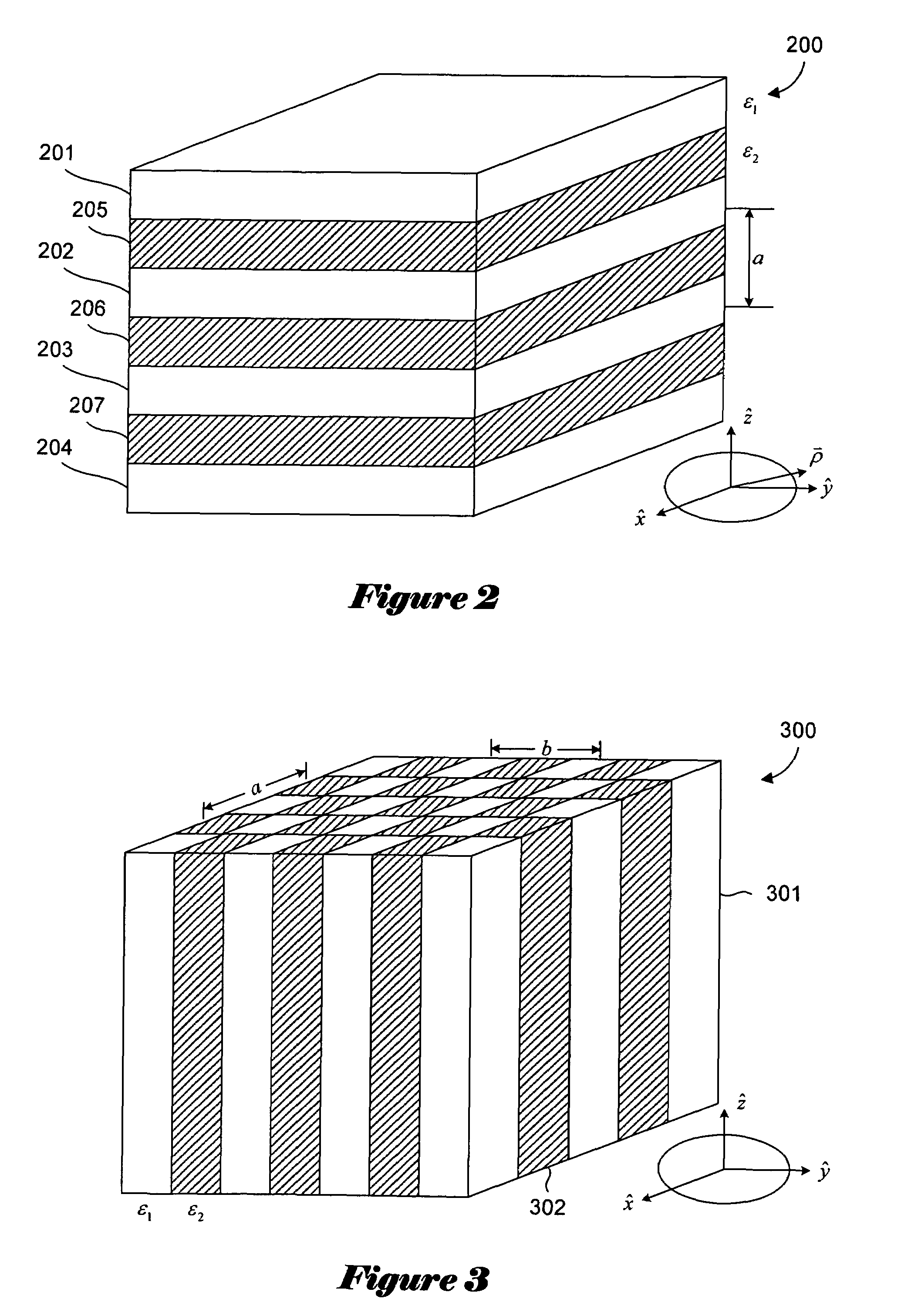

Photonic interconnections that include optical transmission paths for transmitting optical signals

Various embodiments of the present invention are directed to photonic interconnection architectures that provide high-speed interconnections of microscale or nanoscale devices. In one embodiment of the present invention, a photonic interconnection for interconnecting and synchronizing operation of components within a computing device comprises an optical transmission path for transmitting a number of independent frequency channels within an optical signal provided by an optical signal source. The photonic crystal may include one or more filters located near the waveguide for extracting specific frequency channels transmitted by the waveguide, the frequency channel including one or more frequency channels carrying a clock signal. One or more photodetectors positioned near the one or more filters convert the extracted frequency channels into electrical signals for use by one or more components of the computing device, the electrical signals including one or more clock signals for synchronizing operation of the one or more components.

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP

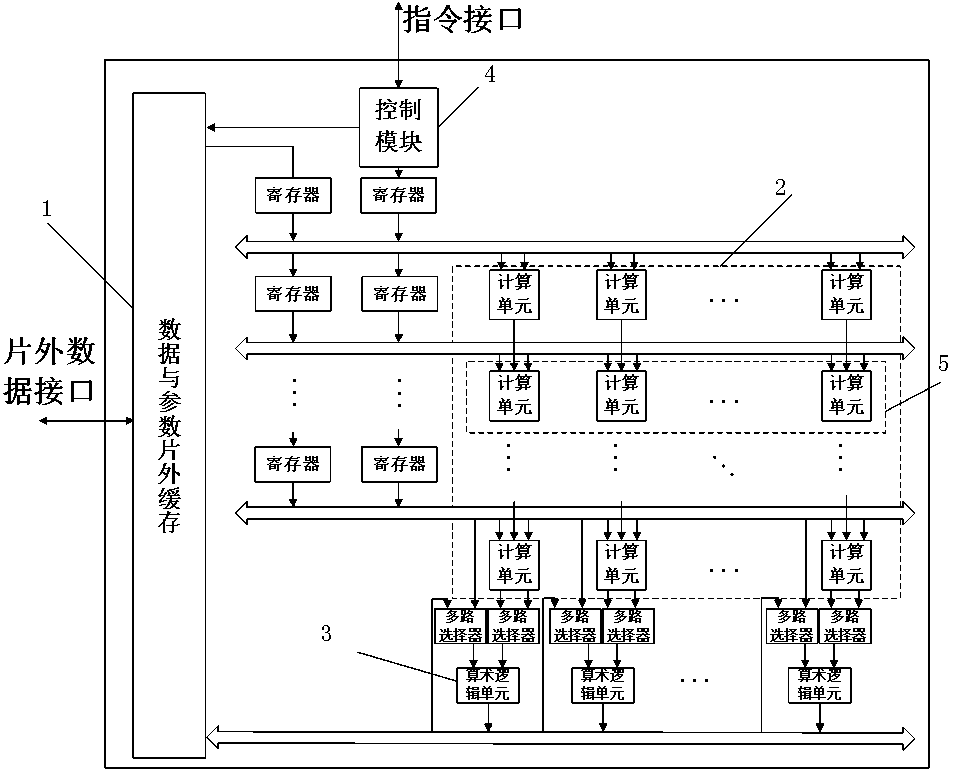

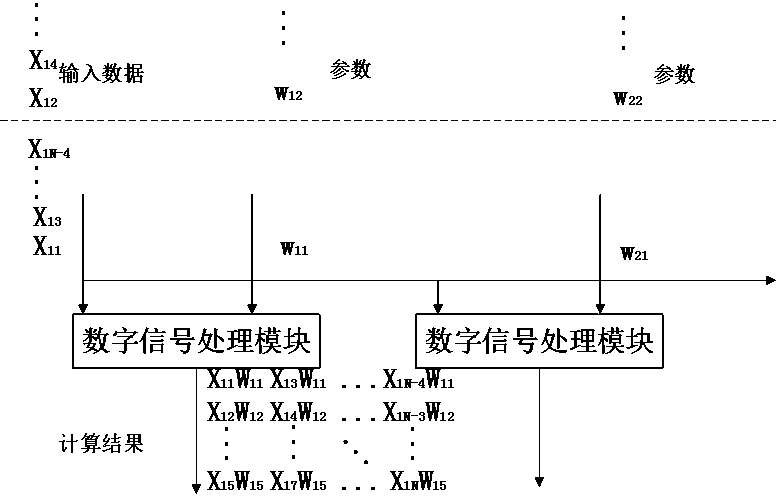

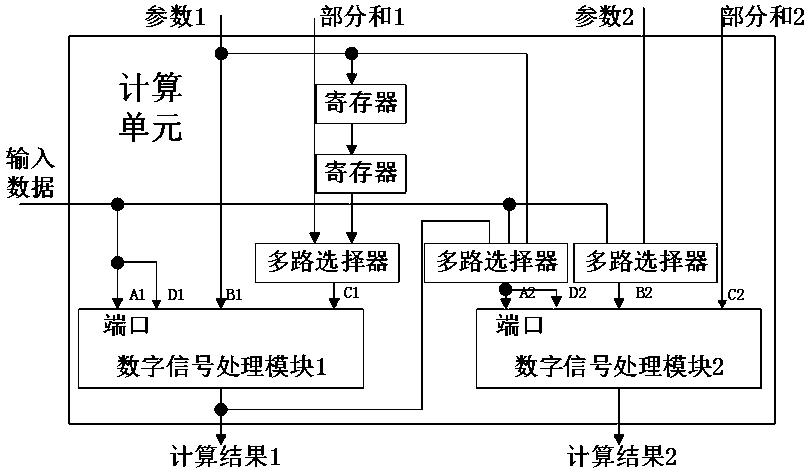

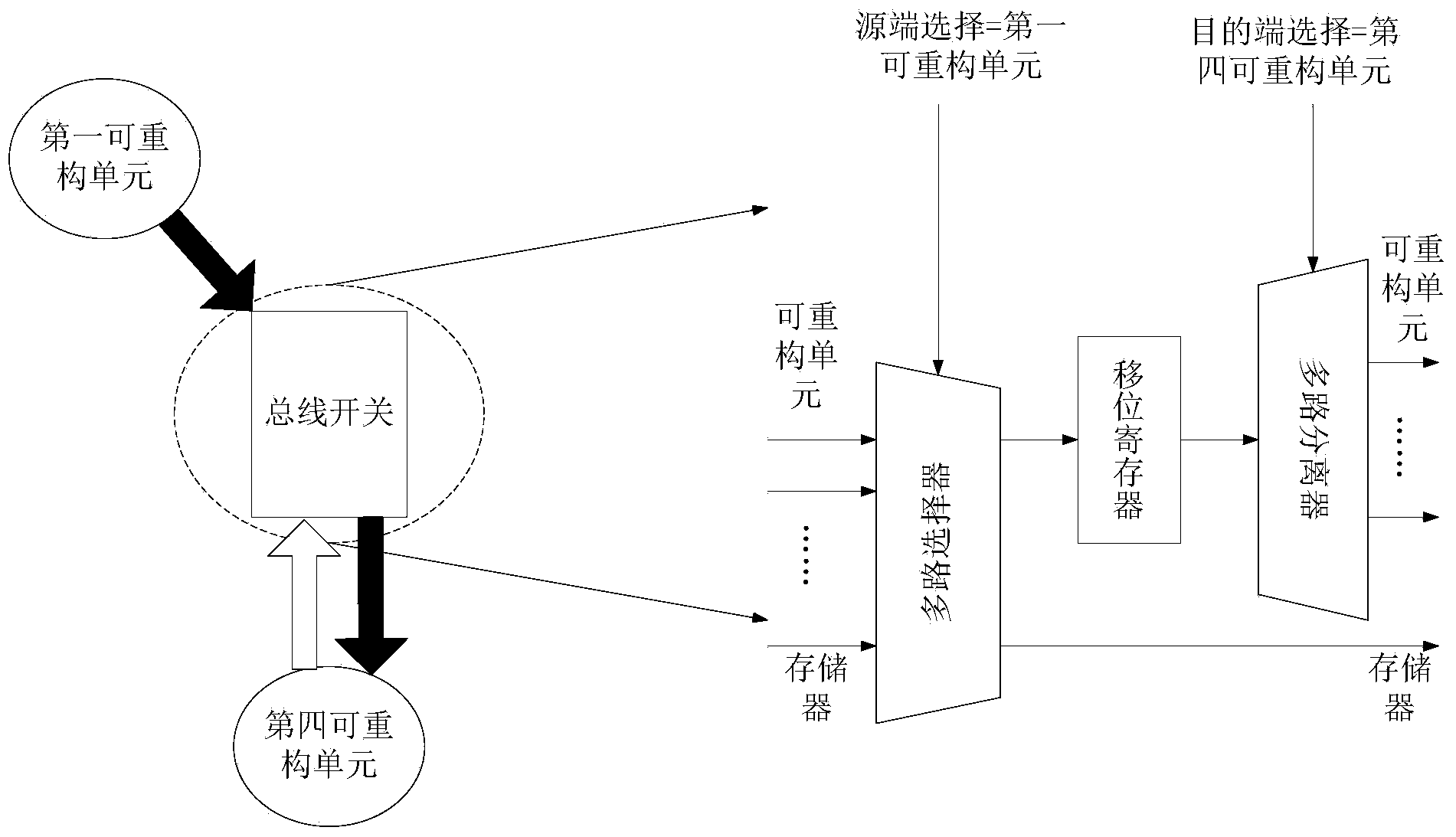

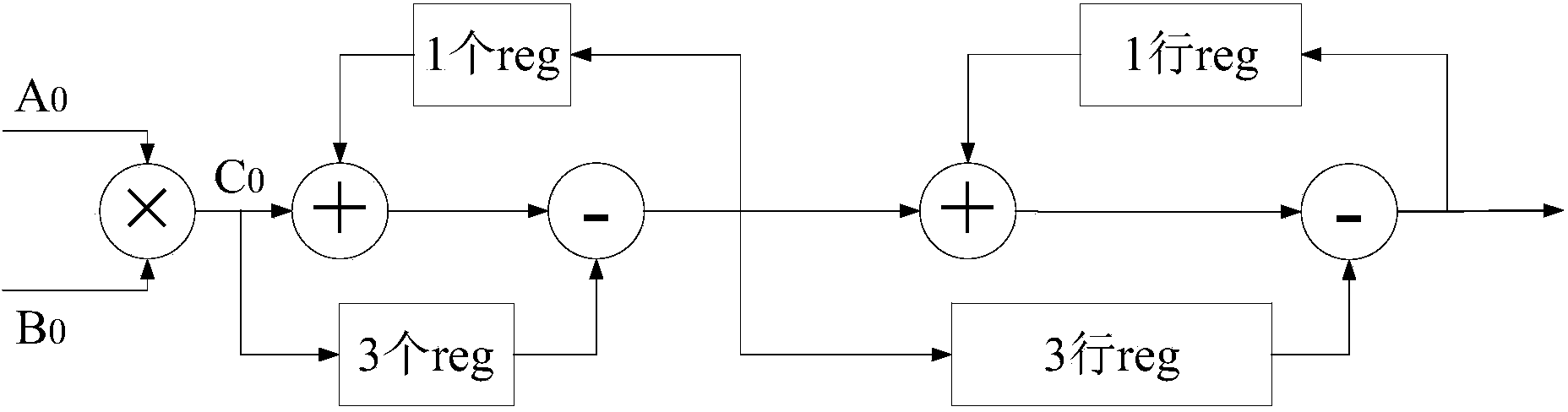

Hardware interconnection architecture of reconfigurable convolutional neural network

The invention belongs to the technical field of hardware design of image processing algorithms, and specifically discloses a hardware interconnection architecture of a reconfigurable convolutional neural network. The interconnection architecture comprises a data and parameter off-chip caching module, a basic calculation unit array module and an arithmetic logic unit calculation module. The data and parameter off-chip caching module caches the pixel data of the input to-be-processed pictures and the parameters input during convolutional neural network calculation; the basic calculation unit array module realizes the core calculation of the convolutional neural network; and the arithmetic logic unit calculation module processes the calculation results of the basic calculation unit array module, implementing the down-sampling layers, the activation functions and the accumulation of partial sums. The basic calculation unit array module is interconnected as a two-dimensional array: in the row direction, input data is shared and parallel calculation is realized by using different pieces of parameter data; in the column direction, a calculation result is transferred row by row to serve as input of the next row to participate in the operation. Through this structural interconnection, the hardware interconnection architecture reduces the bandwidth demand while enhancing data reuse.

Owner: FUDAN UNIV
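
The row and column data flow described in the abstract above can be illustrated with a small software model. This is only a sketch under assumptions not stated in the source: each row is assumed to receive one input value that is shared by every unit in that row, each unit is assumed to hold one parameter, and each unit is assumed to add its product to the partial sum handed down from the row above. The function name `pe_array_forward` is hypothetical.

```python
def pe_array_forward(inputs, weights):
    """Model a 2-D array of multiply-accumulate units (assumed behavior).

    inputs:  one value per row, shared by all units in that row.
    weights: weights[r][c] is the parameter held by the unit at row r, column c.
    Returns one accumulated result per column.
    """
    rows = len(weights)
    cols = len(weights[0]) if rows else 0
    if len(inputs) != rows:
        raise ValueError("expected one input value per row")

    partial = [0] * cols  # partial sums entering the first row
    for r in range(rows):
        shared_input = inputs[r]  # row direction: same input, different parameters
        # Column direction: each unit adds its product to the value from the row above.
        partial = [partial[c] + shared_input * weights[r][c] for c in range(cols)]
    return partial
```

In this model an input value is fetched once per row and reused by every column, which is one way to read the abstract's claim of reduced bandwidth demand through data reuse.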

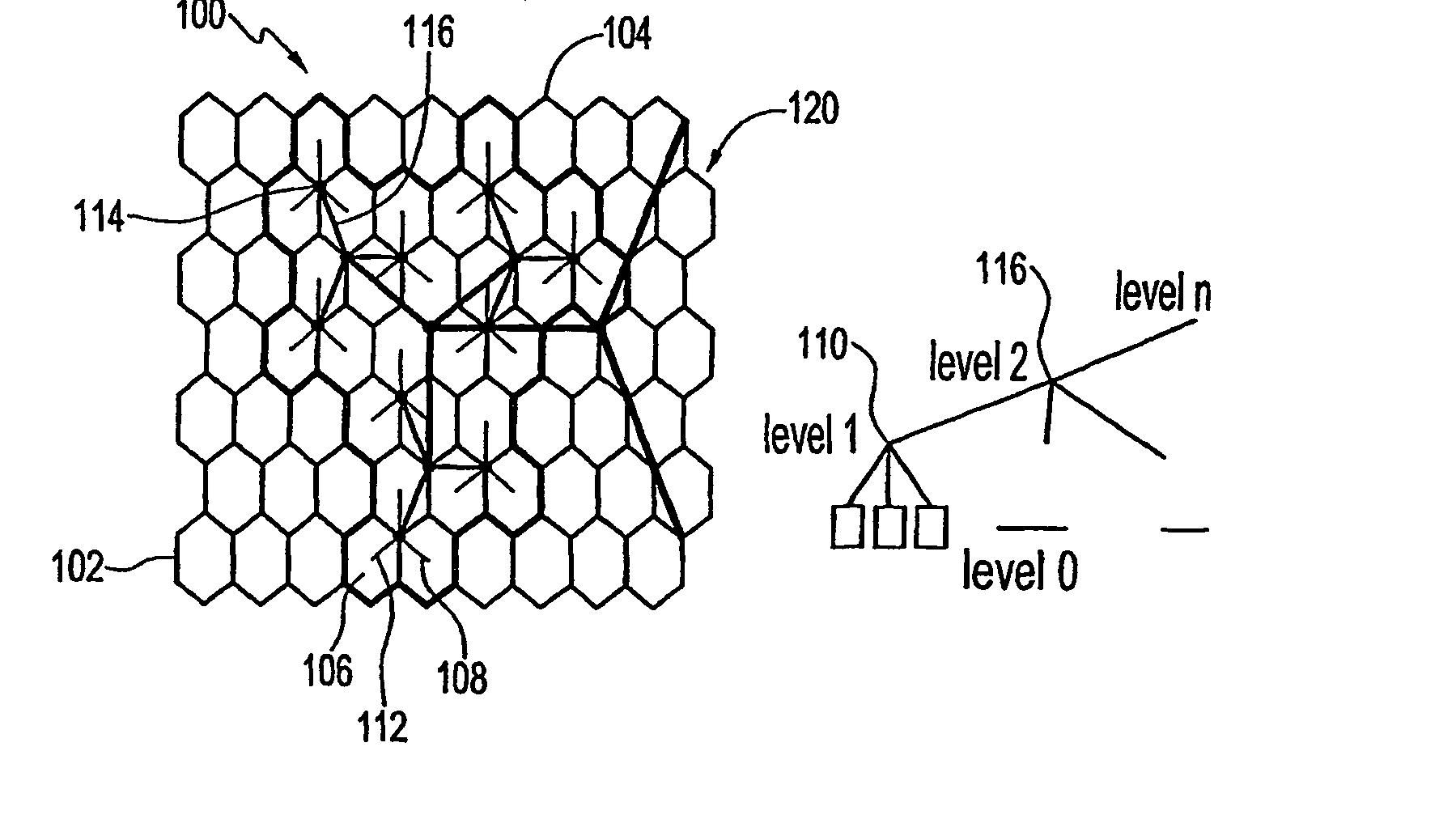

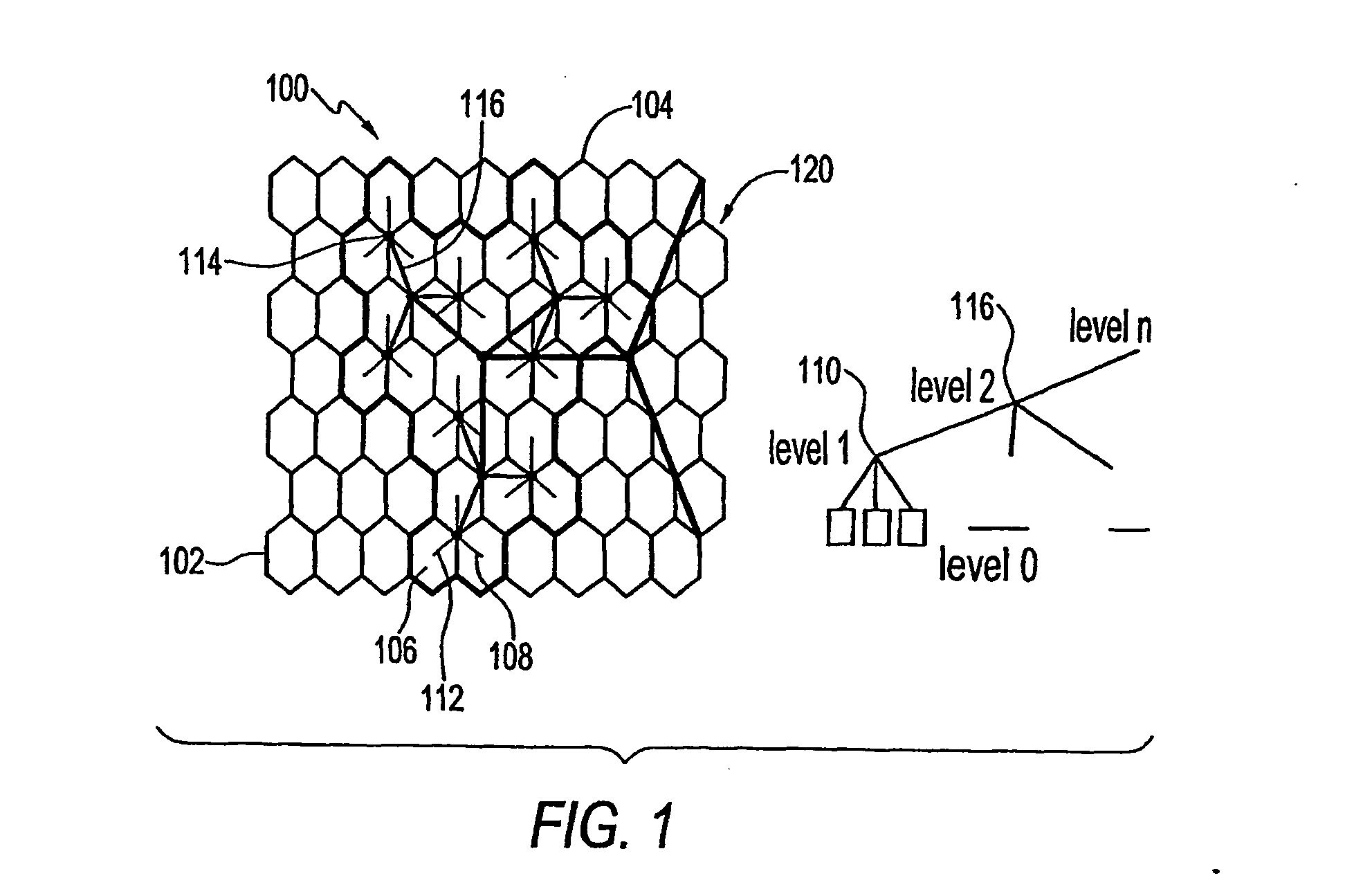

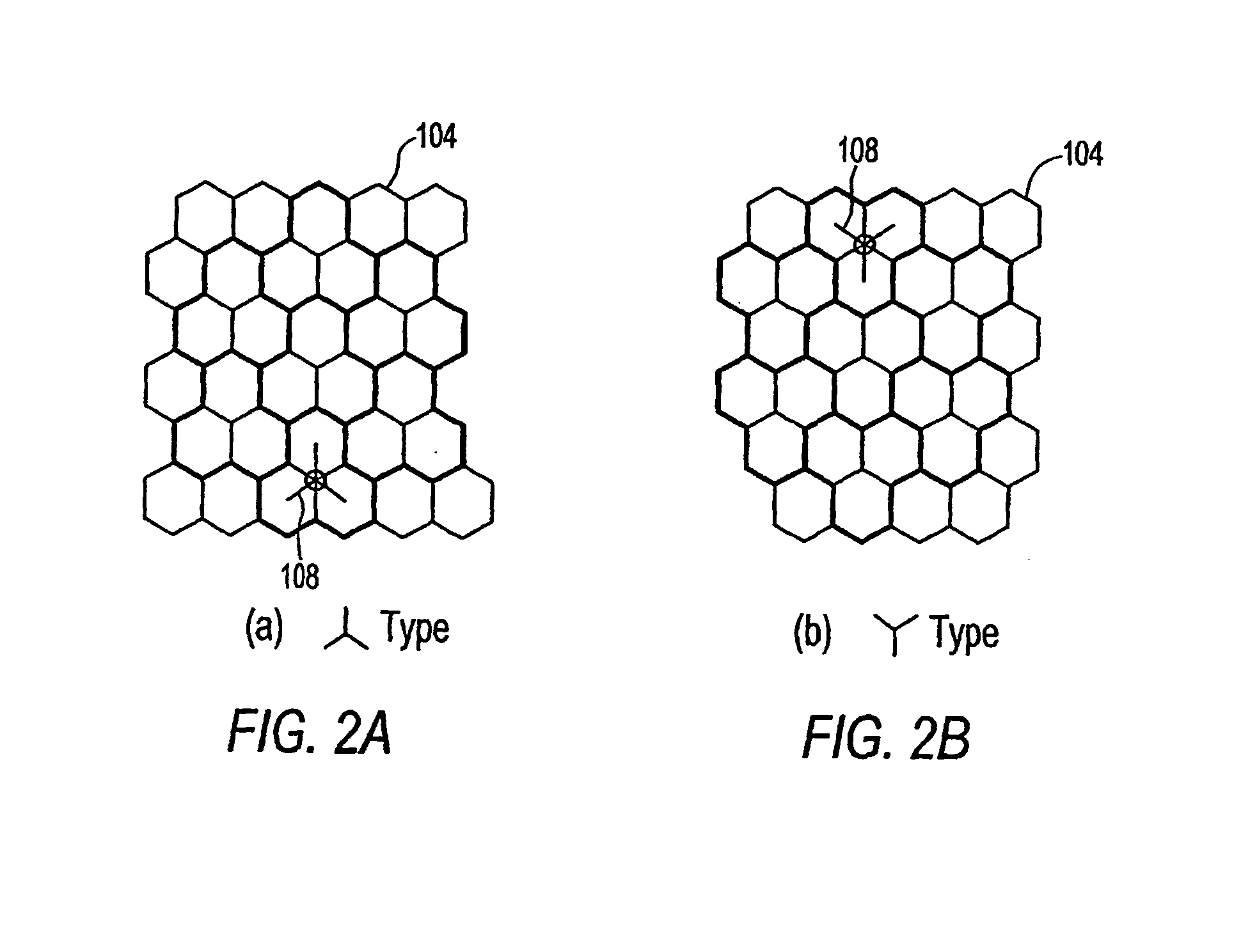

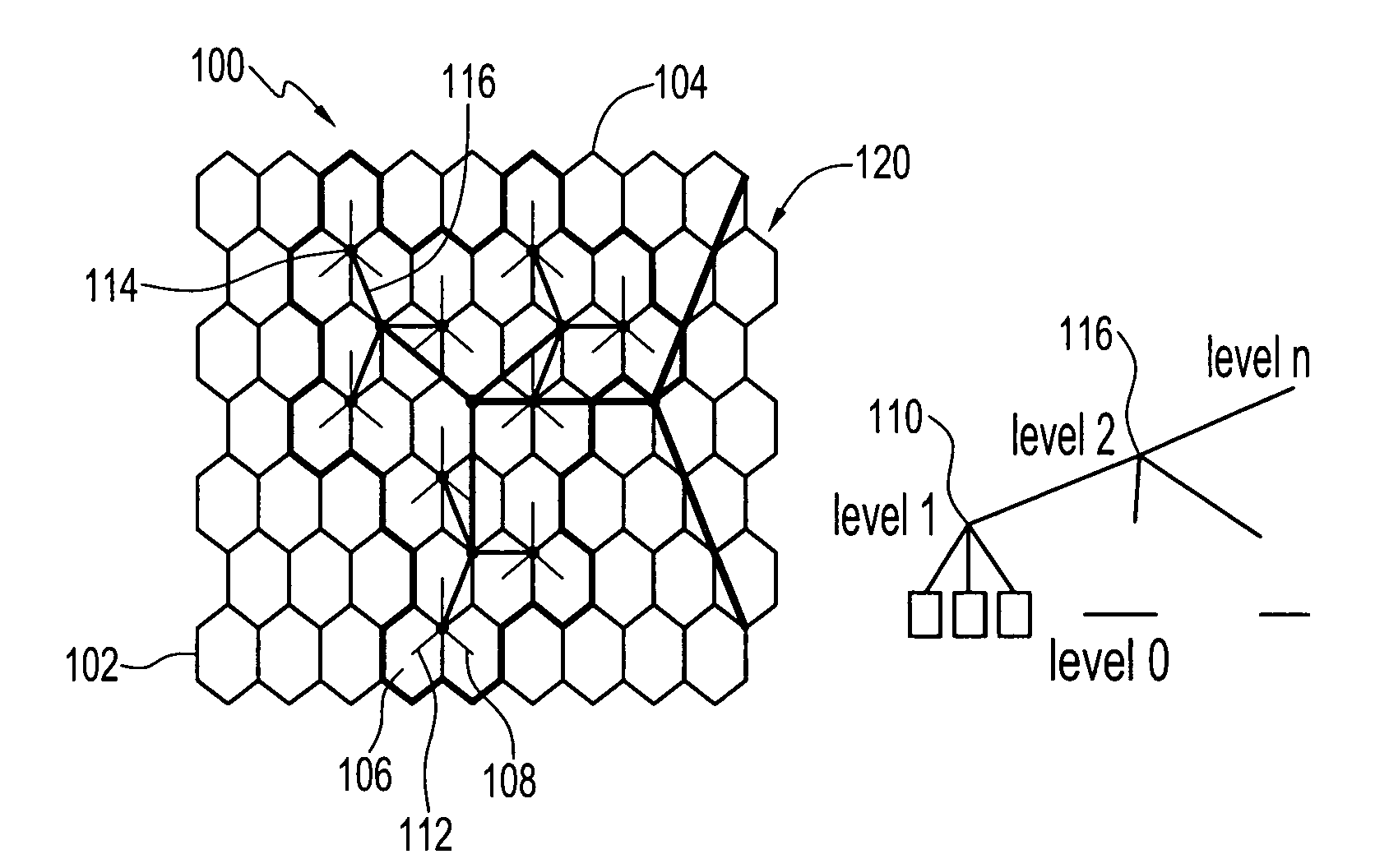

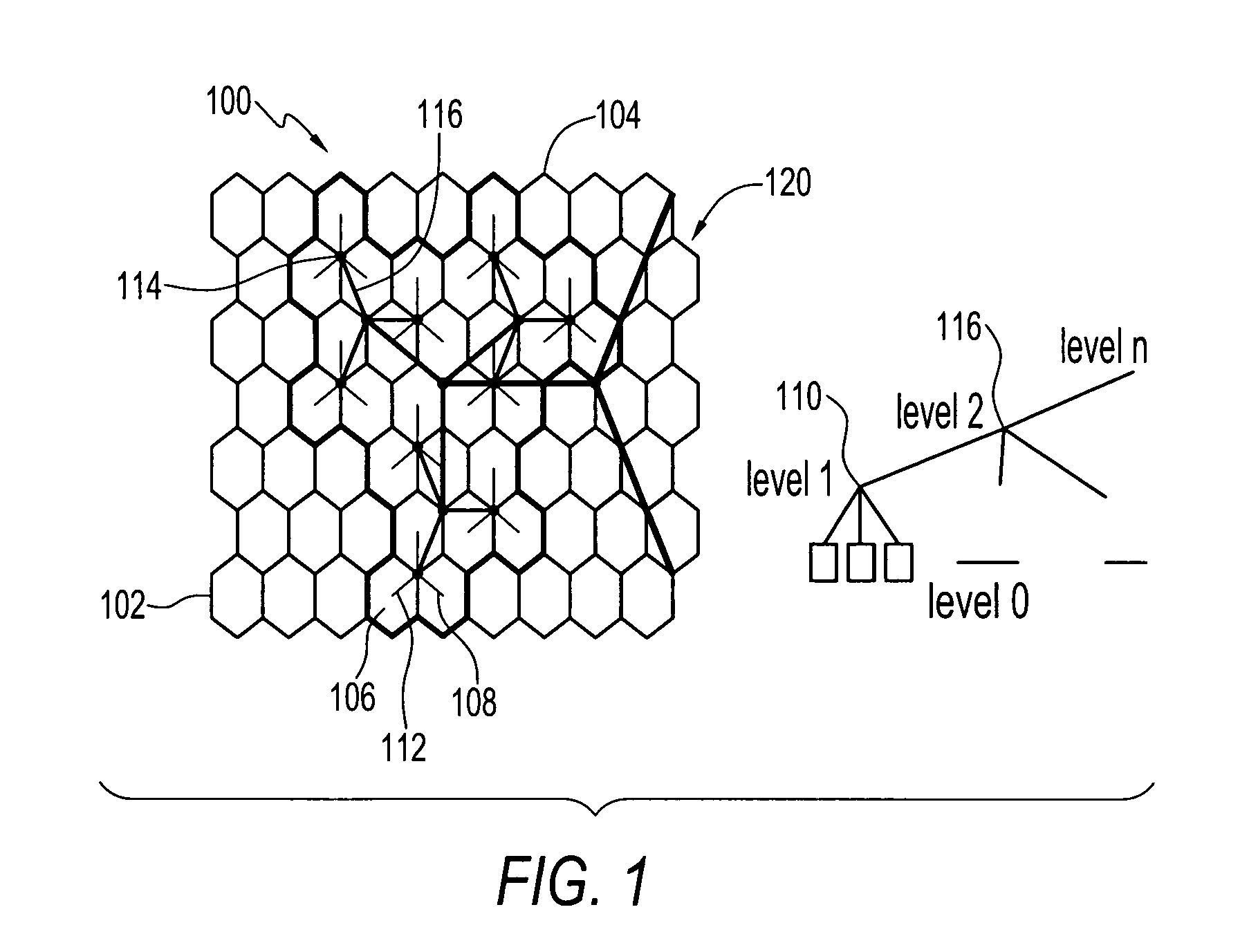

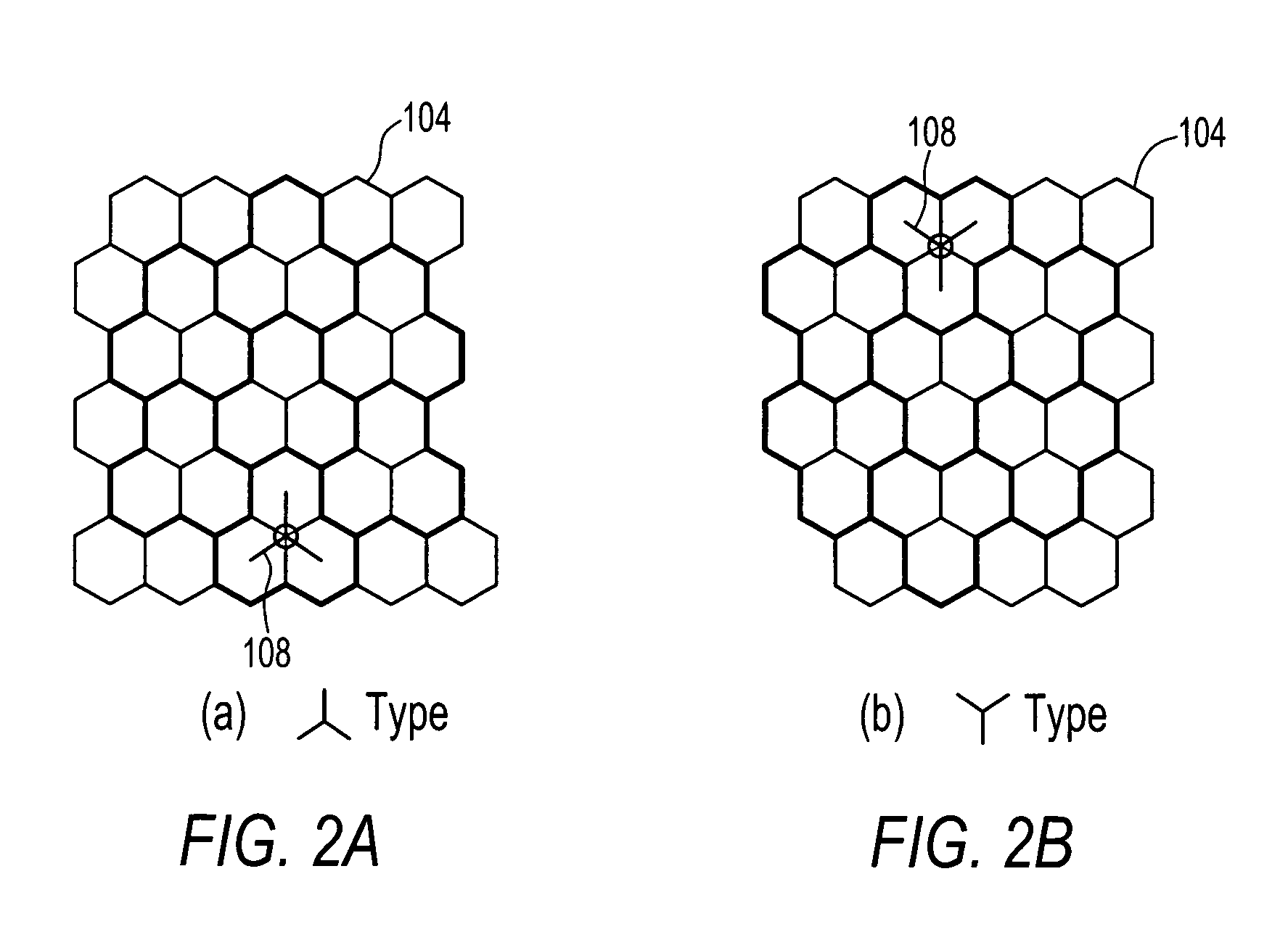

Interconnection architecture and method of assessing interconnection architecture

Inactive | US20060049468A1 | Transistor | Single instruction multiple data multiprocessors | Hexagonal cell | Engineering

Owner: RGT UNIV OF CALIFORNIA

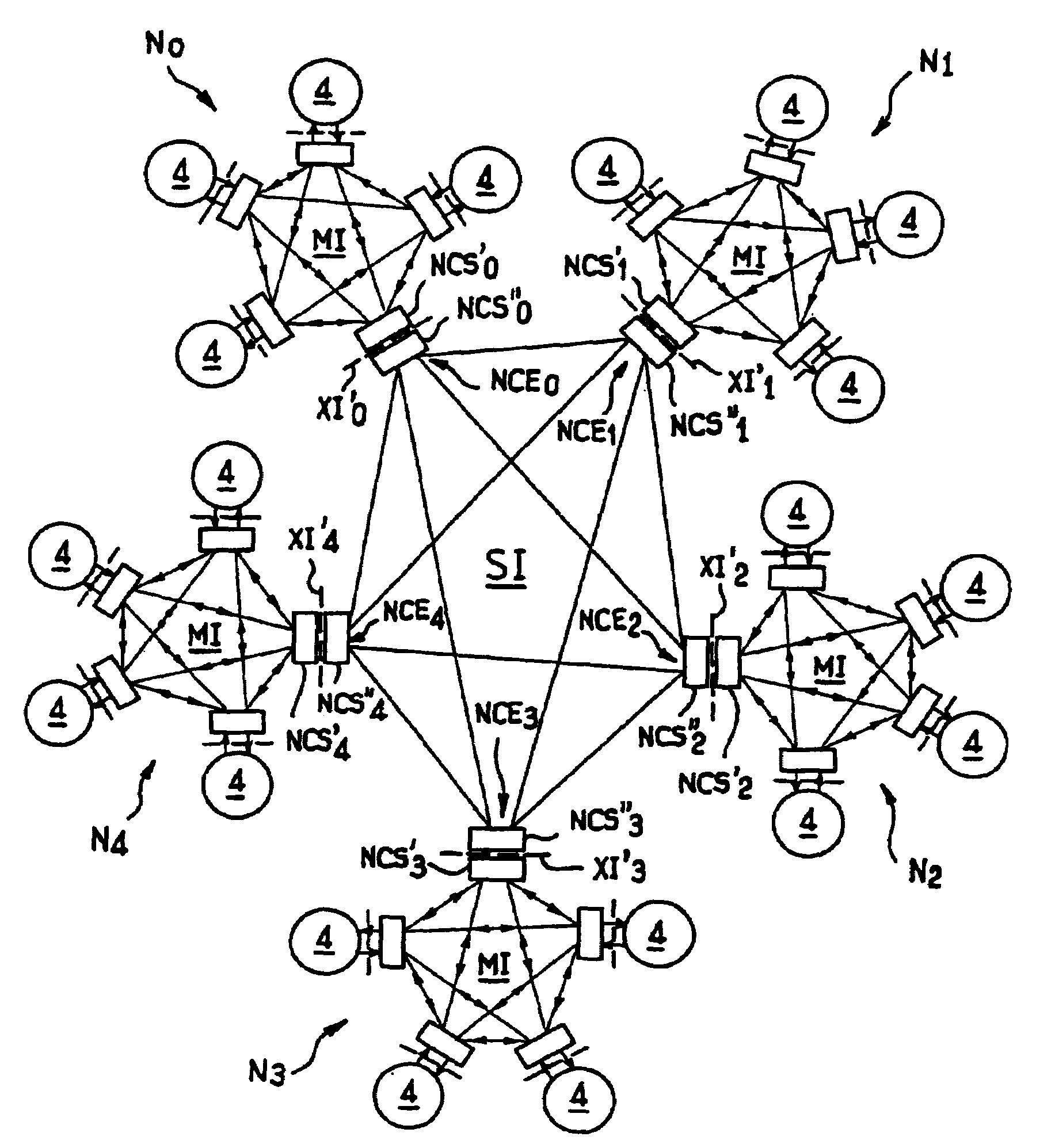

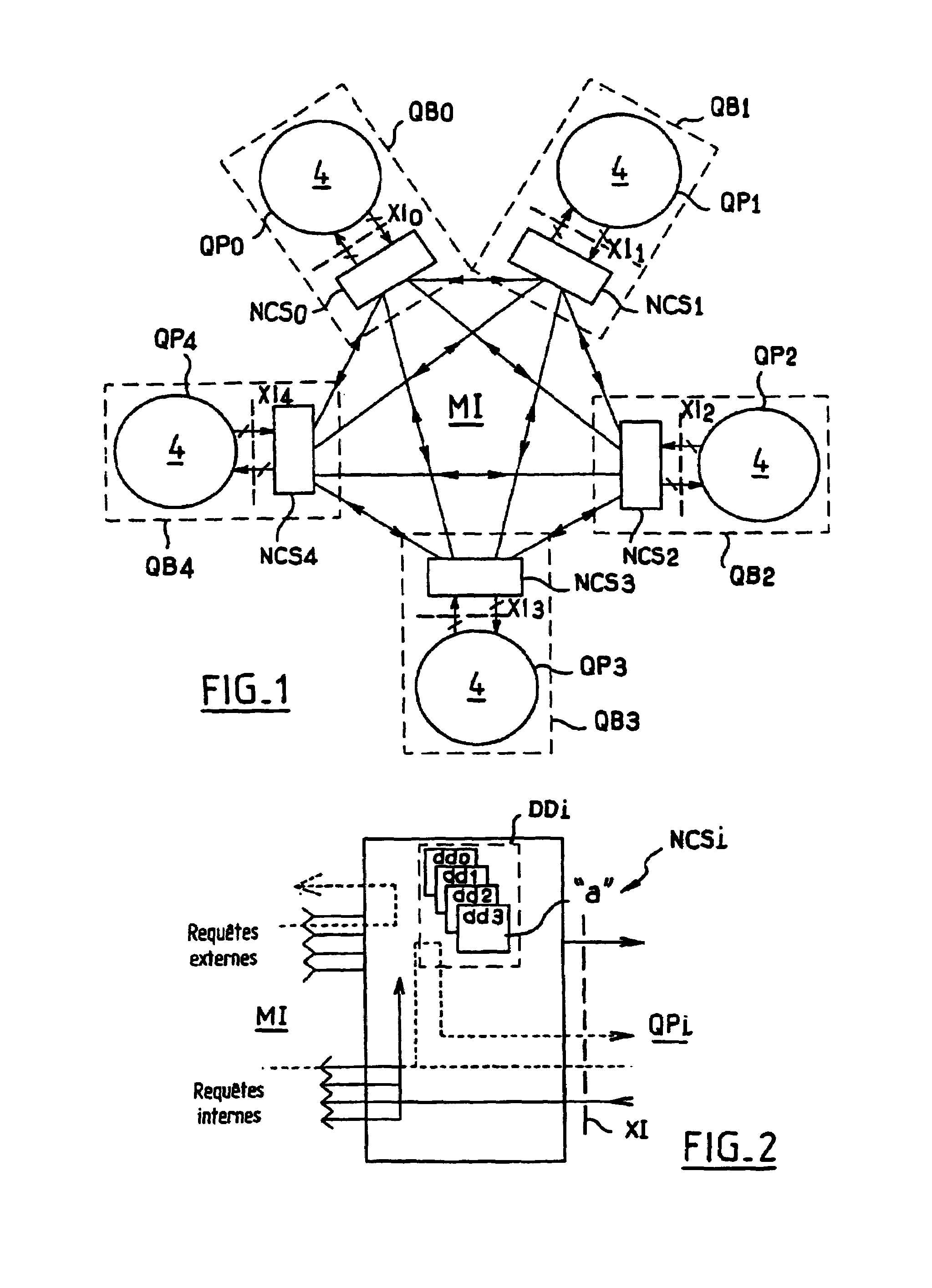

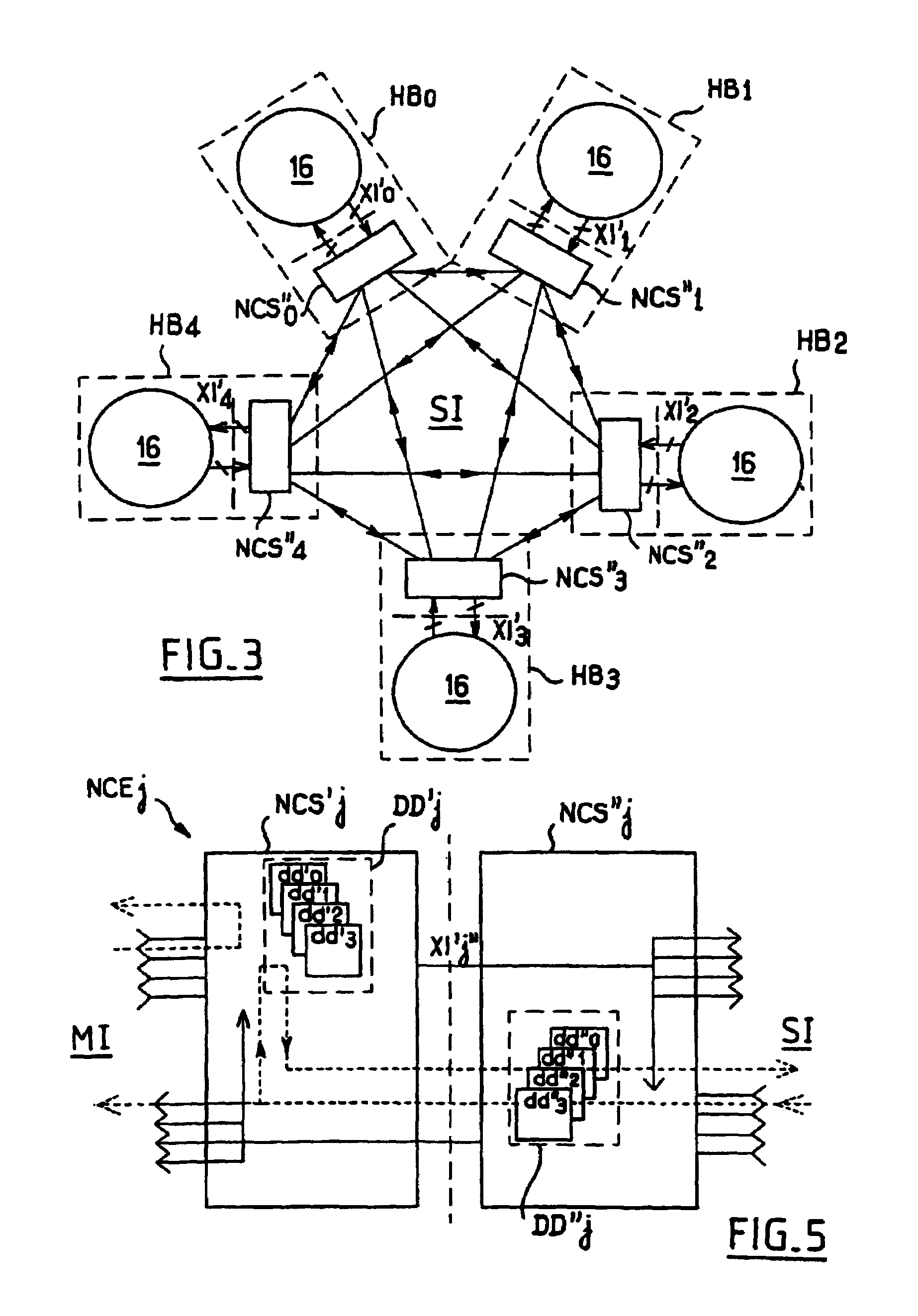

Modular interconnection architecture for an expandable multiprocessor machine, using a multilevel bus hierarchy and the same building block for all the levels

Inactive | US6996681B1 | Eliminate need | Memory addressing/allocation/relocation | Multiple digital computer combinations | Multi processor | Modularity

The present invention relates to a modular interconnection architecture for an expandable multiprocessor machine. It comprises a first interconnection level (MI) comprising connection agents (NCSi) that connect the multiprocessor modules and handle the transactions between the multiprocessor modules, and a second interconnection level (SI) comprising external connection nodes (NCEj) that connect the nodes (Nj) to one another and handle the transactions between the nodes (Nj). Each external connection node (NCEj) comprises two connection agents identical to the connection agent (NCSi), connected head-to-tail: one of the two agents (NCS′j) receives and filters the transactions sent by the node (Nj) to which it is connected, while the other agent (NCS″j) receives and filters the transactions sent by the other nodes (Nj) to which it is connected. Its applications specifically include the construction of an entire range of machines: UMA, QUASI-UMA, NUMA, cluster, etc.

Owner: BULL SA

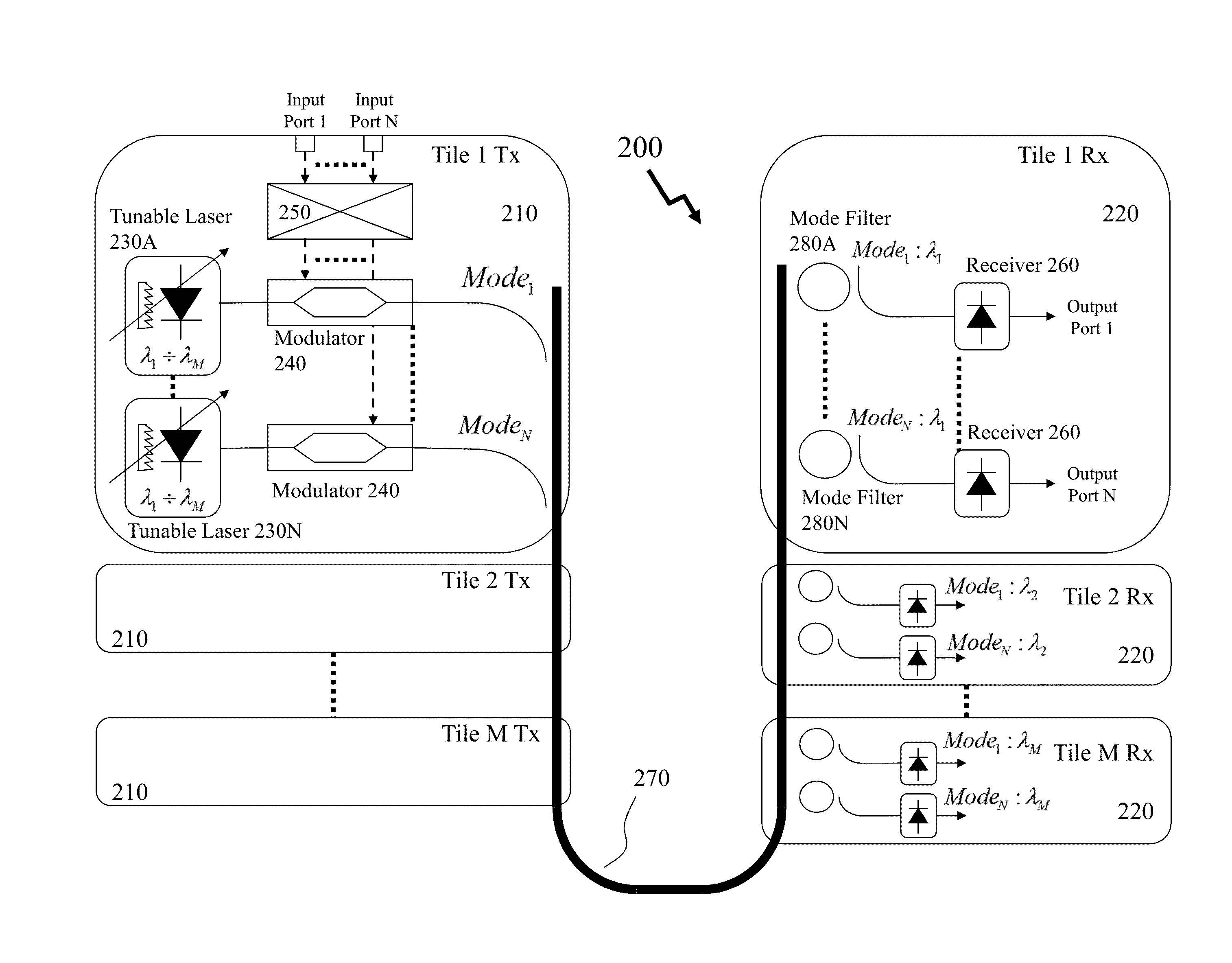

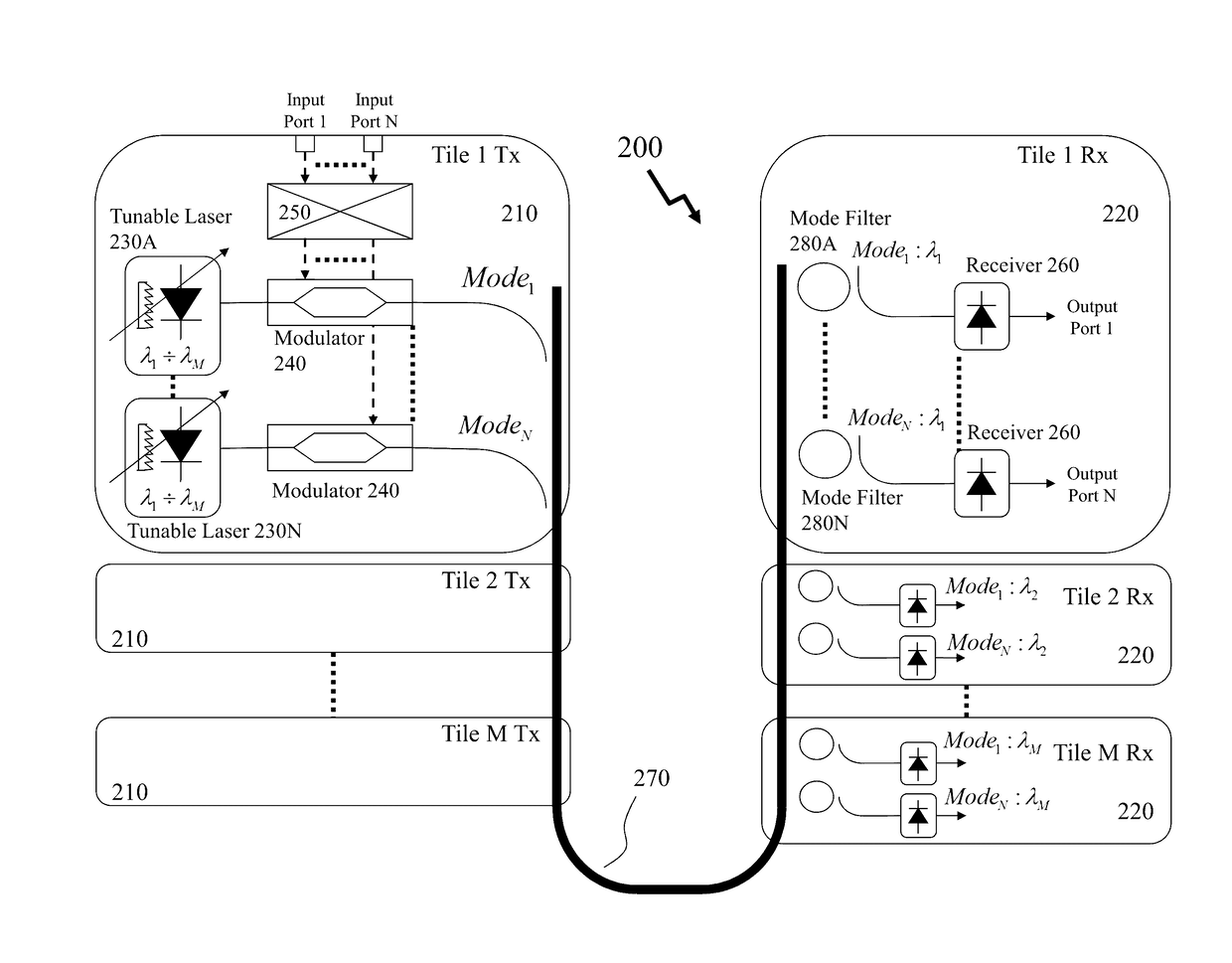

Optical interconnection methods and systems exploiting mode multiplexing

Active | US20160094308A1 | Address limitations | Multiplex system selection arrangements | Wavelength-division multiplex systems | Optical space | Mode division multiplexing

Optical solutions to address and overcome the issues of superseding / replacing electrical interconnection networks have generally exploited some form of optical space switching. Such optical space switching architectures required multiple switching elements, leading to increased power consumption and footprint issues. Accordingly, it would be beneficial for new optical, e.g. fiber optic or integrated optical, interconnection architectures to address the traditional hierarchical time-division multiplexed (TDM) space-based routing and interconnection to provide reduced latency, increased flexibility, lower cost, and lower power consumption. It would further be beneficial to exploit networks operating in multiple domains by overlaying mode division multiplexing to provide increased throughput in bus, point-to-point and multi-cast networks, for example, discretely or in combination with wavelength division multiplexing.

Owner: MCGILL UNIV +1

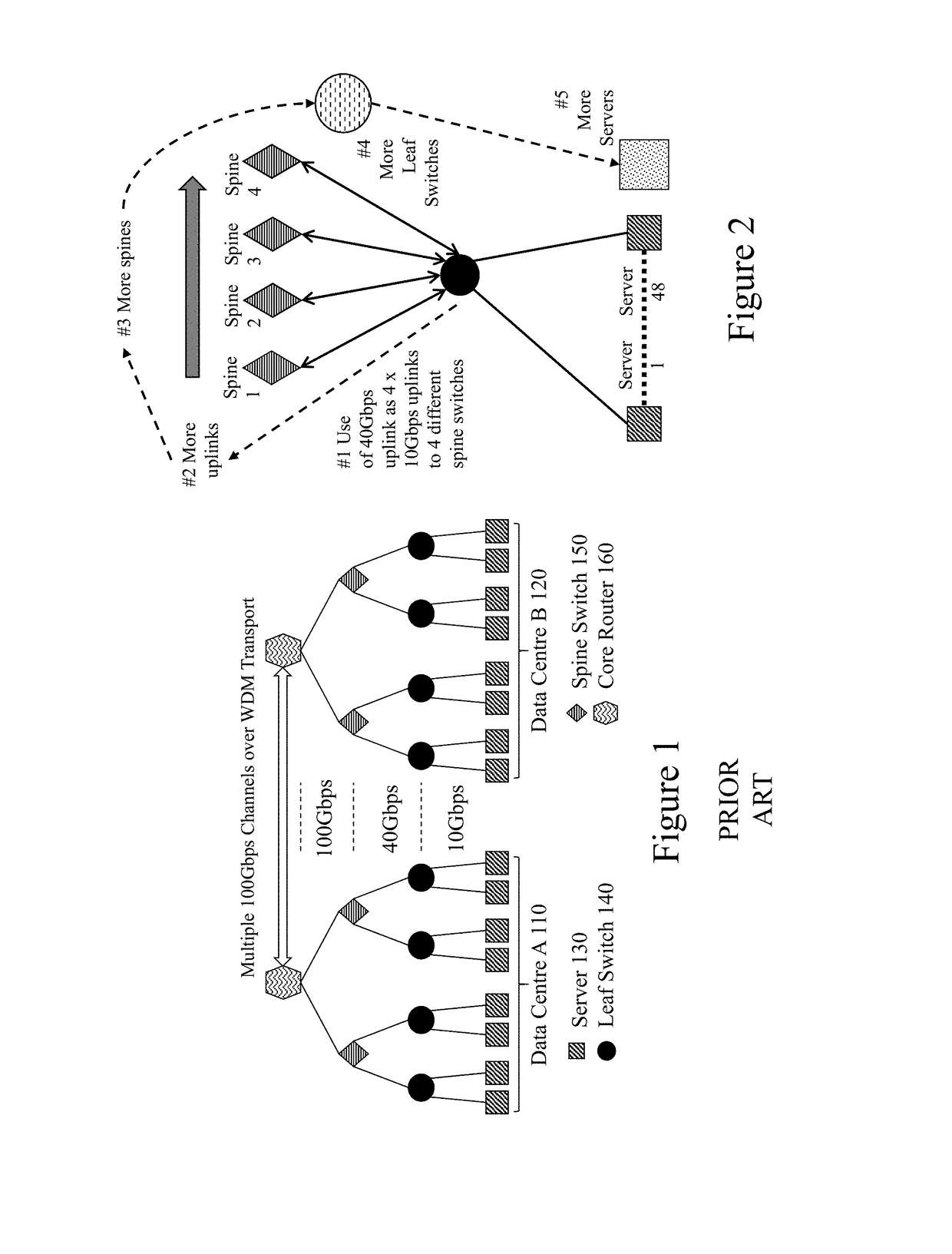

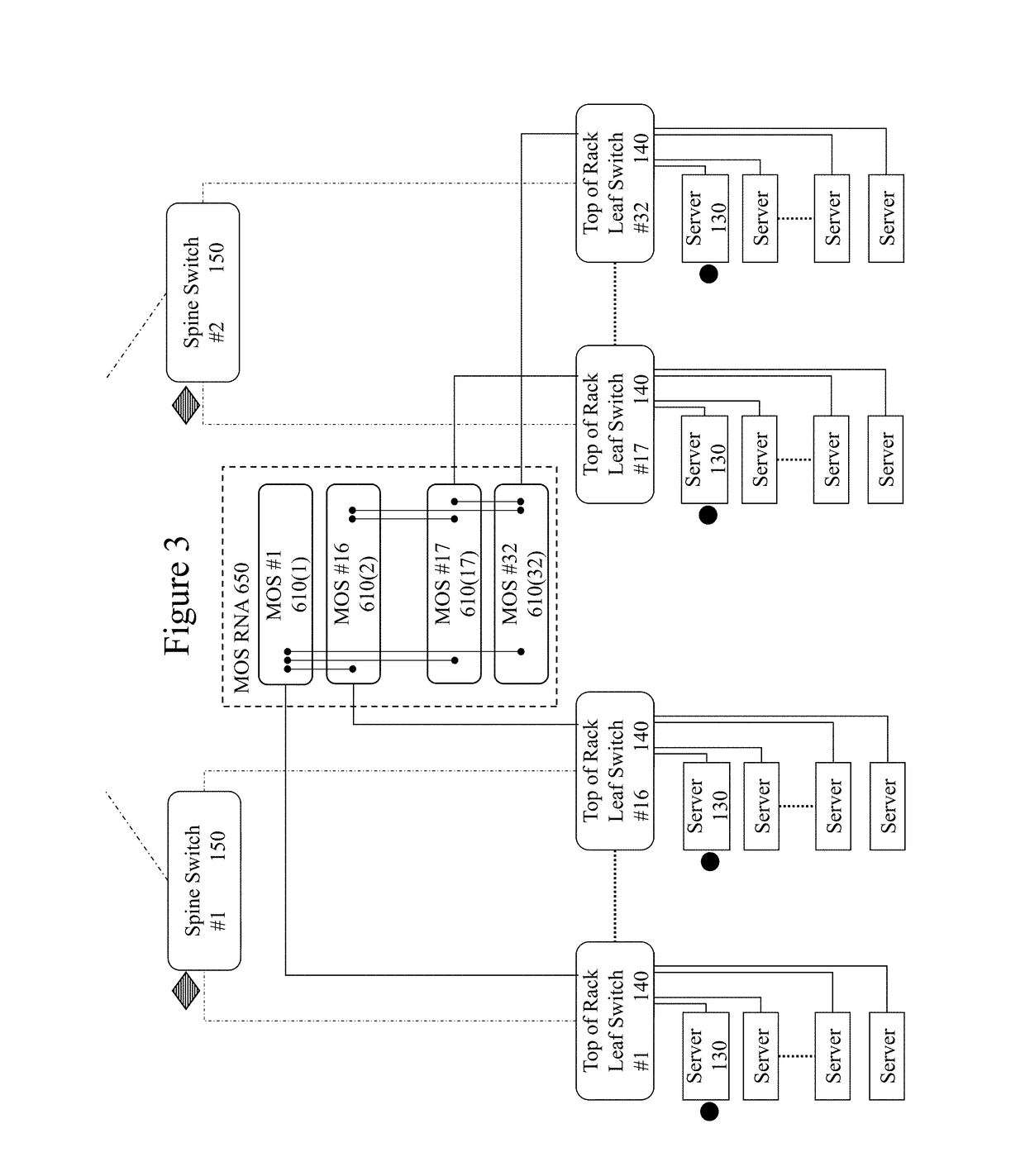

Photonic switches, photonic switching fabrics and methods for data centers

Active | US20180070157A1 | Reduce restrictions | Wavelength-division multiplex systems | Hybrid transport | Fiber | Data center

Data center interconnections, which encompass WSCs as well as traditional data centers, have become both a bottleneck and a cost / power issue for cloud computing providers, cloud service providers and cloud users generally. Fiber optic technologies already play critical roles in data center operations and will increasingly do so in the future. The goal is to move data as fast as possible with the lowest latency, the lowest cost and the smallest space consumption on the server blade and throughout the network. Accordingly, it would be beneficial for new fiber optic interconnection architectures to address the traditional hierarchical time-division multiplexed (TDM) routing and interconnection and to provide reduced latency, increased flexibility, lower cost and lower power consumption, with interconnections exploiting a scalable optical modular optically switched interconnection network as well as temporospatial switching fabrics allowing switching speeds below that of the slowest switching element within the switching fabric.

Owner: AEPONYX

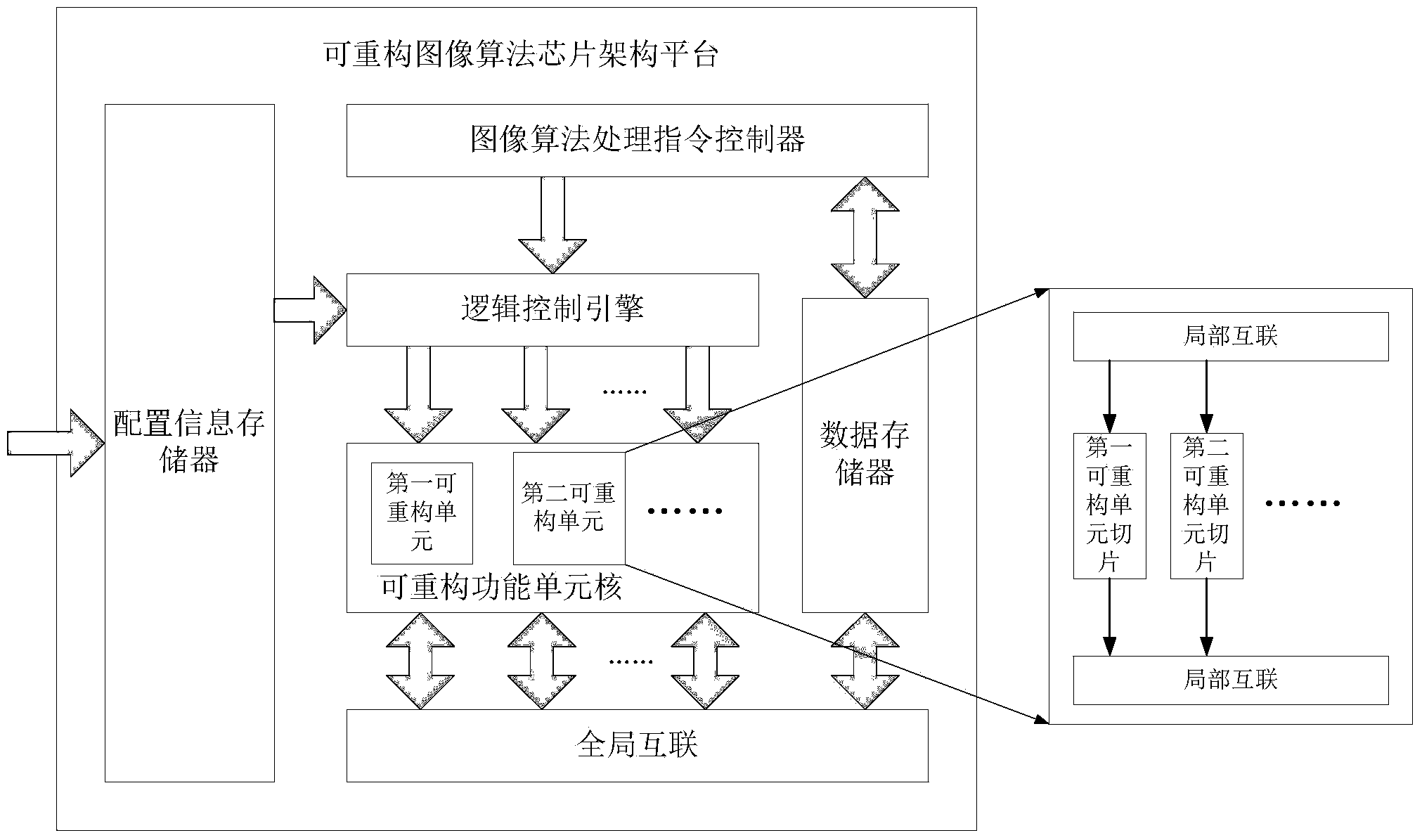

Design method of an image-processing-oriented reconfigurable architecture platform

Active | CN104112053A | Applicable design | Versatility | Special data processing applications | Imaging processing | Computer architecture

The invention discloses a design method for an image-processing-oriented reconfigurable architecture platform. The design method comprises the steps of: first, analyzing and summarizing the operation types of common complex algorithms; second, performing software / hardware partitioning of the image algorithm processing flow under the reconfigurable architecture; third, designing and implementing reconfigurable units for the image algorithm operation types and, on this basis, carrying out the scheme design and technical implementation of a global interconnection architecture for general complex algorithms; and finally, designing and implementing a generalized IP core based on the architecture.

Owner: TIANJIN JINHANG COMP TECH RES INST

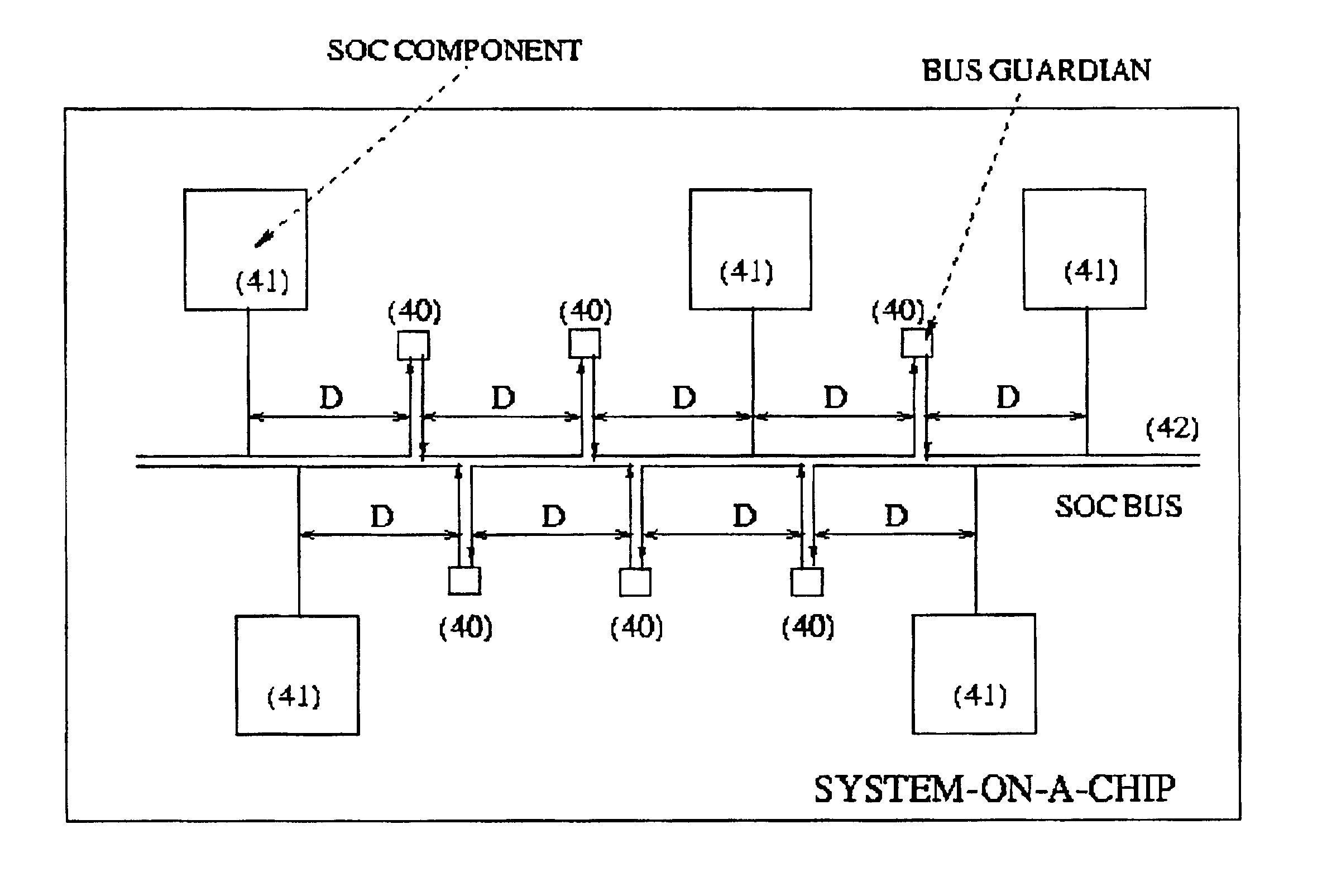

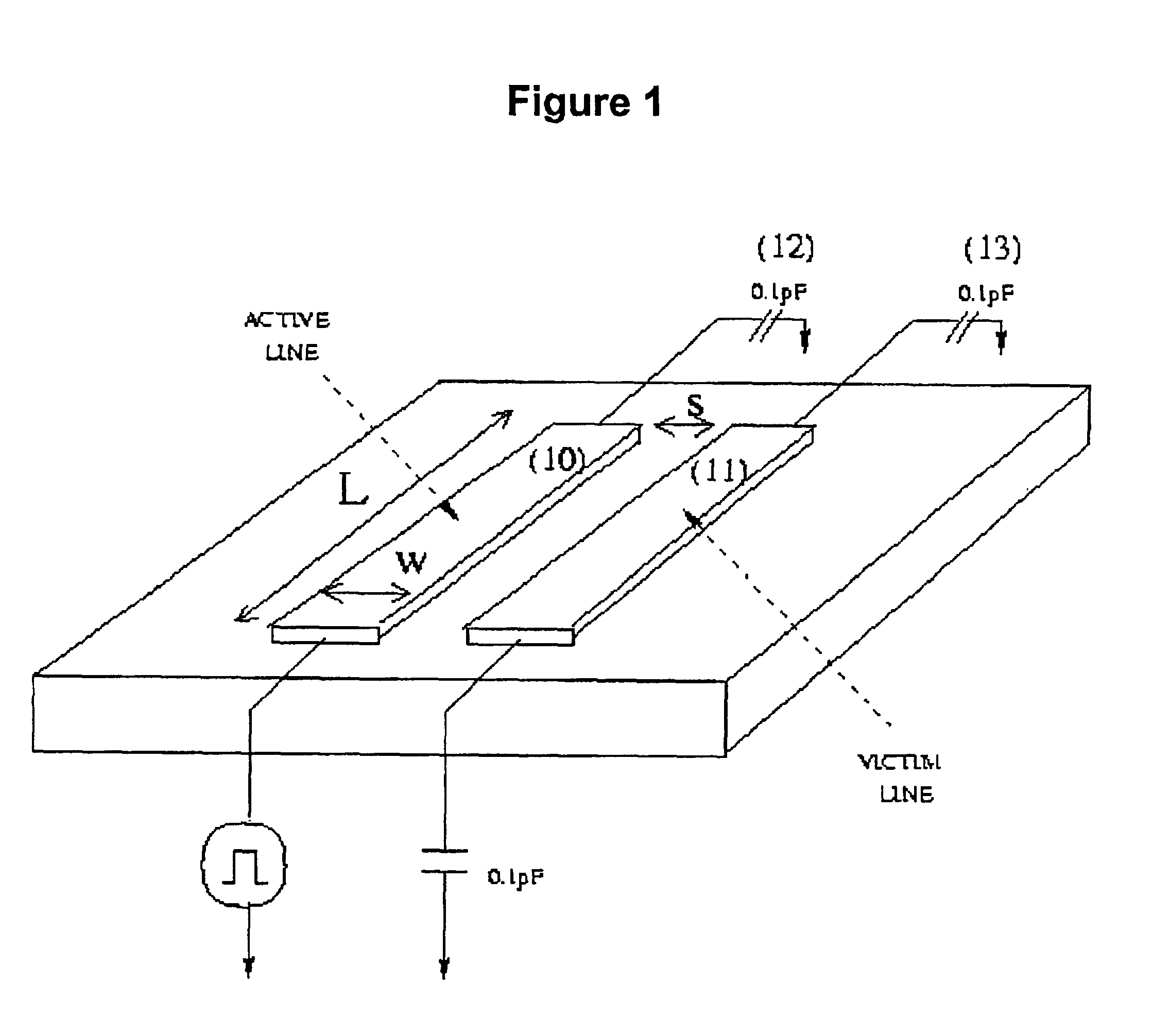

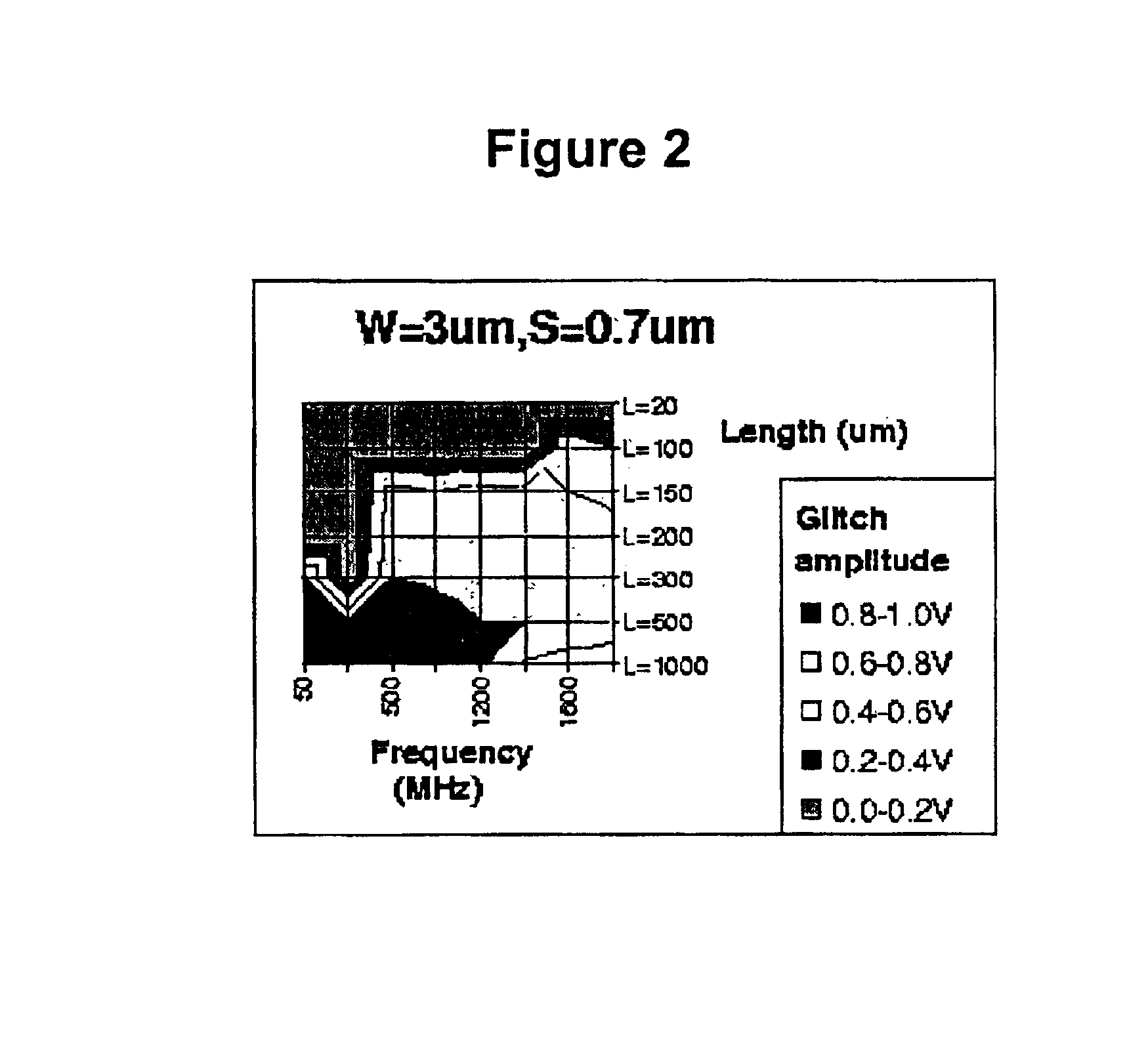

Method and apparatus for online detection and correction of faults affecting system-on-chip buses

Inactive | US6880112B2 | Error prevention/detection by using return channel | Correct operation testing | Hardware modules | CODE protocol

This paper presents a methodology for designing System-On-Chip interconnection architectures that provide a high level of protection from crosstalk effects. An event-driven simulator enriched with fault injection capabilities is exploited to evaluate the dependability level of the system being designed. The simulation environment supports several bus coding protocols, so designers can easily evaluate different design alternatives. To enhance the dependability level of the interconnection architecture, we propose a distributed bus guardian scheme, where dedicated hardware modules monitor the integrity of the information transmitted over the bus and provide error correction mechanisms.

Owner: NEC CORP

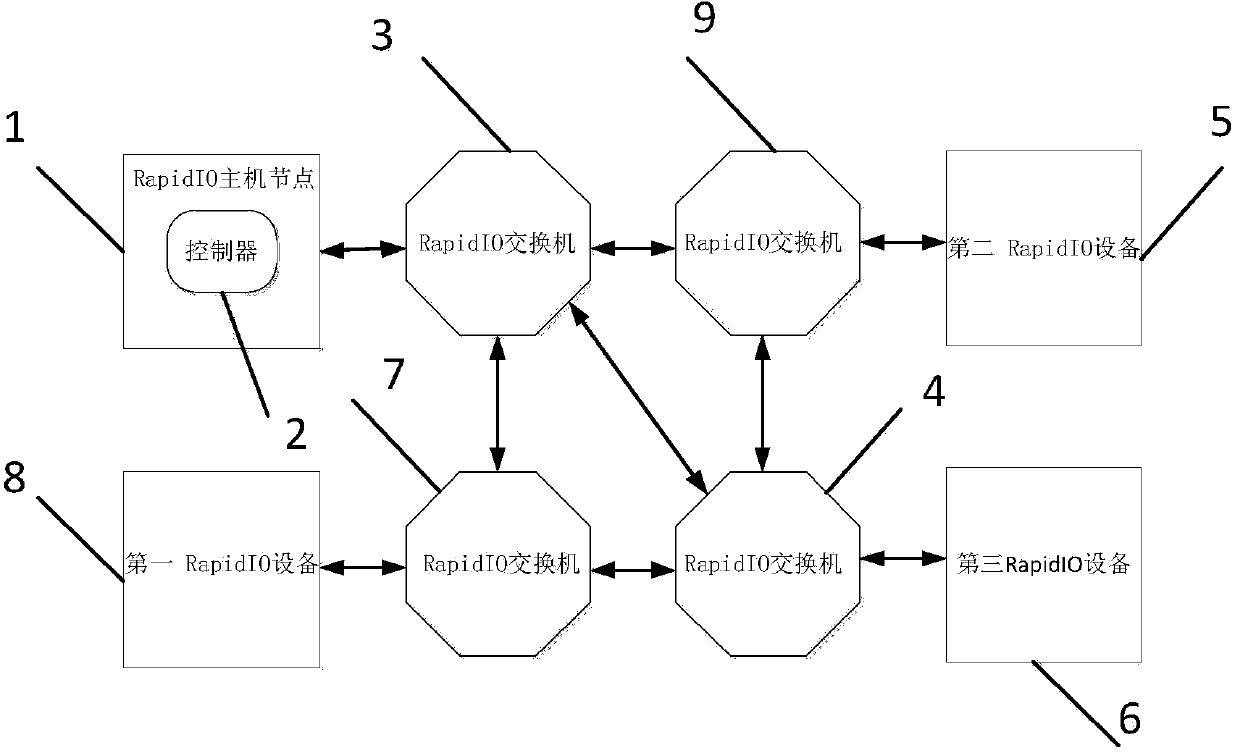

RapidIO network recursive enumeration method

Inactive | CN103746910A | Solve the problem that the optimal path cannot be selected | Data switching networks | Distributed computing | External connection

The invention pertains to the field of data communication and relates to a RapidIO network recursive enumeration method. It provides a recursive enumeration and networking method for RapidIO networks based on depth-first path weighting: the shortest communication path between the master and each slave in the RapidIO network is calculated, yielding a dynamic optimal-path enumeration method that has a simple structure, good adaptability and good reliability, and that supports changes in the externally connected devices. The IDT Tsi578 is used as the RapidIO switch to realize an optimal RapidIO networking scheme based on the Tsi578 switch device. The technical scheme is as follows: in a system that uses the RapidIO bus as the interconnection architecture, the RapidIO master node is taken as the reference and the route hop count is taken as the weight to calculate the distance between each slave node in the RapidIO network and the master node; for each newly discovered slave node, the shortest path between that slave node and the master node is selected dynamically, and the routing information table for each slave node ID in the corresponding Tsi578 switch device is set according to the shortest path.

Owner: SUZHOU CHANGFENG AVIATION ELECTRONICS
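
The hop-weighted, depth-first search described in the abstract above can be sketched in a few lines. This is an illustration only, not the patented procedure: the topology is assumed to be a plain adjacency mapping, every link is assumed to cost one hop, and the name `enumerate_shortest_hops` is hypothetical. It performs no RapidIO maintenance transactions and does not program any Tsi578 routing table.

```python
def enumerate_shortest_hops(links, master):
    """Depth-first walk from the master that keeps the lowest hop count per node.

    links:  dict mapping each node to the list of nodes it is wired to.
    master: the node used as the reference (hop count 0).
    Returns (best, route): best[node] is the smallest hop count found and
    route[node] is the node sequence from the master that achieves it.
    """
    best = {master: 0}
    route = {master: [master]}

    def visit(node, hops, path):
        for neighbor in links.get(node, []):
            candidate = hops + 1
            # Re-explore a node only when a strictly shorter path to it is found.
            if neighbor not in best or candidate < best[neighbor]:
                best[neighbor] = candidate
                route[neighbor] = path + [neighbor]
                visit(neighbor, candidate, path + [neighbor])

    visit(master, 0, [master])
    return best, route
```

Because a node is revisited whenever a shorter path to it appears, a device first reached over a long branch is later reassigned to the shorter route, which mirrors the abstract's dynamic selection of the shortest path for each newly found slave node. A real implementation would then write the chosen route into the switch routing tables.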

Interconnection architecture and method of assessing interconnection architecture

Inactive | US7622779B2 | Transistor | Single instruction multiple data multiprocessors | Hexagonal cell | Engineering

Owner: RGT UNIV OF CALIFORNIA

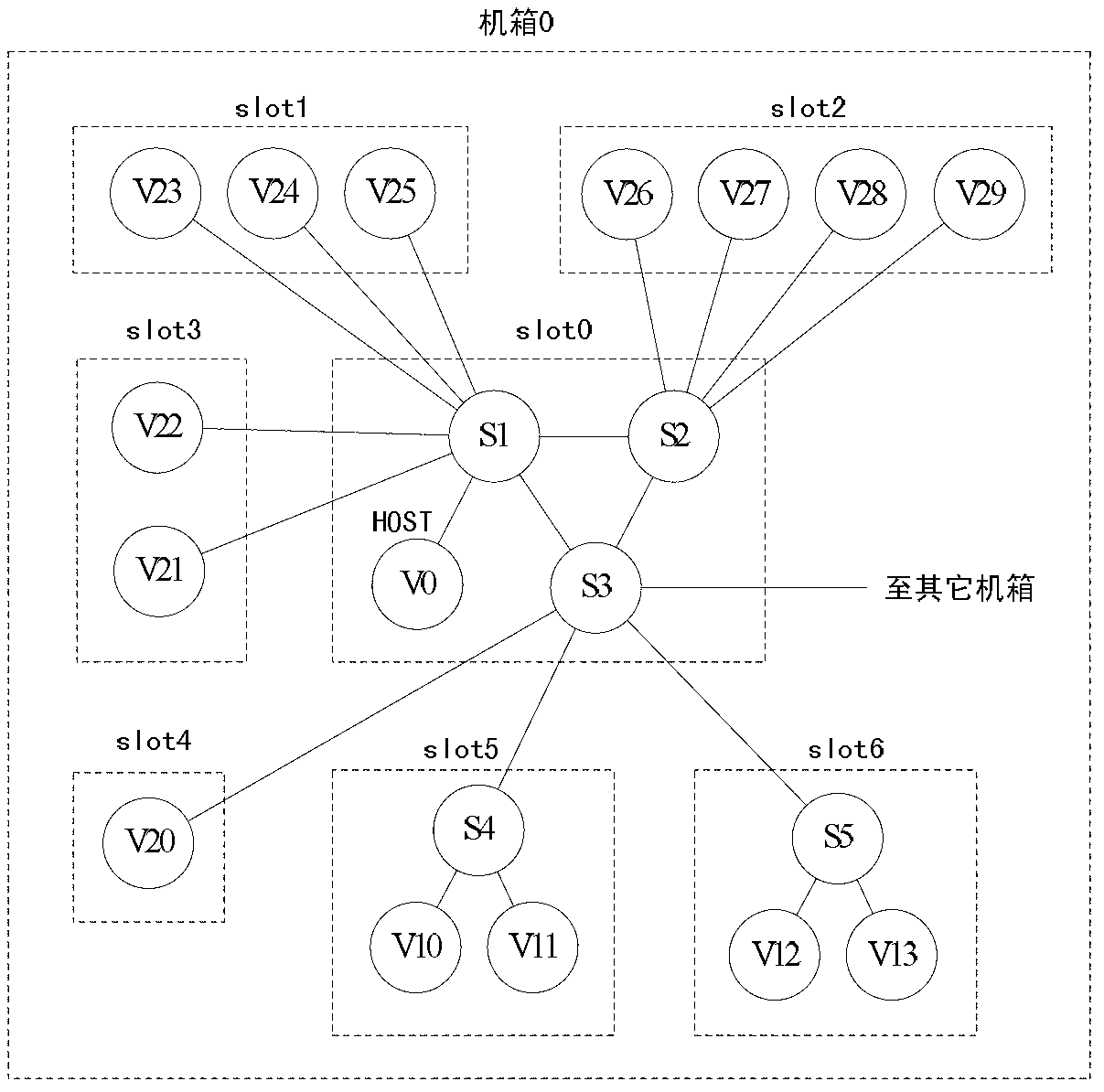

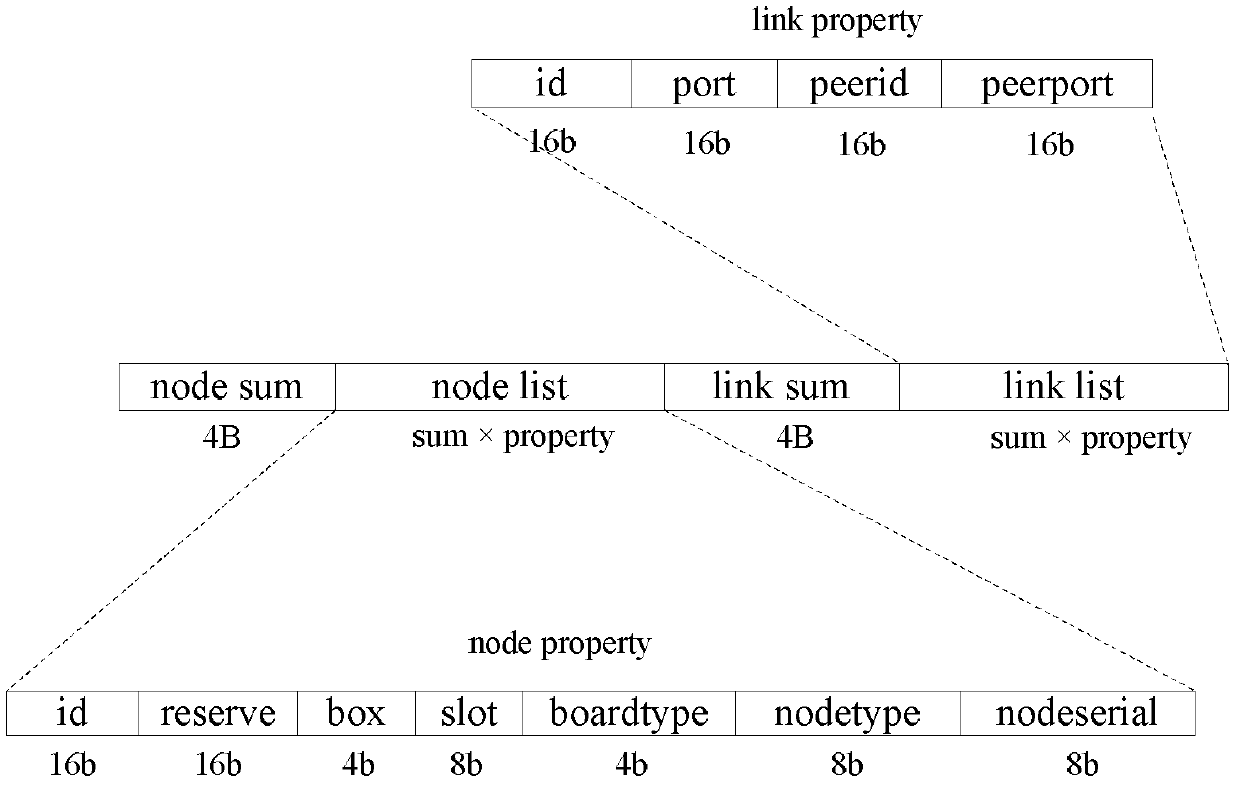

Network enumeration method of Rapid IO bus interconnection system

Inactive | CN103347098A | Add allocation strategy | Reduce coupling | Data switching networks | Electric digital data processing | Network size | Physical address

The invention proposes a network enumeration method for a Rapid IO bus interconnection system. It aims to provide an enumeration method that involves only the host node, adapts to changes in network size, and keeps a fixed mapping between physical addresses and network addresses. The method is realized by the following technical scheme: in a system using a Rapid IO bus as the interconnection architecture, a connected graph G0(V, E) is constructed and stored in the host node as a network template, where V is the vertex set describing the network nodes and each vertex v in V contains the network address and the physical address preassigned to the corresponding node. An allocation strategy based on the template's predefined addresses is added to a network enumeration algorithm consisting of a network enumeration main function and a node-setting subfunction: for each newly discovered network node, a network address and the port number of the enumerated port are used as keys to search G0 for a matching node, and the matching node supplies the address assigned to the new node. In this method, the node-setting function is a subfunction invoked by the enumeration function.

Owner: 10TH RES INST OF CETC
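
The template lookup in the abstract above amounts to a keyed search, sketched below. Several points are assumptions rather than statements from the source: the key is taken to be the network address of the already enumerated node together with the port on which the new node was found, the template G0 is flattened into a dictionary, and the names `TemplateNode`, `set_node` and `enumerate_network` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TemplateNode:
    """One vertex of the network template with its preassigned addresses."""
    network_address: int
    physical_address: str


def set_node(template, parent_address, port):
    """Node-setting subfunction: find the template vertex for a discovered node."""
    node = template.get((parent_address, port))
    if node is None:
        raise KeyError(f"no template entry for address {parent_address}, port {port}")
    return node


def enumerate_network(template, discoveries):
    """Enumeration main function: assign template addresses to discovered nodes.

    discoveries: iterable of (parent_address, port) pairs in discovery order.
    Returns a dict mapping physical address to assigned network address.
    """
    assigned = {}
    for parent_address, port in discoveries:
        node = set_node(template, parent_address, port)
        assigned[node.physical_address] = node.network_address
    return assigned
```

Since every address comes from the stored template, a node found at the same attachment point receives the same network address on every enumeration pass, which is the fixed mapping the abstract aims for.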

Communication mechanism

Active | US7155557B2 | Improve distribution | Aid in placement and routing | Transmission | Memory systems | Engineering | Semiconductor

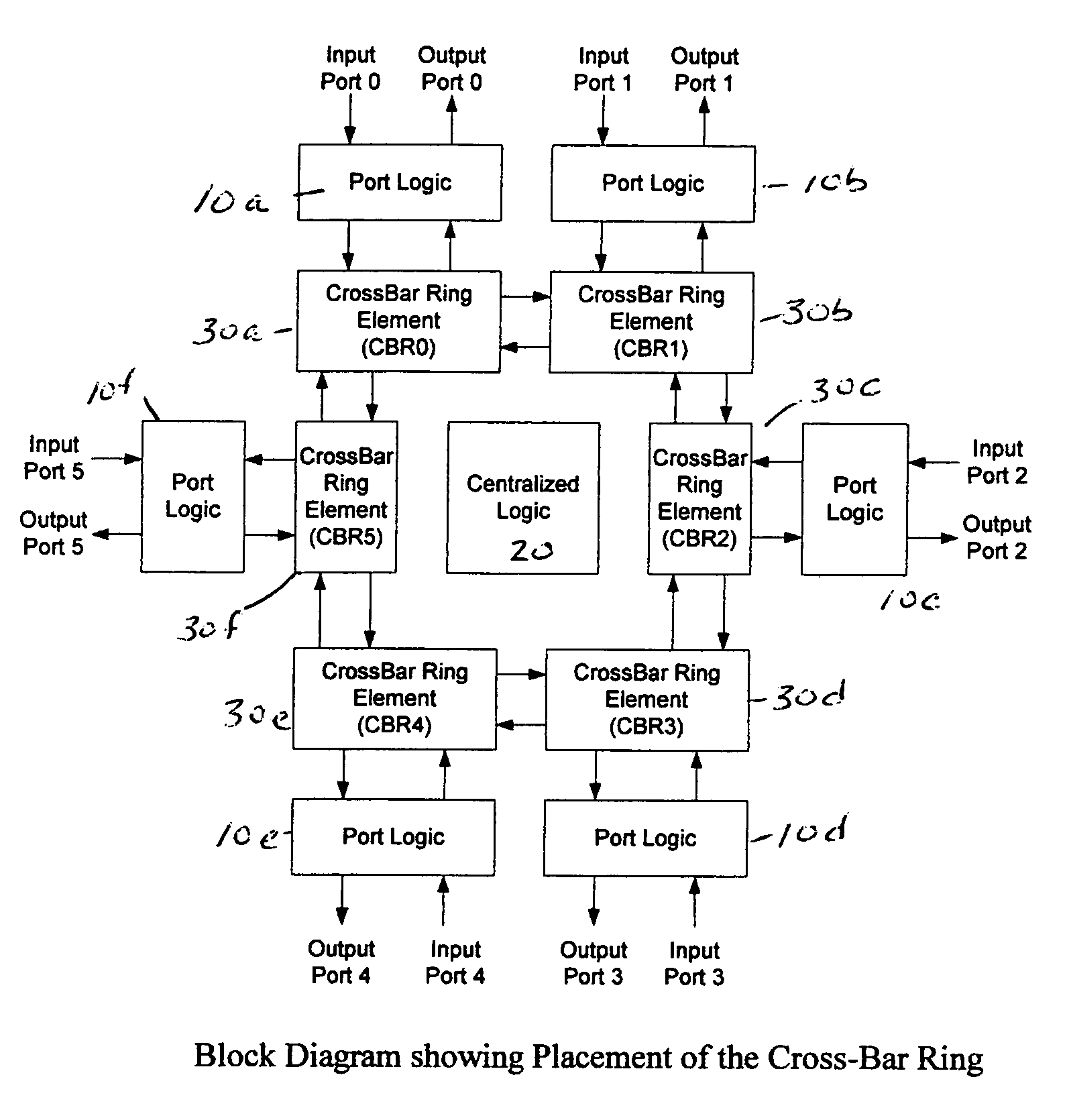

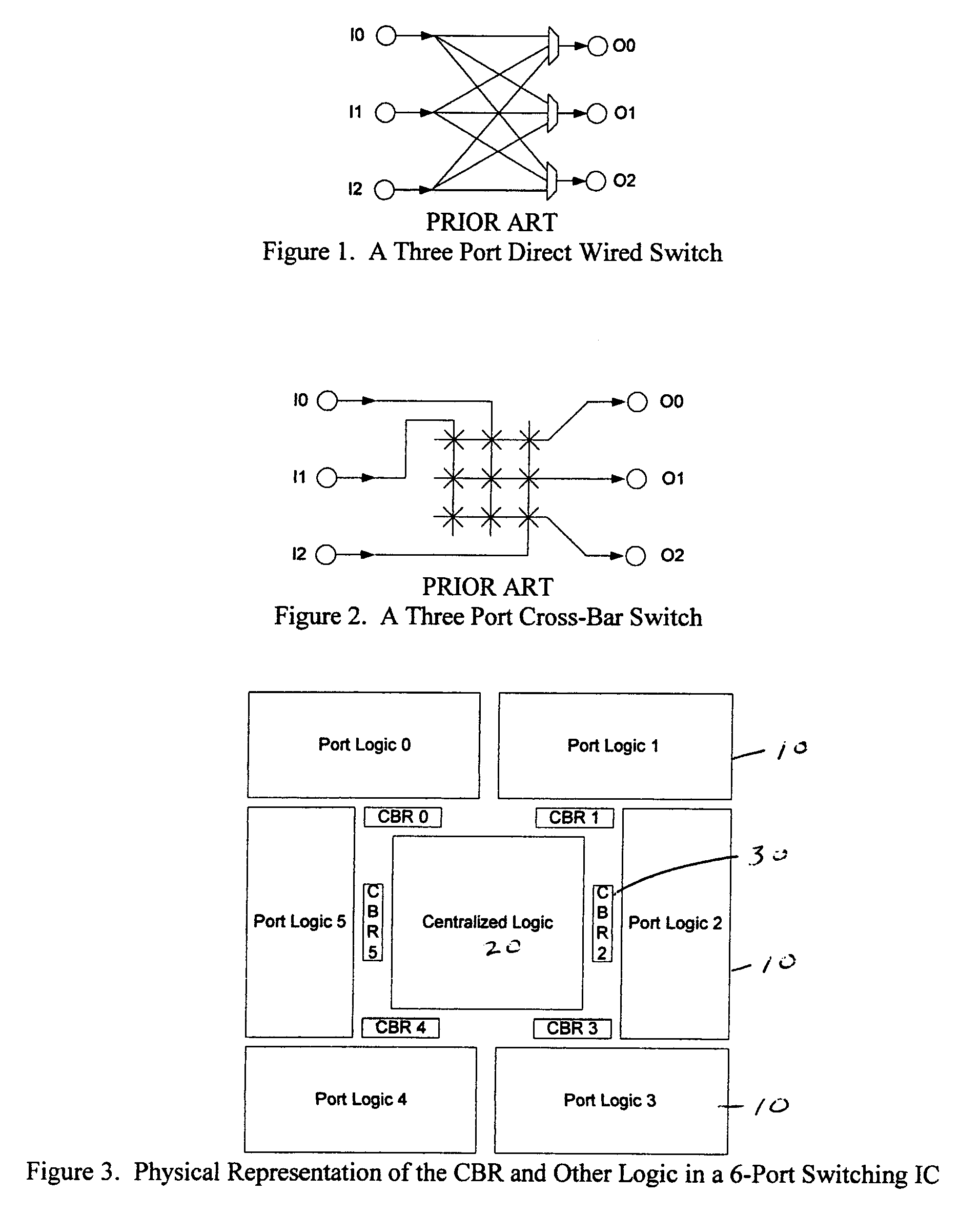

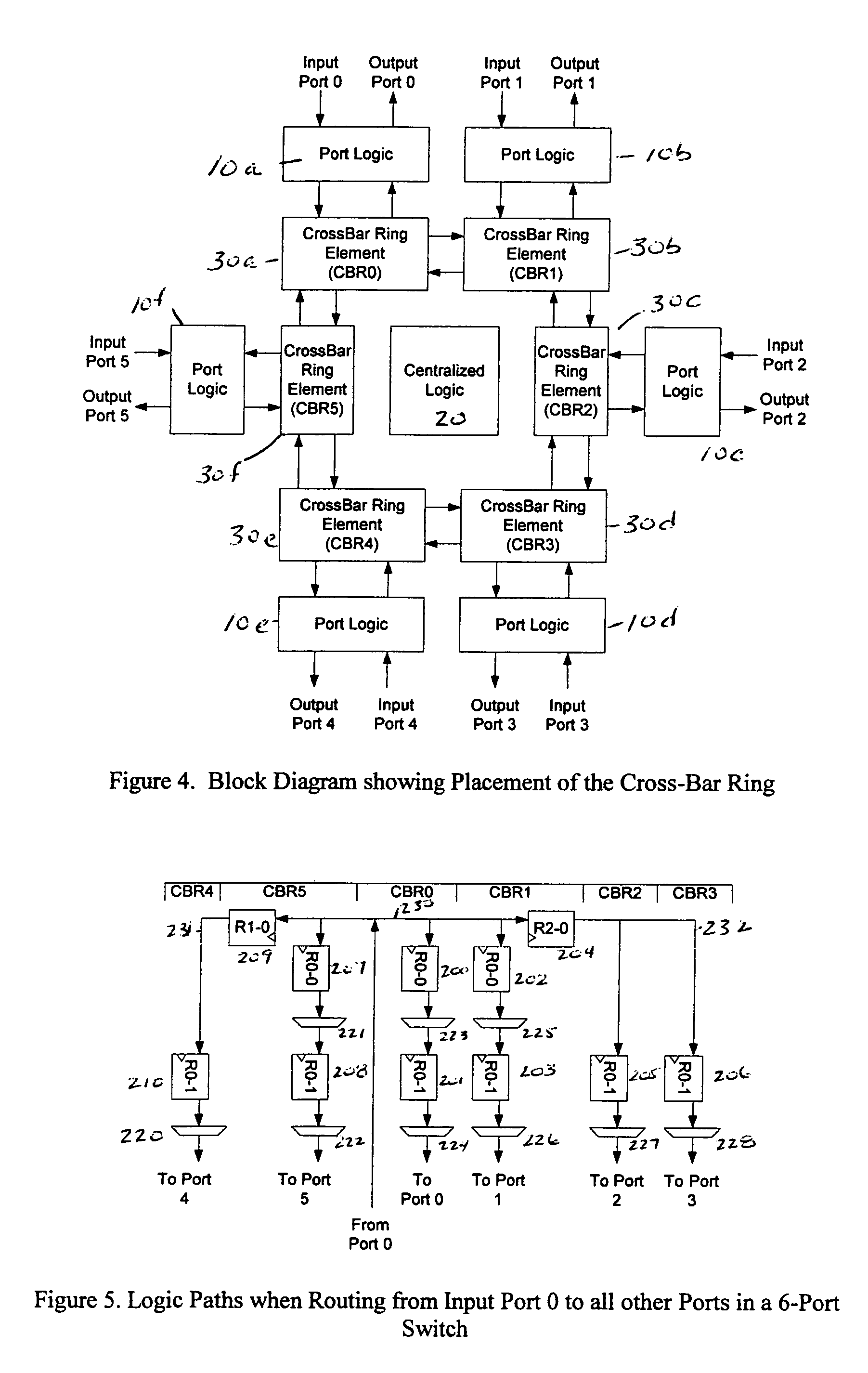

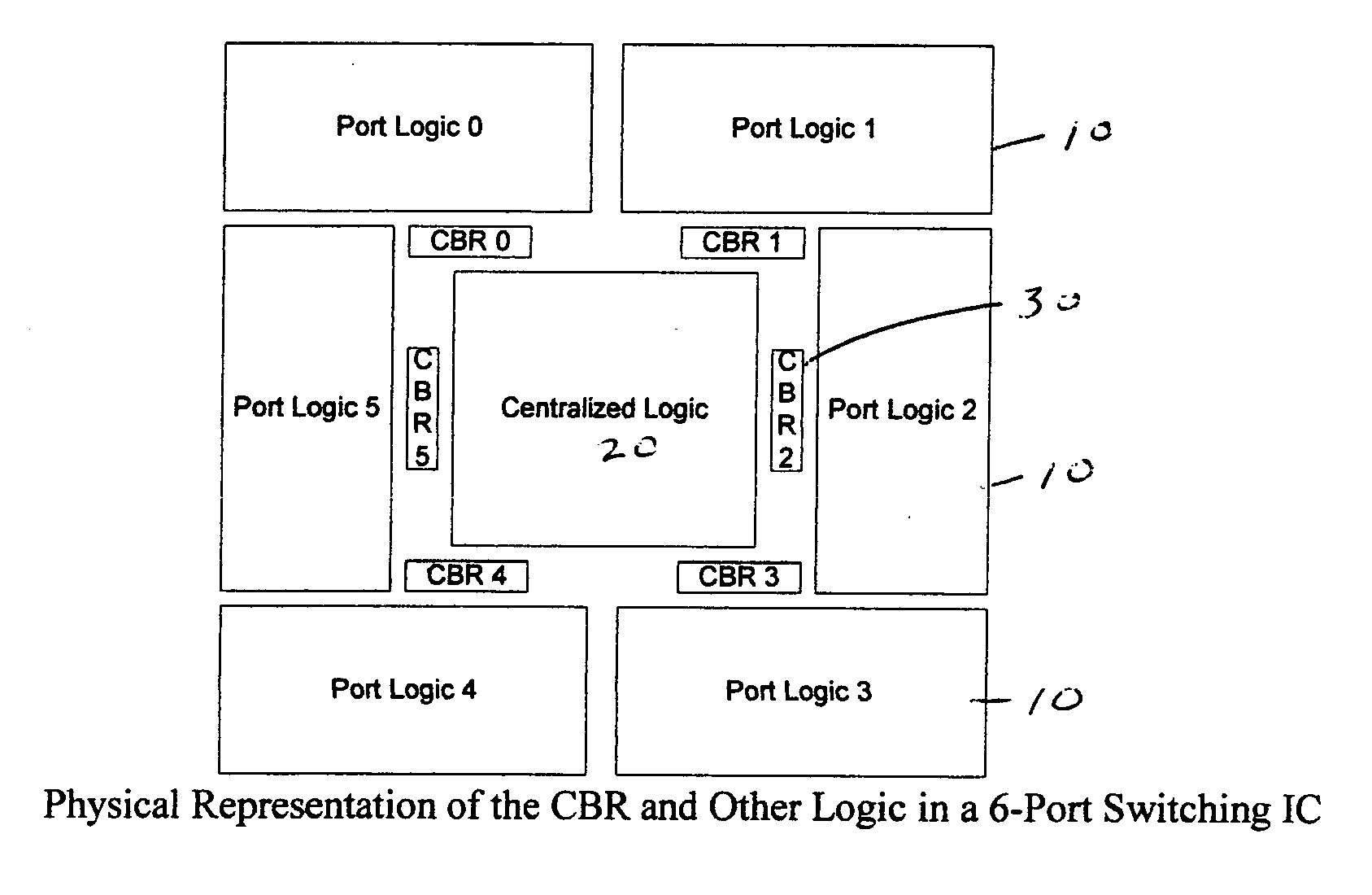

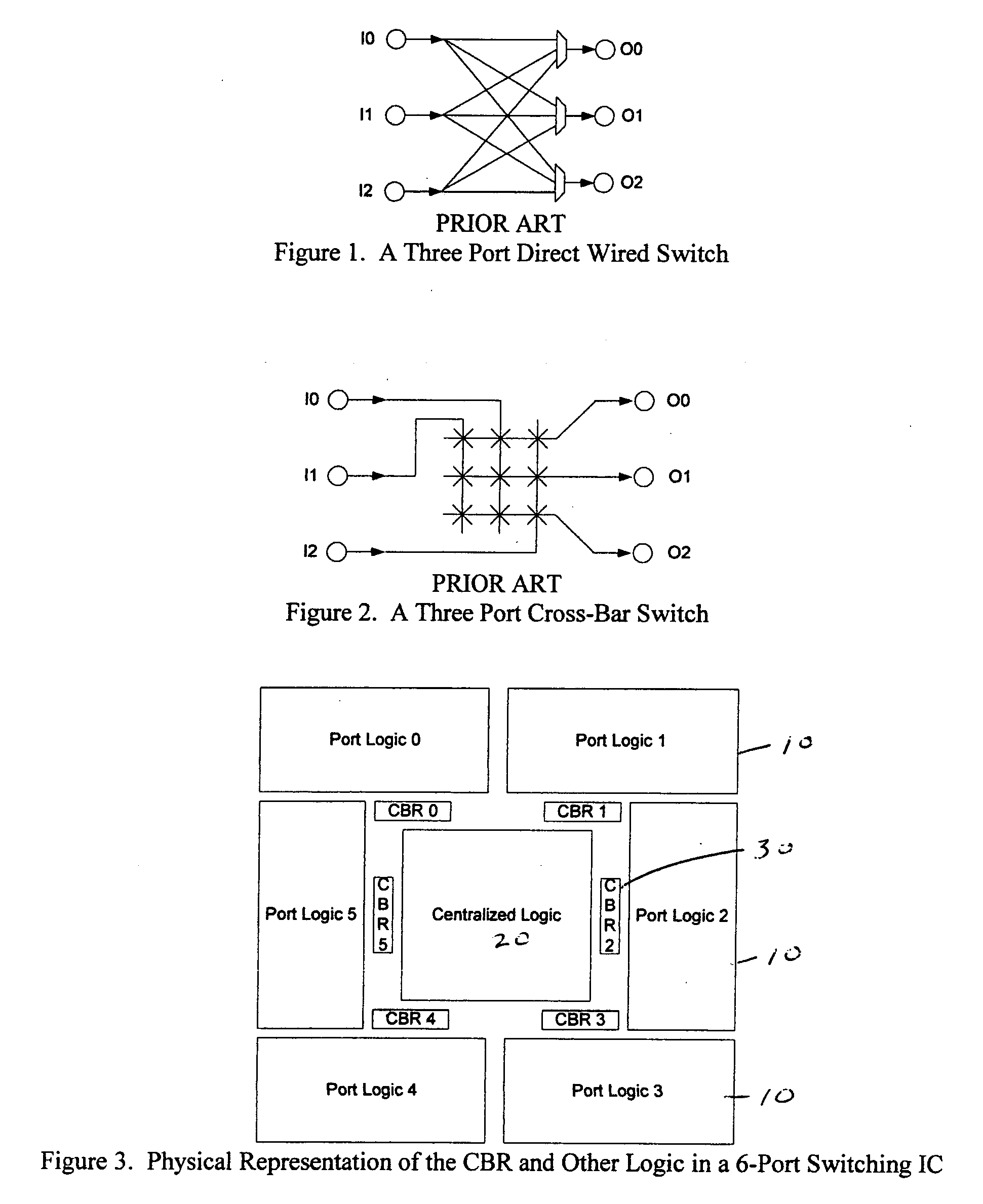

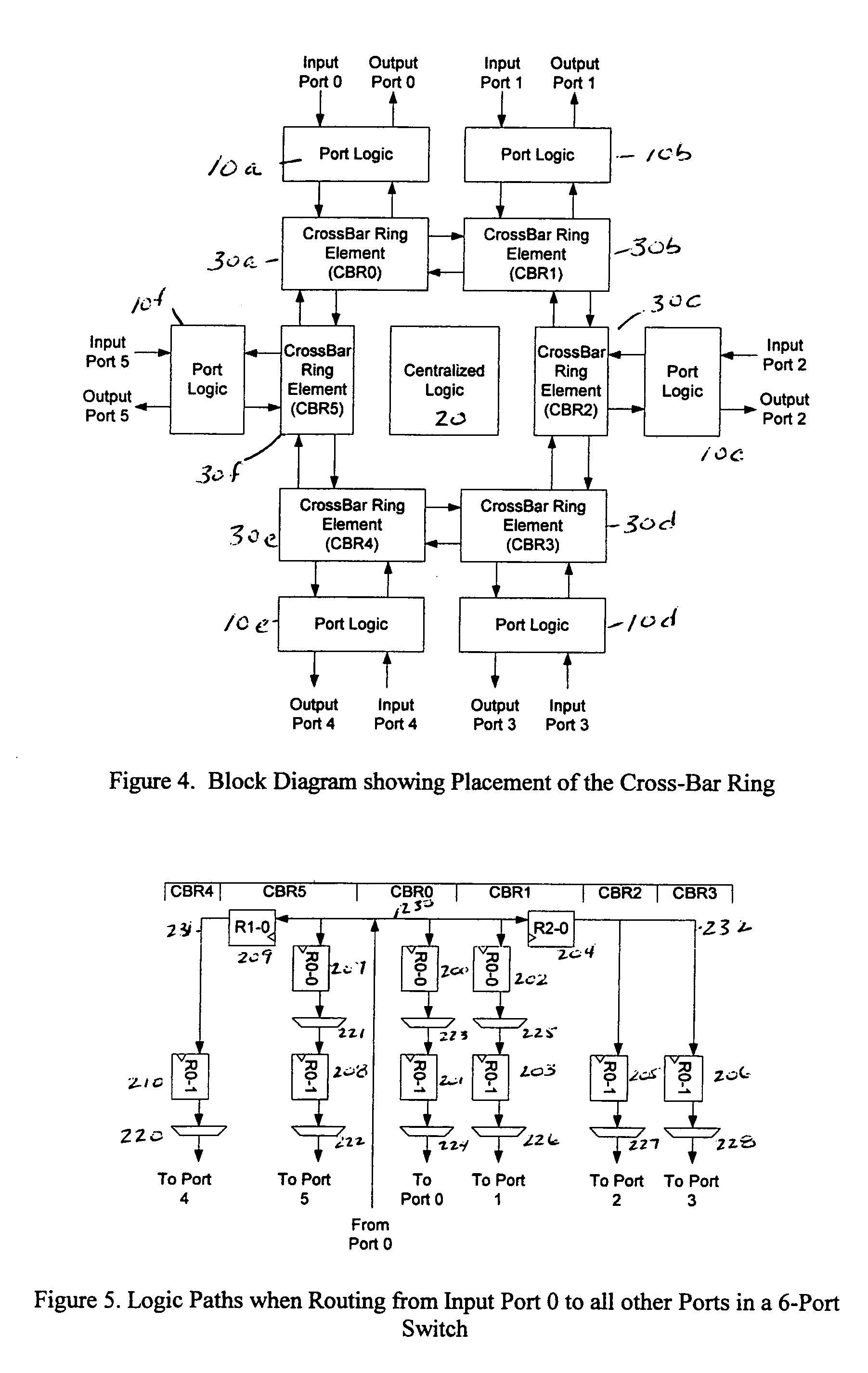

The invention provides an interconnection architecture for semiconductor devices. Cross bar switches are traditionally placed in the center of the IC. However, this location may also be the preferred location for the centralized logic in the IC. This invention, known as a cross bar ring or CBR, provides cross bar switch functionality in a manner that can be easily distributed around the chip. Typically, it can fit in the routing channels between other functional blocks, thereby allowing other centralized functions to be placed in the center of the IC. The CBR is defined so that it can be partitioned into separate modules, which greatly aids in the placement and routing of wires. Furthermore, the architecture is defined such that the CBR can use storage elements, allowing it to be pipelined so that the wire distances can be increased while still maintaining a high internal clock speed. The use of storage elements also allows the CBR to provide a deterministic delay between any two locations on the IC and, if desired, to ensure a constant delay regardless of source and destination.

Owner: CALLAHAN CELLULAR L L C

Emulation processor interconnection architecture

Active | US20060190237A1 | Reduce area | High speed | CAD circuit design | Software simulation/interpretation/emulation | Multiplexer | Verification system

Owner: CADENCE DESIGN SYST INC

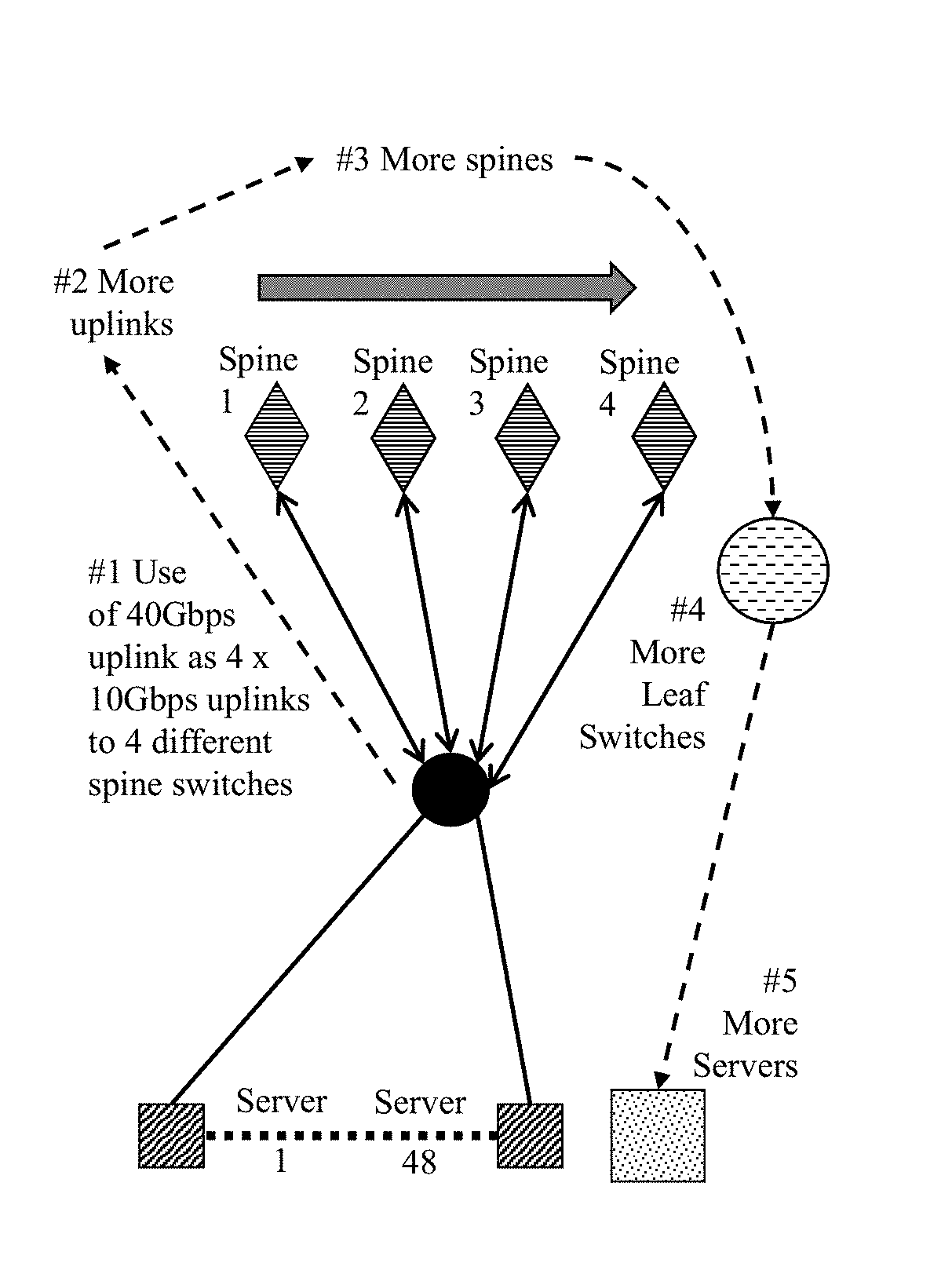

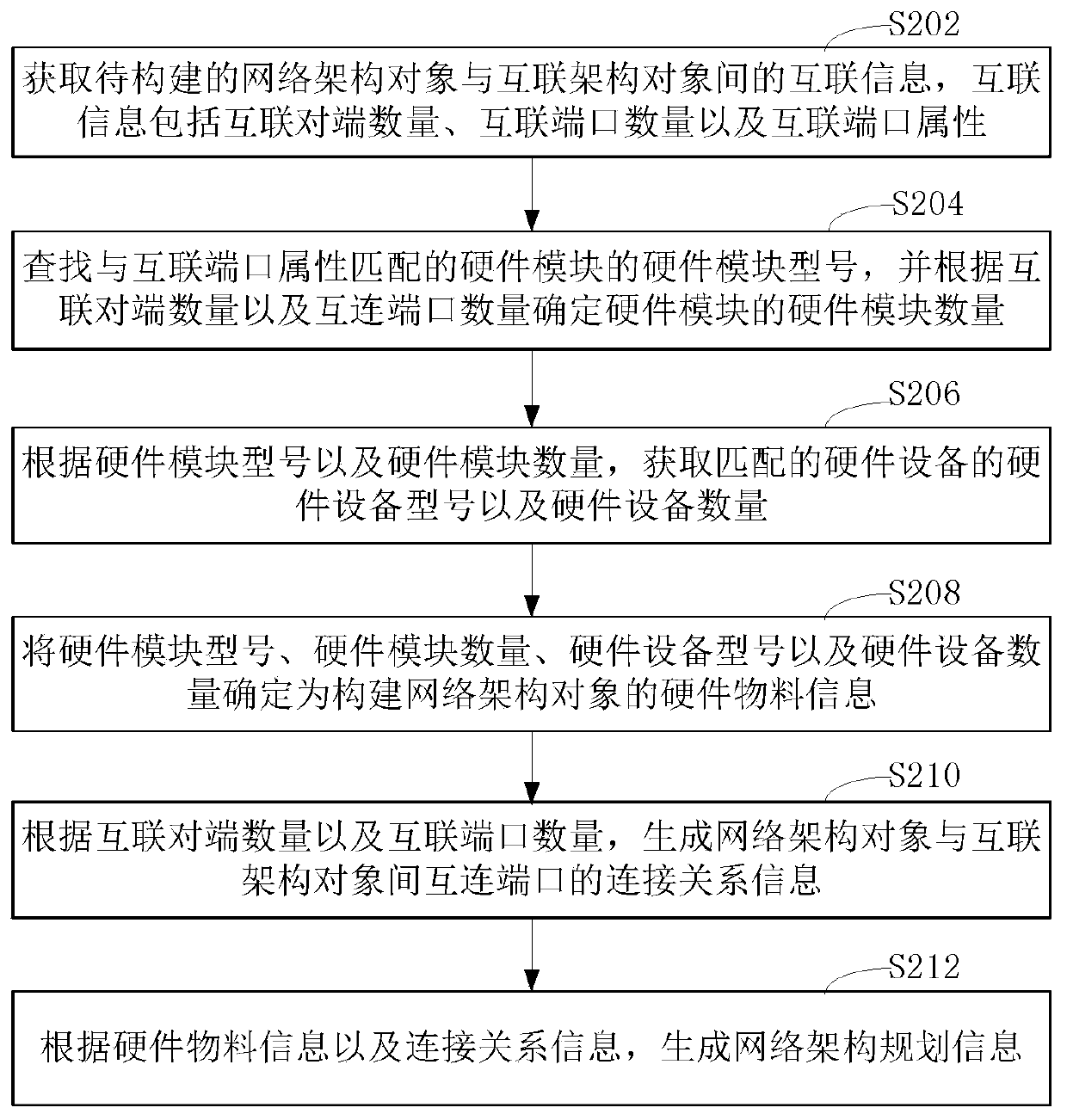

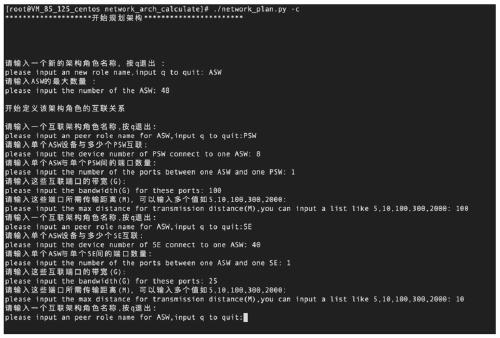

Network architecture generation method and device, readable storage medium and computer equipment

Active | CN111193603A | Quantitative demand | Avoid wasting | Data switching networks | Computer hardware | Computer architecture

The invention relates to a network architecture planning information generation method and device, a computer-readable storage medium and computer equipment. The method comprises the steps of: obtaining interconnection information between a to-be-constructed network architecture object and an interconnection architecture object; searching for the hardware module model of a hardware module matched with the interconnection port attribute, and determining the number of hardware modules according to the number of interconnection opposite ends and the number of interconnection ports; obtaining hardware material information for constructing the network architecture object according to the hardware module model and the number of hardware modules; generating connection relation information of the interconnection ports between the network architecture object and the interconnection architecture object according to the number of interconnection opposite ends and the number of interconnection ports; and generating network architecture planning information according to the hardware material information and the connection relation information. With the scheme provided by the invention, network architecture planning information meeting the construction requirements of the business servers can be generated dynamically, and the waste of equipment resources is effectively avoided.

Owner: TENCENT TECH (SHENZHEN) CO LTD

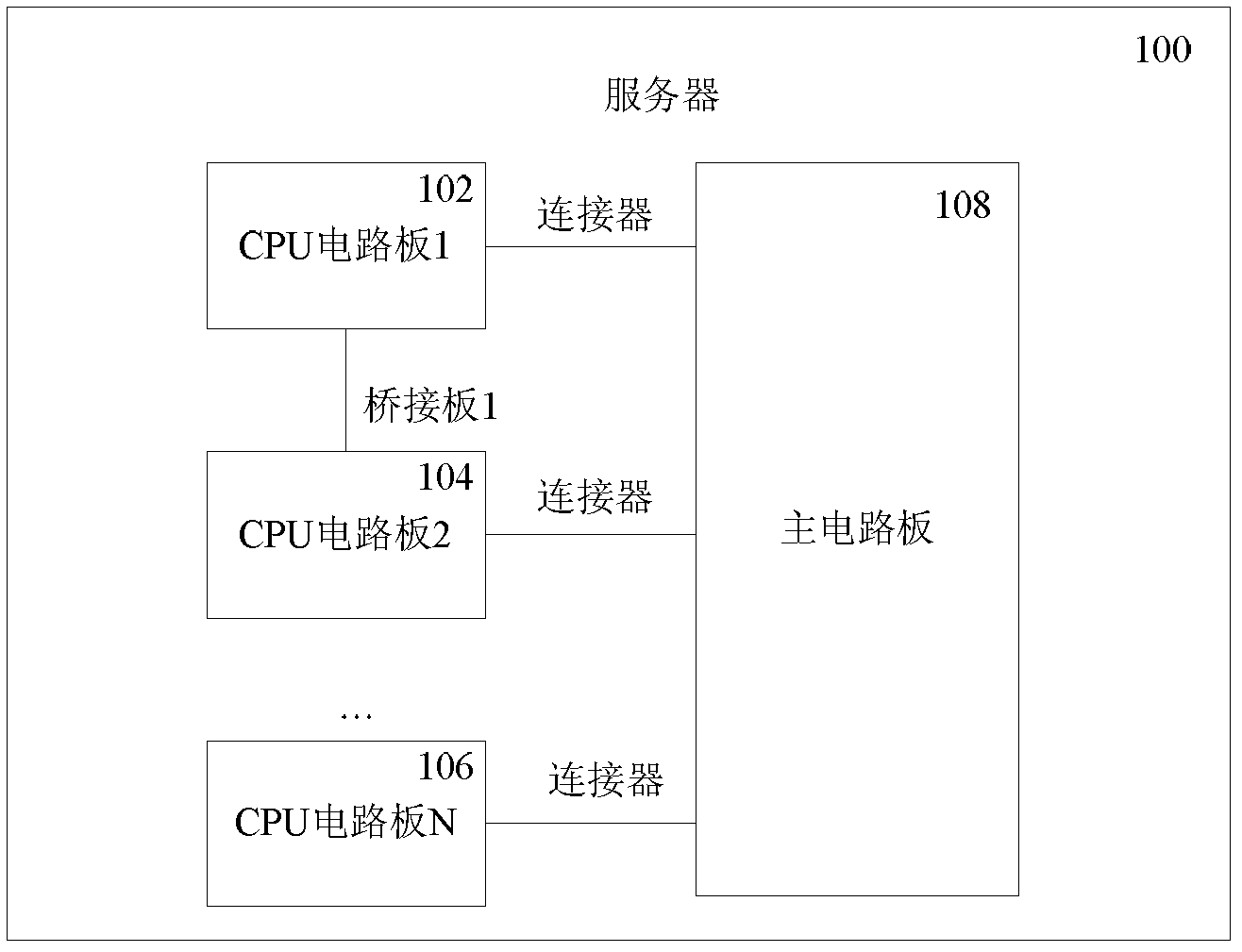

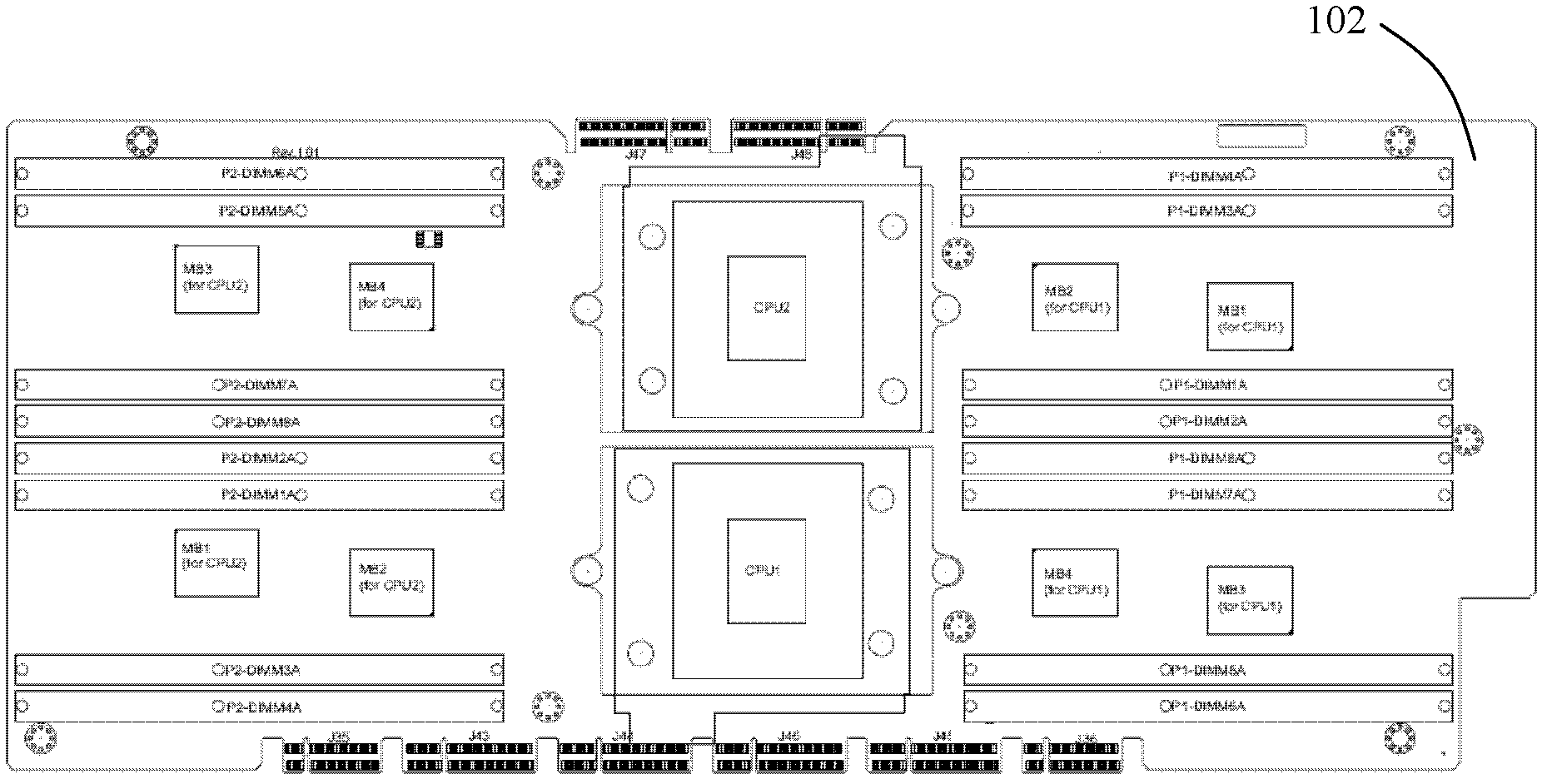

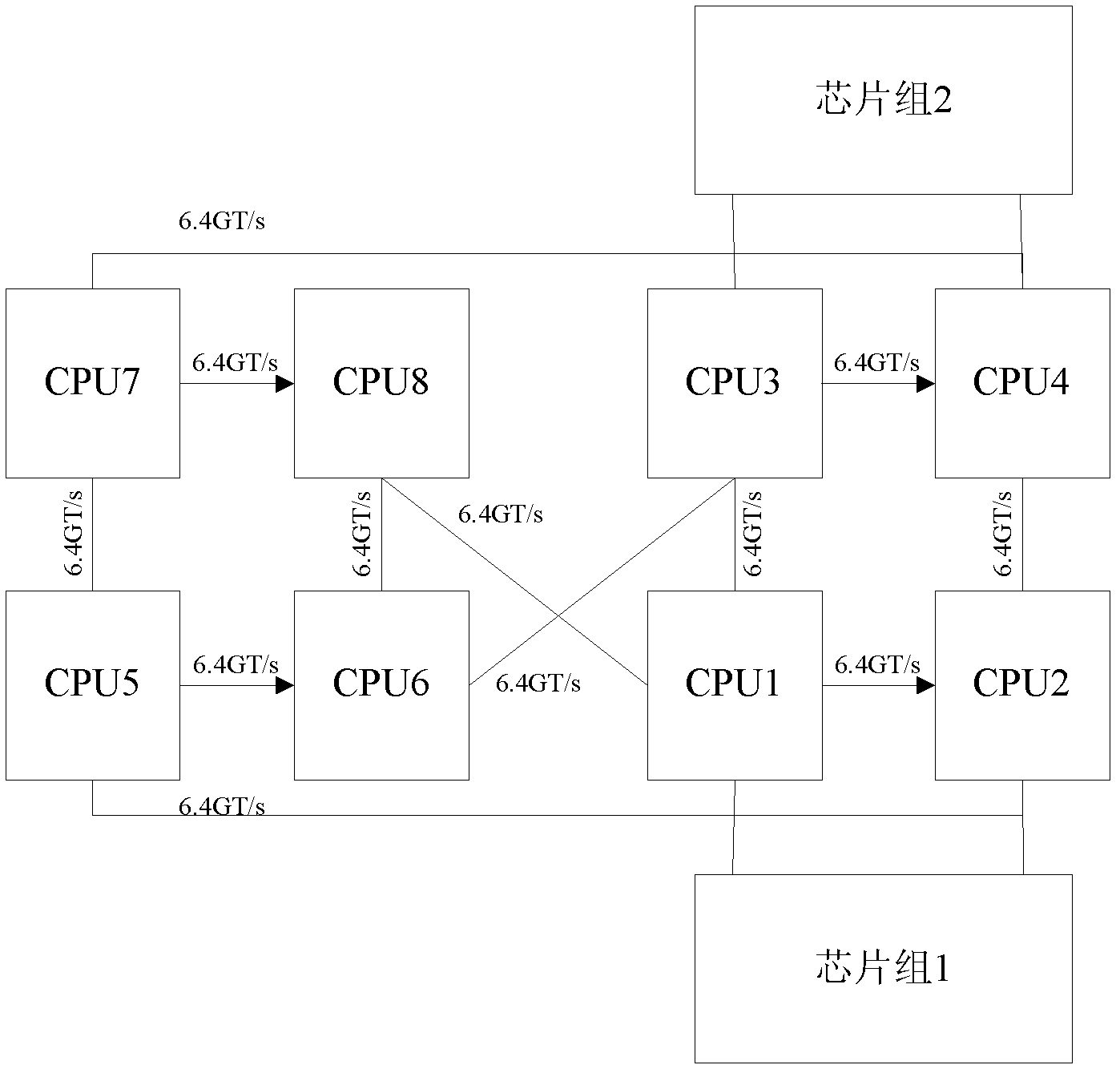

Server

Inactive | CN102520769A | Improve computing power | Improve stability | Digital processing power distribution | Information transmission | Engineering

Owner: SUGON INFORMATION IND

Communication mechanism

Active | US20060069842A1 | Improve distribution | Aid in placement and routing | Transmission | Memory systems | Device material | Engineering

The invention provides an interconnection architecture for semiconductor devices. Cross bar switches are traditionally placed in the center of the IC. However, this location may also be the preferred location for the centralized logic in the IC. This invention, known as a cross bar ring or CBR, provides cross bar switch functionality in a manner that can be easily distributed around the chip. Typically, it can fit in the routing channels between other functional blocks, thereby allowing other centralized functions to be placed in the center of the IC. The CBR is defined so that it can be partitioned into separate modules, which greatly aids in the placement and routing of wires. Furthermore, the architecture is defined such that the CBR can use storage elements, allowing it to be pipelined so that the wire distances can be increased while still maintaining a high internal clock speed. The use of storage elements also allows the CBR to provide a deterministic delay between any two locations on the IC and, if desired, to ensure a constant delay regardless of source and destination.

Owner: CALLAHAN CELLULAR L L C

Optical interconnection methods and systems exploiting mode multiplexing

Active | US20170155465A9 | Multiplex system selection arrangements | Wavelength-division multiplex systems | Optical space | Engineering

Optical solutions to address and overcome the issues of superseding / replacing electrical interconnection networks have generally exploited some form of optical space switching. Such optical space switching architectures required multiple switching elements, leading to increased power consumption and footprint issues. Accordingly, it would be beneficial for new optical, e.g. fiber optic or integrated optical, interconnection architectures to address the traditional hierarchical time-division multiplexed (TDM) space-based routing and interconnection to provide reduced latency, increased flexibility, lower cost, and lower power consumption. It would further be beneficial to exploit networks operating in multiple domains by overlaying mode division multiplexing to provide increased throughput in bus, point-to-point and multi-cast networks, for example, discretely or in combination with wavelength division multiplexing.

Owner: MCGILL UNIV +1

Data center path switch with improved path interconnection architecture

Active | US9756404B2 | Easy to control | Simplifying interconnection | Multiplex system selection arrangements | Optical multiplex | Data center | Switching signal

A data center path switch architecture permits path switching of the signal path of incoming signals to one or more output paths in real time without the need for manual intervention, and without the delays associated with current data center network switches. In this architecture, a switching core is capable of switching signals directly from the ingress of the switching core to alternate destination ports in real time, under either software or hardware control.

Owner: FIBER MOUNTAIN INC

Methods and systems relating to optical networks

Active | US20200052786A1 | Reduce restrictions | Multiplex system selection arrangements | Wavelength-division multiplex systems | Cloud user | Engineering

Data center interconnections, which encompass WSCs as well as traditional data centers, have become both a bottleneck and a cost / power issue for cloud computing providers, cloud service providers and cloud users generally. Fiber optic technologies already play critical roles in data center operations and will increasingly do so in the future. The goal is to move data as fast as possible with the lowest latency, the lowest cost and the smallest space consumption on the server blade and throughout the network. Accordingly, it would be beneficial for new fiber optic interconnection architectures to address the traditional hierarchical time-division multiplexed (TDM) routing and interconnection and to provide reduced latency, increased flexibility, lower cost and lower power consumption, with interconnections exploiting N×M×D Gbps photonic interconnects in which N channels are provided, each carrying M wavelength division signals at D Gbps.

Owner: AEPONYX

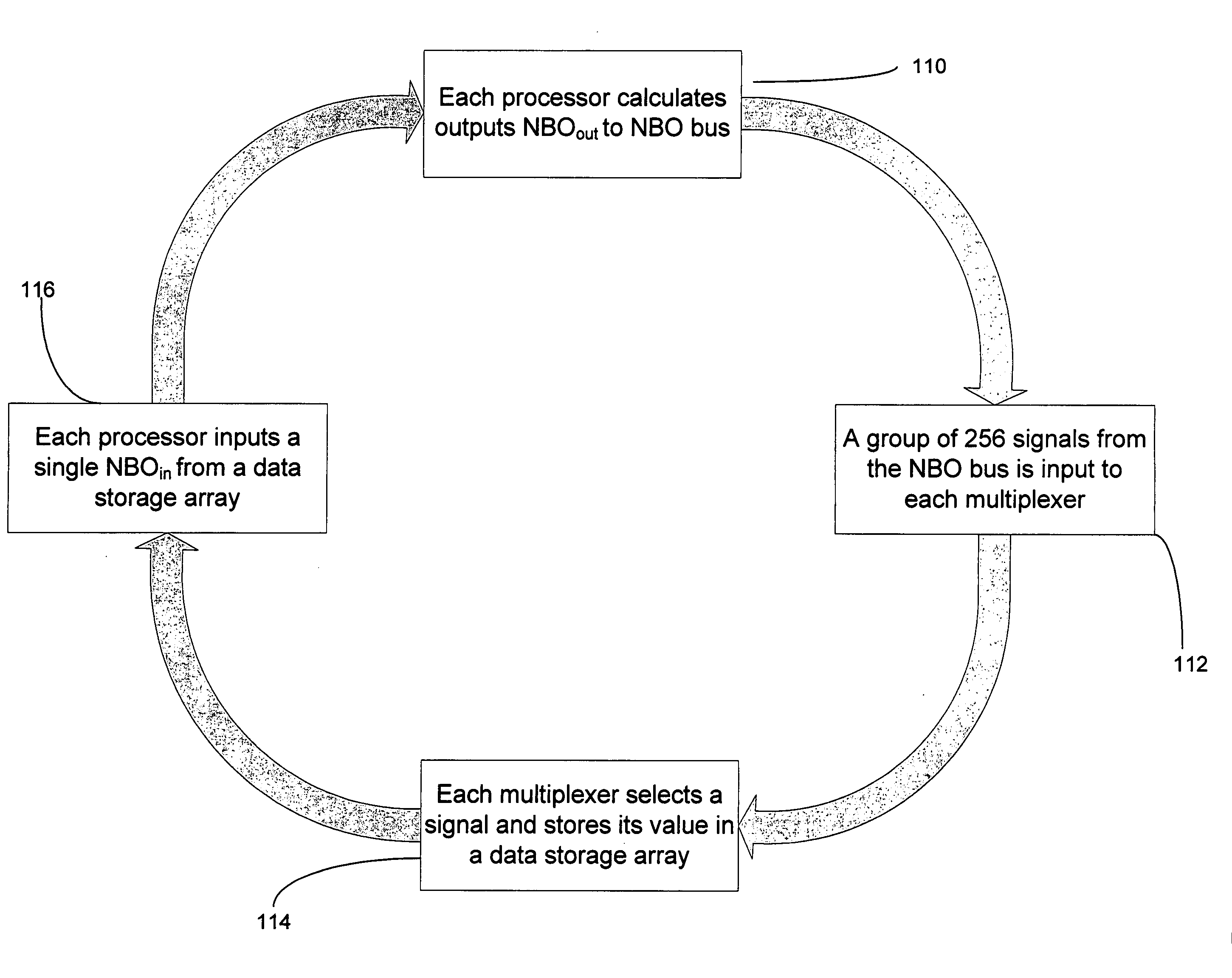

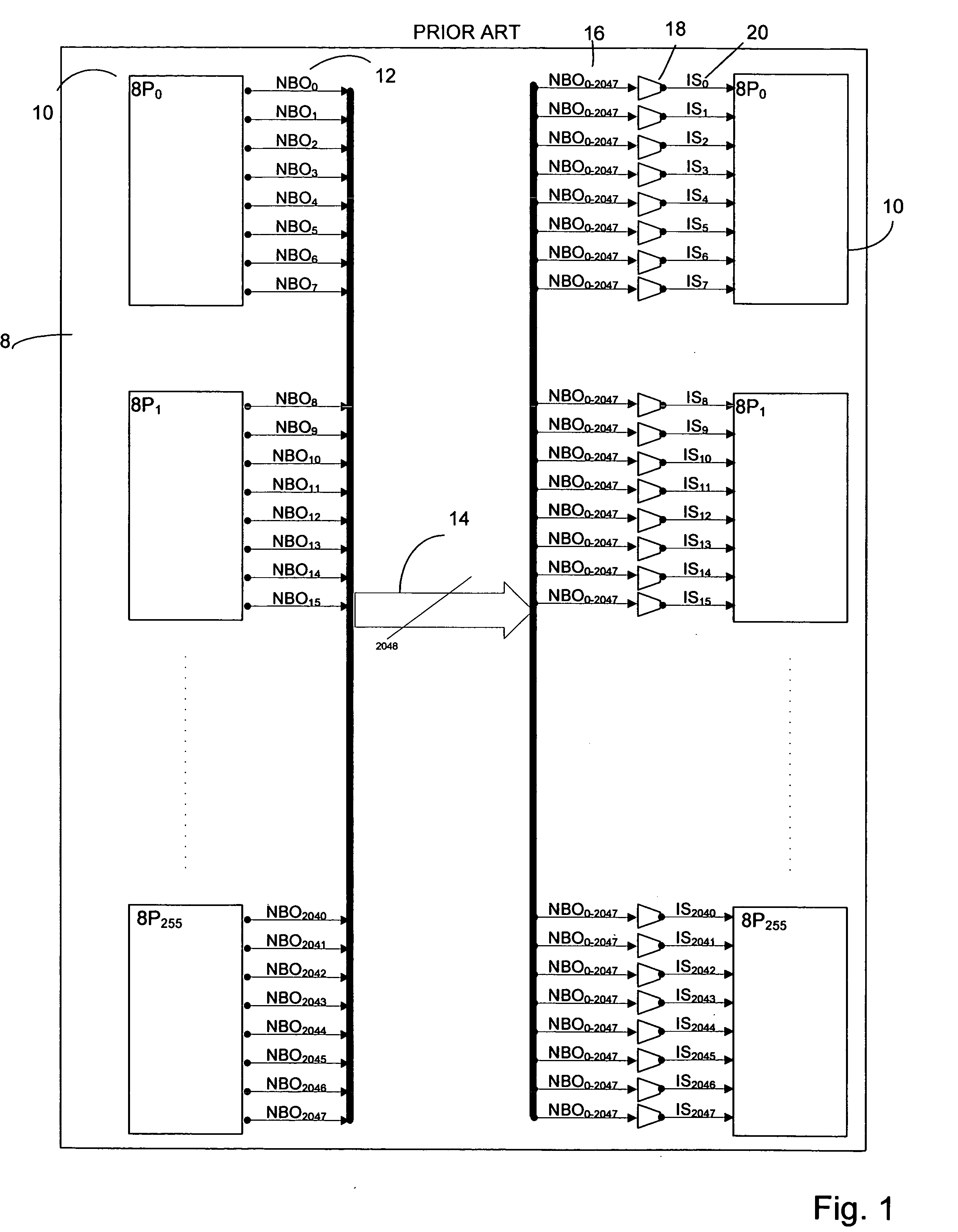

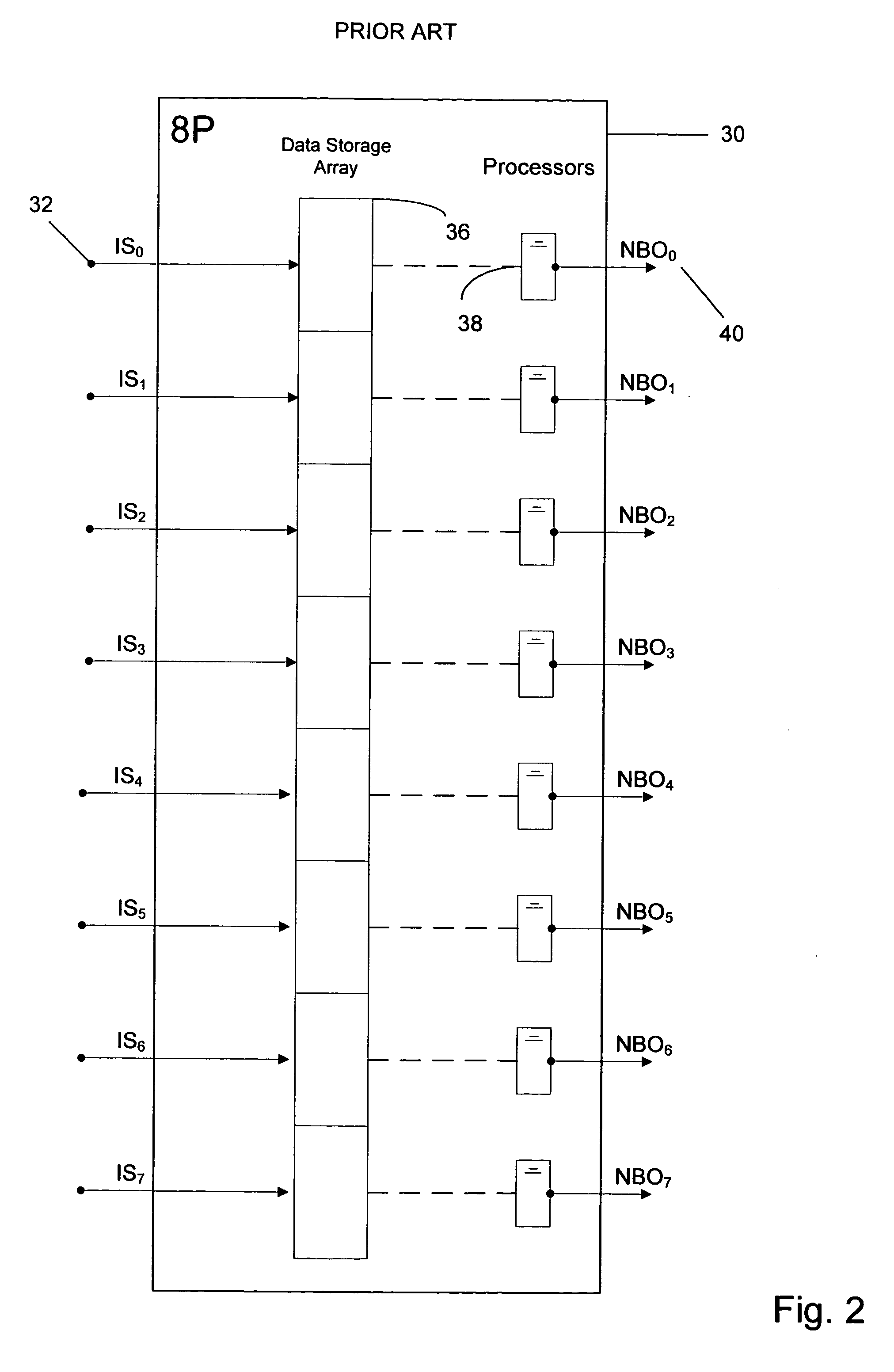

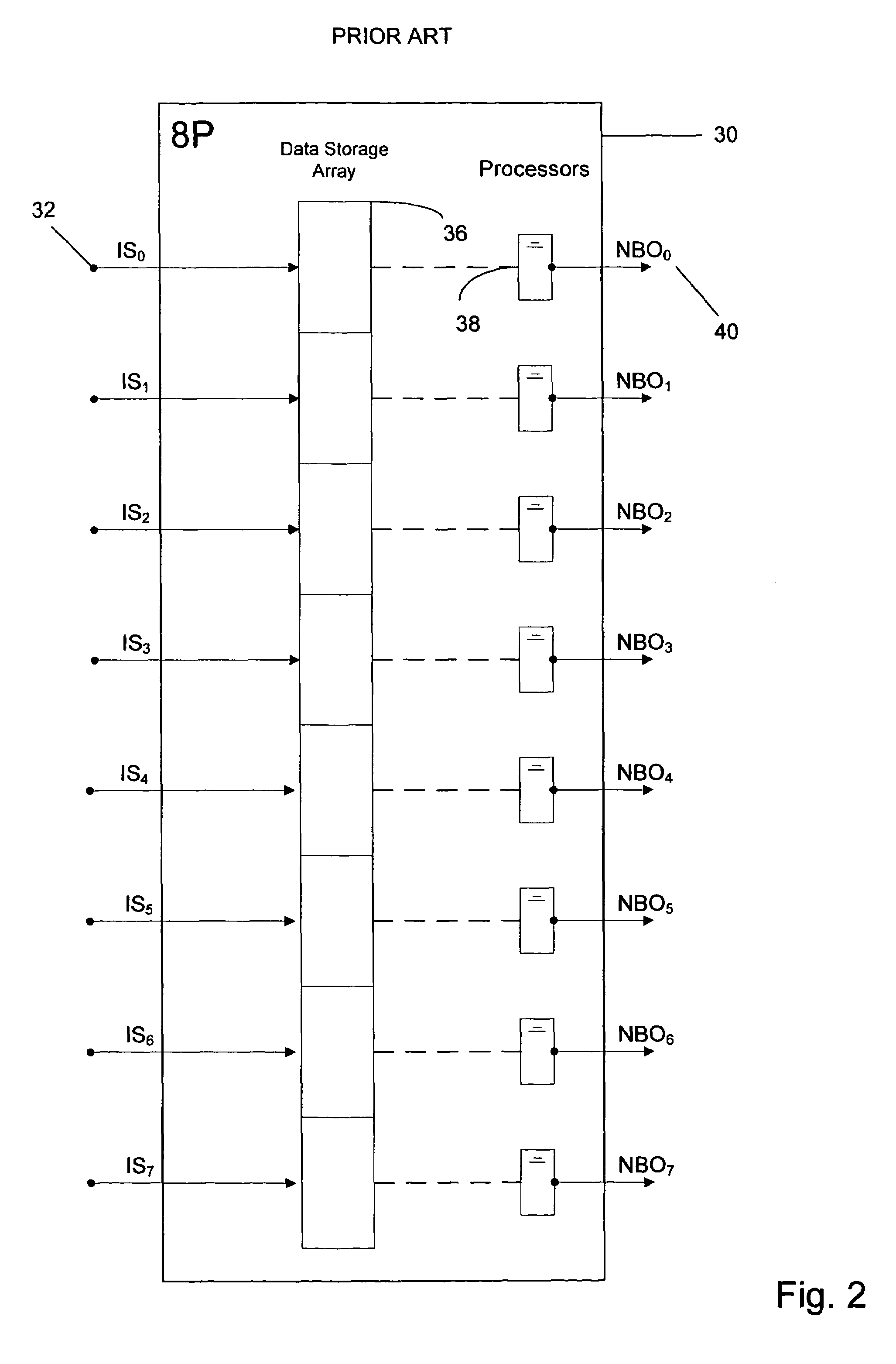

Emulation processor interconnection architecture

Active | US7555423B2 | Reduce the amount required | Reduce power consumption | CAD circuit design | Software simulation/interpretation/emulation | Multiplexer | Verification system

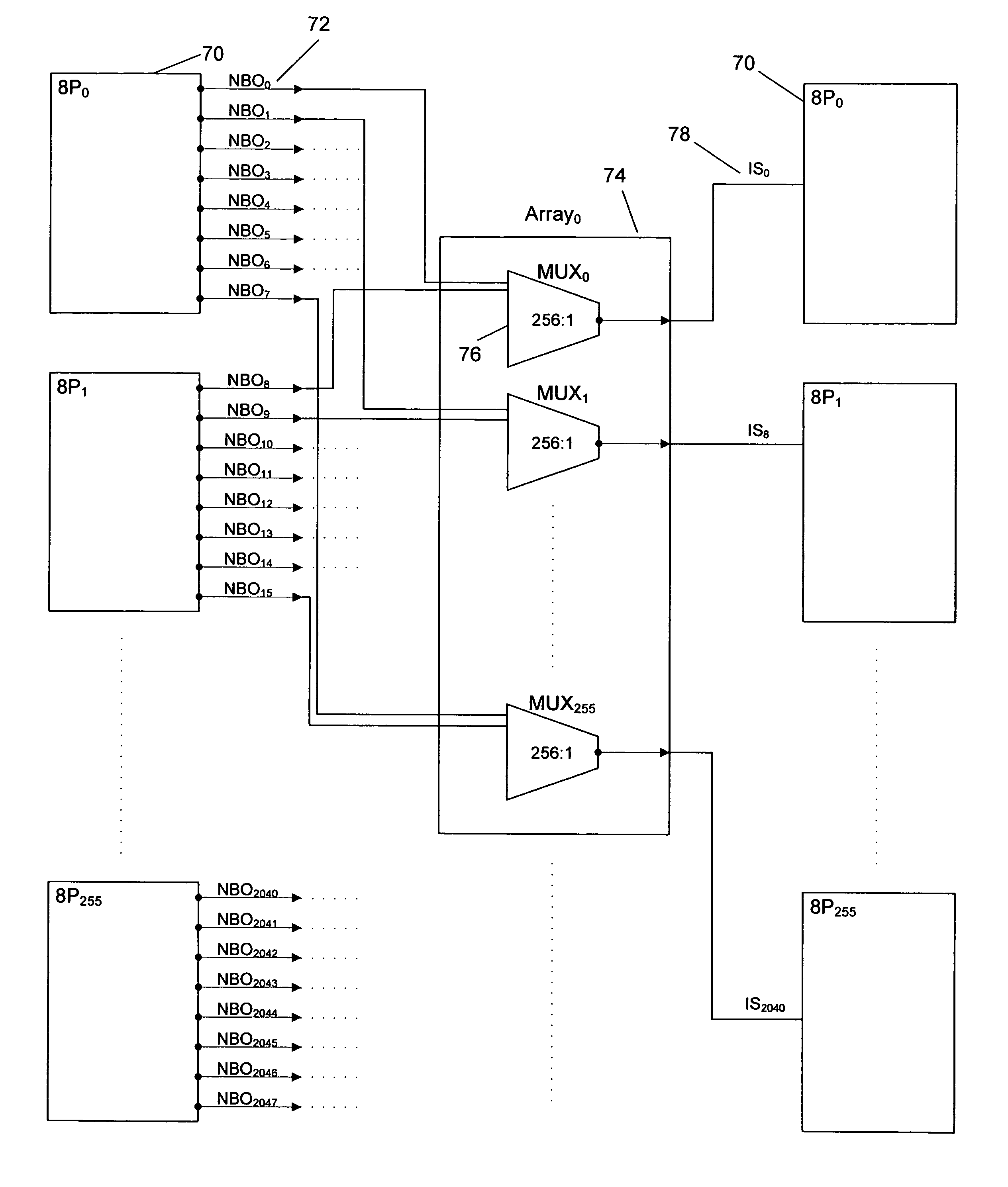

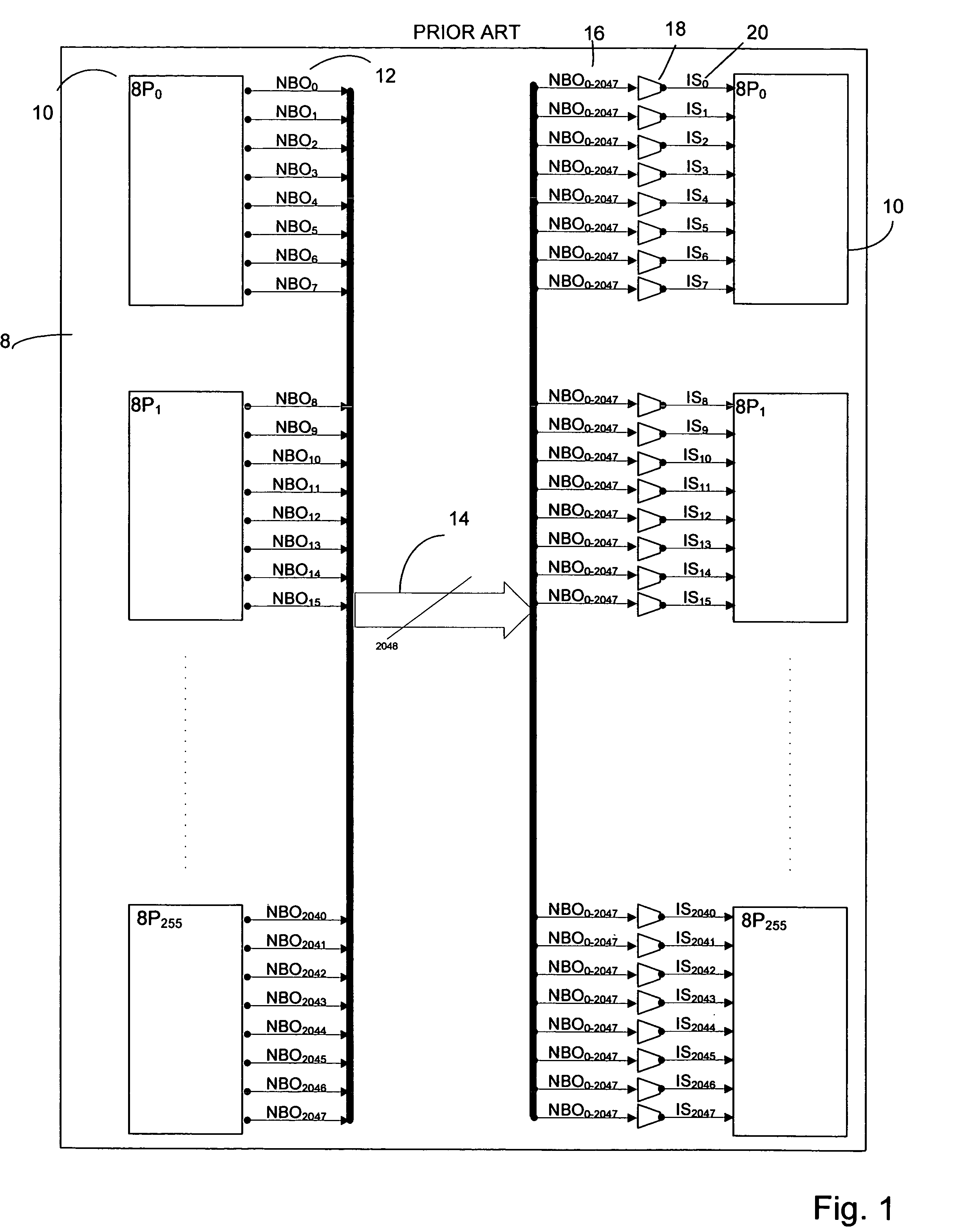

The present system and methods are directed to the interconnection of clusters of emulation processors in a software-driven hardware design verification system. The processors each output one NBO output signal. The clusters are interconnected by partitioning a common NBO bus into a number of smaller NBO busses, each carrying unique NBO signals but together carrying every NBO. Each of the smaller NBO busses is passed into a series of multiplexers, each dedicated to a particular processor. The multiplexers select a signal for output back to the emulation clusters. The multiplexers that handle these smaller NBO busses are narrower than was previously required, thus reducing the amount of power, interconnect, and area required by the multiplexer array and dedicated interconnect.

Owner: CADENCE DESIGN SYST INC
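
The bus partitioning described in the abstract above can be modeled in software as splitting a list of signals into disjoint slices and selecting from one slice. This sketch assumes equal-width, contiguous slices, which the source does not specify; the names `partition_bus` and `mux_select` are hypothetical, and how sub-busses are paired with each processor's multiplexers is left out because the abstract does not say.

```python
def partition_bus(signals, num_busses):
    """Split a common bus into equal-width sub-busses with no signal repeated.

    Assumes the bus width divides evenly; together the slices carry every signal.
    """
    if num_busses <= 0 or len(signals) % num_busses != 0:
        raise ValueError("bus width must be a positive multiple of num_busses")
    width = len(signals) // num_busses
    return [signals[i * width:(i + 1) * width] for i in range(num_busses)]


def mux_select(sub_bus, select):
    """Model a multiplexer: its input count equals the width of one sub-bus."""
    return sub_bus[select]
```

With a bus of N signals split into k sub-busses, each modeled multiplexer has N/k inputs instead of N, which corresponds to the narrower multiplexers the abstract credits with the power, interconnect and area savings.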

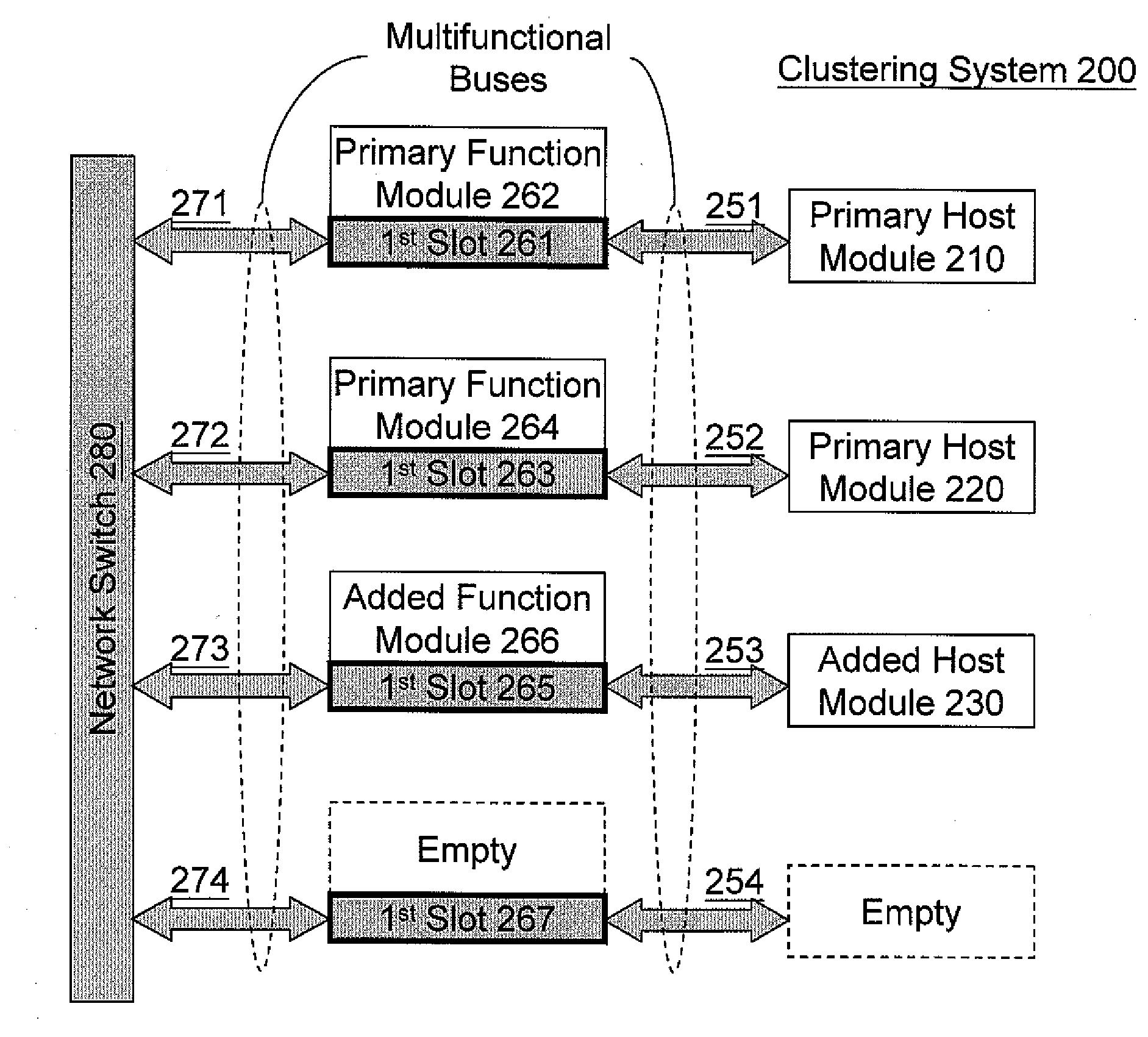

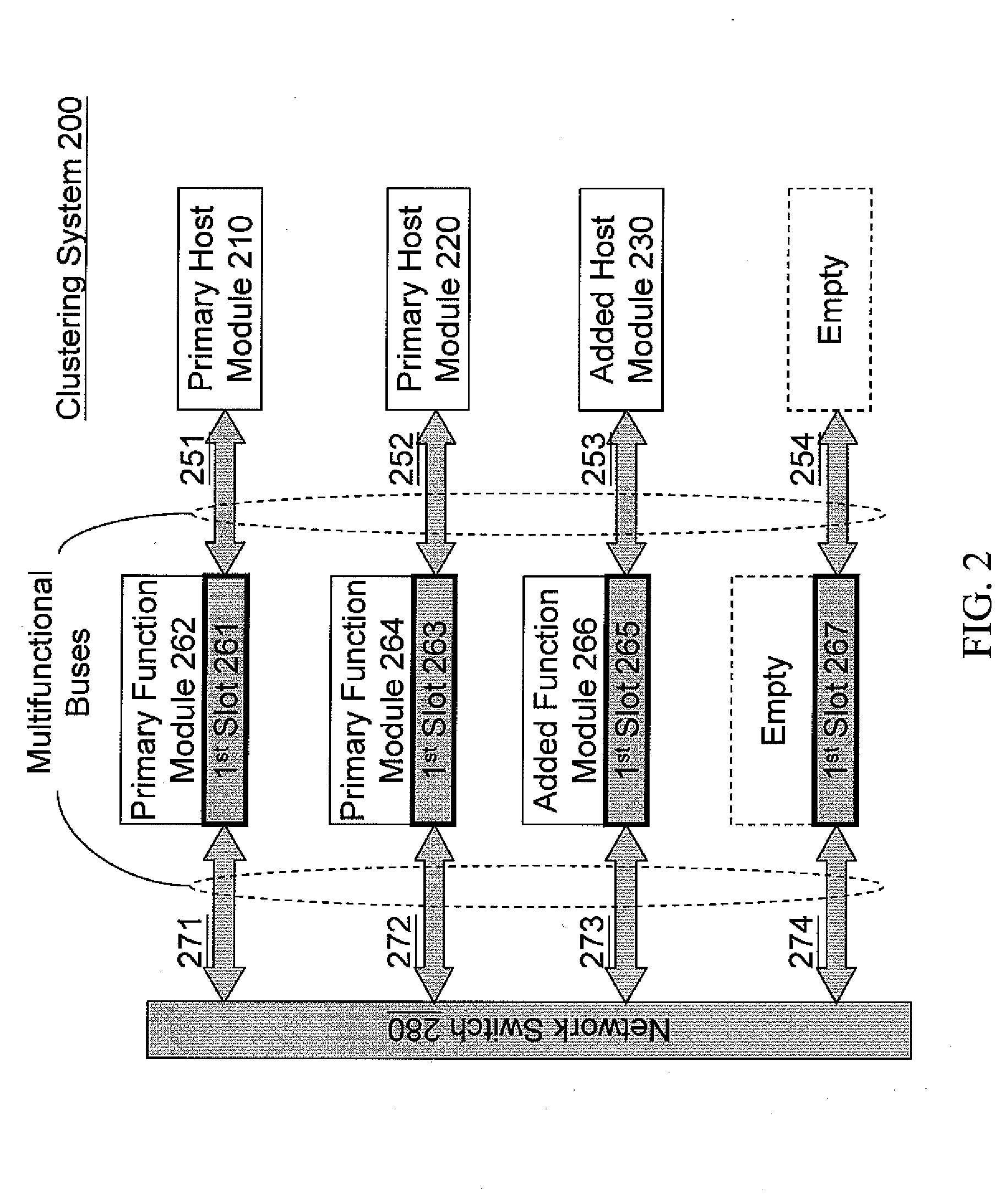

Clustering System and Flexible Interconnection Architecture Thereof

Inactive | US20080307149A1 | Flexible system configuration | Electric digital data processing | Electricity | Network switch

An interconnection architecture is provided for flexibly connecting a primary host module or an added host module to a network switch in a clustering system. The interconnection architecture mainly includes plural first slots, a primary function module, an added function module and plural multifunctional buses. The first slots electrically connect the network switch with the primary and added host modules. The primary function module is inserted in one of the first slots to electrically connect the primary host module with the network switch, and the added function module is inserted in one of the first slots to electrically connect the added host module with the network switch. The multifunctional buses connect the network switch with the first slots and also connect the first slots with the primary host module and the added host module.

Owner: TYAN COMP

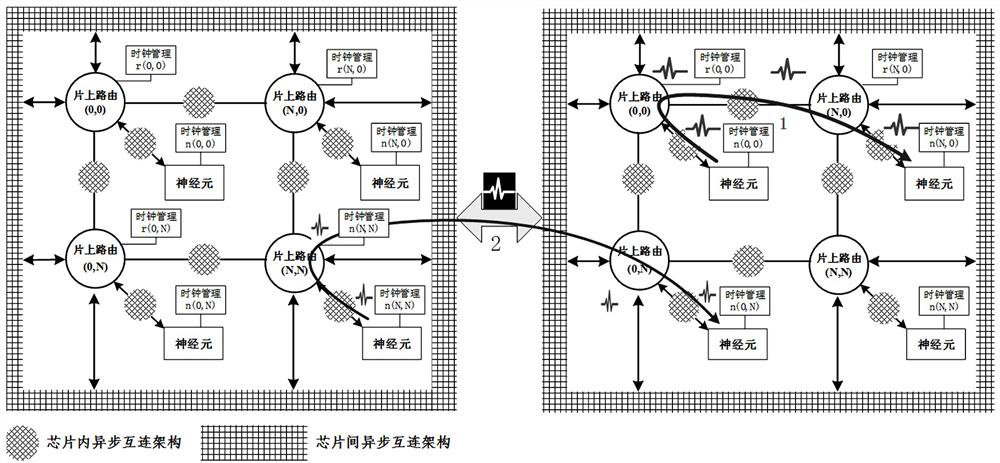

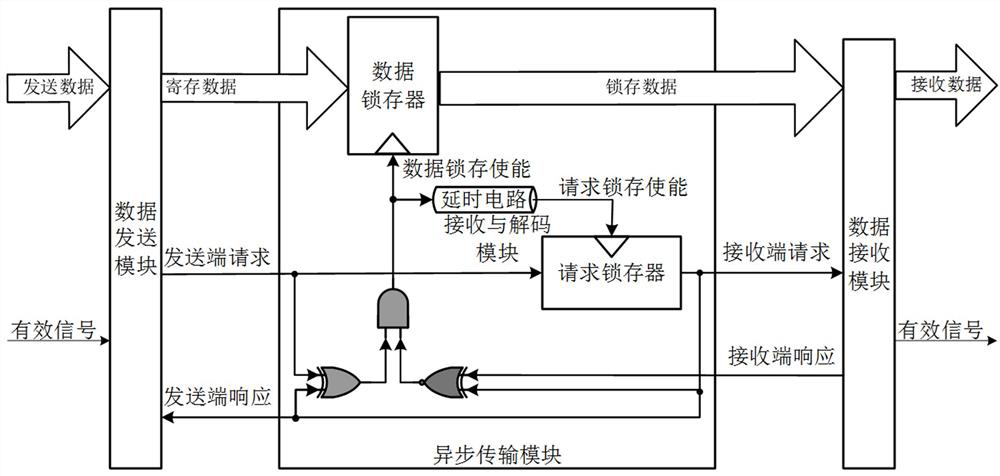

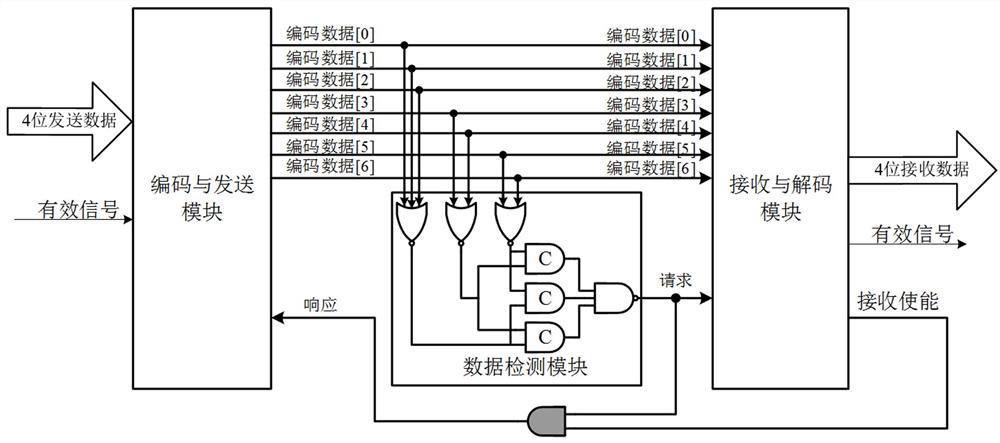

Asynchronous communication interconnection architecture and brain-like chip with same

Active | CN112783261A | Efficient cascade expansion | Efficient integration | Digital computer details | Generating/distributing signals | Computer hardware | Computer architecture

The invention relates to the technical field of artificial neural networks, in particular to an asynchronous communication interconnection architecture and a brain-like chip with that architecture. The asynchronous communication interconnection architecture comprises an on-chip asynchronous communication interconnection architecture, an inter-chip asynchronous communication interconnection architecture, neuron calculation units and on-chip routing units. Each neuron calculation unit and each on-chip routing unit has its own clock management module and sits in an independent clock domain. Within the same brain-like chip, the neuron calculation unit is connected to the on-chip routing unit, and each on-chip routing unit is connected to its adjacent on-chip routing units, through the on-chip asynchronous communication interconnection architecture. Adjacent brain-like chips are connected to each other through the inter-chip asynchronous communication interconnection architecture. In this way, a large number of neuron computing units can be efficiently integrated in the brain-like chip while the brain-like chips are efficiently cascaded and expanded, so that large-scale neuron computing resources are obtained.

Owner: ZHEJIANG LAB +1

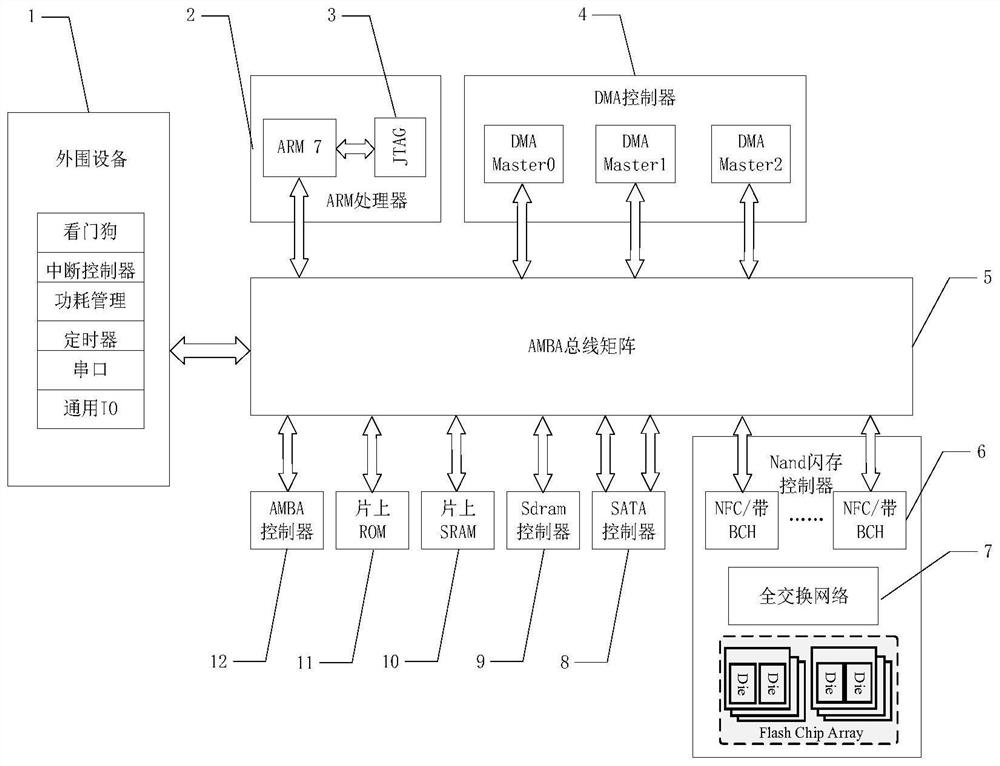

SSD (Solid State Disk) with multi-channel full-interconnection architecture and control method of SSD

Inactive | CN112114744A | Reduce the problem of uneven resource utilization | Increase profit | Input/output to record carriers | Computer hardware | Computer architecture

The invention discloses an SSD (Solid State Disk) with a multi-channel full-interconnection architecture and a control method for the SSD. Channels and chips are connected by a full-interconnection network; the SSD main control algorithm assigns specific IDs to the different channels and chips in a routing chip, connections between IDs are controlled by commands sent through control pins, and the main control algorithm maintains a channel and chip state list. When a read or write request arrives, the main control algorithm traverses the channel and chip state list, finds the channels and chips that meet the requirements and, if any exist, selects the first such channel and the first such chip using a FIFO strategy, thereby realizing control of an SSD with a multi-channel full-interconnection architecture. Any channel can be interconnected with any chip, and once a chip and a channel are interconnected their link does not conflict with the links of other existing chips and channels. The invention effectively reduces the non-uniform channel utilization caused by conditions such as workload and improves the read-write performance of the SSD.

Owner: XI AN JIAOTONG UNIV
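
The selection step in the abstract above can be sketched as a scan over two state lists. This is an illustration under assumptions: "meeting the requirements" is modeled as being idle plus an optional caller-supplied predicate, "FIFO" is read as taking the first match in list order, and the function name `select_pair` is hypothetical. The routing-chip commands that would actually connect the two IDs are not modeled.

```python
def select_pair(channel_state, chip_state, chip_ok=lambda chip_id: True):
    """Pick the first idle channel and the first idle, acceptable chip.

    channel_state, chip_state: dicts mapping an ID to True when busy. Dicts keep
    insertion order, so "first" means first in the maintained state list.
    chip_ok: optional predicate, e.g. restricting a read to the chip that
    holds the requested data.
    Returns (channel_id, chip_id) and marks both busy, or None if no pair exists.
    """
    free_channels = [cid for cid, busy in channel_state.items() if not busy]
    free_chips = [cid for cid, busy in chip_state.items() if not busy and chip_ok(cid)]
    if not free_channels or not free_chips:
        return None
    channel_id, chip_id = free_channels[0], free_chips[0]
    channel_state[channel_id] = True
    chip_state[chip_id] = True
    return channel_id, chip_id
```

Because any channel may serve any chip in a full interconnection, a request waits only when every channel or every acceptable chip is busy, rather than when one fixed channel is busy.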

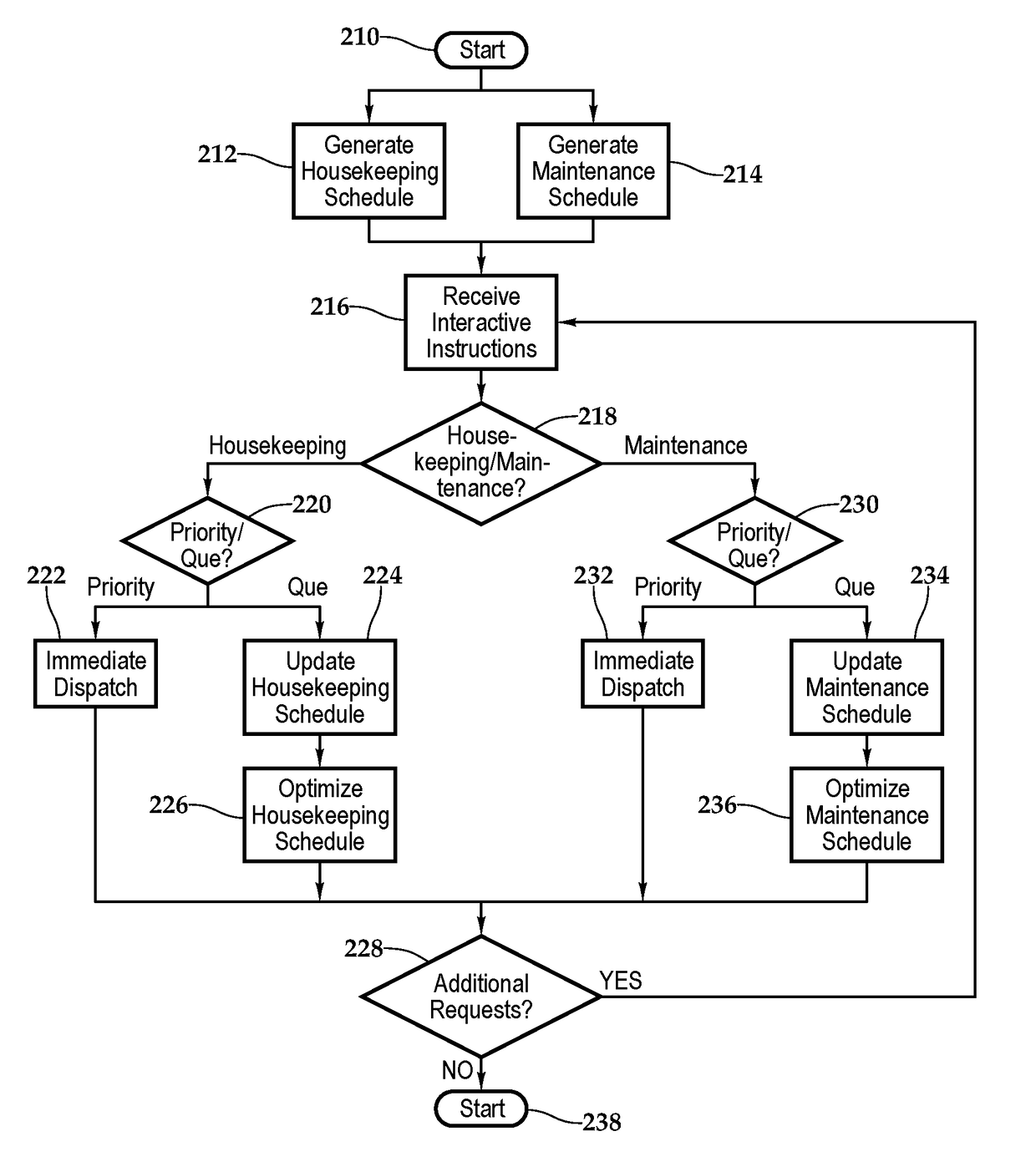

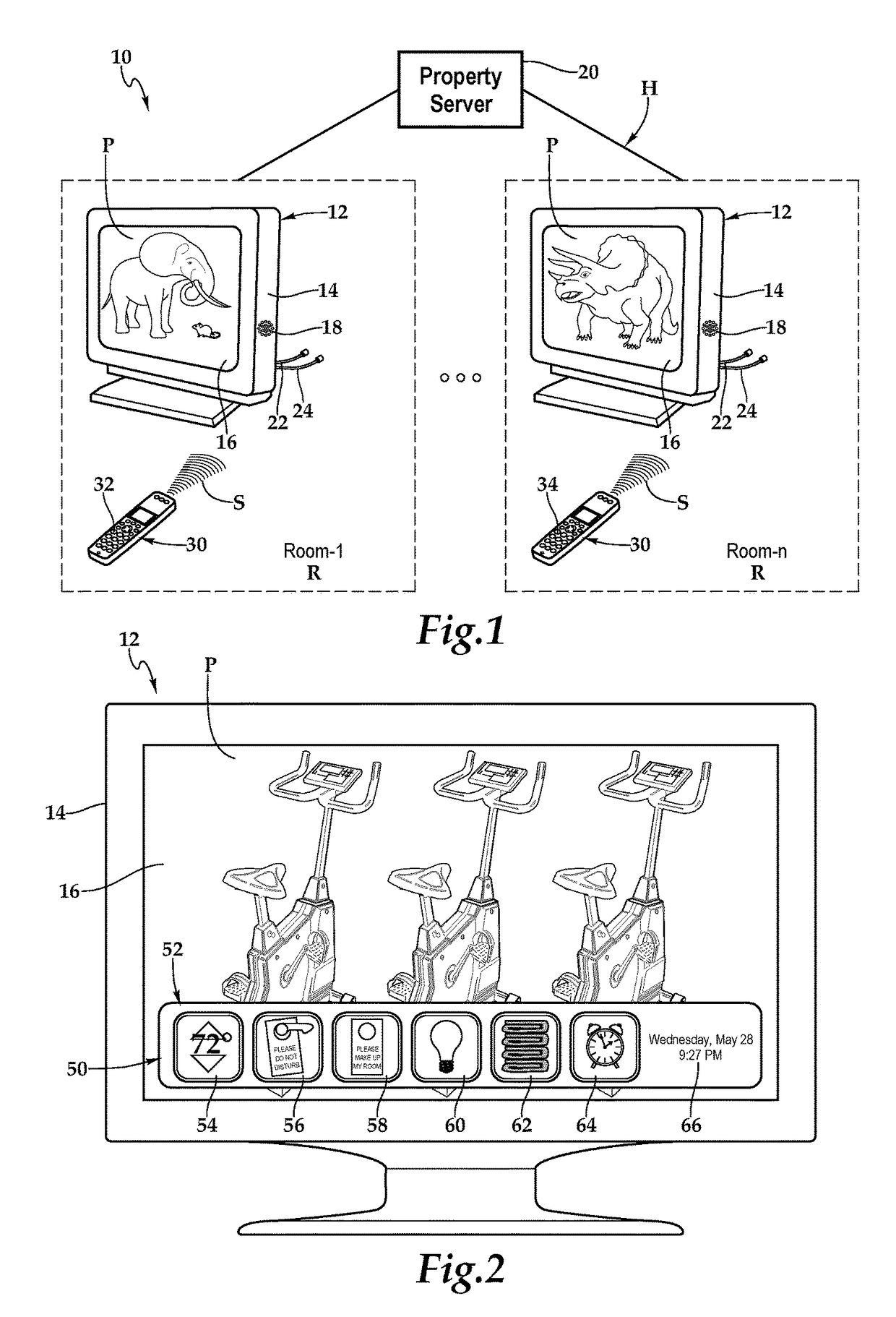

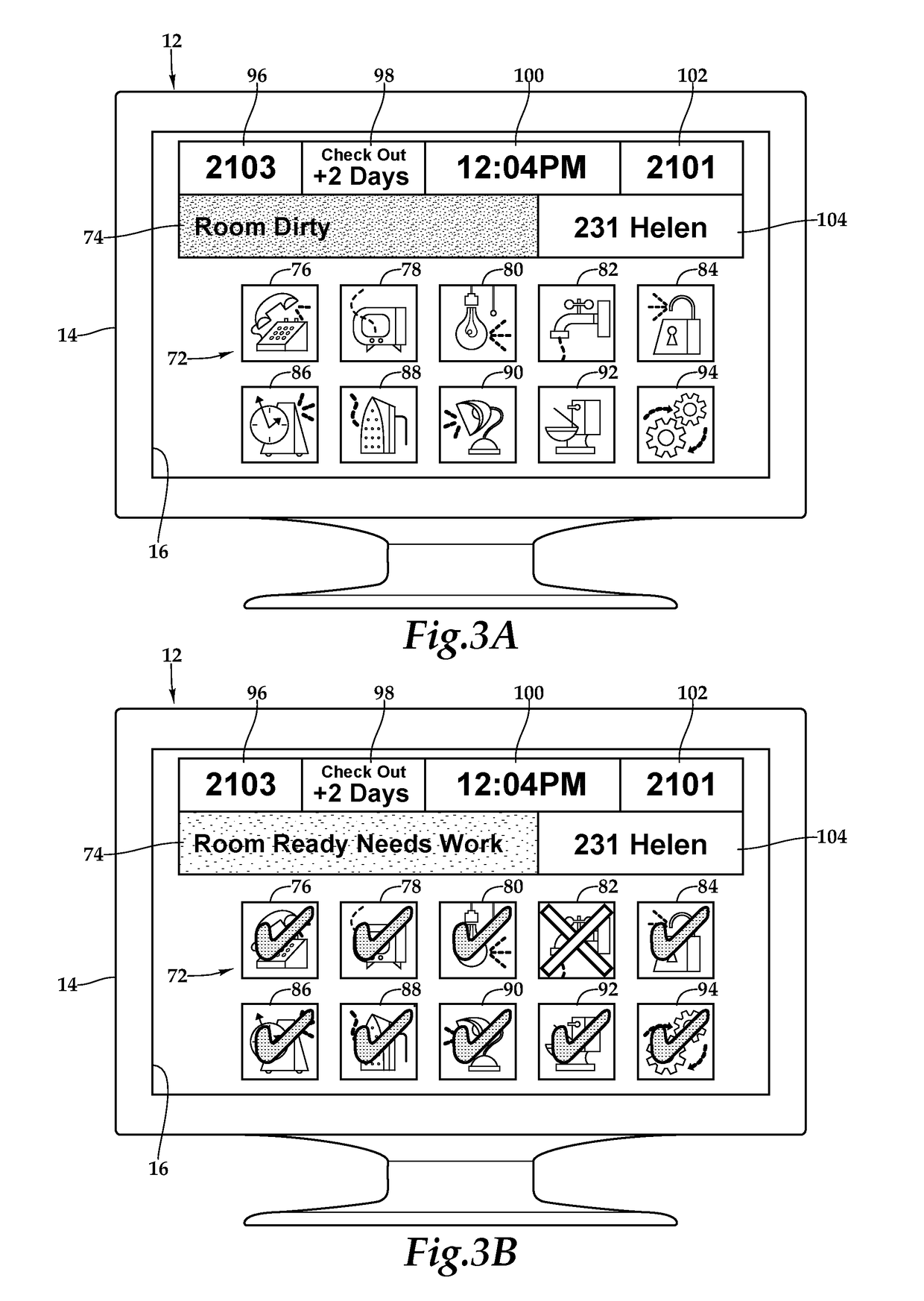

Television with interactive portal and system and method for use of same

Active | US10085044B2 | Reduce dependence | Reduce failure | Selective content distribution | Relevance feedback | Cable television

A television with an interactive portal and system and method for use of the same are disclosed. In one embodiment of the television, the television is deployed to provide an interactive portal in a hospitality establishment having multiple rooms, such as a hotel. The television is associated with a room and includes a housing that secures a processor, memory, tuner, panel, and audio driver in an interconnected architecture. The television generates a guest interactive portal as well as a housekeeping interactive portal for a guest and housekeeper, respectively. Each of the portals provides relevant feedback on the condition of the room to a server associated with the hotel.

Owner: ENSEO LLC

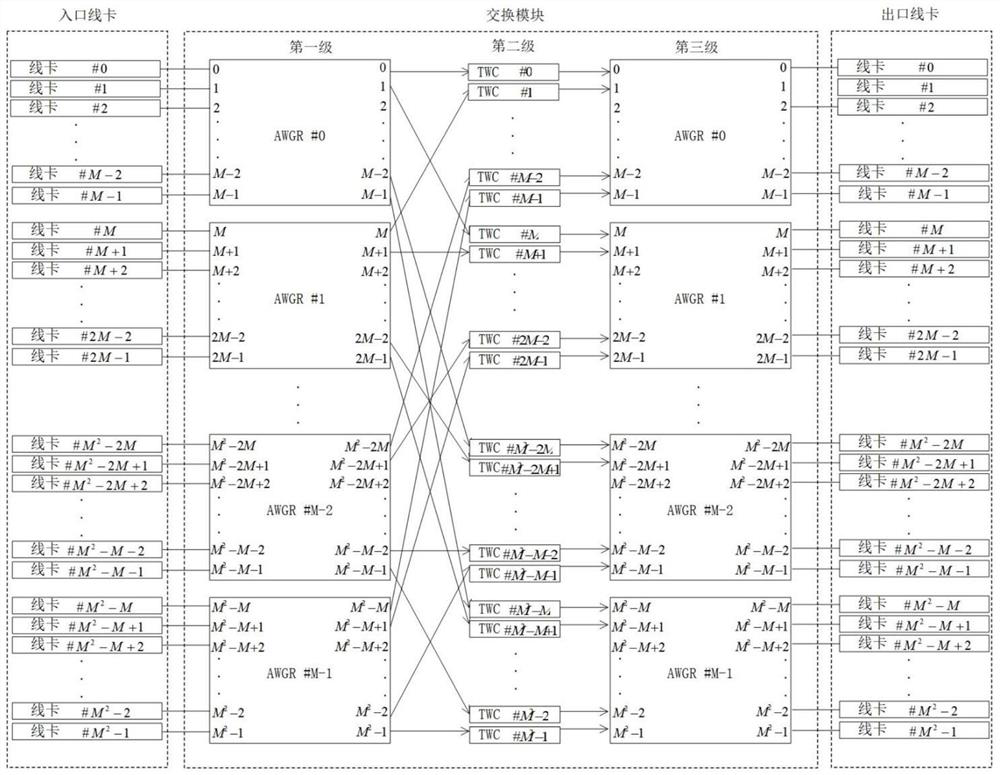

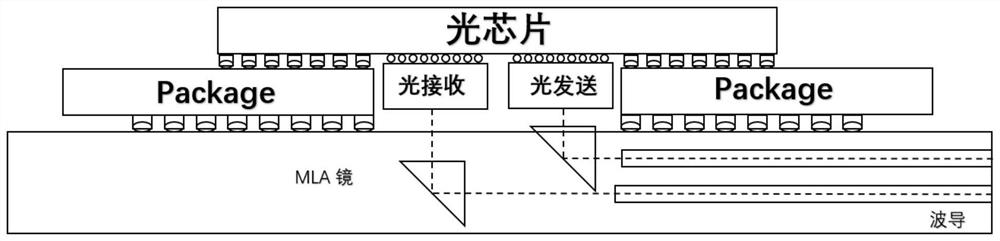

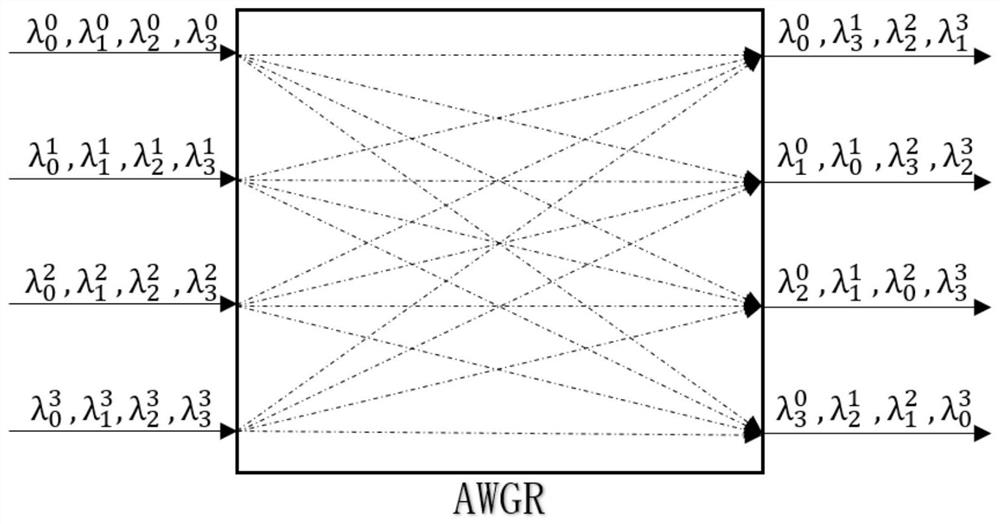

Buffer-free optical interconnect architecture and method for data center

Active | CN111865472A | Increase the number of nodes | Port bandwidth is low | Wavelength-division multiplex systems | Line card | Wavelength modulation

The invention discloses a buffer-free optical interconnection architecture and method for a data center. The architecture is divided into ingress line cards, a three-stage switching module and egress line cards, where the switching module comprises a first-stage arrayed waveguide grating router (AWGR), a second-stage tunable wavelength converter (TWC) and a third-stage AWGR. The first-stage AWGR input ports are connected to the ingress line cards, the third-stage AWGR output ports are connected to the egress line cards, and the first-stage AWGR output ports are connected to the third-stage AWGR input ports through the second-stage TWCs. Signals from a server are wavelength-division multiplexed and then forwarded to the switching module after wavelength modulation by the ingress line card; optical signals of specific wavelengths are forwarded to the egress line card through TWC wavelength conversion and AWGR cyclic routing, and the egress line card processes the optical signals and sends them to the server. This achieves high-capacity, high-reliability and low-power all-optical interconnection, effectively flattens the data center, overcomes the low port bandwidth and limited port count of traditional space switches, and offers high reliability, low complexity and low delay.

Owner: ZHEJIANG UNIV +1
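
The combination of AWGR cyclic routing and TWC wavelength conversion in the abstract above can be sketched with modular arithmetic. The routing rule used here, output port = (input port + wavelength index) mod N, is one common textbook convention and is an assumption; the patent's actual port and wavelength numbering may differ. The function names are hypothetical.

```python
def awgr_output(input_port, wavelength, n):
    """Assumed cyclic routing rule of an n x n AWGR."""
    return (input_port + wavelength) % n


def wavelength_for(input_port, output_port, n):
    """Wavelength index that steers input_port to output_port under that rule."""
    return (output_port - input_port) % n


def plan_path(ingress, middle, egress, n):
    """Choose the two wavelengths for an AWGR -> TWC -> AWGR path.

    w1 is set at the ingress side so the first AWGR delivers the signal to
    TWC number `middle`; the TWC then converts to w2 so the third-stage AWGR
    delivers it to `egress`.
    """
    w1 = wavelength_for(ingress, middle, n)
    w2 = wavelength_for(middle, egress, n)
    return w1, w2
```

No packet is stored along the way in this model: the destination is fixed entirely by the wavelength chosen at each stage, which is consistent with the buffer-free description.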

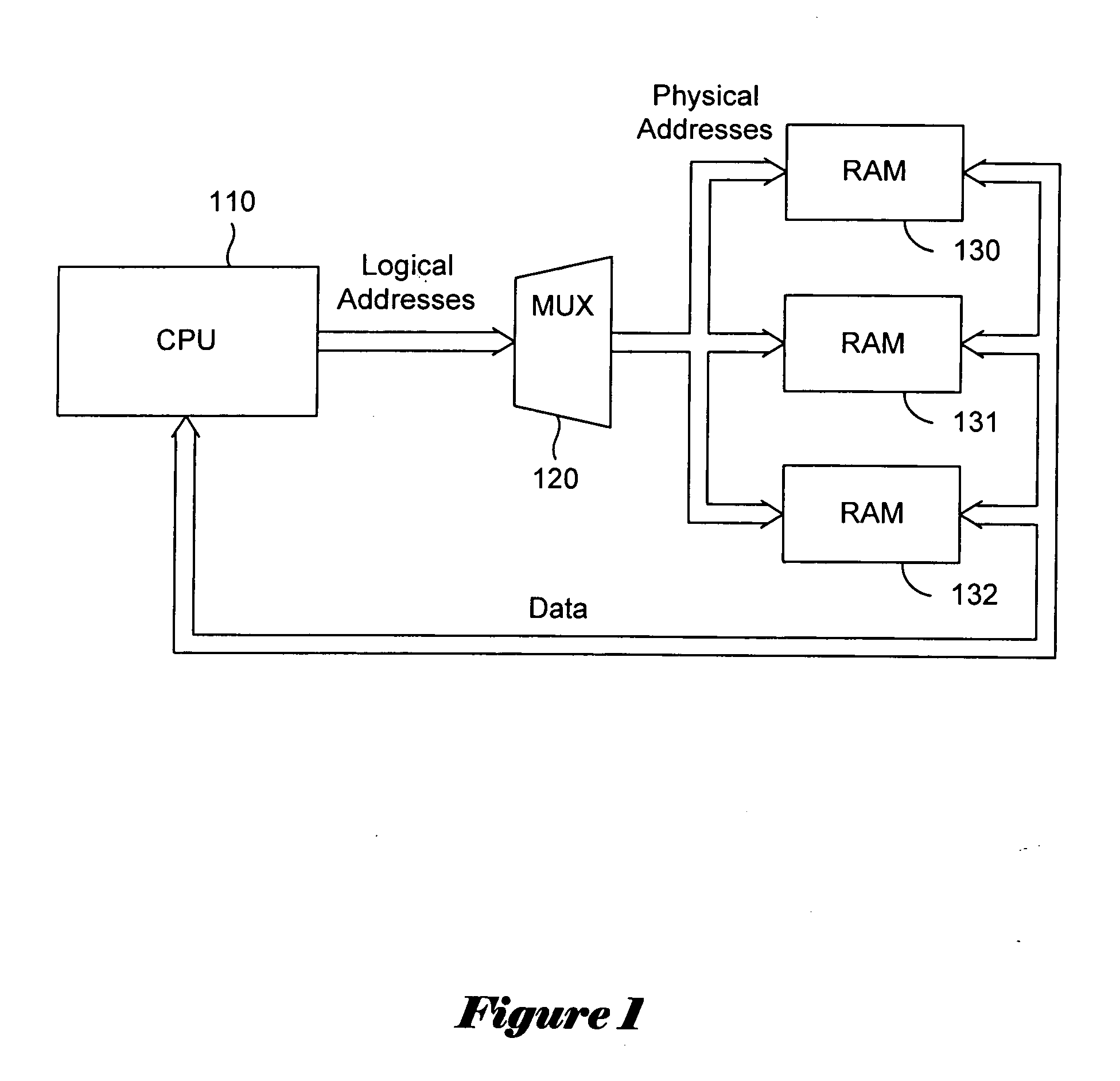

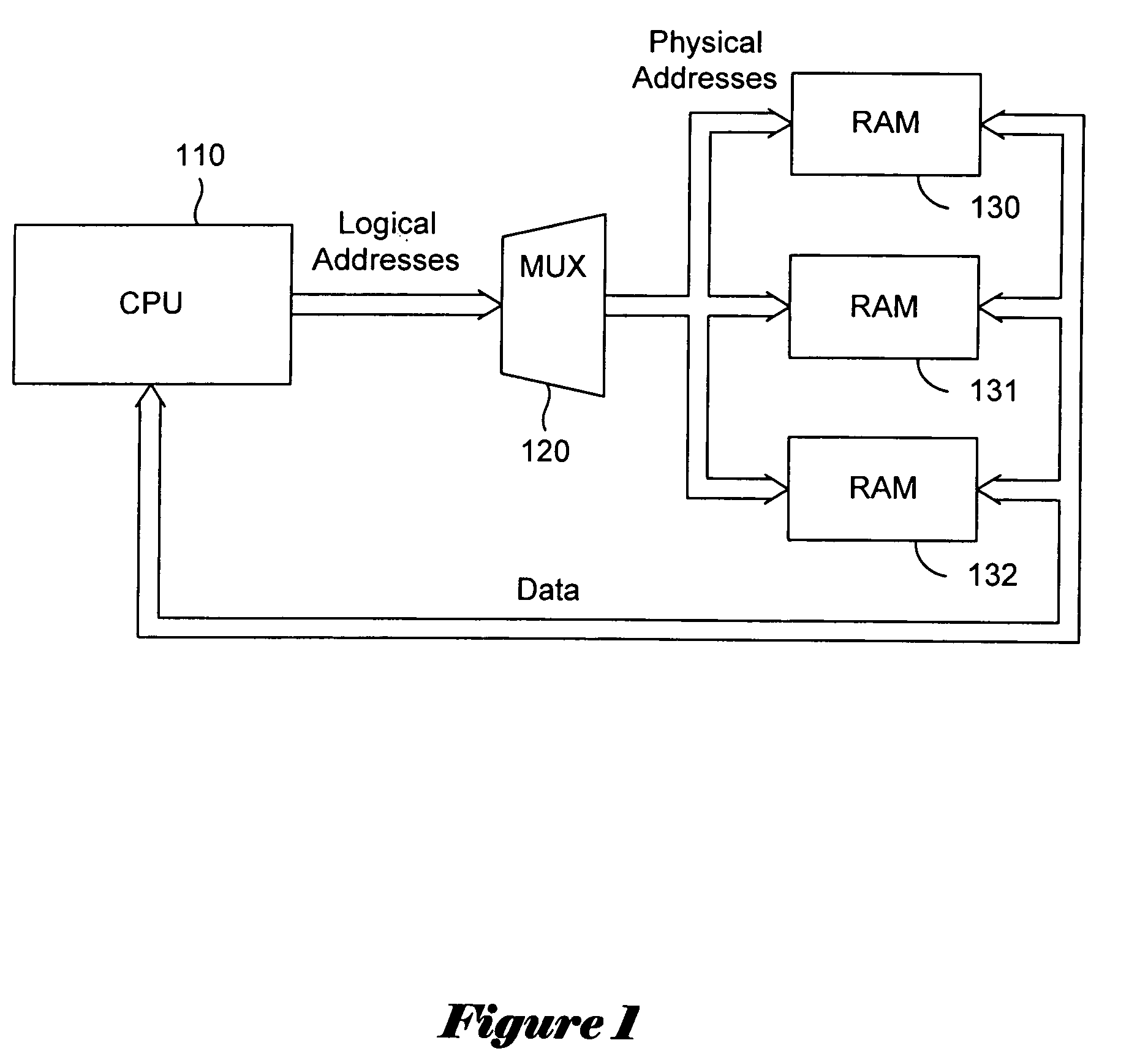

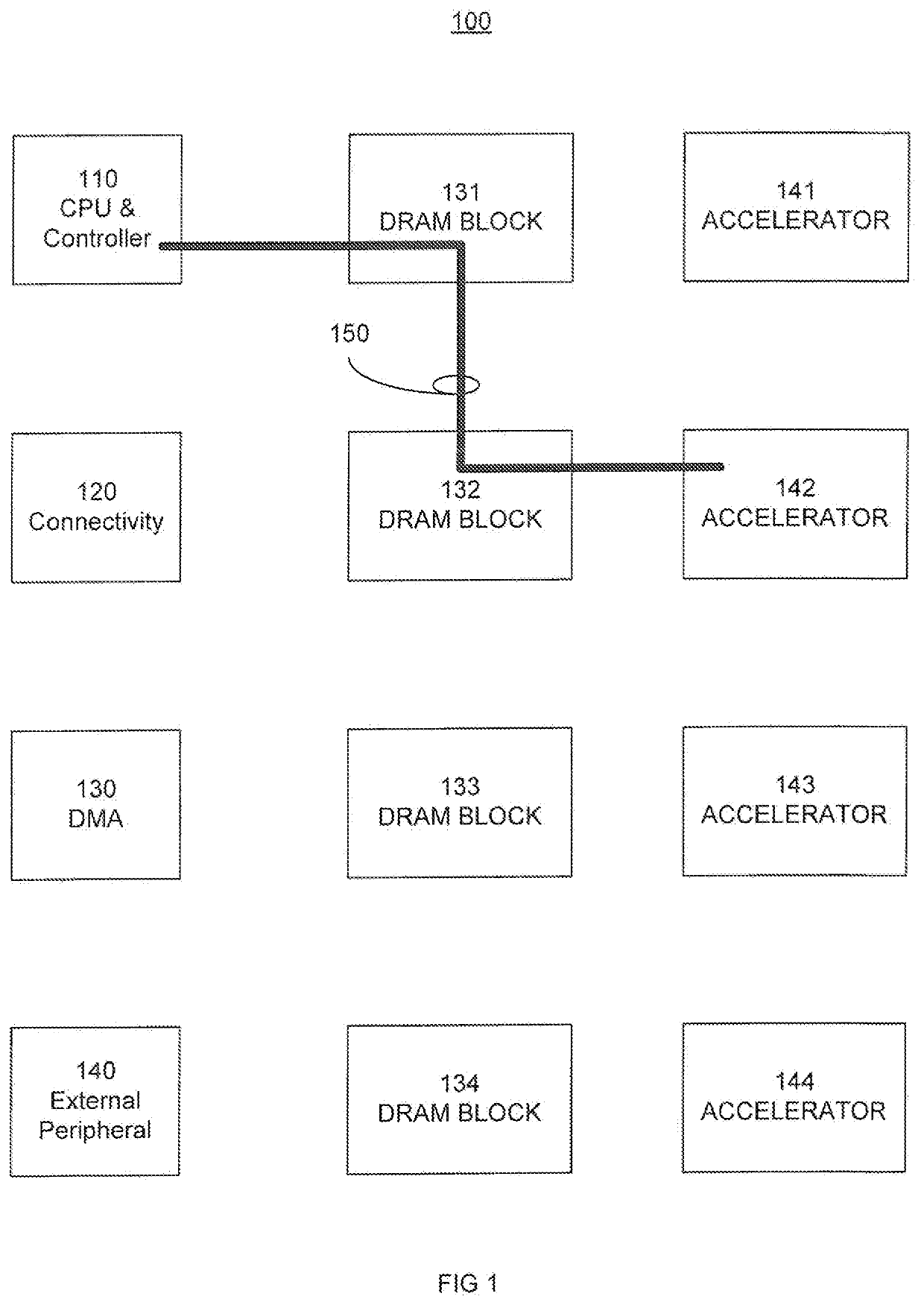

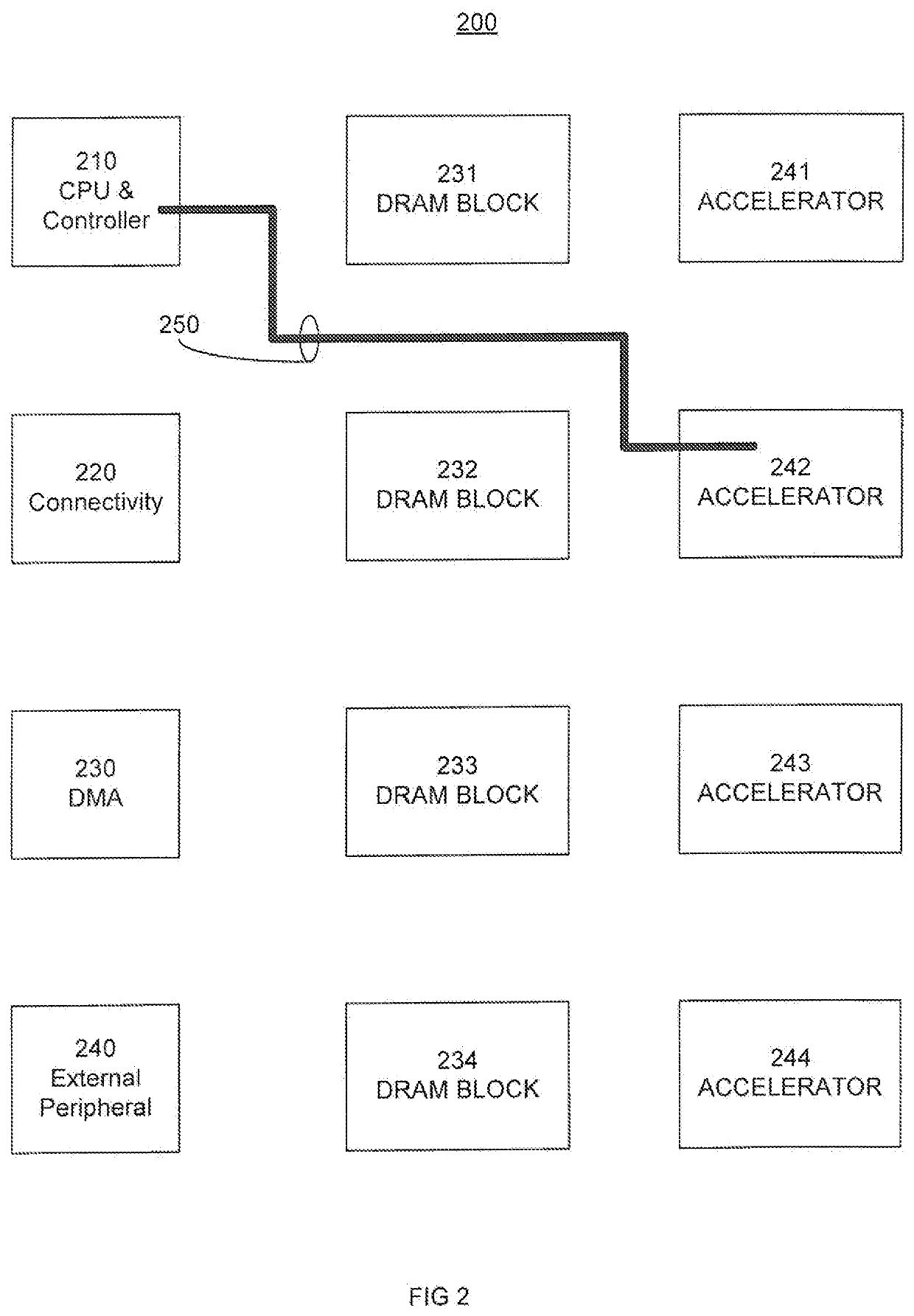

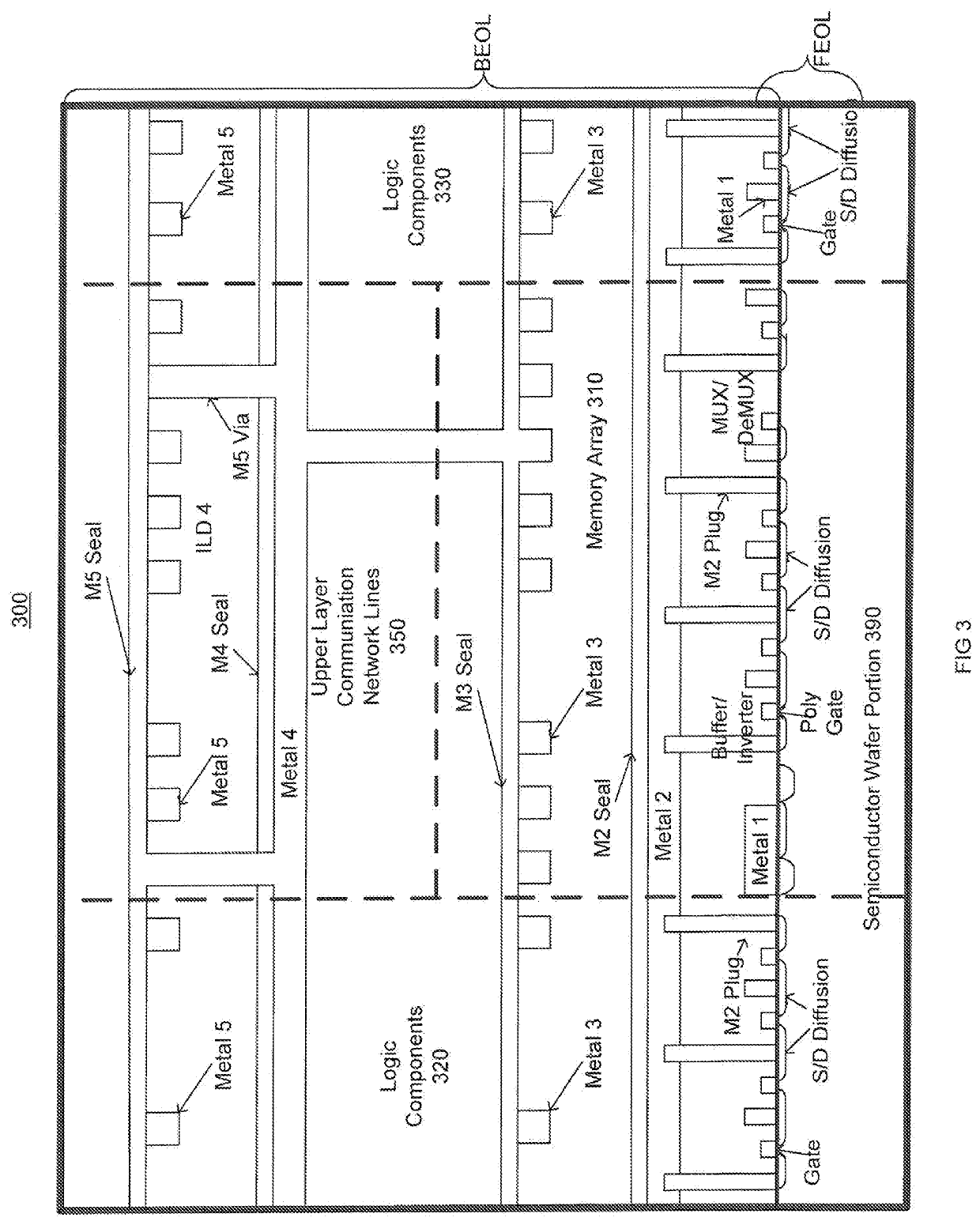

Memory interconnection architecture systems and methods

Active | US20220101887A1 | Increase delay | Accelerate the application process | Digital storage | Computer architecture | Engineering

The systems and methods are configured to efficiently and effectively include processing capabilities in memory. In one embodiment, a processing in memory (PIM) chip includes a memory array, logic components, and an interconnection network. The memory array is configured to store information. In one exemplary implementation, the memory array includes storage cells and array periphery components. The logic components can be configured to process information stored in the memory array. The interconnection network is configured to communicatively couple the logic components. The interconnection network can include interconnect wires, and a portion of the interconnect wires are located in a metal layer area that is located above the memory array.

Owner: ALIBABA GRP HLDG LTD
