Method for storing multiple cache queues in parallel

A storage method based on cache-queue technology, in the field of parallel storage of multiple cache queues, that addresses the problems of demanding security-processing requirements, congestion, and large storage capacity.

Embodiment Construction

[0025] The technical solutions of the present invention are described in further detail below by way of specific embodiments.

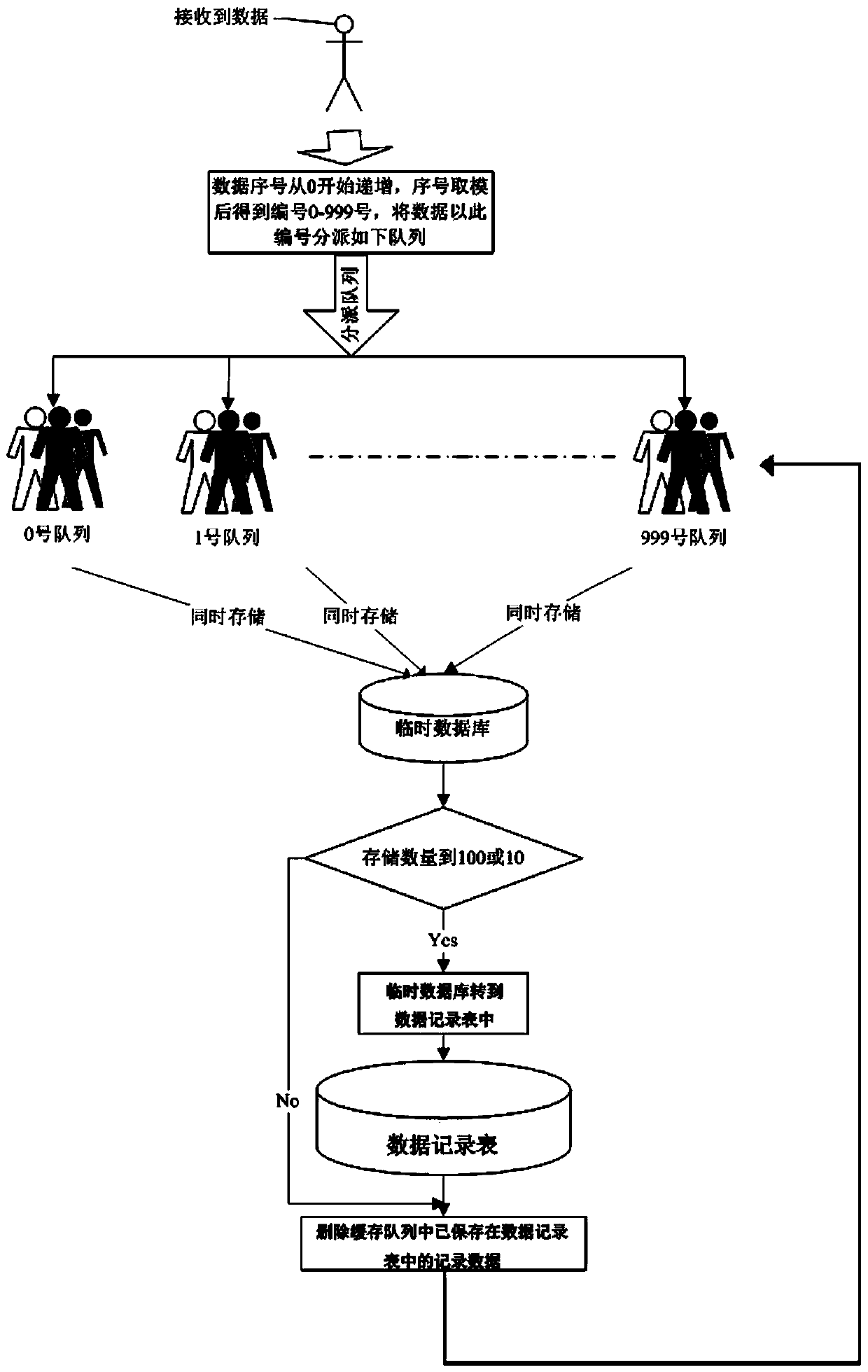

[0026] As shown in Figure 1, a method for storing multiple cache queues in parallel includes the following steps:

[0027] S1: Determine the types of record data, and allocate in memory a cache space and a data-saving thread for each type of record data. Each cache space contains 1000 cache queues, numbered 0 to 999, and each cache queue is organized as a linked list;
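
Step S1 can be illustrated with a minimal Java sketch, assuming record payloads are plain strings and that "saving" is reduced to removing and counting records. The class `MultiQueueCache`, the methods `runOnce` and `drainAll`, and the rule that record i goes to queue i mod 1000 are all hypothetical; the text does not state how a queue number is chosen. Each record type gets its own 1000-queue cache space and its own saving thread, so the types are written out in parallel without sharing a cache.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;

public class MultiQueueCache {
    // Each cache space holds 1000 cache queues, numbered 0 to 999 (step S1).
    static final int QUEUE_COUNT = 1000;

    // One cache space: 1000 linked-list queues for a single record type.
    static final class CacheSpace {
        private final List<LinkedList<String>> queues = new ArrayList<>(QUEUE_COUNT);
        private long saved = 0;

        CacheSpace() {
            for (int i = 0; i < QUEUE_COUNT; i++) {
                queues.add(new LinkedList<>());
            }
        }

        // Append a record to the queue with the given number (0..999).
        synchronized void put(int queueNo, String record) {
            queues.get(queueNo).addLast(record);
        }

        // Empty every queue and count the records; a real saver would persist them.
        synchronized int drainAll() {
            int n = 0;
            for (LinkedList<String> q : queues) {
                while (!q.isEmpty()) {
                    q.removeFirst();
                    n++;
                }
            }
            saved += n;
            return n;
        }

        synchronized long savedCount() {
            return saved;
        }

        int queueCount() {
            return queues.size();
        }
    }

    // Allocate one cache space per record type, fill it with `perType` sample records,
    // then let one dedicated saving thread per type drain its own space.
    static Map<String, CacheSpace> runOnce(List<String> types, int perType) {
        Map<String, CacheSpace> spaces = new LinkedHashMap<>();
        List<Thread> savers = new ArrayList<>();
        for (String type : types) {
            CacheSpace space = new CacheSpace();
            for (int i = 0; i < perType; i++) {
                // Hypothetical placement rule: record i goes to queue i mod 1000.
                space.put(i % QUEUE_COUNT, type + "#" + i);
            }
            spaces.put(type, space);
            savers.add(new Thread(space::drainAll, "saver-" + type));
        }
        for (Thread t : savers) {
            t.start(); // the record types are saved in parallel
        }
        try {
            for (Thread t : savers) {
                t.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for savers", e);
        }
        return spaces;
    }

    public static void main(String[] args) {
        Map<String, CacheSpace> spaces =
                runOnce(List.of("DeepThick", "Wave_New", "AlarmDat"), 2500);
        spaces.forEach((type, s) -> System.out.println(type + ": saved " + s.savedCount()));
    }
}
```

Running `main` prints one line per type, each reporting 2500 saved records. The per-space lock keeps the sketch simple; a production saver would more likely run in a loop and wait for new records rather than draining once.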

[0028] Preferably, the record data are divided into three types: thickness record data DeepThick, waveform record data Wave_New, and alarm record data AlarmDat;
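
The three record types can be modeled as a Java enum that routes every incoming record to a buffer of its own type. This is only a sketch: the enum `RecordType`, the `fromId` lookup, and the representation of a record as a `{typeId, payload}` string pair are hypothetical and not taken from the text. A single `LinkedList` stands in for each type's cache space.

```java
import java.util.EnumMap;
import java.util.LinkedList;
import java.util.Map;

public class RecordTypes {
    // The three record-data types listed in [0028]; the enum itself is illustrative.
    enum RecordType {
        DEEP_THICK("DeepThick"),   // thickness record data
        WAVE_NEW("Wave_New"),      // waveform record data
        ALARM_DAT("AlarmDat");     // alarm record data

        final String id;

        RecordType(String id) {
            this.id = id;
        }

        // Look up a type by the identifier used in the text, e.g. "Wave_New".
        static RecordType fromId(String id) {
            for (RecordType t : values()) {
                if (t.id.equals(id)) {
                    return t;
                }
            }
            throw new IllegalArgumentException("unknown record type: " + id);
        }
    }

    // Route each {typeId, payload} pair to the buffer of its own type.
    static Map<RecordType, LinkedList<String>> route(String[][] records) {
        Map<RecordType, LinkedList<String>> buffers = new EnumMap<>(RecordType.class);
        for (RecordType t : RecordType.values()) {
            buffers.put(t, new LinkedList<>());
        }
        for (String[] r : records) {
            buffers.get(RecordType.fromId(r[0])).addLast(r[1]);
        }
        return buffers;
    }

    public static void main(String[] args) {
        String[][] incoming = {
            {"DeepThick", "t1"}, {"AlarmDat", "a1"}, {"DeepThick", "t2"}, {"Wave_New", "w1"}
        };
        route(incoming).forEach((t, buf) -> System.out.println(t.id + " -> " + buf));
    }
}
```

Because `EnumMap` iterates in declaration order, `main` prints `DeepThick -> [t1, t2]`, `Wave_New -> [w1]`, and `AlarmDat -> [a1]`. An unknown type identifier fails fast instead of landing in the wrong cache.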

[0029] Specifically, the cache for the thickness record data is declared as `ClassArrDeepBuff[] Arr_Buff_Deep = new ClassArrDeepBuff[1000];`, where the storage type is ClassArrDeepBuff and its data variable is `List lst_Buff_Deep`;
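
The declaration in [0029] is valid Java (it also reads as C#). Assuming Java, one detail matters in practice: `new ClassArrDeepBuff[1000]` allocates 1000 null references, not 1000 queues, so each slot must be instantiated before a record can be added. In the sketch below, the element type `DeepThick` and its fields are hypothetical, since the text does not say what `lst_Buff_Deep` stores, and using `LinkedList` as the `List` implementation is an assumption based on the linked-list wording of step S1.

```java
import java.util.LinkedList;
import java.util.List;

public class DeepBuffSketch {
    // Hypothetical element type; the source does not specify what lst_Buff_Deep holds.
    static final class DeepThick {
        final long timestamp;
        final double thickness;

        DeepThick(long timestamp, double thickness) {
            this.timestamp = timestamp;
            this.thickness = thickness;
        }
    }

    // One cache queue: storage type ClassArrDeepBuff wrapping the list lst_Buff_Deep.
    static final class ClassArrDeepBuff {
        final List<DeepThick> lst_Buff_Deep = new LinkedList<>();
    }

    // Build the 1000-queue cache. The array creation alone yields 1000 null slots,
    // so every slot is filled with its own ClassArrDeepBuff here.
    static ClassArrDeepBuff[] newDeepCache() {
        ClassArrDeepBuff[] Arr_Buff_Deep = new ClassArrDeepBuff[1000];
        for (int i = 0; i < Arr_Buff_Deep.length; i++) {
            Arr_Buff_Deep[i] = new ClassArrDeepBuff();
        }
        return Arr_Buff_Deep;
    }

    public static void main(String[] args) {
        ClassArrDeepBuff[] cache = newDeepCache();
        cache[0].lst_Buff_Deep.add(new DeepThick(1L, 12.5));
        cache[999].lst_Buff_Deep.add(new DeepThick(2L, 12.7));
        System.out.println(cache.length + " queues, queue 0 size " + cache[0].lst_Buff_Deep.size());
    }
}
```

Running `main` prints `1000 queues, queue 0 size 1`. The identifiers `Arr_Buff_Deep` and `lst_Buff_Deep` keep the names used in the text rather than Java naming conventions.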

[0030] The data structure of ...