Virtual touch screen human-computer interaction method, system and device based on depth sensor

A depth-sensor and human-computer interaction technology, applied in the field of depth-sensor-based virtual touch screen human-computer interaction. It addresses the limited commercialization, low reliability, and poor stability of existing approaches, with the effect of improving reliability, flexibility, and accuracy and reducing misoperations.


Examples

Embodiment 1

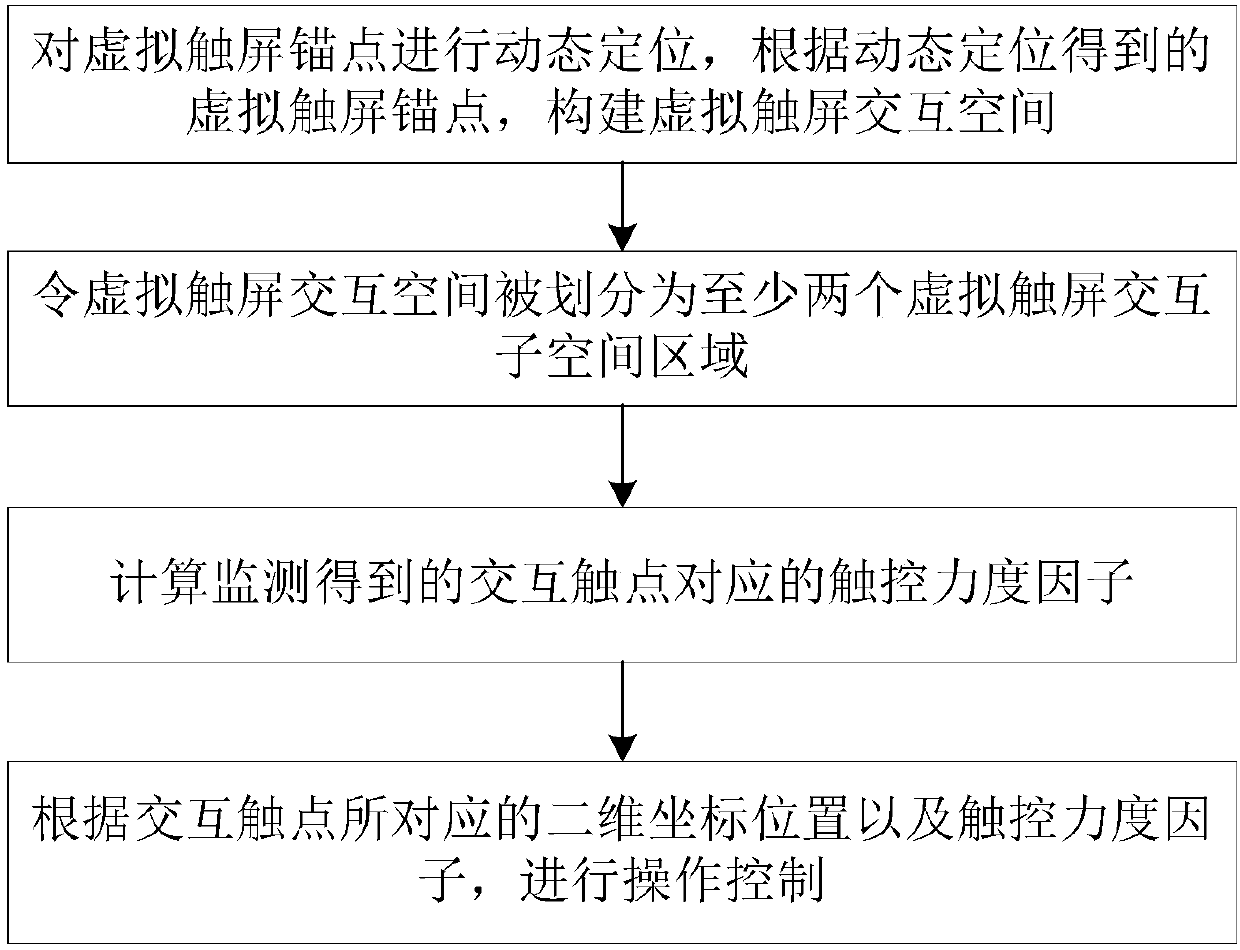

[0068] As shown in Figure 1, the depth-sensor-based virtual touch screen human-computer interaction method comprises the following steps:

[0069] Dynamically locate the anchor points of the virtual touch screen, and construct a virtual touch screen interaction space from the anchor points obtained by this dynamic positioning;
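The excerpt does not say how many anchor points are used or what shape the interaction space takes. As a minimal sketch, assuming three anchors reported by the depth sensor (the top-left, top-right, and bottom-left corners of the virtual screen) and a box-shaped space extending a hypothetical `thickness` along the plane normal, the construction step might look like this. All function and field names are illustrative, not taken from the patent.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


@dataclass(frozen=True)
class InteractionSpace:
    origin: Vec3      # top-left anchor point (assumed anchor layout)
    u: Vec3           # unit vector along the screen width
    v: Vec3           # unit vector along the screen height
    n: Vec3           # unit normal u x v; assumed to face the user
    width: float
    height: float
    thickness: float  # hypothetical depth of the space in front of the plane

    def to_local(self, p: Vec3) -> Vec3:
        """Express a sensor-frame point as (x, y, z) in screen-local coordinates."""
        d = sub(p, self.origin)
        return (dot(d, self.u), dot(d, self.v), dot(d, self.n))

    def contains(self, p: Vec3) -> bool:
        x, y, z = self.to_local(p)
        return (0.0 <= x <= self.width and 0.0 <= y <= self.height
                and 0.0 <= z <= self.thickness)


def build_interaction_space(top_left: Vec3, top_right: Vec3,
                            bottom_left: Vec3, thickness: float) -> InteractionSpace:
    """Build a box-shaped interaction space from three anchor points.

    Calling this again whenever the anchors are re-detected lets the space
    follow a screen surface that moves between frames.
    """
    ex = sub(top_right, top_left)
    ey = sub(bottom_left, top_left)
    width = math.sqrt(dot(ex, ex))
    if width == 0.0:
        raise ValueError("top_left and top_right coincide")
    u = scale(ex, 1.0 / width)
    # Gram-Schmidt: drop the component of ey along u to tolerate noisy anchors.
    ey_perp = sub(ey, scale(u, dot(ey, u)))
    height = math.sqrt(dot(ey_perp, ey_perp))
    if height == 0.0:
        raise ValueError("anchor points are collinear")
    v = scale(ey_perp, 1.0 / height)
    return InteractionSpace(top_left, u, v, cross(u, v), width, height, thickness)
```

Which side of the plane the normal `u x v` points to depends on the sensor's coordinate convention and mounting, so in a real setup the sign may need flipping.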

[0070] Spatially divide the virtual touch screen interaction space into at least two virtual touch screen interaction subspaces;
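The excerpt only requires at least two subspaces and does not give the division rule. One plausible scheme, assumed here purely for illustration, layers the space by distance from the screen plane, for example a thin "touch" layer next to the plane and a thicker "hover" layer in front of it. The layer names and distances below are hypothetical.

```python
from bisect import bisect_right
from typing import Optional, Sequence


def classify_subspace(z: float, boundaries: Sequence[float],
                      names: Sequence[str]) -> Optional[str]:
    """Return the name of the subspace containing a point at distance z from the plane.

    boundaries: strictly ascending distances [b0, b1, ..., bk]; subspace i covers
    b_i <= z < b_(i+1). Returns None when z lies outside [b0, bk).
    """
    if len(names) < 2 or len(names) != len(boundaries) - 1:
        raise ValueError("need at least two subspaces and one name per subspace")
    if any(a >= b for a, b in zip(boundaries, boundaries[1:])):
        raise ValueError("boundaries must be strictly ascending")
    if z < boundaries[0] or z >= boundaries[-1]:
        return None
    # bisect_right counts boundaries <= z; subtracting 1 gives the layer index.
    return names[bisect_right(boundaries, z) - 1]


# Hypothetical two-layer split (distances in metres).
BOUNDARIES = (0.0, 0.05, 0.20)
NAMES = ("touch", "hover")
```

Adding more boundaries and names yields finer layers without changing the lookup, which stays logarithmic in the number of layers.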

[0071] Monitor interactive touch points and calculate the touch force factor corresponding to each monitored interactive touch point, where an interactive touch point is the three-dimensional coordinate position of a limb in the virtual touch screen interaction space;
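The excerpt does not define how the touch force factor is computed. As a hypothetical sketch, not the patent's formula, it could be modelled as how far the limb point has penetrated a touch layer of depth `touch_depth` in front of the plane, normalized to [0, 1]. The sketch also picks the limb point closest to the plane as the monitored touch point. Both rules are assumptions.

```python
from typing import Iterable, Optional, Tuple

# (x, y, z) in screen-local coordinates; z is the distance from the screen plane.
Point = Tuple[float, float, float]


def find_touch_point(limb_points: Iterable[Point], max_depth: float) -> Optional[Point]:
    """Pick the limb point closest to the plane, ignoring points farther than max_depth."""
    candidates = [p for p in limb_points if p[2] <= max_depth]
    return min(candidates, key=lambda p: p[2], default=None)


def touch_force_factor(z: float, touch_depth: float) -> float:
    """Hypothetical force factor in [0, 1] based on penetration into the touch layer.

    0.0 at the outer edge of the touch layer (z == touch_depth), rising linearly
    to 1.0 at the plane (z == 0); points past the plane are clamped to 1.0.
    """
    if touch_depth <= 0:
        raise ValueError("touch_depth must be positive")
    return min(1.0, max(0.0, (touch_depth - z) / touch_depth))
```

A linear ramp is the simplest choice. Other inputs, such as approach speed between frames, could feed the factor, but nothing in the excerpt specifies that.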

[0072] According to the two-dimensional coordinate position corresponding to the interactive touch point and the tou...
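Paragraph [0072] is truncated in this excerpt, so the following does not reconstruct it. It only sketches, under stated assumptions, how a touch point's plane-local (x, y) position might be mapped to screen pixels and combined with a force factor into an input event. The event kinds and the `press_threshold` value are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TouchEvent:
    kind: str     # hypothetical event types: "press" or "hover"
    px: int       # screen column in pixels
    py: int       # screen row in pixels
    force: float  # touch force factor in [0, 1]


def to_screen_pixels(x: float, y: float, width: float, height: float,
                     res_x: int, res_y: int) -> Tuple[int, int]:
    """Map plane-local (x, y), in the same units as width/height, to pixel indices."""
    px = round(x / width * (res_x - 1))
    py = round(y / height * (res_y - 1))
    # Clamp so points slightly outside the anchored rectangle stay on screen.
    return min(max(px, 0), res_x - 1), min(max(py, 0), res_y - 1)


def make_event(x: float, y: float, force: float, width: float, height: float,
               res_x: int, res_y: int, press_threshold: float = 0.6) -> TouchEvent:
    """Turn a 2D touch position and force factor into a hypothetical input event."""
    px, py = to_screen_pixels(x, y, width, height, res_x, res_y)
    kind = "press" if force >= press_threshold else "hover"
    return TouchEvent(kind, px, py, force)
```

A threshold on the force factor is one simple way to separate deliberate presses from a limb merely passing near the plane, which is the kind of misoperation the summary says the method aims to reduce.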

Embodiment 2

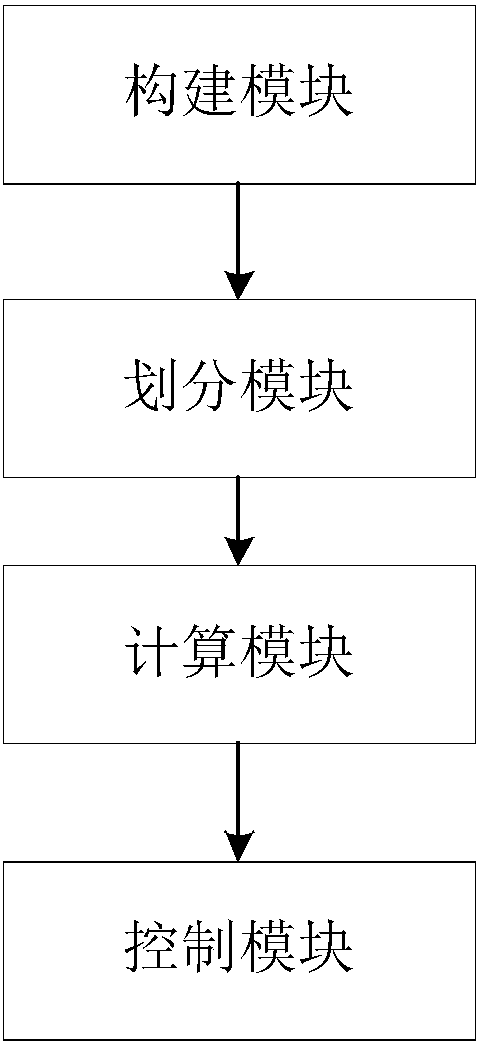

[0107] The program system corresponding to the above method, namely the depth-sensor-based virtual touch screen human-computer interaction system shown in Figure 2, includes:

[0108] A construction module, configured to dynamically locate the anchor points of the virtual touch screen and construct a virtual touch screen interaction space from the anchor points obtained by this dynamic positioning;

[0109] A division module, configured to spatially divide the virtual touch screen interaction space into at least two virtual touch screen interaction subspaces;

[0110] A calculation module, configured to monitor interactive touch points and calculate the touch force factor corresponding to each monitored interactive touch point, where an interactive touch point is the three-dimensional coordinate position of a limb in the virtual touch screen interacti...

Embodiment 3

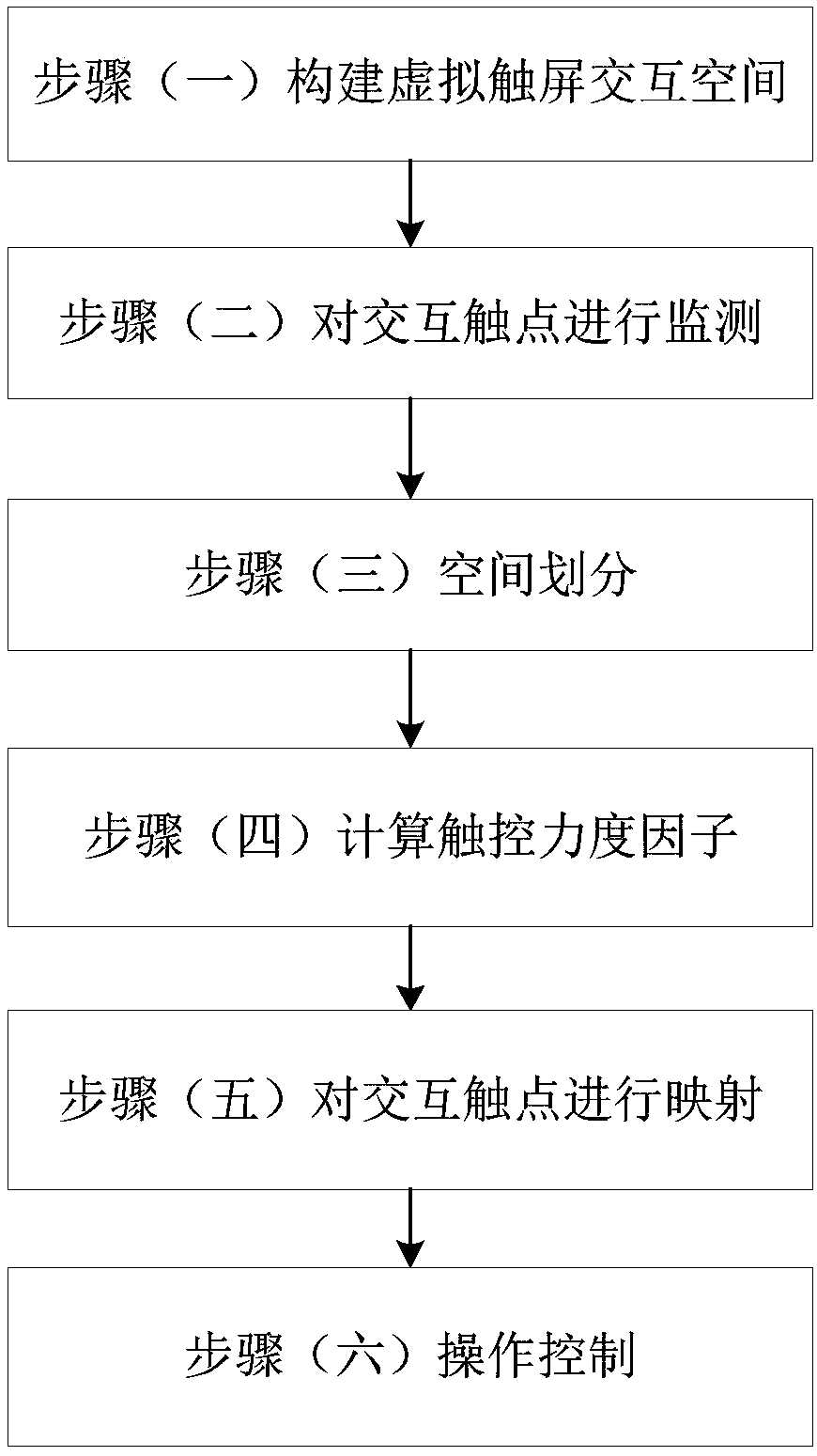

[0135] The combined software and hardware device corresponding to the above method, namely a depth-sensor-based virtual touch screen human-computer interaction device, includes:

[0136] a memory for storing a program;

[0137] a processor for loading the program and performing the following steps:

[0138] Dynamically locate the anchor points of the virtual touch screen, and construct a virtual touch screen interaction space from the anchor points obtained by this dynamic positioning;

[0139] Spatially divide the virtual touch screen interaction space into at least two virtual touch screen interaction subspaces;

[0140] Monitor interactive touch points and calculate the touch force factor corresponding to each monitored interactive touch point, where an interactive touch point is the three-dimensional coordinate position of a limb in the virtual tou...
