Front-end system for performing mass data interaction based on distributed message queue

A message queue and front-end system technology, applied in the field of front-end systems based on distributed message queues for massive data interaction. It addresses problems such as bottlenecks in processing capacity, the inability to expand processing capacity, and shortcomings in data receiving and sending, and it achieves easier maintenance, reduced daily operating pressure, and savings in manpower.

Embodiment Construction

[0016] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings.

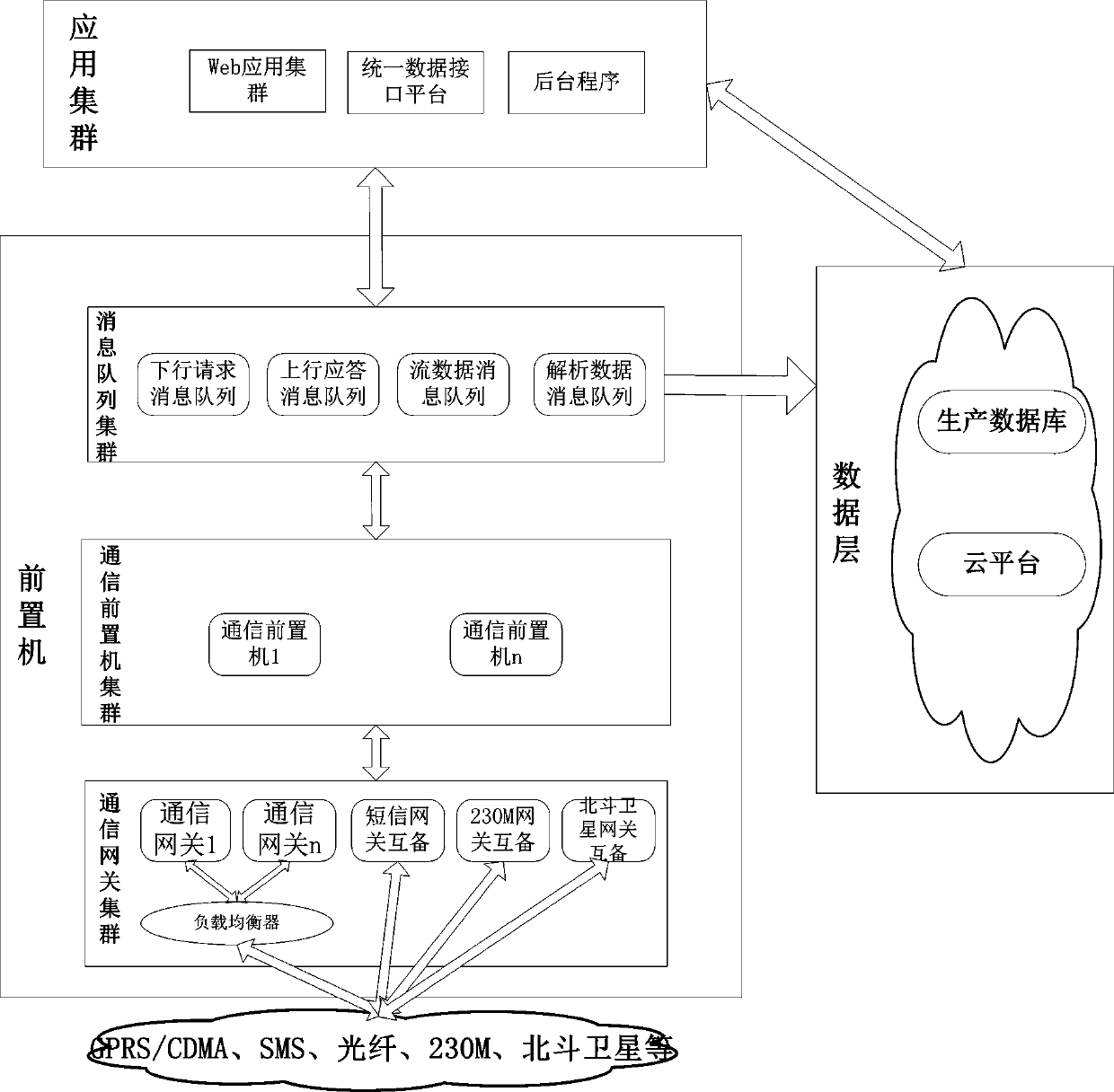

[0017] As shown in Figure 1, this technical solution includes a communication gateway cluster that maintains the various communication channel links of the field terminals, a front-end processor connected to the communication gateway cluster for scheduling message sending and receiving, and a message queue cluster, connected to the front-end processor, for queuing data. The message queue cluster is connected to the application cluster and the data layer and acts as the data bus inside the front-end system: uplink and downlink data are first inserted into the distributed message queue, and each processing node then obtains an amount of data from the message queue that matches its own processing capacity.
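The pull-based consumption described in [0017] can be illustrated with a minimal sketch. It is not the patented implementation: Python's in-process `queue.Queue` stands in for the distributed message queue cluster, and the names `ProcessingNode`, `capacity`, `pull`, and `run_demo` are hypothetical, introduced only for illustration. The point is that messages are first placed on the shared bus and each node then takes only as many as its own capacity allows.

```python
import queue
from dataclasses import dataclass, field


@dataclass
class ProcessingNode:
    """Hypothetical processing node that pulls work at its own pace."""
    name: str
    capacity: int  # assumed knob: max messages taken per pull
    handled: list = field(default_factory=list)

    def pull(self, bus: queue.Queue) -> int:
        """Take up to `capacity` messages from the bus; return how many were taken."""
        taken = 0
        while taken < self.capacity:
            try:
                msg = bus.get_nowait()
            except queue.Empty:
                break  # nothing left on the bus
            self.handled.append(msg)
            taken += 1
        return taken


def run_demo() -> dict:
    # In-process stand-in for the message queue cluster (the "data bus").
    bus = queue.Queue()

    # Uplink data is inserted into the queue first, before any processing.
    for seq in range(10):
        bus.put({"direction": "uplink", "seq": seq})

    # Two nodes with different (assumed) processing capacities.
    nodes = [ProcessingNode("fast", capacity=4), ProcessingNode("slow", capacity=1)]

    # Each node repeatedly pulls only what it can handle until the bus is empty.
    while not bus.empty():
        for node in nodes:
            node.pull(bus)

    return {node.name: len(node.handled) for node in nodes}


if __name__ == "__main__":
    print(run_demo())
```

Because the nodes pull rather than being pushed to, a slower node is never handed more than it can take, and adding another node to the list raises total throughput without changing the producer side.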

[0018] Wherein, the communication ga...
