Automatic ship tracking method and system based on deep learning network and mean shift

A technique based on a deep learning network and mean shift, applied in neural learning methods, biological neural network models, and character and pattern recognition. It addresses problems such as lost targets, error accumulation, and poor tracking of dynamically changing targets, and achieves good tracking stability.
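The summary names mean shift as the tracking component, but this excerpt does not give the patent's specific procedure. The sketch below is a generic flat-kernel mean shift step under stated assumptions. It repeatedly moves a rectangular search window to the weighted centroid of a per-pixel weight map, which could be, for example, a back-projection of the ship's colour histogram. The function name `mean_shift`, the 2-D list weight map, and the parameters `half_w`, `half_h`, `max_iter` and `eps` are all hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]  # weights[y][x], non-negative per-pixel target scores


def mean_shift(weights: Grid, center: Tuple[float, float], half_w: int, half_h: int,
               max_iter: int = 20, eps: float = 0.5) -> Tuple[float, float]:
    """Move a window centred at `center` = (cx, cy) to a local mode of `weights`.

    Generic flat-kernel mean shift (illustrative; not the patented procedure).
    """
    rows, cols = len(weights), len(weights[0])
    cx, cy = center
    for _ in range(max_iter):
        # Integer bounds of the current window, clipped to the image.
        x0 = max(0, int(round(cx)) - half_w)
        x1 = min(cols - 1, int(round(cx)) + half_w)
        y0 = max(0, int(round(cy)) - half_h)
        y1 = min(rows - 1, int(round(cy)) + half_h)
        total = sx = sy = 0.0
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                w = weights[y][x]
                total += w
                sx += w * x
                sy += w * y
        if total == 0.0:
            break  # no target evidence inside the window: keep the last position
        # The weighted centroid is the new window centre (the mean shift step).
        nx, ny = sx / total, sy / total
        shift = ((nx - cx) ** 2 + (ny - cy) ** 2) ** 0.5
        cx, cy = nx, ny
        if shift < eps:
            break  # converged
    return cx, cy
```

For a 20×20 map whose only non-zero weights form a 3×3 block centred at (14, 12), a window of half-size 5 started at (9, 9) converges to (14.0, 12.0). In a full tracker, the converged centre from one frame would seed the search window in the next frame.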


Embodiment Construction

[0046] In order to better understand the technical solution of the present invention, the present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

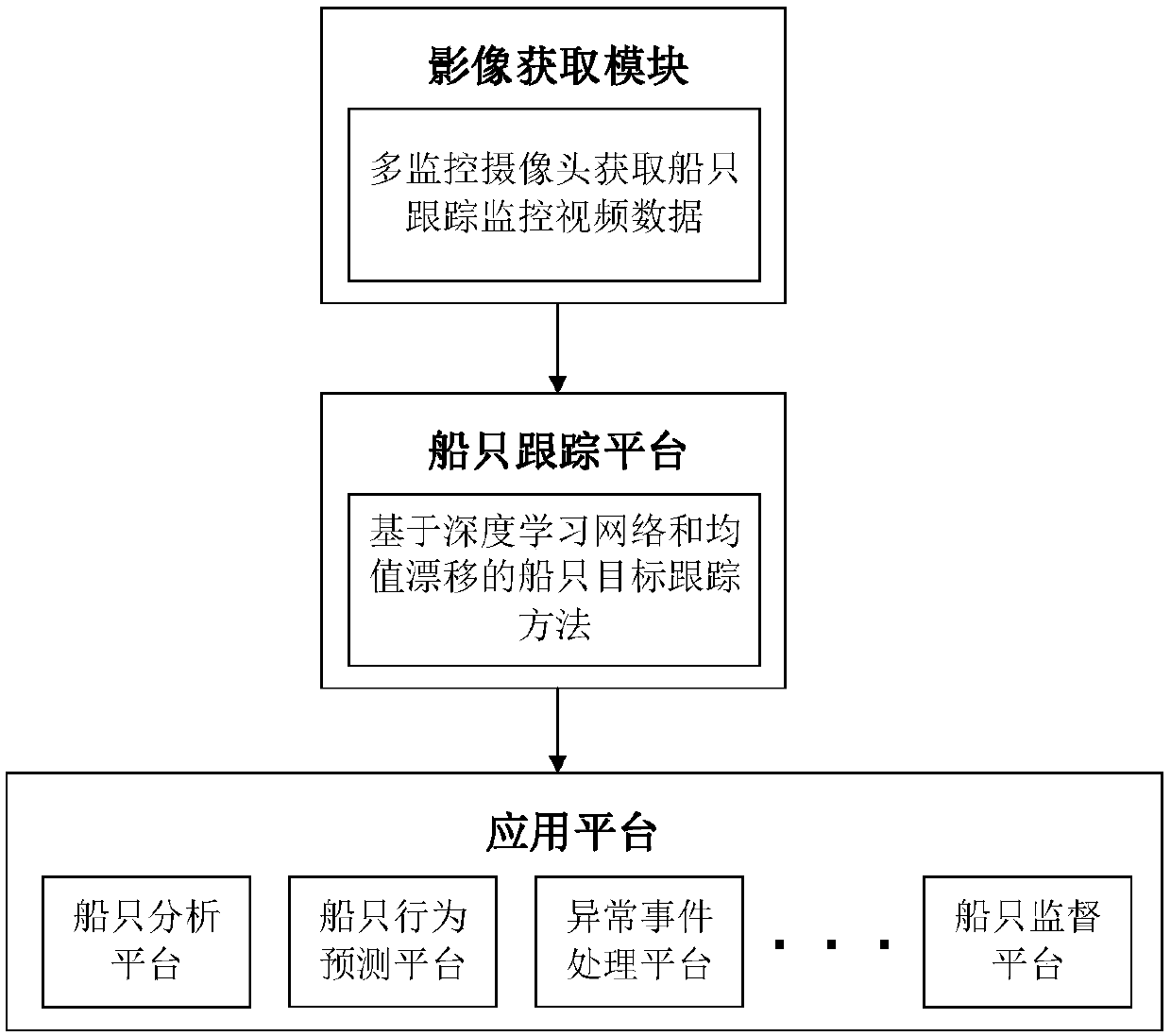

[0047] Referring to Figure 1, when the present invention is applied, the system architecture that can be adopted mainly includes a surveillance video acquisition module, a ship tracking platform, and an application platform. The surveillance video acquisition module uses multiple visible-light surveillance cameras to capture video of the seaside area and transfers the data to the ship tracking platform. The ship tracking platform uses the method of the invention to extract and automatically track ship targets, and transmits ship target abnormalities and related information to the application platform. According to the specific ship analysis platform, behavior prediction platform, abnormal event processing platform and ship supervision platform in the application platfo...
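The data flow in Figure 1 can be sketched as three stages: acquisition, tracking, and an application platform that fans events out to its sub-platforms. Everything in this sketch is a hypothetical illustration. That includes all class and function names, the `ShipEvent` fields and the publish/subscribe design. `demo_tracker` is a placeholder that stands in for the deep-learning and mean shift tracker described in the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass
class Frame:
    camera_id: str
    index: int
    pixels: object = None  # placeholder for the image data


@dataclass
class ShipEvent:
    camera_id: str
    frame_index: int
    ship_id: int
    position: Tuple[float, float]
    abnormal: bool


def acquire(camera_ids: List[str], n_frames: int) -> Iterable[Frame]:
    """Stand-in for the surveillance video acquisition module."""
    for i in range(n_frames):
        for cam in camera_ids:
            yield Frame(cam, i)


class ApplicationPlatform:
    """Forwards every event to all registered sub-platform handlers."""

    def __init__(self) -> None:
        self.subscribers: Dict[str, List[Callable[[ShipEvent], None]]] = {}

    def subscribe(self, name: str, handler: Callable[[ShipEvent], None]) -> None:
        self.subscribers.setdefault(name, []).append(handler)

    def publish(self, event: ShipEvent) -> None:
        for handlers in self.subscribers.values():
            for handler in handlers:
                handler(event)


class ShipTrackingPlatform:
    """Runs a tracker on each frame and pushes its events downstream."""

    def __init__(self, tracker: Callable[[Frame], Iterable[ShipEvent]],
                 app: ApplicationPlatform) -> None:
        self.tracker = tracker
        self.app = app

    def process(self, frames: Iterable[Frame]) -> int:
        published = 0
        for frame in frames:
            for event in self.tracker(frame):
                self.app.publish(event)
                published += 1
        return published  # number of events sent to the application platform


def demo_tracker(frame: Frame) -> List[ShipEvent]:
    """Placeholder tracker: one ship per frame, flagged abnormal at frame 2."""
    return [ShipEvent(frame.camera_id, frame.index, 1,
                      (float(frame.index), 0.0), frame.index == 2)]
```

In this design each sub-platform decides which events it needs. For example, an abnormal-event handler would keep only events with `abnormal=True`, while an analysis handler would log every event.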
