Big data cross-modal retrieval method and system based on deep fusion hashing

This invention belongs to the field of big data and hashing technology and concerns a big data cross-modal retrieval method and system. It addresses three shortcomings of existing methods: they cannot alleviate the heterogeneity between different modalities, they cannot generate high-quality, compact hash codes, and they cannot effectively capture the spatial dependencies of images and the temporal dynamics of sentences. The invention thereby reduces cross-modal heterogeneity and improves retrieval accuracy.
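Compact hash codes matter because, once images and sentences are mapped into a shared Hamming space, cross-modal retrieval reduces to comparing short binary strings. The following minimal Python sketch illustrates that generic idea only; it is not the patented method, and the 8-bit codes and the functions `pack_bits`, `hamming`, and `rank_by_hamming` are hypothetical illustrations. It packs bits into integers, computes the Hamming distance as the number of set bits in their XOR, and ranks database items by distance to the query.

```python
from typing import List, Tuple


def pack_bits(bits: List[int]) -> int:
    """Pack a list of {0, 1} bits into one integer (first bit is most significant)."""
    code = 0
    for b in bits:
        code = (code << 1) | (b & 1)
    return code


def hamming(a: int, b: int) -> int:
    """Count the bit positions in which two packed codes differ."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query: int, database: List[int]) -> List[Tuple[int, int]]:
    """Return (index, distance) pairs sorted by ascending Hamming distance, ties broken by index."""
    dists = [(i, hamming(query, code)) for i, code in enumerate(database)]
    return sorted(dists, key=lambda t: (t[1], t[0]))


if __name__ == "__main__":
    # Hypothetical 8-bit codes: one image query and three text items in the shared Hamming space.
    query = pack_bits([1, 0, 1, 1, 0, 0, 1, 0])
    texts = [
        pack_bits([1, 0, 1, 1, 0, 0, 1, 1]),  # differs from the query in 1 bit
        pack_bits([0, 1, 0, 0, 1, 1, 0, 1]),  # differs in all 8 bits
        pack_bits([1, 0, 1, 0, 0, 0, 1, 0]),  # differs in 1 bit
    ]
    print(rank_by_hamming(query, texts))
```

Running it prints `[(0, 1), (2, 1), (1, 8)]`: the two text items one bit away from the image query rank first, and the complementary code ranks last. XOR plus a bit count is what makes short binary codes cheap to compare at big data scale.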

Detailed Description of the Embodiments

[0047] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings and examples. The following examples illustrate the present invention but are not intended to limit its scope.

[0048] In the present invention, the terms "first" and "second" are used only for distinction and should not be understood as indicating or implying relative importance. The term "plurality" means two or more, unless otherwise clearly defined.

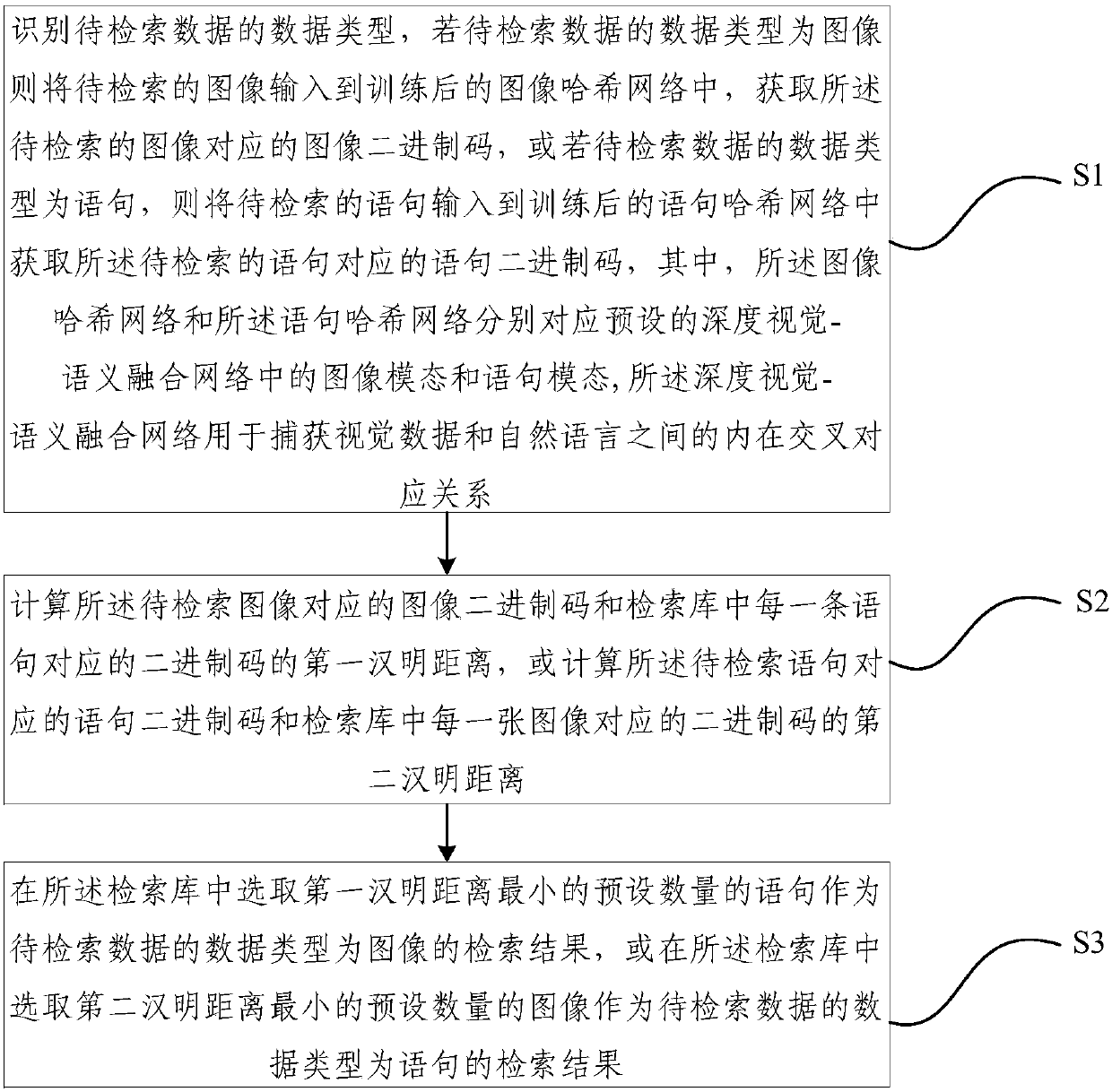

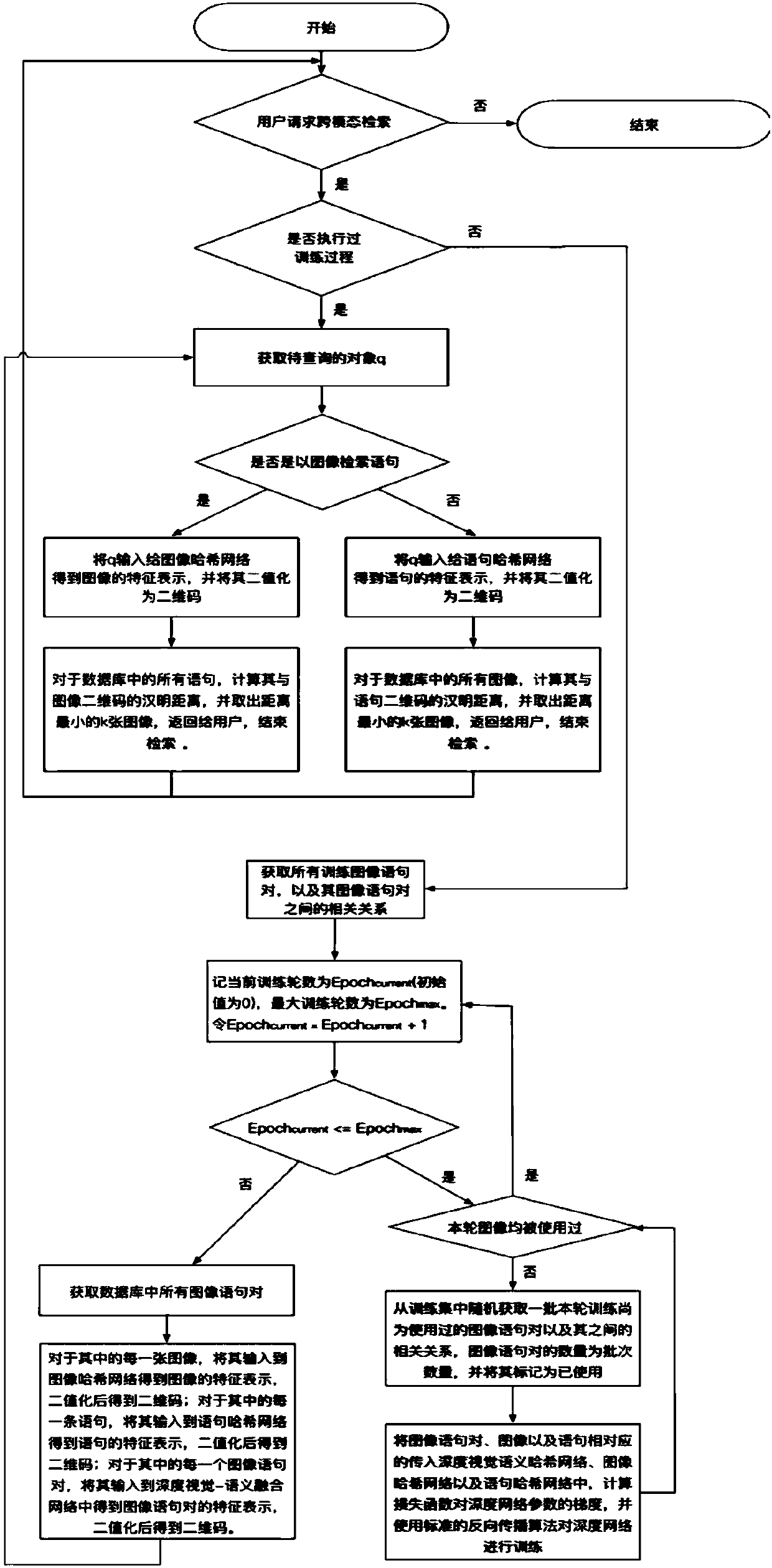

[0049] Figure 1 is a flowchart of the deep fusion hash-based big data cross-modal retrieval method provided by an embodiment of the present invention. As shown in Figure 1, the method includes:

[0050] S1. Identify the data type of the data to be retrieved. If the data to be retrieved is an image, input it into the trained image hash network and obtain the imag...
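Step S1 routes a query to a modality-specific hash network according to its data type. The Python sketch below shows only that dispatch, under loudly stated assumptions: each query arrives with an explicit `kind` tag ("image" or "text") and a pre-extracted feature vector, and the trained image and text hash networks are replaced by hypothetical stand-ins built by `make_linear_hasher` (a fixed random projection followed by sign binarization, a common final step in deep hashing). It does not reproduce the patent's networks or the rest of step S1, which is truncated in the source.

```python
import random
from typing import Callable, Dict, List

Vector = List[float]
Hasher = Callable[[Vector], List[int]]


def make_linear_hasher(in_dim: int, n_bits: int, seed: int) -> Hasher:
    """Hypothetical stand-in for a trained hash network: a fixed random linear
    projection whose outputs are binarized with sign() into {-1, +1} bits."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(n_bits)]

    def hasher(features: Vector) -> List[int]:
        if len(features) != in_dim:
            raise ValueError(f"expected {in_dim} features, got {len(features)}")
        # sign(0) is mapped to +1 so every bit is defined.
        return [1 if sum(w * x for w, x in zip(row, features)) >= 0 else -1 for row in weights]

    return hasher


# Assumed registry of per-modality hashers; dimensions and seeds are illustrative only.
HASHERS: Dict[str, Hasher] = {
    "image": make_linear_hasher(in_dim=4, n_bits=8, seed=0),  # stand-in for the image hash network
    "text": make_linear_hasher(in_dim=3, n_bits=8, seed=1),   # stand-in for the text hash network
}


def hash_query(kind: str, features: Vector) -> List[int]:
    """Identify the query's data type and route it to the matching hash network."""
    try:
        hasher = HASHERS[kind]
    except KeyError:
        raise ValueError(f"unsupported data type: {kind!r}") from None
    return hasher(features)


if __name__ == "__main__":
    code = hash_query("image", [0.2, -1.0, 0.5, 0.3])
    print(code)  # 8 values, each -1 or +1
```

Keeping one hasher per modality behind a single lookup mirrors the branching in step S1: adding another modality would only mean registering another entry, while both hashers emit codes of the same length so they can be compared in one Hamming space.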
