Parallel processing method for multi-input multi-output matrix convolution

A parallel processing technology for multi-input multi-output convolution, applicable to neural network learning methods, neural network architectures, biological neural network models, and similar fields. It addresses problems such as the small scale of convolution kernels and the resulting difficulty in exploiting computing advantages, and it achieves high-performance computation, ease of operation, and improved computational efficiency.

Embodiment Construction

[0029] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0030] As shown in Figure 7, the parallel processing method for multi-input multi-output matrix convolution of the present invention comprises the following steps:

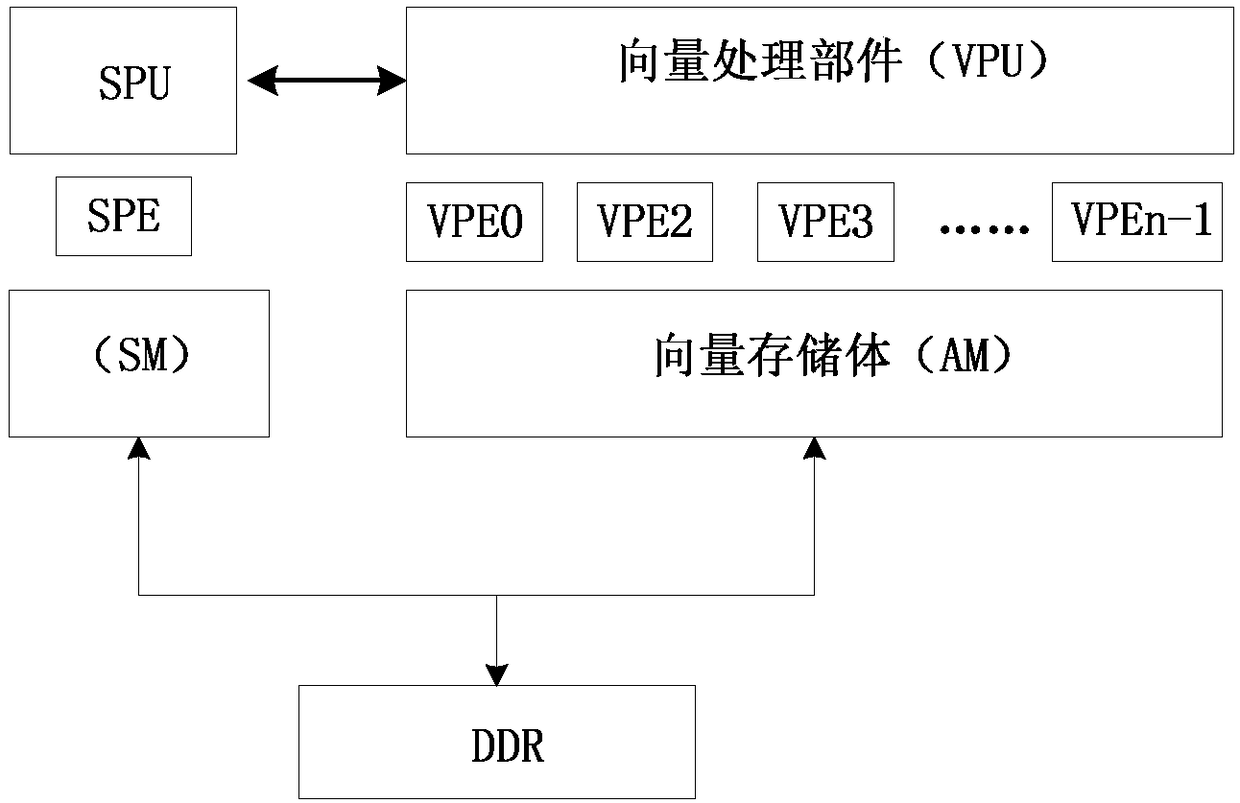

[0031] S1: Determine the optimal calculation scheme for the output feature maps according to the number N of vector processing units (VPEs) in the vector processor, the number M of input feature maps, the number P of convolution kernels, the convolution kernel size k, and the stride s;
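The excerpt does not spell out what the "optimal calculation scheme" of S1 is. As a minimal sketch, assuming a valid (unpadded) convolution and one convolution kernel assigned to each VPE, the basic quantities S1 depends on can be computed as below. `output_size` and `plan` are hypothetical helpers introduced for illustration, not part of the patent.

```python
import math


def output_size(h: int, w: int, k: int, s: int) -> tuple:
    """Output feature-map height and width for a k x k kernel with stride s.

    Assumption: no padding (the excerpt does not state a padding rule).
    """
    return (h - k) // s + 1, (w - k) // s + 1


def plan(n_vpe: int, m: int, p: int, k: int, s: int, h: int, w: int) -> dict:
    """Hypothetical planning helper for an array of n_vpe vector units.

    Assumes each VPE computes one output feature map (one kernel) at a time,
    so P kernels are processed in groups of at most N.
    """
    out_h, out_w = output_size(h, w, k, s)
    kernels_per_pass = min(n_vpe, p)          # S2 states N <= P
    passes = math.ceil(p / kernels_per_pass)  # kernel groups handled in turn
    return {
        "out_h": out_h,
        "out_w": out_w,
        "kernels_per_pass": kernels_per_pass,
        "passes": passes,
        "macs_per_output": k * k * m,         # multiply-accumulates per output pixel
    }
```

For example, with N = 16 VPEs, M = 3 input maps of 32 × 32, P = 64 kernels, k = 3 and s = 1, this sketch gives 30 × 30 output maps computed in 4 passes of 16 kernels, with 27 multiply-accumulates per output pixel.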

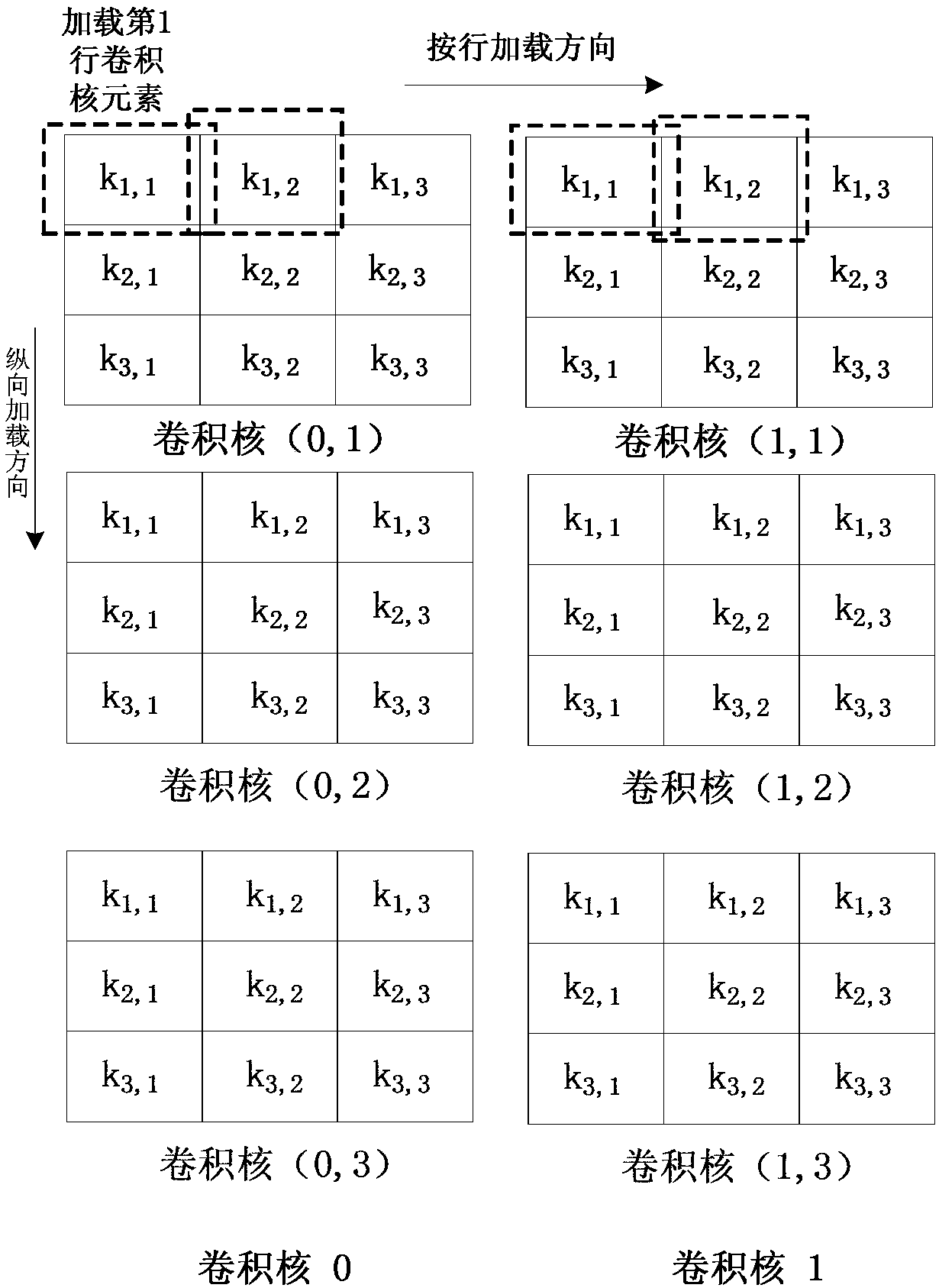

[0032] S2: Store the M input feature maps sequentially in the external DDR memory; splice N of the convolution kernels along the third dimension, row by row, and transfer the spliced convolution kernel matrix into the vector memory bank of the vector processor, where N ≤ P;
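The phrase "splice along the third dimension, row by row" admits more than one memory layout. One plausible reading, sketched below as an assumption, gives each of the N kernels (indexed `kernels[n][r][c][m]`, size k × k × M) its own column, iterating over the third dimension in the outer loop and over kernel rows, then columns, inside it. Each row of the resulting (M·k·k) × N matrix can then be loaded as a single vector holding one weight per VPE. `splice_kernels` is a hypothetical helper.

```python
def splice_kernels(kernels):
    """Splice N kernels into an (M*k*k) x N matrix (assumed layout).

    kernels[n][r][c][m]: kernel n, row r, column c, channel m (third dimension).
    Row index of the result = (m * k + r) * k + c; column n belongs to kernel n.
    """
    n_k = len(kernels)              # N kernels, one per VPE lane
    k = len(kernels[0])             # kernel height (= width)
    m_ch = len(kernels[0][0][0])    # depth M of each kernel
    spliced = []
    for m in range(m_ch):           # third dimension in the outer loop
        for r in range(k):          # then row by row
            for c in range(k):
                # One vector row: the same (r, c, m) weight taken from every kernel.
                spliced.append([kernels[n][r][c][m] for n in range(n_k)])
    return spliced
```

With this layout, consecutive vector loads walk through a kernel window in the same order in which the input elements are visited, which keeps the weight fetches contiguous.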

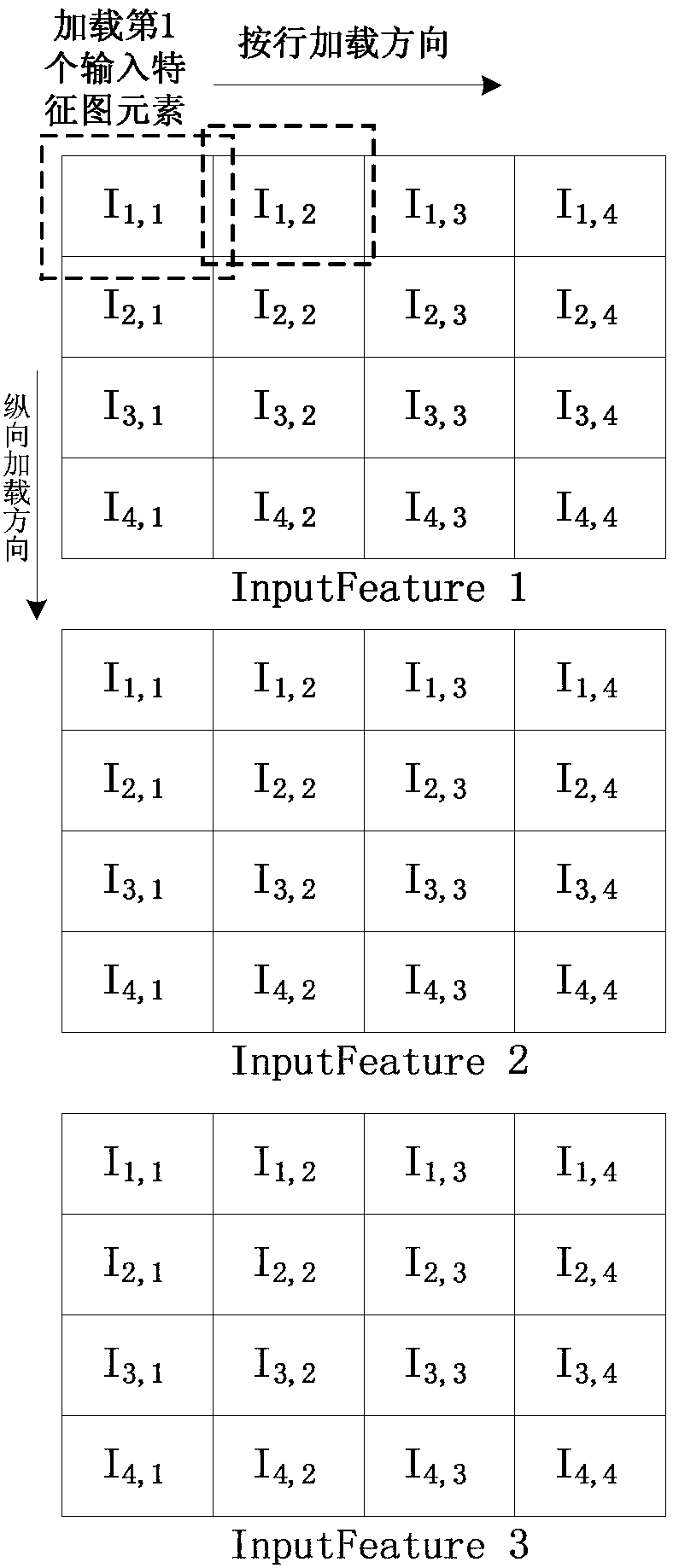

[0033] S3: Load the first element of input feature map 1 and broadcast it to the vector regi...
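The excerpt breaks off during S3, so the remainder of the step is not known from this text. A common way to use a spliced kernel matrix is to broadcast one input element to all N lanes, multiply it by one row of the matrix, and accumulate, so that N output feature maps are produced in parallel. The scalar Python simulation below illustrates that assumed scheme. It assumes no padding, input maps indexed `fmaps[m][y][x]`, and the row order (m, r, c) of a channel-outer splice. `conv_broadcast` is a hypothetical name, and the loop order is an assumption, not the patent's stated procedure.

```python
def conv_broadcast(fmaps, spliced, k, s):
    """Simulate N VPE lanes computing N output maps by broadcast-multiply-accumulate.

    fmaps[m][y][x]: M input feature maps.
    spliced[(m * k + r) * k + c][n]: weight (r, c, m) of kernel n (assumed layout).
    Returns out[n][i][j] for a valid (unpadded) convolution with stride s.
    """
    m_ch = len(fmaps)
    h, w = len(fmaps[0]), len(fmaps[0][0])
    n_vpe = len(spliced[0])
    out_h, out_w = (h - k) // s + 1, (w - k) // s + 1
    out = [[[0] * out_w for _ in range(out_h)] for _ in range(n_vpe)]
    for i in range(out_h):
        for j in range(out_w):
            acc = [0] * n_vpe                           # one accumulator per VPE
            for m in range(m_ch):
                for r in range(k):
                    for c in range(k):
                        x = fmaps[m][i * s + r][j * s + c]  # scalar to broadcast
                        row = spliced[(m * k + r) * k + c]  # N weights, one per lane
                        # Vector multiply-accumulate: every lane uses the same x.
                        acc = [a + x * wt for a, wt in zip(acc, row)]
            for n in range(n_vpe):
                out[n][i][j] = acc[n]
    return out
```

On real hardware the inner list comprehension would correspond to a single vector multiply-accumulate instruction across the N VPEs; the simulation only checks that the data layout yields the same result as a direct convolution.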

