Projection fully convolutional network three-dimensional model segmentation method based on multi-view feature fusion

A fully convolutional network and 3D model technology, applied in the field of projection-based fully convolutional network 3D model segmentation, that addresses the problems of prolonged framework training time, time-consuming viewpoint selection, and low practicability.

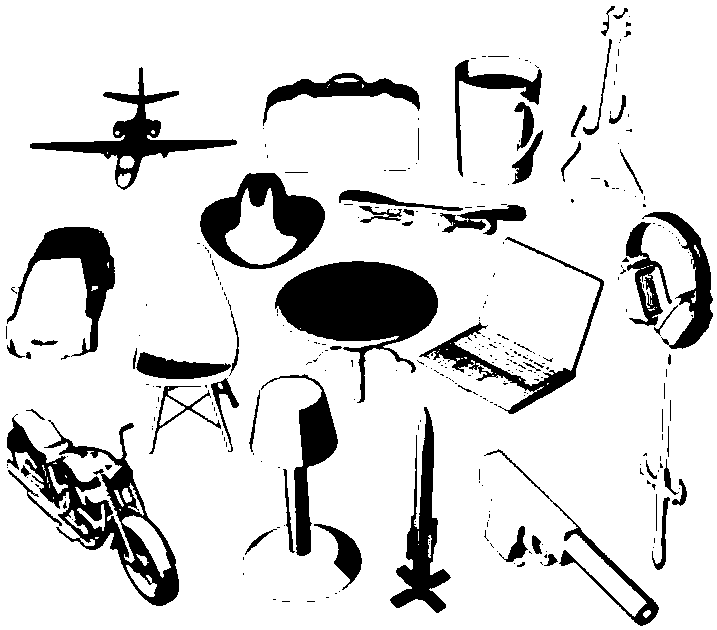

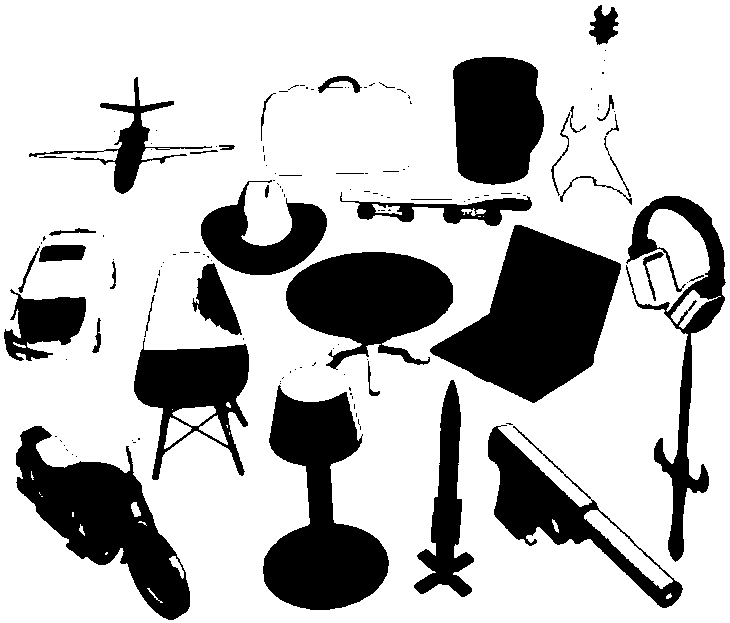

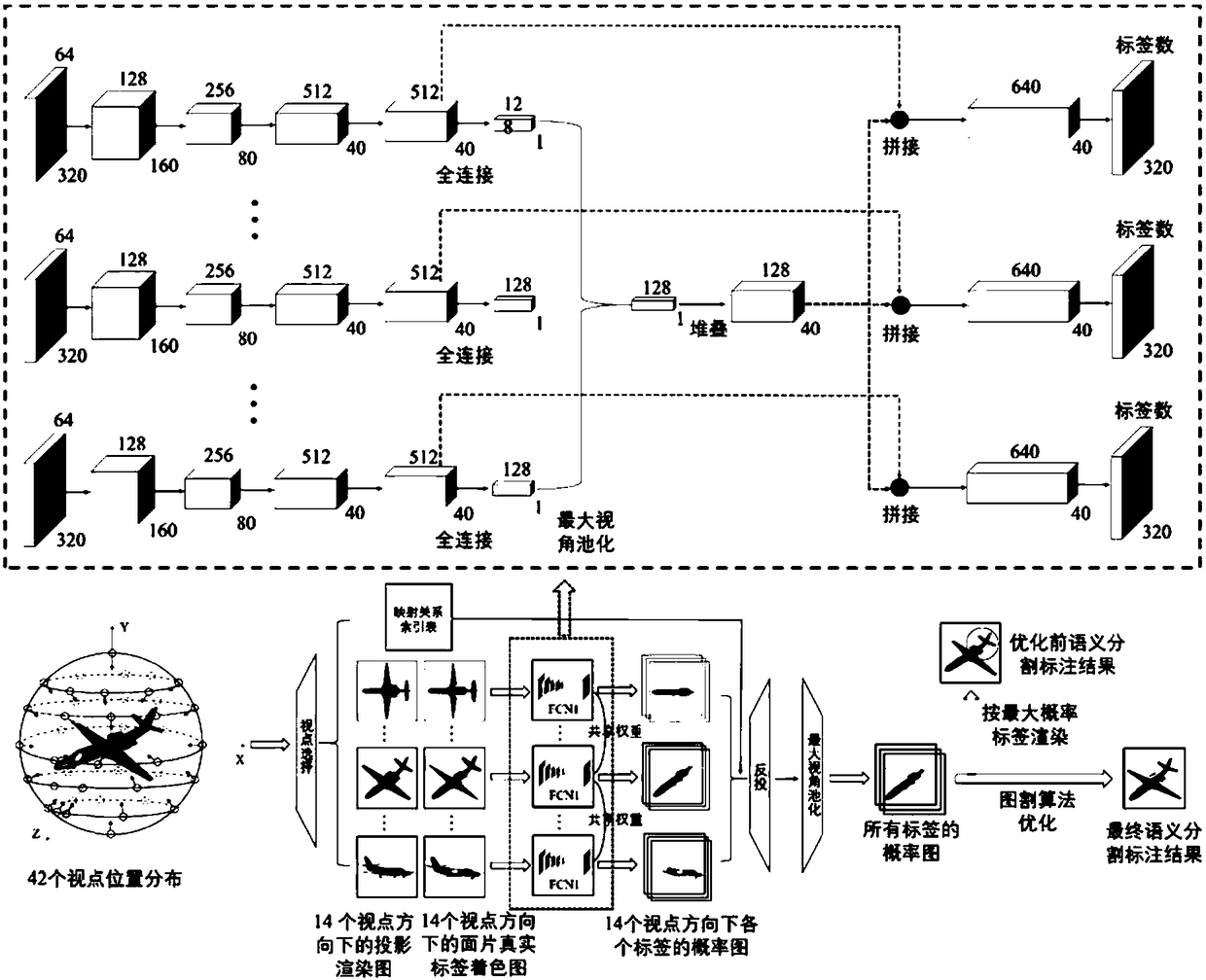

[0104] The objective of the present invention is illustrated in Figure 1a and Figure 1b: Figure 1a shows the original unsegmented model, and Figure 1b shows the color-rendered result of the semantic segmentation labels. The overall structure of the method is shown in Figure 2. The steps of the present invention are described below with reference to an example.

[0105] Step (1): Collect data from the input three-dimensional mesh model data set S. Taking a model s as an example, this step is divided into the following sub-steps:

[0106] Step (1.1): From 42 fixed viewpoints, select the 14 viewpoints that maximize coverage of the model's patches;

[0107] Step (1.1.1): Set 42 fixed viewpoints, as shown in Figure 3. The distance between each viewpoint and the coordinate origin is chosen so that the model's projection images in all viewpoint directions fill the rendering window as much as possible. The size of the rendering window in this experiment is set to 320×320, and th...
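The selection in Step (1.1) can be illustrated with a small sketch. The text visible here does not state how the 14 viewpoints are chosen, so the code below assumes a simple greedy maximum-coverage heuristic, which is not necessarily the patent's procedure. The function name `select_viewpoints` and the `visible` mapping (viewpoint index to the set of patch indices visible from that viewpoint) are hypothetical; how visibility is computed is left out.

```python
from typing import Dict, List, Set


def select_viewpoints(visible: Dict[int, Set[int]], k: int) -> List[int]:
    """Greedily pick k viewpoints that together cover many patches.

    `visible` is a hypothetical input: it maps each candidate viewpoint
    index to the set of patch indices visible from that viewpoint.
    This is an assumed heuristic, not the method stated in the patent.
    """
    covered: Set[int] = set()      # patches seen by the viewpoints chosen so far
    chosen: List[int] = []         # selected viewpoint indices, in pick order
    remaining = set(visible)       # candidate viewpoints not yet chosen

    for _ in range(min(k, len(visible))):
        # Take the viewpoint that adds the most not-yet-covered patches.
        # Iterating in sorted order makes ties resolve to the lowest index.
        best = max(sorted(remaining), key=lambda v: len(visible[v] - covered))
        chosen.append(best)
        covered |= visible[best]
        remaining.remove(best)
    return chosen


def coverage(visible: Dict[int, Set[int]], chosen: List[int]) -> int:
    """Count the distinct patches visible from the chosen viewpoints."""
    seen: Set[int] = set()
    for v in chosen:
        seen |= visible[v]
    return len(seen)
```

In the setting described above, `visible` would hold 42 entries and `k` would be 14. Greedy selection is only an approximation of the best achievable coverage, but it is simple and runs quickly for 42 candidates.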

