Three-dimensional point cloud matching method and system

A 3D point cloud matching method and system, applied in the field of image processing. It addresses large matching errors between feature points, complex registration of color images with depth data, and the inability to guarantee high-precision matching of the final 3D point cloud. Its effects are that accuracy is preserved without loss, high-precision matching is achieved, and matching accuracy is improved.

Examples

Embodiment 1

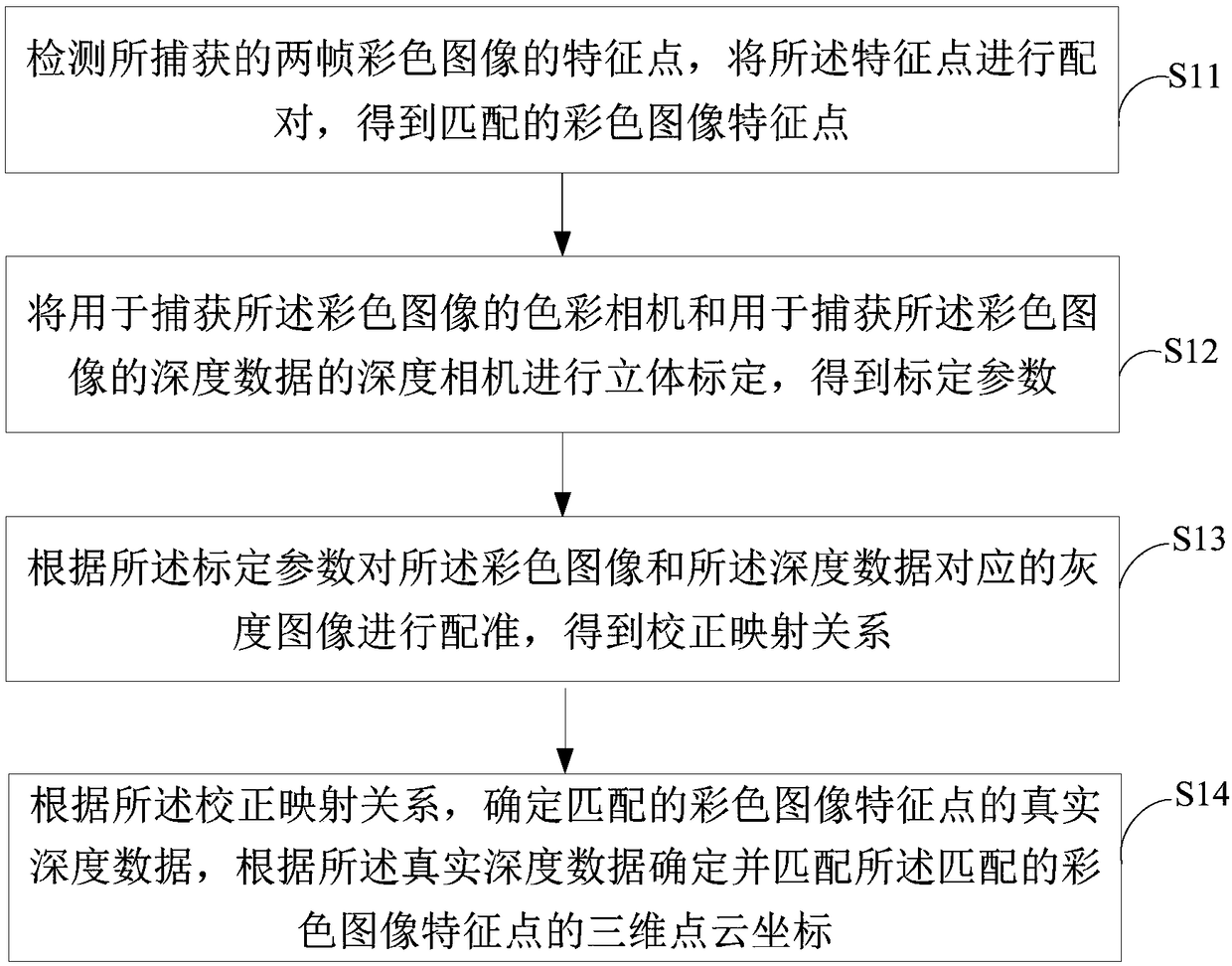

[0024] Figure 1 shows a flow chart of the 3D point cloud matching method provided in the first embodiment of the present invention, described in detail as follows:

[0025] Step S11: detect feature points in two captured frames of color images, and pair the feature points to obtain matched color image feature points;
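Step S11 does not name the detector or the pairing rule. As an illustration only, the sketch below pairs binary descriptors (for example ORB's bit strings, stored here as Python ints) by Hamming distance, keeping a pair only if it passes Lowe's ratio test and the two points are mutual nearest neighbours. The function names and the 0.8 ratio are assumptions, not taken from the patent, and feature detection itself is left out.

```python
from typing import List, Sequence, Tuple

Match = Tuple[int, int, int]  # (index in frame 1, index in frame 2, Hamming distance)


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def _nearest(d: int, pool: Sequence[int]) -> Tuple[int, float, float]:
    """Return (best_index, best_distance, second_best_distance) of d within pool."""
    best_i, best, second = -1, float("inf"), float("inf")
    for i, p in enumerate(pool):
        dist = hamming(d, p)
        if dist < best:
            best_i, best, second = i, dist, best
        elif dist < second:
            second = dist
    return best_i, best, second


def pair_features(desc1: Sequence[int], desc2: Sequence[int], ratio: float = 0.8) -> List[Match]:
    """Hypothetical pairing step: ratio test plus mutual nearest-neighbour cross-check."""
    matches: List[Match] = []
    for i, d in enumerate(desc1):
        j, best, second = _nearest(d, desc2)
        if j < 0:
            continue  # frame 2 has no descriptors
        # Lowe's ratio test: reject pairs whose best match is not clearly better
        if second != float("inf") and best >= ratio * second:
            continue
        # Cross-check: desc1[i] must also be the nearest neighbour of desc2[j]
        back_i, _, _ = _nearest(desc2[j], desc1)
        if back_i == i:
            matches.append((i, j, int(best)))
    return matches
```

The cross-check and ratio test both discard ambiguous pairs early, which leaves less work for the later filtering of wrongly paired points.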

[0026] 3D point cloud matching of color image feature points is a necessary step for robot motion estimation between consecutive frames and for loop closure detection, and its correctness directly affects the accuracy of RGB-D visual SLAM. Before the feature points of the color images are matched as 3D point clouds, feature points must first be detected on two consecutive frames of color images; the feature points of the two frames are then paired, wrongly paired feature points are filtered out, and the matched color image feature points are retained.
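The excerpt does not specify how wrongly paired feature points are removed. One common heuristic in ORB-based RGB-D SLAM pipelines, shown here as an assumption rather than as the patent's method, keeps a pair only if its descriptor distance is at most max(2 × minimum distance, 30); geometric checks such as RANSAC are a stronger alternative. `filter_matches` and its default values are hypothetical.

```python
from typing import List, Tuple

Match = Tuple[int, int, int]  # (index in frame 1, index in frame 2, descriptor distance)


def filter_matches(matches: List[Match], factor: float = 2.0, floor: float = 30.0) -> List[Match]:
    """Drop pairings whose descriptor distance is far above the best pairing.

    A pair is kept when distance <= max(factor * min_distance, floor); the floor
    stops the threshold collapsing to zero when the best pair is an exact match.
    """
    if not matches:
        return []
    min_dist = min(m[2] for m in matches)
    threshold = max(factor * min_dist, floor)
    return [m for m in matches if m[2] <= threshold]
```

For example, with distances 10, 25, 45 and 80 the threshold is max(20, 30) = 30, so only the first two pairs survive.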

[0027] Preferably, said detecting feature points of th...
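Matching color image feature points as a 3D point cloud requires lifting each matched pixel to a 3D point from its depth reading and the camera's calibration parameters. A minimal sketch, assuming a pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, depth stored in millimetres, the same camera for both frames, and a depth image already aligned pixel-for-pixel with the color image (the excerpt does not say how the patent establishes that alignment):

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Hypothetical pinhole calibration parameters of the color camera."""
    fx: float
    fy: float
    cx: float
    cy: float
    depth_scale: float = 1000.0  # raw depth units per metre (millimetres assumed)


def back_project(u: float, v: float, raw_depth: float, k: Intrinsics) -> Optional[Point3]:
    """Lift pixel (u, v) with a raw depth reading to a 3D point in the camera frame."""
    if raw_depth <= 0:
        return None  # no valid depth measurement at this pixel
    z = raw_depth / k.depth_scale
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)


def matched_points_3d(
    kps1: Sequence[Tuple[float, float]],
    kps2: Sequence[Tuple[float, float]],
    depth1: Sequence[Sequence[float]],
    depth2: Sequence[Sequence[float]],
    matches: Sequence[Tuple[int, int, int]],
    k: Intrinsics,
) -> List[Tuple[Point3, Point3]]:
    """Turn matched 2D feature pairs into 3D point pairs.

    Depth images are row-major (depth[v][u]) and assumed aligned with the color images.
    """
    pairs: List[Tuple[Point3, Point3]] = []
    for i, j, _dist in matches:
        (u1, v1), (u2, v2) = kps1[i], kps2[j]
        p1 = back_project(u1, v1, depth1[round(v1)][round(u1)], k)
        p2 = back_project(u2, v2, depth2[round(v2)][round(u2)], k)
        if p1 is not None and p2 is not None:  # skip pixels without depth
            pairs.append((p1, p2))
    return pairs
```

Pairs whose pixel has no valid depth reading are skipped, since a zero depth carries no 3D information.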

Embodiment 2

[0073] Figure 5 shows a structural diagram of the 3D point cloud matching system provided by the second embodiment of the present invention. The 3D point cloud matching system can be applied to various terminals. It includes a feature point matching unit 51, a calibration parameter determining unit 52, a correction mapping relationship determining unit 53, and a 3D point cloud matching unit 54, wherein:

[0074] The feature point matching unit 51 is configured to detect feature points in two captured frames of color images and to pair the feature points to obtain matched color image feature points;

[0075] Preferably, the feature point matching unit 51 specifically includes:
