Deep convolutional adversarial neural network-based human body action radar image classification method

The invention relates to radar image and neural network technology, applied in fields such as instruments, character and pattern recognition, and computer parts. It addresses the lack of a unified and effective framework in human action and behavior recognition research and in the analysis and classification methods of related technologies, and it improves classification accuracy.

Embodiment Construction

[0022] To make the technical solution of the present invention clearer, specific embodiments of the present invention are further described below. The present invention is implemented according to the following steps:

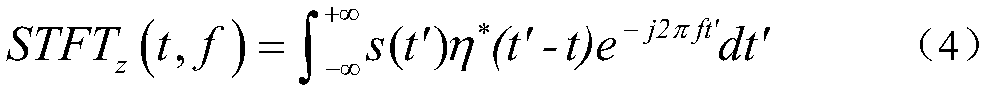

[0023] 1. Radar time-frequency image dataset construction

[0024] The present invention uses the MOCAP data set established by the Graphics Laboratory of Carnegie Mellon University. The data are collected based on the human ellipsoid motion model, which is derived from the Boulic human gait model, a global human gait model proposed by Boulic in 1990. This model describes the echo of a human target and divides the human body into ten scattering parts: the head, chest cavity, left upper arm, right upper arm, left forearm, right forearm, left thigh, right thigh, left calf and right calf. Different limb movements have different motion curve equations, and the echo form of the human body is the sum of all the different ...
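The idea that the body echo is a sum over scattering parts, later turned into a time-frequency image, can be illustrated with a minimal sketch. It is not the patented procedure: each part is treated as a single point scatterer rather than an ellipsoid, the sinusoidal motion stands in for the Boulic motion curves, and the names `simulate_echo` and `spectrogram` as well as all numeric values (wavelength, sampling rate, swing amplitudes) are hypothetical choices made only for illustration.

```python
import numpy as np

# The ten scattering parts named in the description.
PARTS = ["head", "chest cavity", "left upper arm", "right upper arm",
         "left forearm", "right forearm", "left thigh", "right thigh",
         "left calf", "right calf"]

def simulate_echo(ranges, amplitudes, wavelength):
    """Sum the returns of all parts (assumption: one point scatterer per part).

    ranges: array (n_parts, n_samples), radial distance to the radar in metres.
    amplitudes: array (n_parts,), relative reflectivity of each part.
    """
    ranges = np.asarray(ranges, dtype=float)
    amplitudes = np.asarray(amplitudes, dtype=float)
    phase = -4.0 * np.pi * ranges / wavelength  # two-way propagation phase
    return (amplitudes[:, None] * np.exp(1j * phase)).sum(axis=0)

def spectrogram(signal, win_len=128, hop=16):
    """Magnitude short-time Fourier transform, zero Doppler in the centre row."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    frames = np.stack([signal[i * hop:i * hop + win_len] * window
                       for i in range(n_frames)])
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return np.abs(spectrum).T  # shape: (frequency bins, time frames)

# Toy motion (hypothetical, NOT the Boulic curves): every part oscillates
# around 5 m with its own swing amplitude and starting phase.
fs = 1000.0                       # assumed sampling rate in Hz
t = np.arange(2000) / fs          # 2 s of samples
swing = np.linspace(0.05, 0.5, len(PARTS))
phase0 = np.linspace(0.0, np.pi, len(PARTS))
ranges = 5.0 + swing[:, None] * np.sin(2 * np.pi * 1.0 * t[None, :] + phase0[:, None])

echo = simulate_echo(ranges, np.ones(len(PARTS)), wavelength=0.03)
image = spectrogram(echo)         # time-frequency image for one motion sample
```

In this sketch, parts with a larger swing produce a wider Doppler spread in `image`, which is the kind of micro-Doppler signature that a classifier would then be trained on.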
