A complex behavior recognition method

This recognition method, applied in the field of image recognition, addresses problems such as incomplete descriptors, deviation of global features, and loss of motion information. It improves the efficiency and accuracy of action recognition and reduces the computational difficulty.

Embodiment 1

[0094] Embodiment 1: As shown in Figure 7, a complex behavior recognition method includes the following steps:

[0095] (1) Use a depth sensor to capture the three-dimensional skeletal joint point information of the target's movement, obtaining the three-dimensional coordinates of each joint of the human body;

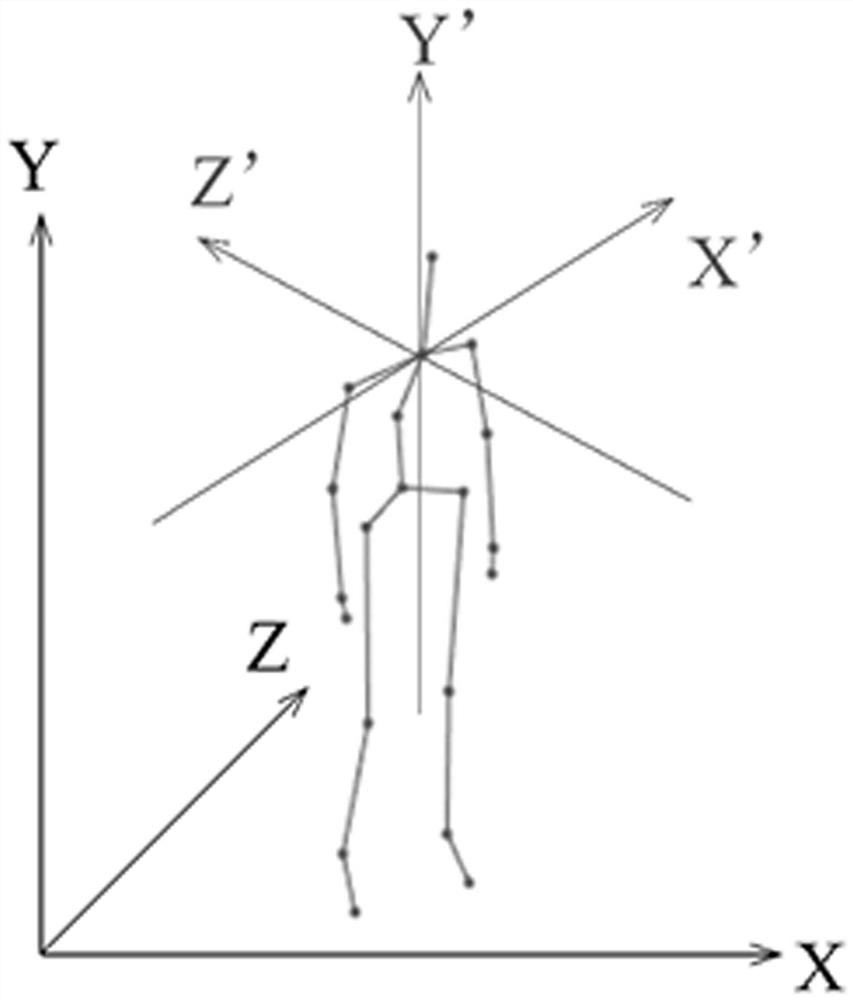

[0096] (2) Preprocess the skeletal joint point information and normalize the coordinate system. As shown in Figure 1, the horizontal axis is the vector from the left shoulder to the right shoulder, and the vertical axis is the vector from the hip to the midpoint of the shoulders. Normalization converts the X-Y-Z coordinate system into the X’-Y’-Z’ coordinate system;

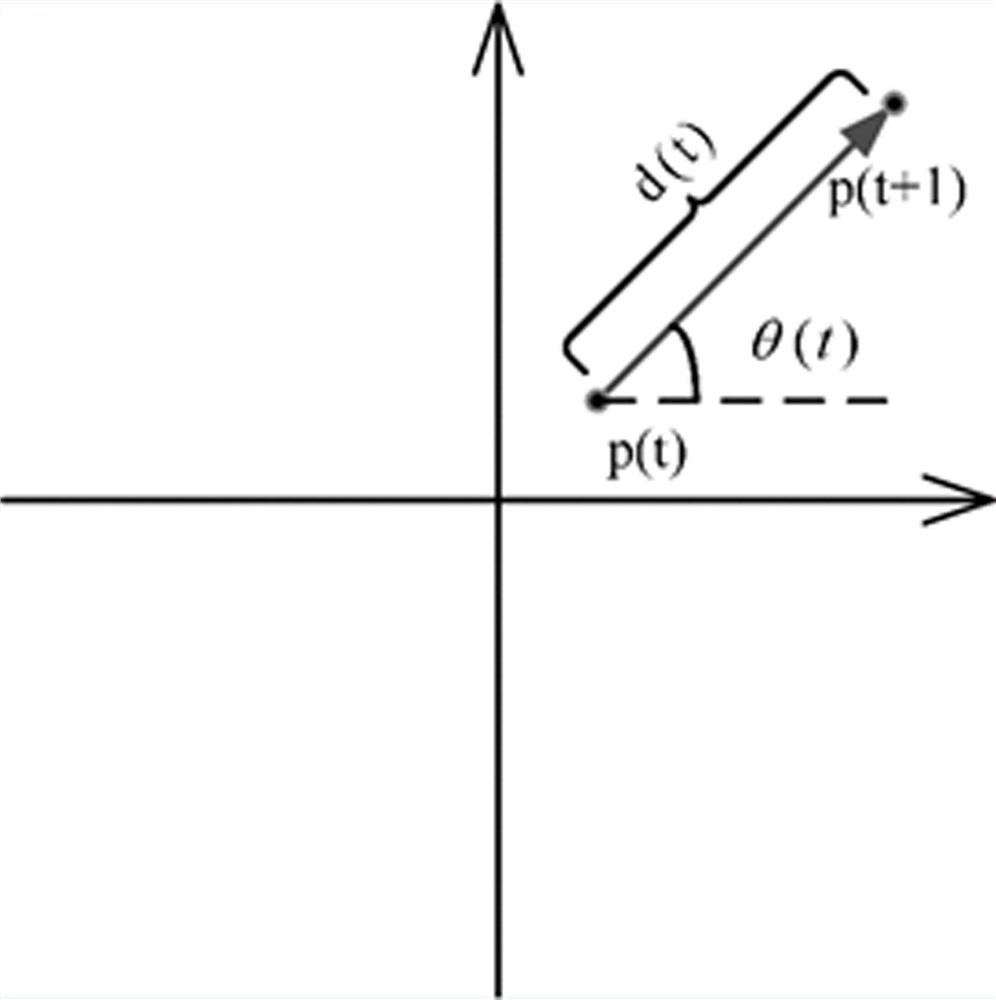
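The coordinate normalization in step (2) might be sketched as follows. This is only an illustration under stated assumptions, not the patent's procedure: the text specifies the two axis directions but not the origin, the third axis, or any scaling, so the sketch places the origin at the hip, orthogonalizes the vertical axis against the horizontal one, and takes the third axis as their cross product. The function names `body_frame` and `to_body_coords` are hypothetical.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(v):
    norm = math.sqrt(dot(v, v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / norm, v[1] / norm, v[2] / norm)

def body_frame(left_shoulder, right_shoulder, hip):
    """Return unit axes (X', Y', Z') of a body-centered frame.

    X' points from the left shoulder to the right shoulder.
    Y' follows the hip -> shoulder-midpoint vector, with its X' component
    removed so the axes are orthogonal (an assumption of this sketch).
    Z' = X' x Y' (also an assumption; the source does not define it).
    """
    x_axis = unit(sub(right_shoulder, left_shoulder))
    mid = tuple((l + r) / 2.0 for l, r in zip(left_shoulder, right_shoulder))
    up = sub(mid, hip)
    along_x = dot(up, x_axis)
    y_axis = unit(tuple(u - along_x * x for u, x in zip(up, x_axis)))
    z_axis = cross(x_axis, y_axis)
    return x_axis, y_axis, z_axis

def to_body_coords(point, origin, axes):
    """Express a sensor-space point in the body frame (origin assumed at the hip)."""
    rel = sub(point, origin)
    return tuple(dot(rel, axis) for axis in axes)

if __name__ == "__main__":
    # Made-up sensor coordinates in meters, for illustration only.
    left_shoulder = (0.0, 1.4, 2.0)
    right_shoulder = (0.0, 1.4, 2.4)
    hip = (0.0, 0.9, 2.2)
    axes = body_frame(left_shoulder, right_shoulder, hip)
    print(to_body_coords(right_shoulder, hip, axes))
```

Because the axes are derived from the body itself, the same pose yields the same X’-Y’-Z’ coordinates regardless of how the person is oriented relative to the sensor, which is the apparent purpose of the normalization.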

[0097] (3) Connect the 3D coordinates of each skeletal joint point in the action sequence in chronological order to obtain the 3D trajectories of all skeletal joint points;

[0098] This embodiment adopts a 60-frame action sequence S (swinging with both hands) with 20 sk...
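Step (3) amounts to regrouping per-frame joint coordinates into one time-ordered trajectory per joint. A minimal sketch, assuming each frame is a list of (x, y, z) tuples in a fixed joint order and that frames are already sorted chronologically; the function name `joint_trajectories` and the sample data are hypothetical:

```python
def joint_trajectories(frames):
    """Regroup frames[t][j] = (x, y, z) into trajectories[j][t] = (x, y, z).

    frames: list of frames in chronological order; every frame must list
    the same joints in the same order.
    Returns one trajectory (a list of 3D points over time) per joint.
    """
    if not frames:
        return []
    num_joints = len(frames[0])
    for t, frame in enumerate(frames):
        if len(frame) != num_joints:
            raise ValueError(f"frame {t} has {len(frame)} joints, expected {num_joints}")
    return [[frame[j] for frame in frames] for j in range(num_joints)]

if __name__ == "__main__":
    # Three made-up frames with two joints each, for illustration only.
    frames = [
        [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.1, 0.0), (1.0, 0.2, 0.0)],
        [(0.0, 0.2, 0.0), (1.0, 0.4, 0.0)],
    ]
    for j, trajectory in enumerate(joint_trajectories(frames)):
        print(j, trajectory)
```

For a sequence of T frames and J joints, the result holds J trajectories of T points each.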
