Convolutional neural network-based multispectral image semantic segmentation method

This technology combines convolutional neural networks with multispectral imaging and applies to fields such as biological neural network models, image enhancement, and neural architectures. It addresses problems such as interference with image segmentation, loss of spatial information in high-resolution images, and wasted computing time, with the effect of improving and ensuring precision and improving work efficiency.

Examples

Embodiment 1

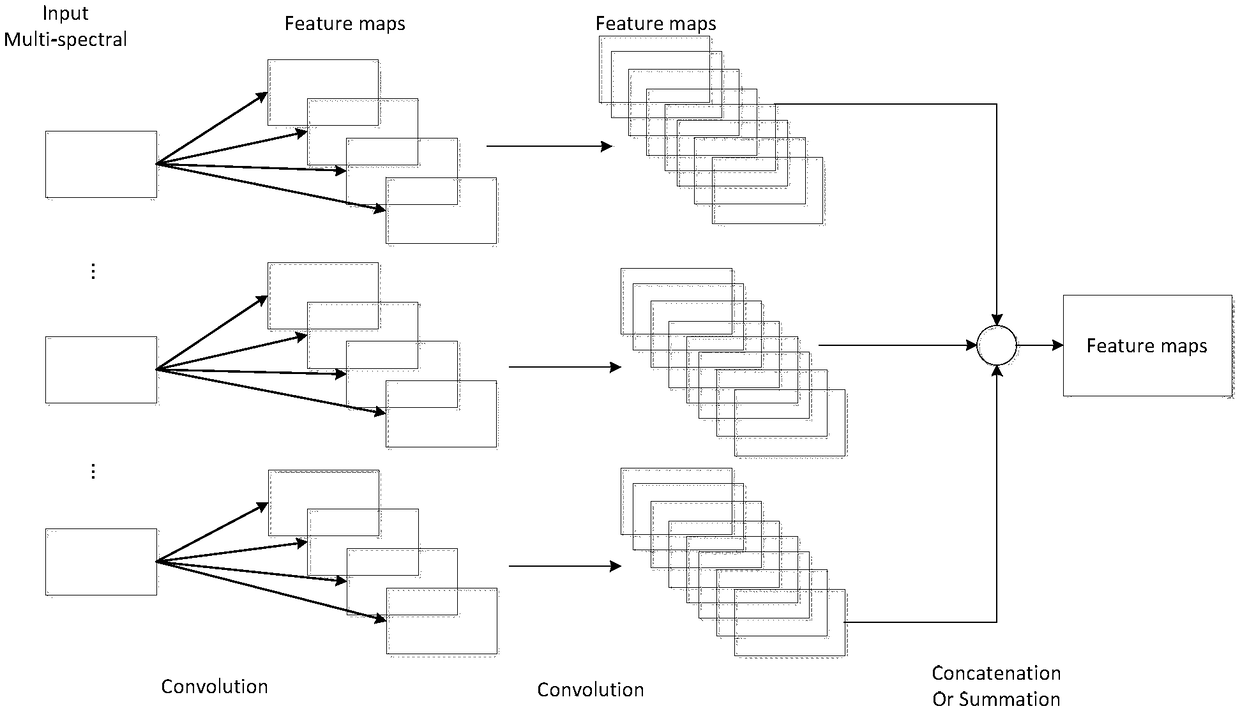

[0022] For a multispectral image, the bands are first separated by wavelength, and independent convolution operations are then performed on the different bands; that is, a convolutional neural network convolves each data channel of the multispectral image independently. The feature maps produced by these independent convolutions are then fused by concatenation or summation. When each data channel is convolved independently, convolution kernels of different sizes and numbers, as well as different convolution layers, are selected according to the band. In this implementation, the convolutional neural network is a U-NET.
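The per-band scheme can be illustrated with a small pure-Python sketch. It is not the patented implementation: the band names, kernel values, and the helpers `conv2d_same`, `convolve_bands_independently`, and `fuse` are hypothetical; each band gets a single layer of parallel kernels (no bias, activation, training, or per-band depth); and zero "same" padding is assumed so that all feature maps share one spatial size and can be fused.

```python
from typing import Dict, List

Matrix = List[List[float]]


def conv2d_same(image: Matrix, kernel: Matrix) -> Matrix:
    """Cross-correlate one band with one odd-sized square kernel (as CNN layers do).

    Zero padding keeps the output the same height and width as the input,
    so feature maps from different bands can later be fused.
    """
    h, w, k = len(image), len(image[0]), len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:  # treat pixels outside the image as zero
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def convolve_bands_independently(
    bands: Dict[str, Matrix], kernels: Dict[str, List[Matrix]]
) -> Dict[str, List[Matrix]]:
    """Convolve every band only with its own kernel set; bands never mix here.

    The number and size of kernels may differ from band to band.
    """
    return {name: [conv2d_same(img, k) for k in kernels[name]] for name, img in bands.items()}


def fuse(features: Dict[str, List[Matrix]], mode: str = "concat") -> List[Matrix]:
    """Fuse per-band feature maps by channel concatenation or element-wise sum."""
    per_band = list(features.values())
    if mode == "concat":
        # Stack all feature maps along the channel axis, band by band.
        return [fmap for band_maps in per_band for fmap in band_maps]
    if mode == "sum":
        # Summation needs the same number of maps per band; map c of every band is added.
        counts = {len(maps) for maps in per_band}
        if len(counts) != 1:
            raise ValueError("sum fusion needs the same number of feature maps per band")
        fused = []
        for c in range(counts.pop()):
            maps_c = [maps[c] for maps in per_band]
            h, w = len(maps_c[0]), len(maps_c[0][0])
            fused.append([[sum(m[i][j] for m in maps_c) for j in range(w)] for i in range(h)])
        return fused
    raise ValueError(f"unknown fusion mode: {mode}")


# Hypothetical example: "red" uses one 1x1 kernel, "nir" uses a 3x3 and a 1x1 kernel.
bands = {"red": [[1.0] * 3 for _ in range(3)], "nir": [[1.0] * 3 for _ in range(3)]}
kernels = {"red": [[[1.0]]], "nir": [[[1.0] * 3 for _ in range(3)], [[2.0]]]}
features = convolve_bands_independently(bands, kernels)
stacked = fuse(features, "concat")
print(len(stacked))  # 3 feature maps: 1 from red + 2 from nir
print(stacked[1])    # [[4.0, 6.0, 4.0], [6.0, 9.0, 6.0], [4.0, 6.0, 4.0]]
```

Concatenation keeps every band's feature maps as separate channels, so bands may use different kernel counts. Summation, as assumed here, only works when each band yields the same number of maps, which is why `fuse` rejects mismatched counts in `"sum"` mode.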

Embodiment 2

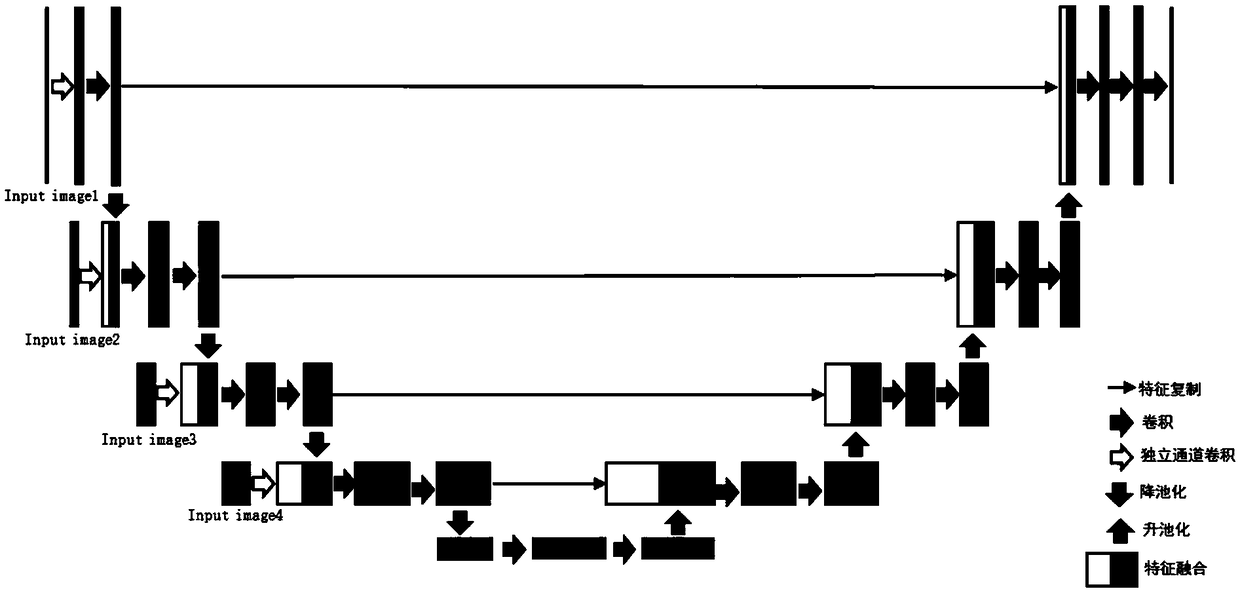

[0024] When the multispectral image has multiple resolutions, the second embodiment builds on the multi-channel independent convolution of the first embodiment and combines it with a multi-resolution input network. As shown in Figure 2, the U-NET network is modified into a convolutional neural network that supports inputs at multiple resolutions. Like the conventional U-NET network, the network of the present invention consists of a scale-contraction part and a scale-expansion part. The scale-contraction part is a classical convolutional network: as the convolution level increases, the image size is reduced by pooling, and the number of convolution kernels increases with the number of pooling operations. The scale-expansion part is the same as that of the U-NET network: each upsampling step doubles the scale and halves the number of convolution kernels...
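The size and kernel-count progression described for the scale-contraction and scale-expansion parts can be tabulated with a short Python sketch. Several assumptions are not stated in the text: pooling halves the spatial size, the kernel count doubles per pooling step (the classic U-Net choice; the text only says it increases), images are square, and the values 256, 64, and depth 4 are illustrative. Because the paragraph is cut off before describing how the additional resolutions are fed in, the helper `entry_level` is a purely hypothetical rule (a coarser band joins the contraction level whose size matches it), not the method of the invention.

```python
from typing import List, Tuple

Level = Tuple[int, int]  # (side length in pixels, number of convolution kernels)


def unet_schedule(input_size: int, base_kernels: int, depth: int) -> Tuple[List[Level], List[Level]]:
    """Return the (size, kernel count) of each level on the contraction and expansion paths.

    Assumption: each pooling halves the size and doubles the kernel count.
    Expansion path (as in the text): each upsampling doubles the size and
    halves the kernel count.
    """
    contraction: List[Level] = []
    size, kernels = input_size, base_kernels
    for level in range(depth + 1):
        contraction.append((size, kernels))
        if level < depth:
            if size % 2:
                raise ValueError("input size must be divisible by 2**depth")
            size, kernels = size // 2, kernels * 2
    expansion: List[Level] = []
    for _ in range(depth):
        size, kernels = size * 2, kernels // 2
        expansion.append((size, kernels))
    return contraction, expansion


def entry_level(band_size: int, input_size: int) -> int:
    """HYPOTHETICAL rule: a coarser band enters at the contraction level of matching size."""
    if band_size < 1:
        raise ValueError("band size must be positive")
    level, size = 0, input_size
    while size > band_size:
        size //= 2
        level += 1
    if size != band_size:
        raise ValueError("band size must equal input_size / 2**k")
    return level


contraction, expansion = unet_schedule(input_size=256, base_kernels=64, depth=4)
print(contraction)           # [(256, 64), (128, 128), (64, 256), (32, 512), (16, 1024)]
print(expansion)             # [(32, 512), (64, 256), (128, 128), (256, 64)]
print(entry_level(64, 256))  # 2: a 64x64 band would join the 64x64 contraction level
```

The expansion path mirrors the contraction path, so each upsampled level has the same size and kernel count as a contraction level. This is what allows the U-NET style skip connections between the two parts.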
