Mapping method for deep learning model configuration file to FPGA configuration file

A configuration-file and deep-learning technology, applied in software maintenance/management, version control, and source code creation/generation. It addresses problems such as inconvenience, increased errors, and a limited scope of promotion and application. Its effects are improved R&D efficiency, a reduced possibility of error, and reduced setup time.


Embodiment Construction

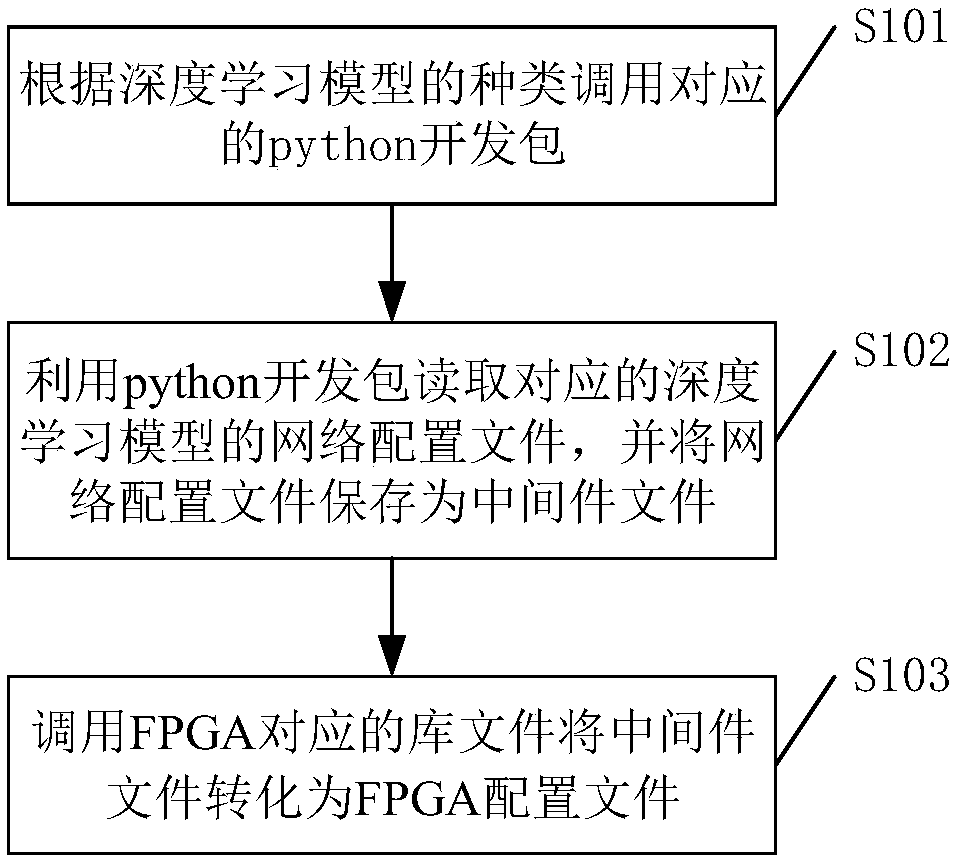

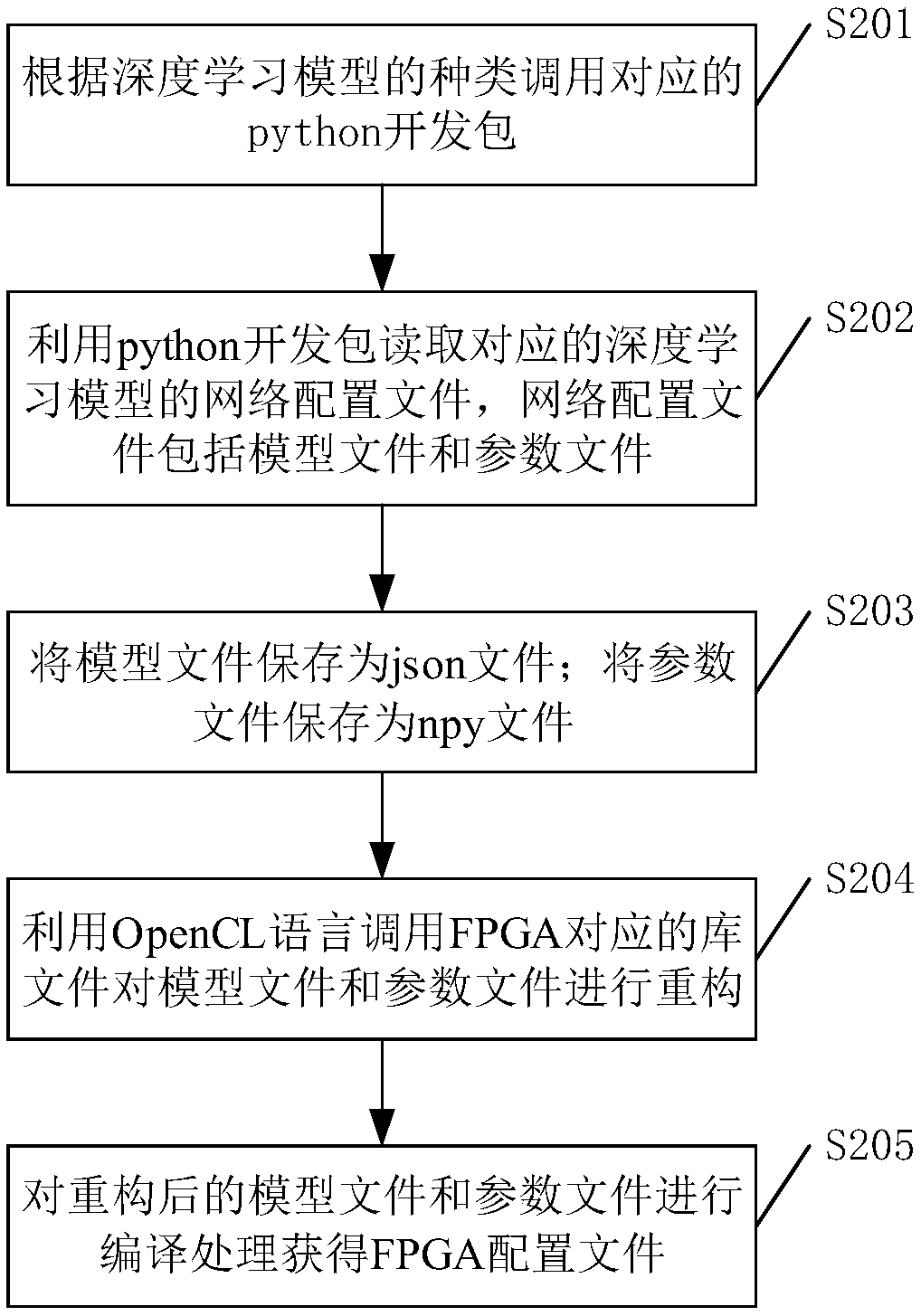

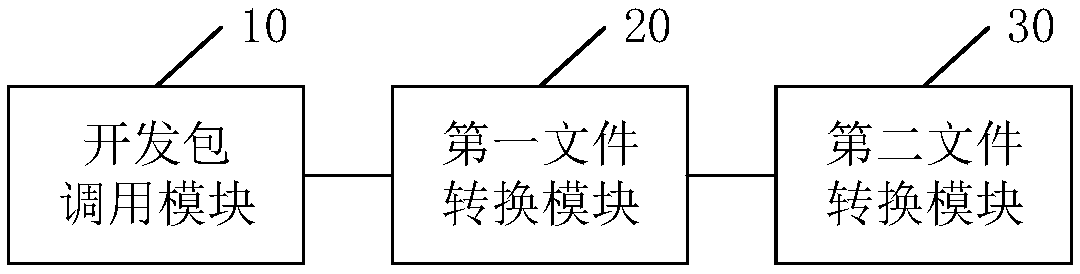

[0039] The core of this application is a method for mapping a deep learning model configuration file to an FPGA configuration file. The method can substantially reduce the time needed to set up a deep learning model configuration file on an FPGA and saves manpower, which reduces the possibility of human error and further improves R&D efficiency. Another core of the present application is a mapping device, equipment, and computer-readable storage medium for mapping a deep learning model configuration file to an FPGA configuration file, all of which have the beneficial effects described above.
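This excerpt does not disclose how the mapping itself is performed. Purely as an illustrative sketch of automating such a mapping instead of setting parameters by hand, the code below assumes a JSON model configuration that lists layers and an FPGA configuration expressed as `key=value` register entries. Both file formats, the opcode table `LAYER_OPCODES`, and the functions `map_layer` and `map_model_config` are hypothetical and are not taken from the patent.

```python
import json
from typing import Any

# Hypothetical opcode table: the numeric code an FPGA accelerator might expect
# for each layer type. A real accelerator defines its own encoding.
LAYER_OPCODES = {"conv": 1, "pool": 2, "fc": 3}

# Numeric layer parameters copied to the FPGA config, in a fixed order
# so that the generated file is deterministic.
PARAM_KEYS = ("in_channels", "out_channels", "kernel", "stride")


def map_layer(index: int, layer: dict[str, Any]) -> list[str]:
    """Translate one layer description into hypothetical FPGA config entries."""
    layer_type = layer["type"]
    if layer_type not in LAYER_OPCODES:
        # Fail loudly instead of emitting a config the hardware cannot run.
        raise ValueError(f"layer {index}: unsupported type {layer_type!r}")
    prefix = f"LAYER{index}"
    entries = [f"{prefix}_OPCODE={LAYER_OPCODES[layer_type]}"]
    for key in PARAM_KEYS:
        if key in layer:
            entries.append(f"{prefix}_{key.upper()}={int(layer[key])}")
    return entries


def map_model_config(model_json: str) -> str:
    """Map a whole model config (JSON text) to key=value FPGA config lines."""
    model = json.loads(model_json)
    layers = model["layers"]
    lines = [f"NUM_LAYERS={len(layers)}"]
    for i, layer in enumerate(layers):
        lines.extend(map_layer(i, layer))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    example = json.dumps({
        "layers": [
            {"type": "conv", "in_channels": 3, "out_channels": 16,
             "kernel": 3, "stride": 1},
            {"type": "fc", "in_channels": 16, "out_channels": 10},
        ]
    })
    print(map_model_config(example), end="")
```

Generating every entry from the model file in one pass, and rejecting unknown layer types, reflects the stated goals of shorter setup time and fewer manual errors; a real mapping would follow whatever configuration format the target FPGA design actually uses.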

[0040] To make the objectives, technical solutions, and advantages of the embodiments of the present application clearer, the technical solutions in the embodiments are described clearly and completely below with reference to the accompanying drawings of the embodiments. Obviously, the descr...


© 2025 PatSnap. All rights reserved.