Semantic Generation Method of Remote Sensing Image Based on Fast Region Convolutional Neural Network

The invention relates to convolutional neural networks and remote sensing image technology and is applied in the field of image semantic generation. It addresses three problems: existing methods cannot obtain the relationship between the targets and the image as a whole, cannot obtain the relationships between targets within an image, and are not sufficiently systematic.


Embodiment Construction

[0023] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

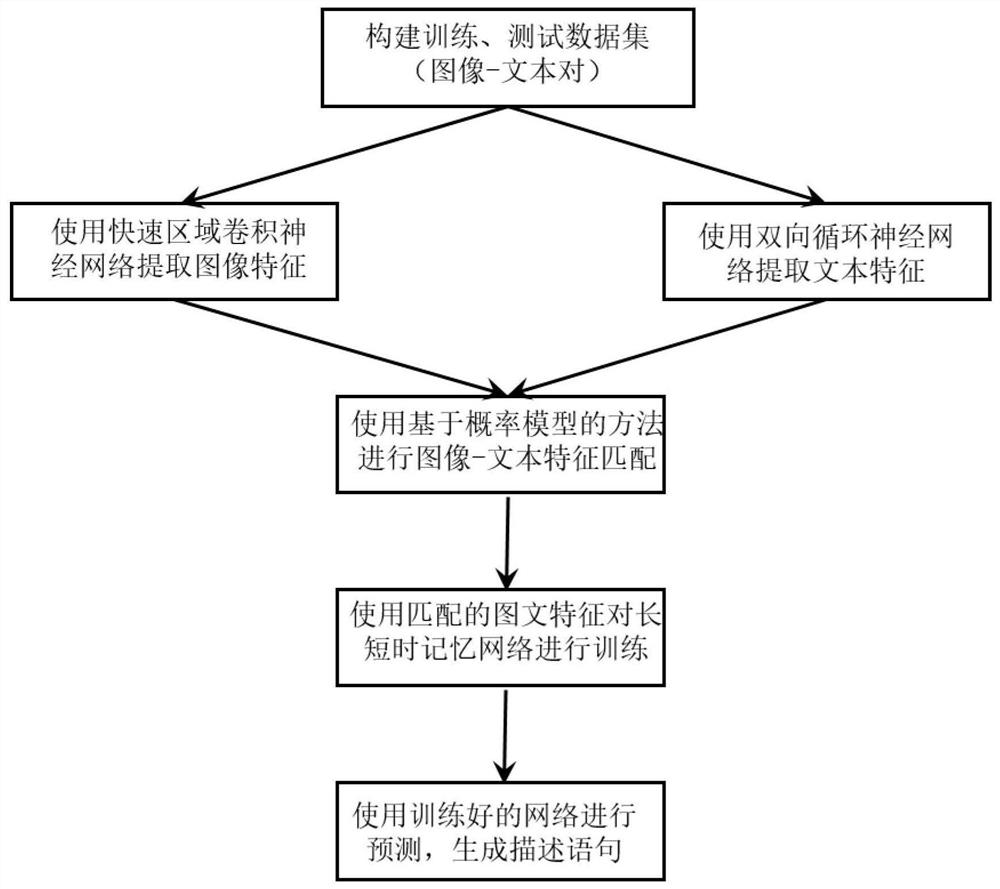

[0024] Referring to Figure 1, the implementation steps of the present invention are as follows.

[0025] Step 1: Construct the training sample set and the test sample set.

[0026] Download three remote sensing image semantic generation datasets, the UCM-Captions Data Set, the Sydney-Captions Data Set and RSICD, from the website of the State Key Laboratory of Surveying, Mapping and Remote Sensing at Wuhan University. In each dataset, use 60% of the image-text pairs as training samples and the remaining 40% as test samples.
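The per-dataset 60/40 split can be sketched as follows. This is a minimal illustration, assuming each dataset has already been loaded as a list of (image path, caption) tuples; the function names `split_pairs` and `split_each`, the random shuffle and the fixed seed are hypothetical choices, since the text only states the 60%/40% proportions. The split is done at the level of image-text pairs, as the text describes.

```python
import random


def split_pairs(pairs, train_fraction=0.6, seed=0):
    """Shuffle one dataset's image-text pairs and cut them into train/test lists.

    Shuffling with a fixed seed is an assumption made here for reproducibility.
    """
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    # round() guards against float error such as 0.6 * n landing just below an integer
    n_train = int(round(len(shuffled) * train_fraction))
    return shuffled[:n_train], shuffled[n_train:]


def split_each(datasets, train_fraction=0.6, seed=0):
    """Apply the split separately to every dataset (hypothetical helper)."""
    return {
        name: split_pairs(pairs, train_fraction, seed)
        for name, pairs in datasets.items()
    }


if __name__ == "__main__":
    # Toy stand-in data; real pairs would come from UCM-Captions, Sydney-Captions, RSICD
    toy = [(f"img{i}.tif", f"caption {i}") for i in range(10)]
    train, test = split_each({"UCM-Captions": toy})["UCM-Captions"]
    print(len(train), len(test))
```

Splitting each dataset independently keeps the 60/40 ratio within every dataset rather than only across the pooled collection.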

[0027] Step 2: Use the fast region convolutional network to extract the image features of the remote sensing images in the training samples.

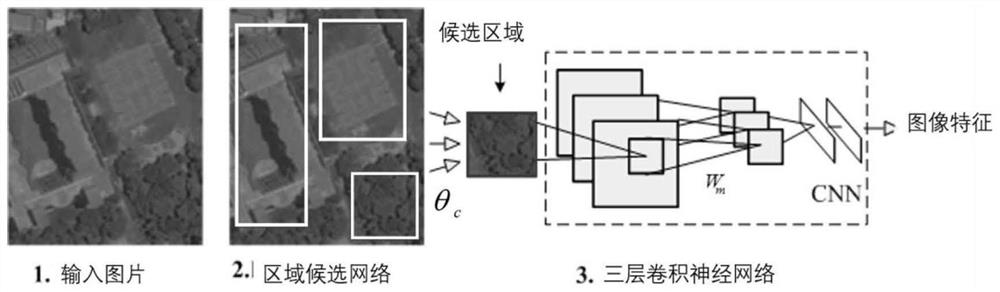

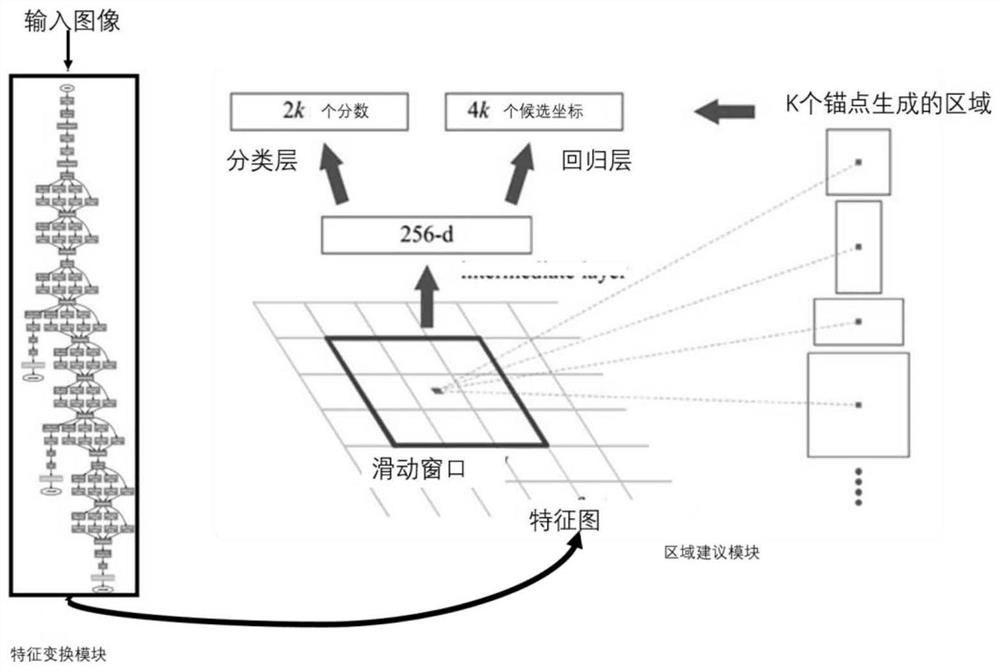

[0028] As shown in Figure 2, the fast region convolutional network contains a region candidate network and a three-layer convolutional ...
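The excerpt breaks off before describing how features are taken from each candidate region. In Fast R-CNN-style detectors this is commonly done with RoI (region-of-interest) max pooling, which maps every candidate region, whatever its size, onto a fixed-size grid so that later layers see a constant input shape. The sketch below shows that generic operation on a single-channel feature map; the function name `roi_max_pool`, the `(x0, y0, x1, y1)` box convention and the 2x2 output size are assumptions for illustration, not details taken from this patent.

```python
def roi_max_pool(feature_map, roi, out_h=2, out_w=2):
    """Max-pool one candidate region of a 2-D feature map to an out_h x out_w grid.

    feature_map: list of rows (H x W) of numbers, a single channel.
    roi: (x0, y0, x1, y1) in feature-map cells, x1/y1 exclusive (assumed convention).
    Simplification: regions smaller than the output grid are rejected, whereas
    practical implementations handle them with overlapping bins.
    """
    x0, y0, x1, y1 = roi
    h, w = y1 - y0, x1 - x0
    if h < out_h or w < out_w:
        raise ValueError("region is smaller than the output grid")
    pooled = []
    for i in range(out_h):
        # Integer bin edges; since h >= out_h, every bin spans at least one row
        ys, ye = y0 + (i * h) // out_h, y0 + ((i + 1) * h) // out_h
        row = []
        for j in range(out_w):
            xs, xe = x0 + (j * w) // out_w, x0 + ((j + 1) * w) // out_w
            # Keep the strongest activation inside this bin
            row.append(max(feature_map[y][x]
                           for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled


if __name__ == "__main__":
    fmap = [[r * 4 + c for c in range(4)] for r in range(4)]
    # Two regions of different sizes yield outputs of the same 2x2 shape
    print(roi_max_pool(fmap, (0, 0, 4, 4)))
    print(roi_max_pool(fmap, (1, 1, 3, 3)))
```

Because every region is reduced to the same grid, features from candidate regions of different sizes can be fed to the same subsequent layers.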
